Iman Yi Liao

IFViT: Interpretable Fixed-Length Representation for Fingerprint Matching via Vision Transformer

Apr 12, 2024

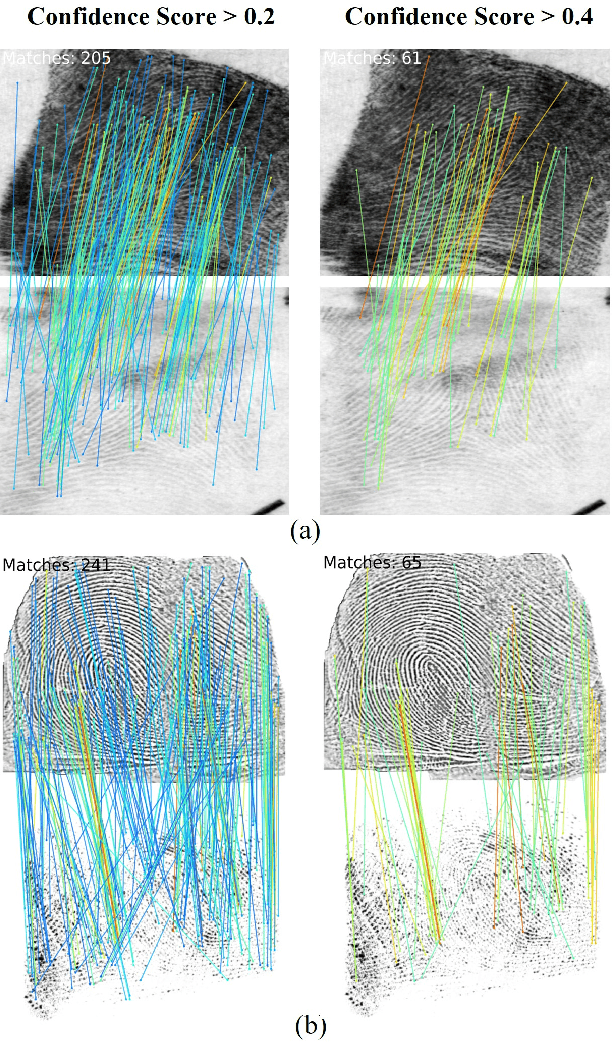

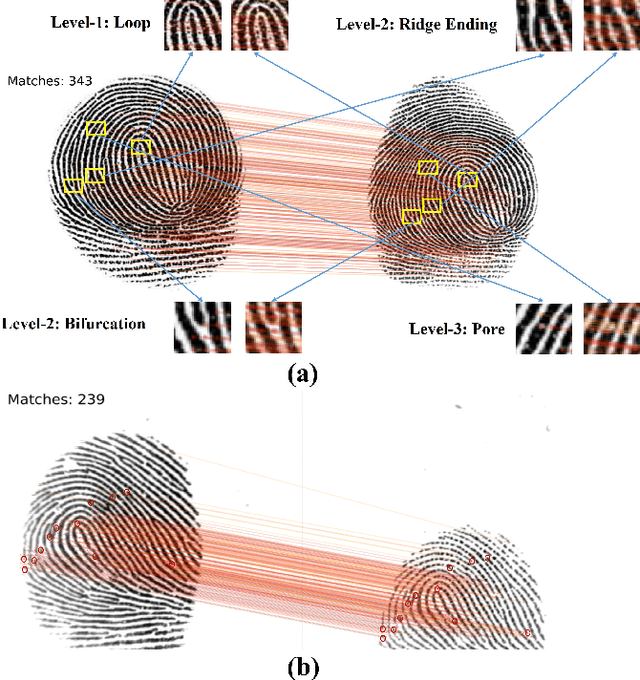

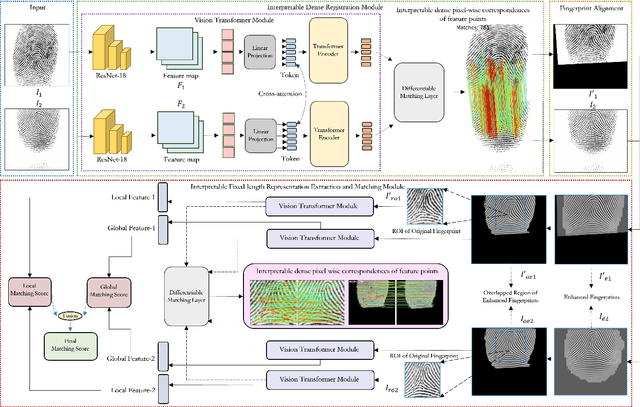

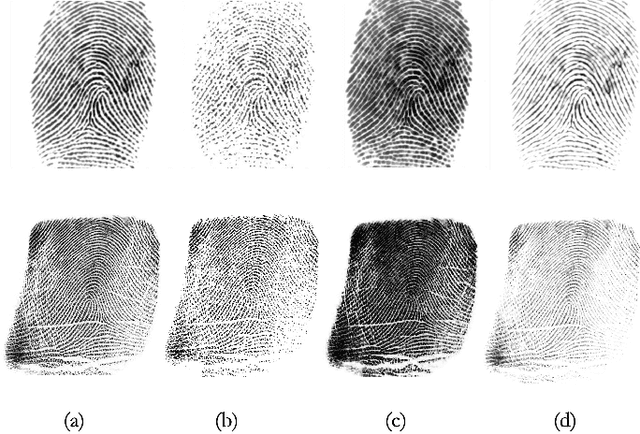

Abstract:Determining dense feature points on fingerprints used in constructing deep fixed-length representations for accurate matching, particularly at the pixel level, is of significant interest. To explore the interpretability of fingerprint matching, we propose a multi-stage interpretable fingerprint matching network, namely Interpretable Fixed-length Representation for Fingerprint Matching via Vision Transformer (IFViT), which consists of two primary modules. The first module, an interpretable dense registration module, establishes a Vision Transformer (ViT)-based Siamese Network to capture long-range dependencies and the global context in fingerprint pairs. It provides interpretable dense pixel-wise correspondences of feature points for fingerprint alignment and enhances the interpretability in the subsequent matching stage. The second module takes into account both local and global representations of the aligned fingerprint pair to achieve an interpretable fixed-length representation extraction and matching. It employs the ViTs trained in the first module with the additional fully connected layer and retrains them to simultaneously produce the discriminative fixed-length representation and interpretable dense pixel-wise correspondences of feature points. Extensive experimental results on diverse publicly available fingerprint databases demonstrate that the proposed framework not only exhibits superior performance on dense registration and matching but also significantly promotes the interpretability in deep fixed-length representations-based fingerprint matching.

Balancing Accuracy and Latency in Multipath Neural Networks

Apr 25, 2021

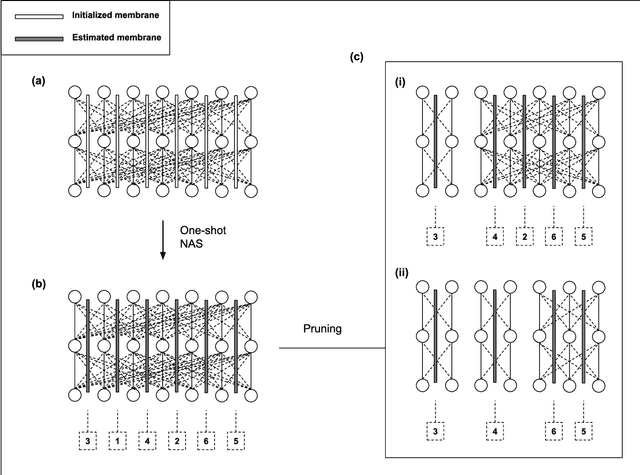

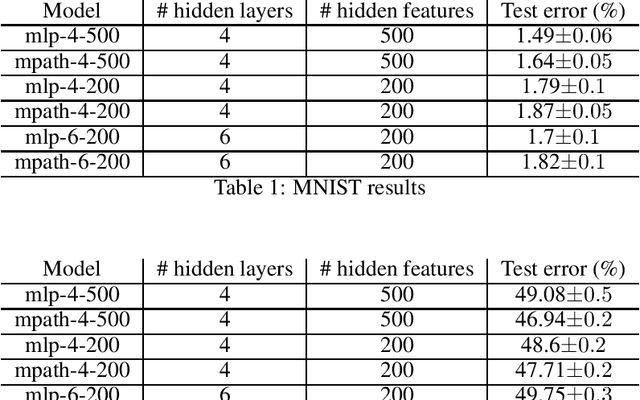

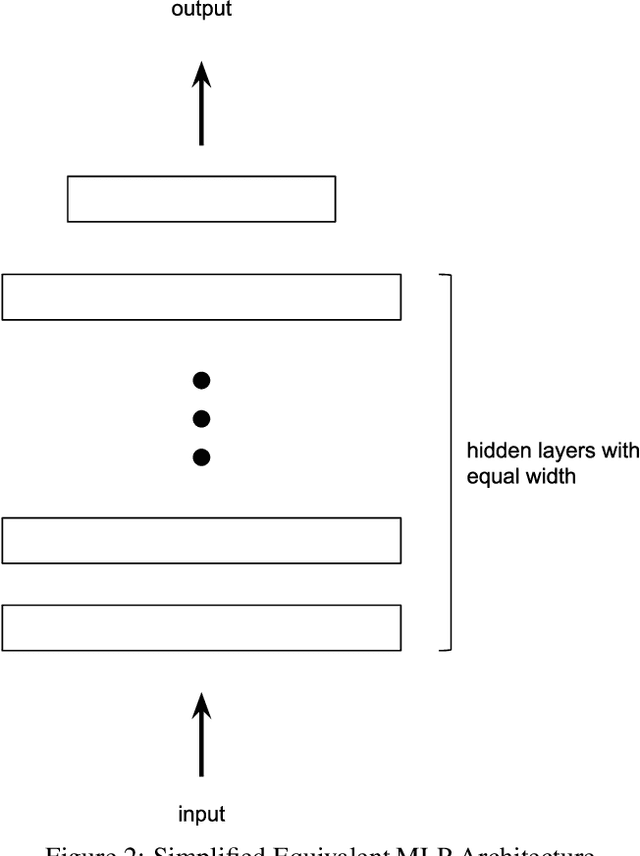

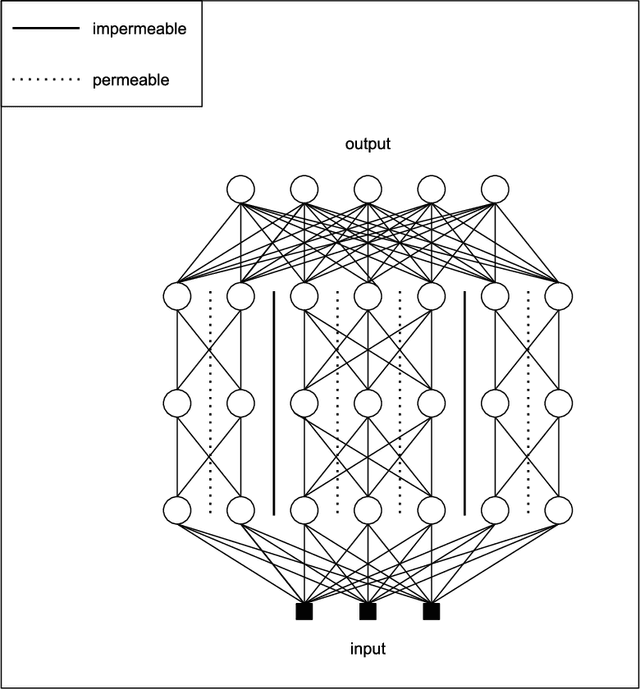

Abstract:The growing capacity of neural networks has strongly contributed to their success at complex machine learning tasks and the computational demand of such large models has, in turn, stimulated a significant improvement in the hardware necessary to accelerate their computations. However, models with high latency aren't suitable for limited-resource environments such as hand-held and IoT devices. Hence, many deep learning techniques aim to address this problem by developing models with reasonable accuracy without violating the limited-resource constraint. In this work, we use a one-shot neural architecture search model to implicitly evaluate the performance of an intractable number of multipath neural networks. Combining this architecture search with a pruning technique and architecture sample evaluation, we can model the relation between the accuracy and the latency of a spectrum of models with graded complexity. We show that our method can accurately model the relative performance between models with different latencies and predict the performance of unseen models with good precision across different datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge