Chaoyang Jiang

GOReloc: Graph-based Object-Level Relocalization for Visual SLAM

Aug 15, 2024

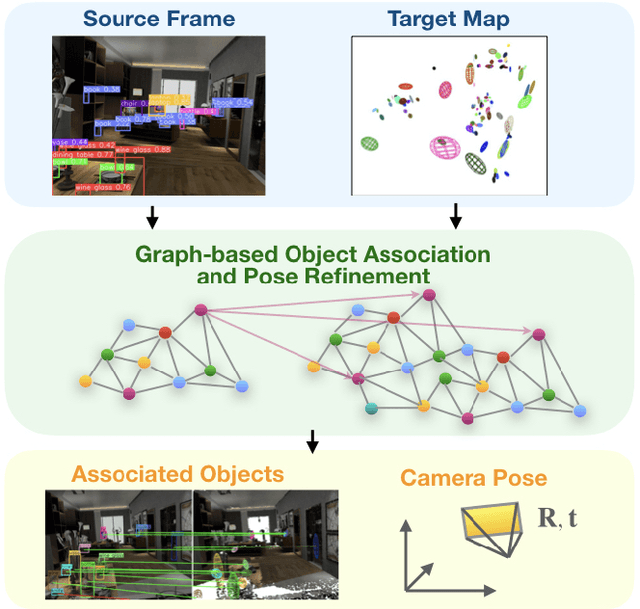

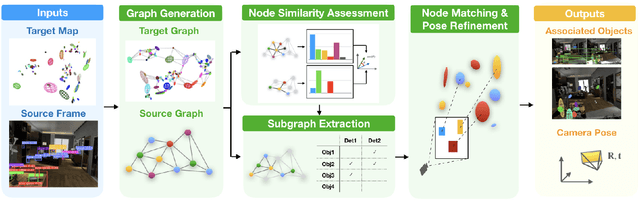

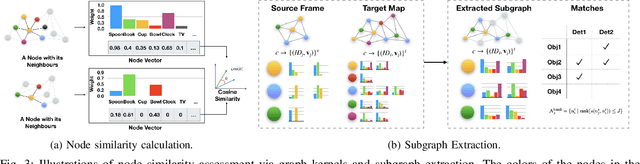

Abstract: This article introduces a novel method for object-level relocalization of robotic systems. It determines the pose of a camera sensor by robustly associating the object detections in the current frame with 3D objects in a lightweight object-level map. Object graphs, considering semantic uncertainties, are constructed for both the incoming camera frame and the pre-built map. Objects are represented as graph nodes, and each node employs unique semantic descriptors based on our devised graph kernels. We extract a subgraph from the target map graph by identifying potential object associations for each object detection, then refine these associations and pose estimations using a RANSAC-inspired strategy. Experiments on various datasets demonstrate that our method achieves more accurate data association and significantly increases relocalization success rates compared to baseline methods. The implementation of our method is released at https://github.com/yutongwangBIT/GOReloc.
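The refinement step can be pictured with a toy hypothesize-and-verify loop. The sketch below is only an illustration in the spirit of RANSAC, not the GOReloc implementation: it assumes a 2D translation-only "pose", enumerates every candidate pair instead of sampling randomly (the example is tiny), and uses hypothetical names (`refine_associations`, `tol`) throughout.

```python
from math import hypot


def refine_associations(detections, map_objects, candidates, tol=0.5):
    """Pick the translation hypothesis supported by the most candidate pairs.

    Toy model (an assumption): map position = detection position + t.
    `candidates` is a list of (detection index, map object index) pairs.
    """
    best_t, best_inliers = None, []
    for d_idx, m_idx in candidates:
        # Minimal sample: a single pair fixes the translation hypothesis.
        dx, dy = detections[d_idx]
        mx, my = map_objects[m_idx]
        t = (mx - dx, my - dy)

        # Verify: keep at most one consistent map object per detection.
        inliers, used = [], set()
        for cd, cm in candidates:
            if cd in used:
                continue
            px, py = detections[cd]
            qx, qy = map_objects[cm]
            if hypot(px + t[0] - qx, py + t[1] - qy) <= tol:
                inliers.append((cd, cm))
                used.add(cd)

        if len(inliers) > len(best_inliers):
            best_t, best_inliers = t, inliers
    return best_t, best_inliers


detections = [(0, 0), (1, 0), (0, 2)]
map_objects = [(5, 5), (6, 5), (5, 7), (9, 9)]
# Each detection has one correct and one spurious candidate.
candidates = [(0, 0), (0, 3), (1, 1), (1, 3), (2, 2), (2, 0)]
pose, matches = refine_associations(detections, map_objects, candidates)
```

A real system would estimate a full 6-DoF camera pose from several associations and sample hypotheses randomly; the point here is only that ambiguous candidates are resolved by the hypothesis with the largest consistent set.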

* 8 pages, accepted by IEEE RAL

VOOM: Robust Visual Object Odometry and Mapping using Hierarchical Landmarks

Feb 26, 2024

Abstract: In recent years, object-oriented simultaneous localization and mapping (SLAM) has attracted increasing attention due to its ability to provide high-level semantic information while maintaining computational efficiency. Some researchers have attempted to enhance localization accuracy by integrating the modeled object residuals into bundle adjustment. However, few have demonstrated better results than feature-based visual SLAM systems, as the generic coarse object models, such as cuboids or ellipsoids, are less accurate than feature points. In this paper, we propose a Visual Object Odometry and Mapping framework VOOM using high-level objects and low-level points as the hierarchical landmarks in a coarse-to-fine manner instead of directly using object residuals in bundle adjustment. Firstly, we introduce an improved observation model and a novel data association method for dual quadrics, employed to represent physical objects. It facilitates the creation of a 3D map that closely reflects reality. Next, we use object information to enhance the data association of feature points and consequently update the map. In the visual object odometry backend, the updated map is employed to further optimize the camera pose and the objects. Meanwhile, local bundle adjustment is performed utilizing the objects and points-based covisibility graphs in our visual object mapping process. Experiments show that VOOM outperforms both object-oriented SLAM and feature points SLAM systems such as ORB-SLAM2 in terms of localization. The implementation of our method is available at https://github.com/yutongwangBIT/VOOM.git.
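For readers unfamiliar with dual quadrics, the textbook projective relation that such landmark models build on is given below. This is the standard form from multiple-view geometry, not the improved observation model the abstract refers to; the symbols ($Q^{*}$, $C^{*}$, $P$, $Z$, $a, b, c$) are notation chosen here.

```latex
% A dual quadric Q^* (4x4, symmetric) projects to a dual conic C^* (3x3)
% under a camera with projection matrix P; equality holds up to scale.
\[
  C^{*} \simeq P \, Q^{*} P^{\top}, \qquad P = K \, [\, R \mid t \,].
\]
% An ellipsoid with semi-axes a, b, c and pose Z (4x4 rigid transform):
\[
  Q^{*} = Z \,\operatorname{diag}\!\left(a^{2},\, b^{2},\, c^{2},\, -1\right) Z^{\top}.
\]
```

The projected dual conic $C^{*}$ describes an ellipse in the image, which is what gets compared against a detected object's bounding box or fitted ellipse.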

Shuffled Differentially Private Federated Learning for Time Series Data Analytics

Jul 30, 2023

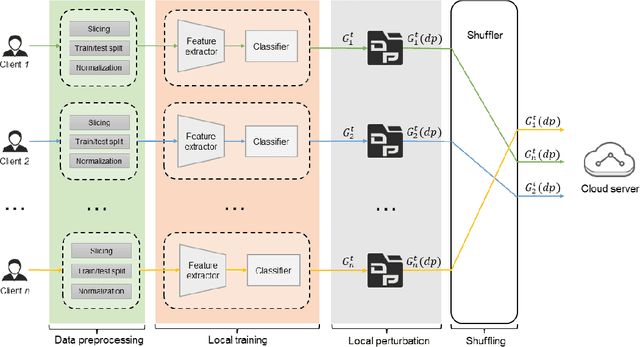

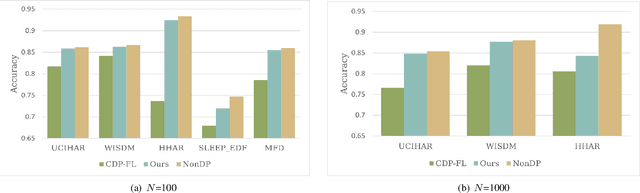

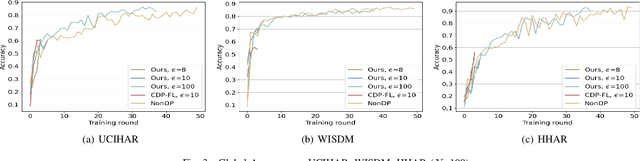

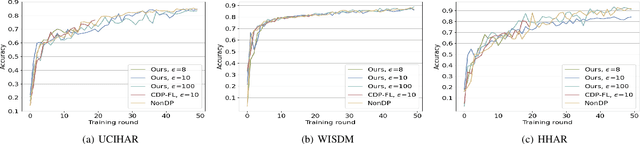

Abstract: Trustworthy federated learning aims to achieve optimal performance while ensuring clients' privacy. Existing privacy-preserving federated learning approaches are mostly tailored for image data and have seen little application to time series data, which are central to tasks such as machine health monitoring and human activity recognition. Furthermore, protective noising on a time series data analytics model can significantly interfere with temporal-dependent learning, leading to a greater decline in accuracy. To address these issues, we develop a privacy-preserving federated learning algorithm for time series data. Specifically, we employ local differential privacy to extend the privacy protection trust boundary to the clients. We also incorporate shuffle techniques to achieve privacy amplification, mitigating the accuracy decline caused by local differential privacy. Extensive experiments were conducted on five time series datasets. The evaluation results reveal that our algorithm experienced minimal accuracy loss compared to non-private federated learning in both small and large client scenarios. Under the same level of privacy protection, our algorithm demonstrated improved accuracy compared to the centralized differentially private federated learning in both scenarios.
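The combination of client-side noising and shuffling can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's algorithm: the abstract does not name the noise mechanism, so L2 clipping with Gaussian noise is assumed here, `sigma` is not calibrated to any particular privacy budget, and all function names are hypothetical.

```python
import math
import random


def clip(update, max_norm):
    """Scale the update so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize(update, max_norm, sigma, rng):
    """Client side: clip, then add noise before anything leaves the device."""
    clipped = clip(update, max_norm)
    return [v + rng.gauss(0.0, sigma * max_norm) for v in clipped]


def shuffle_and_average(reports, rng):
    """Shuffler plus server: drop the report order, then average."""
    shuffled = list(reports)
    rng.shuffle(shuffled)  # the server no longer knows which client sent what
    n = len(shuffled)
    return [sum(column) / n for column in zip(*shuffled)]


rng = random.Random(0)
client_updates = [[0.3, -0.1], [0.5, 0.2], [-0.2, 0.4]]
reports = [privatize(u, max_norm=1.0, sigma=0.1, rng=rng) for u in client_updates]
global_update = shuffle_and_average(reports, rng)
```

Because the noise is added on each client, the server never sees a raw update; the shuffle step is what allows a formal amplification argument, which this sketch does not attempt to quantify.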

UMS-VINS: United Monocular-Stereo Features for Visual-Inertial Tightly Coupled Odometry

Mar 15, 2023

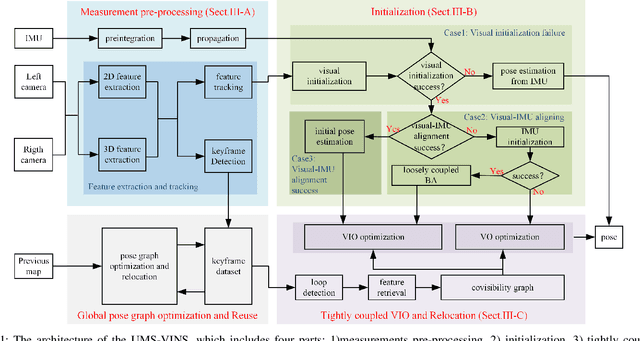

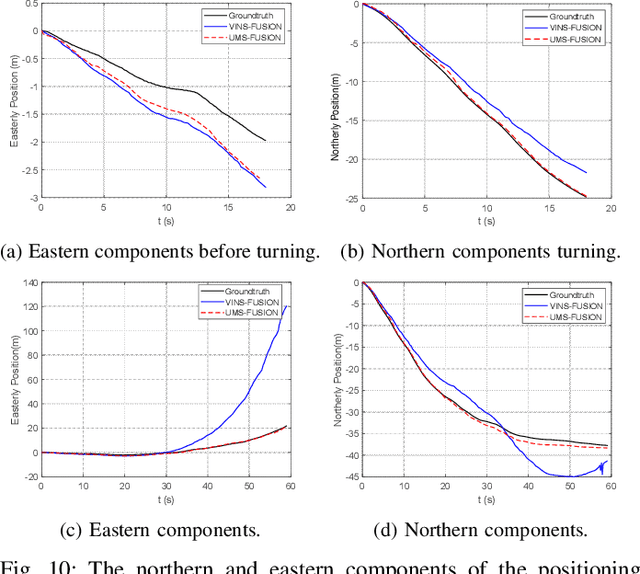

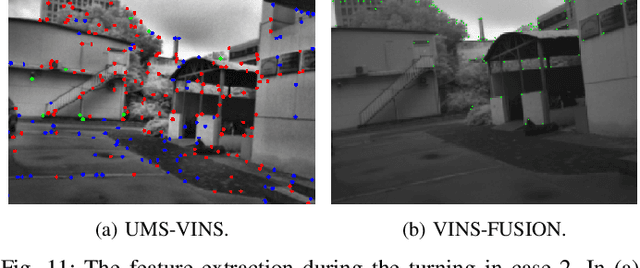

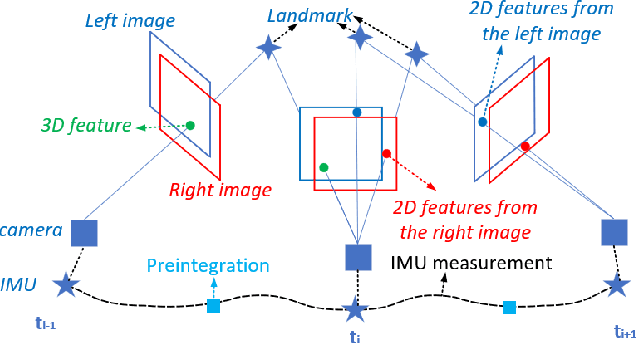

Abstract: This paper introduces united monocular-stereo features into a tightly coupled visual-inertial odometry (UMS-VINS) for robust pose estimation. UMS-VINS requires two cameras and a low-cost inertial measurement unit (IMU). UMS-VINS is an evolution of VINS-FUSION and modifies it in three respects. 1) UMS-VINS extracts and tracks features from the sub-pixel plane to achieve better positions of the features. 2) UMS-VINS introduces additional 2-dimensional features from the left and/or right cameras. 3) If the visual initialization fails, the IMU propagation is directly used for pose estimation, and if the visual-IMU alignment fails, UMS-VINS estimates the pose via the visual odometry. Results on both public datasets and new real-world experiments indicate that the proposed UMS-VINS outperforms VINS-FUSION in localization accuracy, localization robustness, and environmental adaptability.
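The fallback behaviour in point 3 amounts to a small decision rule, sketched below. The enum, the function name, and the third branch (using the full tightly coupled estimate when both checks pass) are assumptions made for illustration; the abstract only states the two failure cases.

```python
from enum import Enum


class PoseSource(Enum):
    IMU_PROPAGATION = "imu_propagation"
    VISUAL_ODOMETRY = "visual_odometry"
    TIGHTLY_COUPLED = "tightly_coupled"


def select_pose_source(visual_init_ok: bool, alignment_ok: bool) -> PoseSource:
    """Choose which estimate to trust for the current frame."""
    if not visual_init_ok:
        # Visual initialization failed: fall back to IMU propagation.
        return PoseSource.IMU_PROPAGATION
    if not alignment_ok:
        # Visual-IMU alignment failed: fall back to visual odometry.
        return PoseSource.VISUAL_ODOMETRY
    # Assumed default: both stages succeeded, use the fused estimate.
    return PoseSource.TIGHTLY_COUPLED
```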

Range-Aided LiDAR-Inertial Multi-Vehicle Mapping in Degenerate Environment

Mar 15, 2023

Abstract: This paper presents a range-aided LiDAR-inertial multi-vehicle mapping system (RaLI-Multi). Firstly, we design a multi-metric weights LiDAR-inertial odometry by fusing observations from an inertial measurement unit (IMU) and a light detection and ranging sensor (LiDAR). The degenerate level and direction are evaluated by analyzing the distribution of normal vectors of feature point clouds and are used to activate the degeneration correction module, in which range measurements correct the pose estimation along the degeneration direction. We then design a multi-vehicle mapping system in which a centralized vehicle receives local maps of each vehicle and range measurements between vehicles to optimize a global pose graph. The global map is broadcast to other vehicles for localization and mapping updates, and the centralized vehicle is dynamically fungible. Finally, we provide three experiments to verify the effectiveness of the proposed RaLI-Multi. The results show its superiority in degenerate environments.

Stop Line Aided Cooperative Positioning of Connected Vehicles

Dec 26, 2021

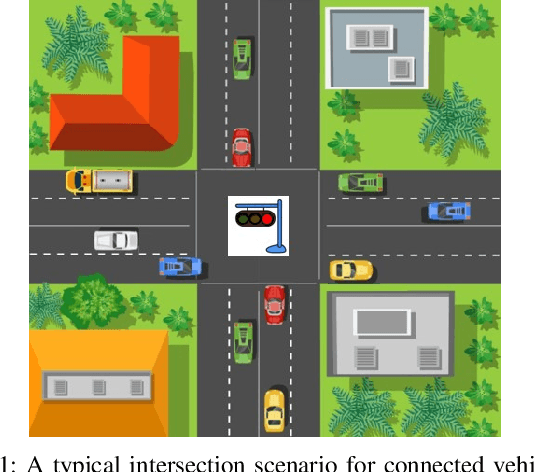

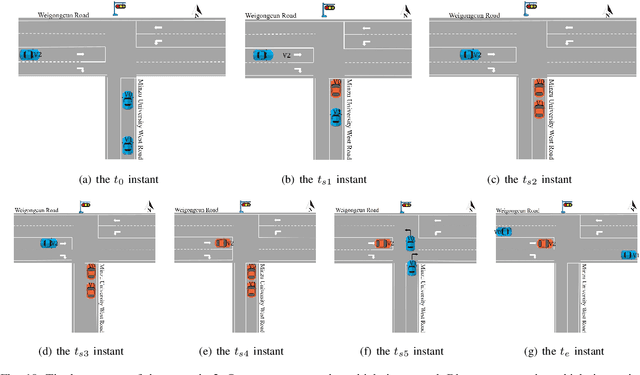

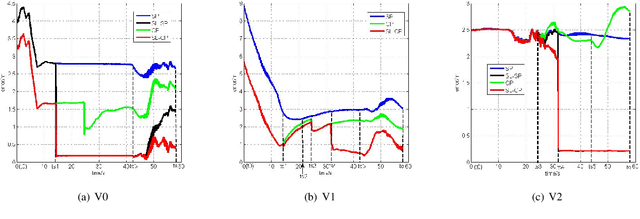

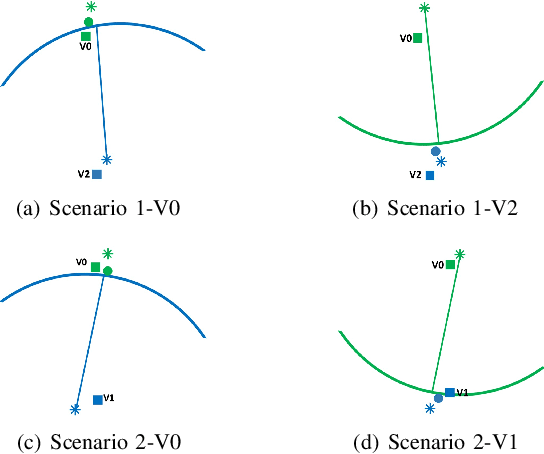

Abstract: This paper develops a stop line aided cooperative positioning framework for connected vehicles, which uses the location of the stop line to enhance positioning for a vehicular ad-hoc network (VANET) in intersection scenarios via Vehicle-to-Vehicle (V2V) communication. Firstly, a self-positioning correction scheme for the first stopped vehicle is presented, which applies the stop line information as a benchmark to correct the GNSS/INS positioning results. Then, the local observations of each vehicle are fused with the position estimates of other vehicles and the inter-vehicle distance measurements by using an extended Kalman filter (EKF). In this way, the benefits of the first stopped vehicle are extended to the whole VANET. Such a cooperative inertial navigation (CIN) framework can greatly improve the positioning performance of the VANET. Finally, experiments in Beijing show the effectiveness of the proposed stop line aided cooperative positioning framework.
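To make the fusion step concrete, here is a minimal EKF measurement update for a single inter-vehicle range. It is a sketch under simplifying assumptions, not the paper's filter: the state is only a 2D position, the neighbour's position is treated as exactly known (a full cooperative filter would also account for its uncertainty), and the function name and parameters are hypothetical.

```python
import math


def ekf_range_update(x, P, neighbor, z, r_var):
    """Correct a 2D position estimate with one range measurement.

    x: (px, py) prior position, P: 2x2 prior covariance (nested lists),
    neighbor: (nx, ny) position of the other vehicle,
    z: measured distance, r_var: range measurement variance.
    """
    dx, dy = x[0] - neighbor[0], x[1] - neighbor[1]
    predicted = math.hypot(dx, dy)
    if predicted == 0.0:
        raise ValueError("range Jacobian is undefined at zero distance")

    # Jacobian of h(x) = ||x - neighbor|| with respect to x.
    H = (dx / predicted, dy / predicted)

    # Innovation variance S = H P H^T + R and Kalman gain K = P H^T / S.
    PHt = (P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1])
    S = H[0] * PHt[0] + H[1] * PHt[1] + r_var
    K = (PHt[0] / S, PHt[1] / S)

    innovation = z - predicted
    x_new = (x[0] + K[0] * innovation, x[1] + K[1] * innovation)

    # Covariance update P_new = (I - K H) P.
    P_new = [[P[i][j] - K[i] * (H[0] * P[0][j] + H[1] * P[1][j])
              for j in range(2)] for i in range(2)]
    return x_new, P_new


x_post, P_post = ekf_range_update(
    x=(10.0, 0.0), P=[[4.0, 0.0], [0.0, 4.0]],
    neighbor=(0.0, 0.0), z=9.0, r_var=1.0)
```

In this example the range only carries information along the line between the two vehicles, so the uncertainty shrinks in that direction and is unchanged perpendicular to it. A well-localized neighbour, such as a vehicle corrected at the stop line, therefore tightens the estimates of the vehicles ranging to it.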

Learning based Predictive Error Estimation and Compensator Design for Autonomous Vehicle Path Tracking

Jul 18, 2020

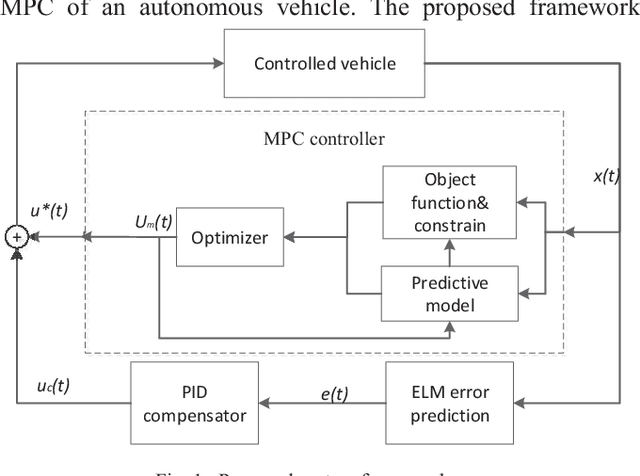

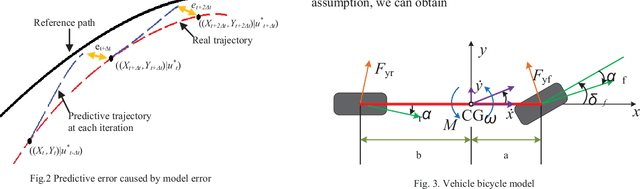

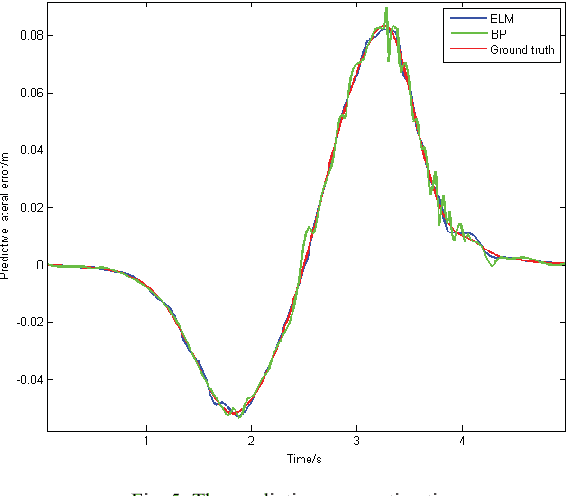

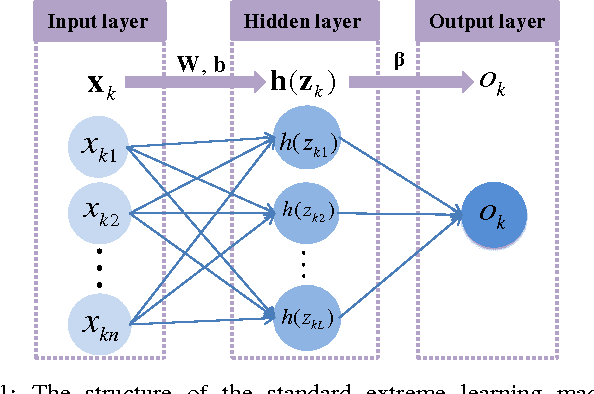

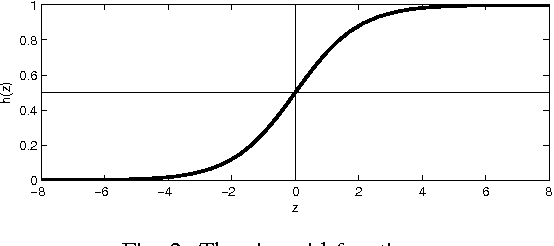

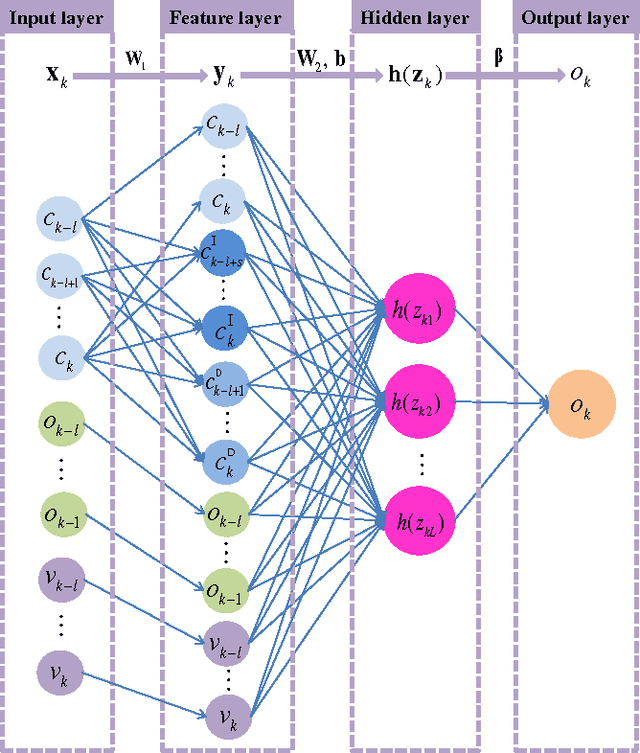

Abstract: Model predictive control (MPC) is widely used for path tracking of autonomous vehicles due to its ability to handle various types of constraints. However, a considerable predictive error exists because of errors in the mathematical model or in its linearization. In this paper, we propose a framework combining the MPC with a learning-based error estimator and a feedforward compensator to improve the path tracking accuracy. An extreme learning machine is implemented to estimate the model-based predictive error from vehicle state feedback information. Offline training data is collected from a vehicle controlled by a model-defective regular MPC for path tracking in several working conditions. The data include the vehicle state and the spatial error between the current actual position and the corresponding predictive position. According to the estimated predictive error, we then design a PID-based feedforward compensator. Simulation results via Carsim show the estimation accuracy of the predictive error and the effectiveness of the proposed framework for path tracking of an autonomous vehicle.

Indoor occupancy estimation from carbon dioxide concentration

Jul 20, 2016

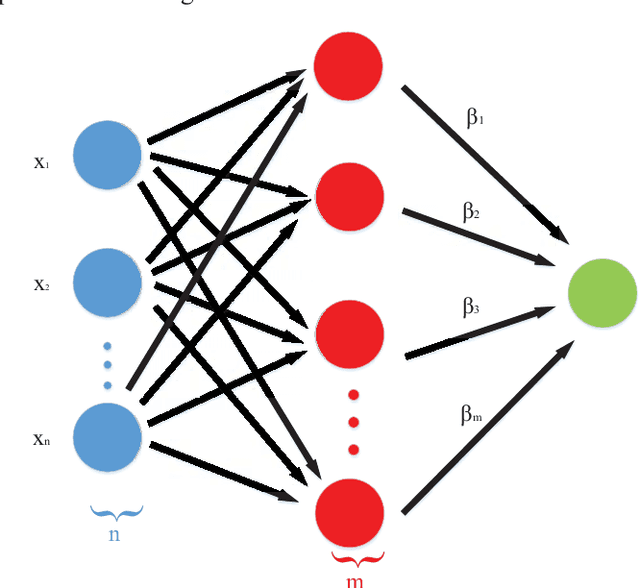

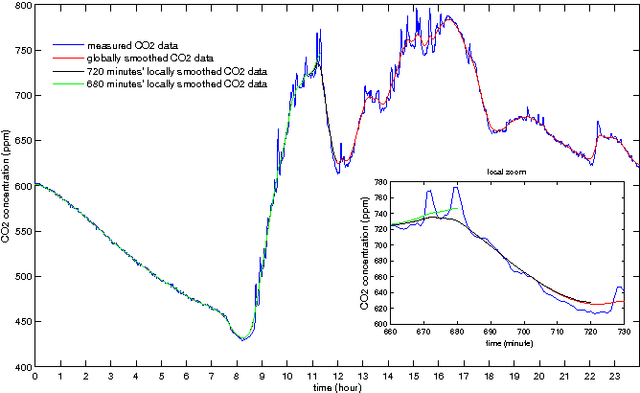

Abstract: This paper presents an indoor occupancy estimator with which we can estimate the number of indoor occupants in real time based on carbon dioxide (CO2) measurements. The estimator is a dynamic model of the occupancy level. To identify the dynamic model, we propose the Feature Scaled Extreme Learning Machine (FS-ELM) algorithm, which is a variation of the standard Extreme Learning Machine (ELM) but is shown to perform better for the occupancy estimation problem. The measured CO2 concentration suffers from serious spikes. We find that pre-smoothing the CO2 data can greatly improve the estimation accuracy. In real applications, however, we cannot obtain globally smoothed CO2 data in real time. We provide a way to use locally smoothed CO2 data instead, which are available in real time. We introduce a new criterion, i.e., $x$-tolerance accuracy, to assess the occupancy estimator. The proposed occupancy estimator was tested in an office room with 24 cubicles and 11 open seats. The accuracy is up to 94 percent with a tolerance of four occupants.
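Two ideas in this abstract are easy to illustrate. The sketch below assumes that $x$-tolerance accuracy means the fraction of estimates within $x$ occupants of the true count, and that "local smoothing" can be approximated by a causal moving average; the abstract defines neither precisely, so both readings and all function names are assumptions.

```python
def x_tolerance_accuracy(estimated, actual, x):
    """Fraction of samples whose estimate is within x of the true count."""
    if len(estimated) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(1 for e, a in zip(estimated, actual) if abs(e - a) <= x)
    return hits / len(actual)


def causal_moving_average(samples, window):
    """Smooth using only the current and past samples (usable in real time)."""
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


co2_ppm = [400, 400, 1000, 400]          # one spike in the raw signal
smoothed_ppm = causal_moving_average(co2_ppm, window=2)
accuracy = x_tolerance_accuracy([10, 12, 20, 5], [10, 15, 15, 5], x=4)
```

A global smoother would also use future samples, which is why it cannot run online; the causal window trades some smoothness for real-time availability.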
