Yuting He

Dynamic Stream Network for Combinatorial Explosion Problem in Deformable Medical Image Registration

Dec 22, 2025

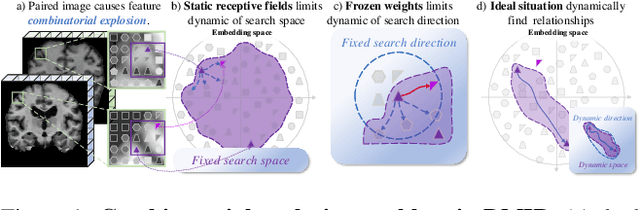

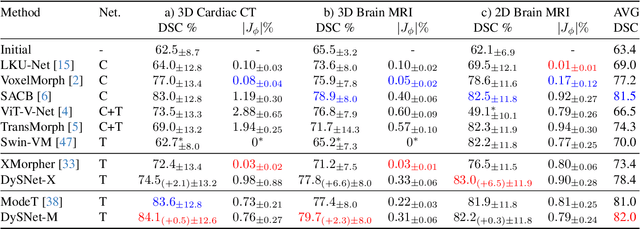

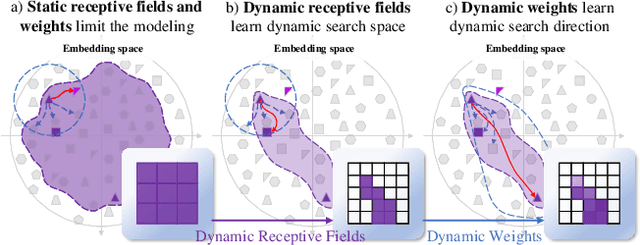

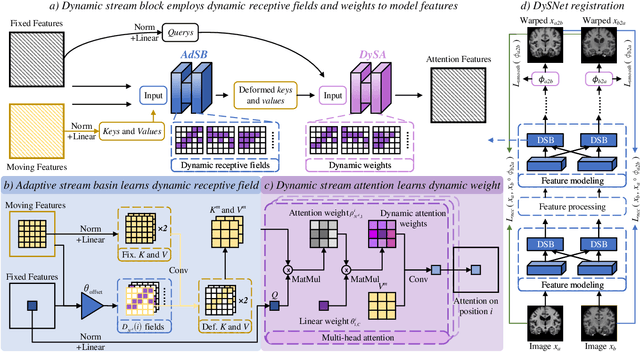

Abstract:Combinatorial explosion problem caused by dual inputs presents a critical challenge in Deformable Medical Image Registration (DMIR). Since DMIR processes two images simultaneously as input, the combination relationships between features has grown exponentially, ultimately the model considers more interfering features during the feature modeling process. Introducing dynamics in the receptive fields and weights of the network enable the model to eliminate the interfering features combination and model the potential feature combination relationships. In this paper, we propose the Dynamic Stream Network (DySNet), which enables the receptive fields and weights to be dynamically adjusted. This ultimately enables the model to ignore interfering feature combinations and model the potential feature relationships. With two key innovations: 1) Adaptive Stream Basin (AdSB) module dynamically adjusts the shape of the receptive field, thereby enabling the model to focus on the feature relationships with greater correlation. 2) Dynamic Stream Attention (DySA) mechanism generates dynamic weights to search for more valuable feature relationships. Extensive experiments have shown that DySNet consistently outperforms the most advanced DMIR methods, highlighting its outstanding generalization ability. Our code will be released on the website: https://github.com/ShaochenBi/DySNet.

DeepFeature: Iterative Context-aware Feature Generation for Wearable Biosignals

Dec 09, 2025Abstract:Biosignals collected from wearable devices are widely utilized in healthcare applications. Machine learning models used in these applications often rely on features extracted from biosignals due to their effectiveness, lower data dimensionality, and wide compatibility across various model architectures. However, existing feature extraction methods often lack task-specific contextual knowledge, struggle to identify optimal feature extraction settings in high-dimensional feature space, and are prone to code generation and automation errors. In this paper, we propose DeepFeature, the first LLM-empowered, context-aware feature generation framework for wearable biosignals. DeepFeature introduces a multi-source feature generation mechanism that integrates expert knowledge with task settings. It also employs an iterative feature refinement process that uses feature assessment-based feedback for feature re-selection. Additionally, DeepFeature utilizes a robust multi-layer filtering and verification approach for robust feature-to-code translation to ensure that the extraction functions run without crashing. Experimental evaluation results show that DeepFeature achieves an average AUROC improvement of 4.21-9.67% across eight diverse tasks compared to baseline methods. It outperforms state-of-the-art approaches on five tasks while maintaining comparable performance on the remaining tasks.

TimeSeriesScientist: A General-Purpose AI Agent for Time Series Analysis

Oct 02, 2025

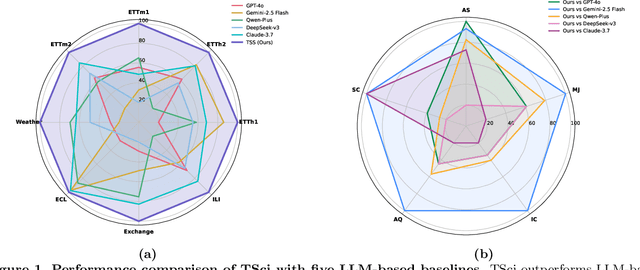

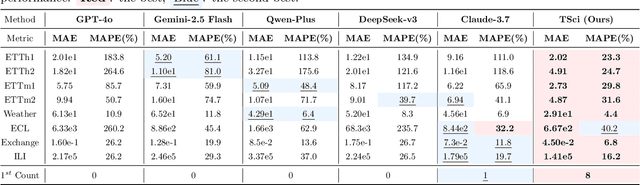

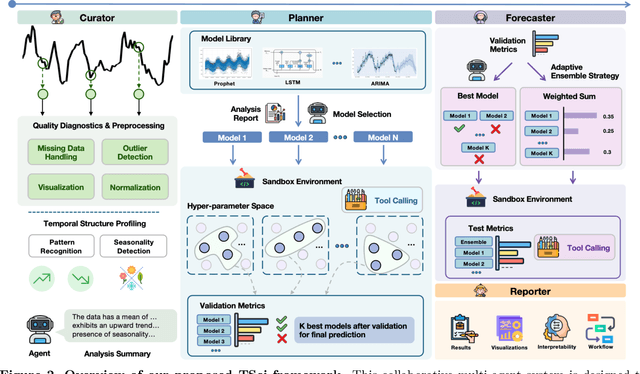

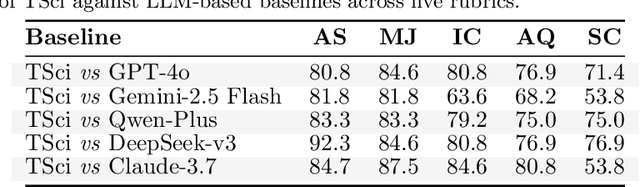

Abstract:Time series forecasting is central to decision-making in domains as diverse as energy, finance, climate, and public health. In practice, forecasters face thousands of short, noisy series that vary in frequency, quality, and horizon, where the dominant cost lies not in model fitting, but in the labor-intensive preprocessing, validation, and ensembling required to obtain reliable predictions. Prevailing statistical and deep learning models are tailored to specific datasets or domains and generalize poorly. A general, domain-agnostic framework that minimizes human intervention is urgently in demand. In this paper, we introduce TimeSeriesScientist (TSci), the first LLM-driven agentic framework for general time series forecasting. The framework comprises four specialized agents: Curator performs LLM-guided diagnostics augmented by external tools that reason over data statistics to choose targeted preprocessing; Planner narrows the hypothesis space of model choice by leveraging multi-modal diagnostics and self-planning over the input; Forecaster performs model fitting and validation and, based on the results, adaptively selects the best model configuration as well as ensemble strategy to make final predictions; and Reporter synthesizes the whole process into a comprehensive, transparent report. With transparent natural-language rationales and comprehensive reports, TSci transforms the forecasting workflow into a white-box system that is both interpretable and extensible across tasks. Empirical results on eight established benchmarks demonstrate that TSci consistently outperforms both statistical and LLM-based baselines, reducing forecast error by an average of 10.4% and 38.2%, respectively. Moreover, TSci produces a clear and rigorous report that makes the forecasting workflow more transparent and interpretable.

Cardiac-CLIP: A Vision-Language Foundation Model for 3D Cardiac CT Images

Jul 29, 2025

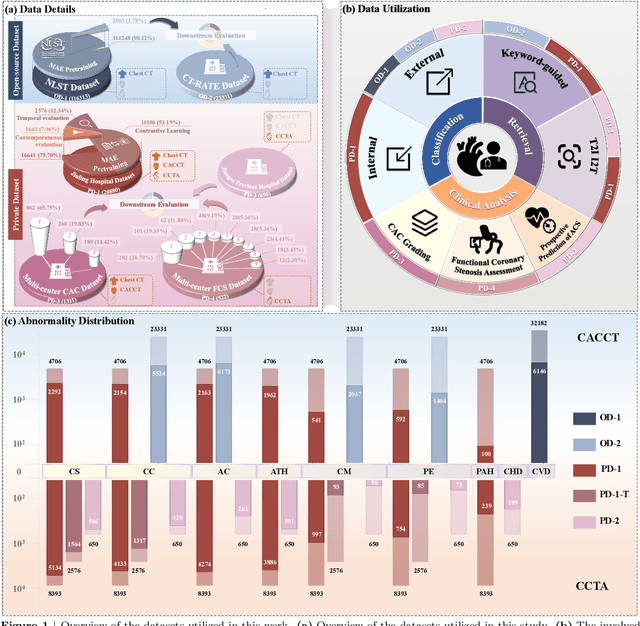

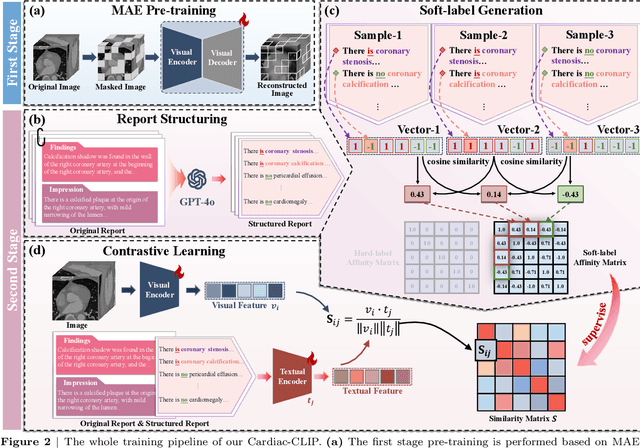

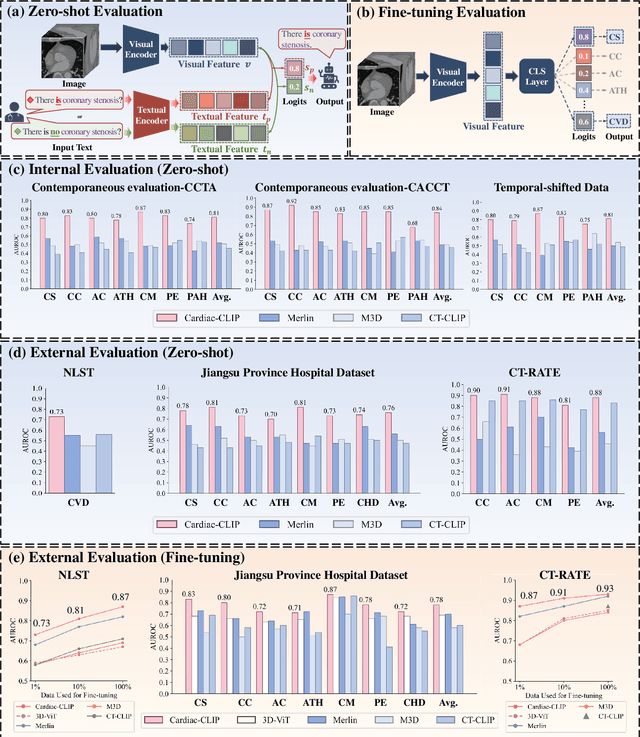

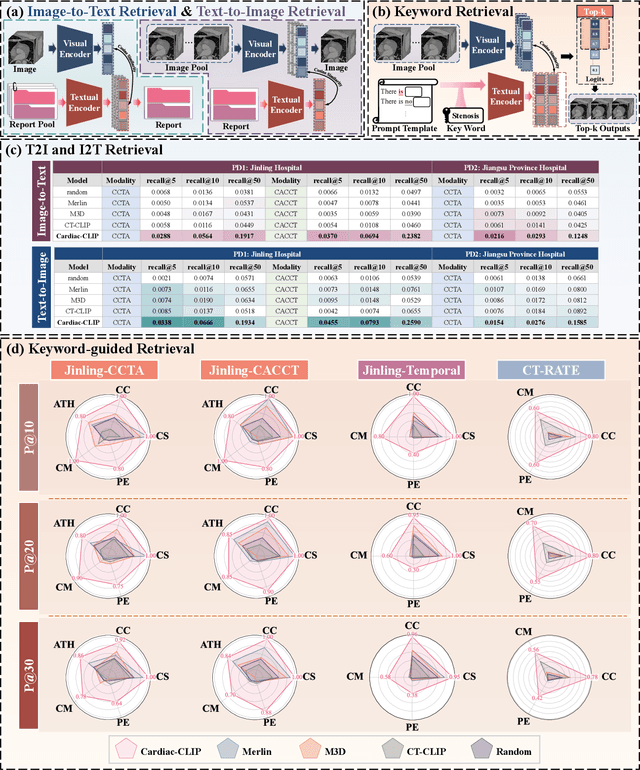

Abstract:Foundation models have demonstrated remarkable potential in medical domain. However, their application to complex cardiovascular diagnostics remains underexplored. In this paper, we present Cardiac-CLIP, a multi-modal foundation model designed for 3D cardiac CT images. Cardiac-CLIP is developed through a two-stage pre-training strategy. The first stage employs a 3D masked autoencoder (MAE) to perform self-supervised representation learning from large-scale unlabeled volumetric data, enabling the visual encoder to capture rich anatomical and contextual features. In the second stage, contrastive learning is introduced to align visual and textual representations, facilitating cross-modal understanding. To support the pre-training, we collect 16641 real clinical CT scans, supplemented by 114k publicly available data. Meanwhile, we standardize free-text radiology reports into unified templates and construct the pathology vectors according to diagnostic attributes, based on which the soft-label matrix is generated to supervise the contrastive learning process. On the other hand, to comprehensively evaluate the effectiveness of Cardiac-CLIP, we collect 6,722 real-clinical data from 12 independent institutions, along with the open-source data to construct the evaluation dataset. Specifically, Cardiac-CLIP is comprehensively evaluated across multiple tasks, including cardiovascular abnormality classification, information retrieval and clinical analysis. Experimental results demonstrate that Cardiac-CLIP achieves state-of-the-art performance across various downstream tasks in both internal and external data. Particularly, Cardiac-CLIP exhibits great effectiveness in supporting complex clinical tasks such as the prospective prediction of acute coronary syndrome, which is notoriously difficult in real-world scenarios.

Vector Contrastive Learning For Pixel-Wise Pretraining In Medical Vision

Jun 25, 2025Abstract:Contrastive learning (CL) has become a cornerstone of self-supervised pretraining (SSP) in foundation models, however, extending CL to pixel-wise representation, crucial for medical vision, remains an open problem. Standard CL formulates SSP as a binary optimization problem (binary CL) where the excessive pursuit of feature dispersion leads to an over-dispersion problem, breaking pixel-wise feature correlation thus disrupting the intra-class distribution. Our vector CL reformulates CL as a vector regression problem, enabling dispersion quantification in pixel-wise pretraining via modeling feature distances in regressing displacement vectors. To implement this novel paradigm, we propose the COntrast in VEctor Regression (COVER) framework. COVER establishes an extendable vector-based self-learning, enforces a consistent optimization flow from vector regression to distance modeling, and leverages a vector pyramid architecture for granularity adaptation, thus preserving pixel-wise feature correlations in SSP. Extensive experiments across 8 tasks, spanning 2 dimensions and 4 modalities, show that COVER significantly improves pixel-wise SSP, advancing generalizable medical visual foundation models.

Learning Critically: Selective Self Distillation in Federated Learning on Non-IID Data

Apr 20, 2025Abstract:Federated learning (FL) enables multiple clients to collaboratively train a global model while keeping local data decentralized. Data heterogeneity (non-IID) across clients has imposed significant challenges to FL, which makes local models re-optimize towards their own local optima and forget the global knowledge, resulting in performance degradation and convergence slowdown. Many existing works have attempted to address the non-IID issue by adding an extra global-model-based regularizing item to the local training but without an adaption scheme, which is not efficient enough to achieve high performance with deep learning models. In this paper, we propose a Selective Self-Distillation method for Federated learning (FedSSD), which imposes adaptive constraints on the local updates by self-distilling the global model's knowledge and selectively weighting it by evaluating the credibility at both the class and sample level. The convergence guarantee of FedSSD is theoretically analyzed and extensive experiments are conducted on three public benchmark datasets, which demonstrates that FedSSD achieves better generalization and robustness in fewer communication rounds, compared with other state-of-the-art FL methods.

Homeomorphism Prior for False Positive and Negative Problem in Medical Image Dense Contrastive Representation Learning

Feb 07, 2025Abstract:Dense contrastive representation learning (DCRL) has greatly improved the learning efficiency for image-dense prediction tasks, showing its great potential to reduce the large costs of medical image collection and dense annotation. However, the properties of medical images make unreliable correspondence discovery, bringing an open problem of large-scale false positive and negative (FP&N) pairs in DCRL. In this paper, we propose GEoMetric vIsual deNse sImilarity (GEMINI) learning which embeds the homeomorphism prior to DCRL and enables a reliable correspondence discovery for effective dense contrast. We propose a deformable homeomorphism learning (DHL) which models the homeomorphism of medical images and learns to estimate a deformable mapping to predict the pixels' correspondence under topological preservation. It effectively reduces the searching space of pairing and drives an implicit and soft learning of negative pairs via a gradient. We also propose a geometric semantic similarity (GSS) which extracts semantic information in features to measure the alignment degree for the correspondence learning. It will promote the learning efficiency and performance of deformation, constructing positive pairs reliably. We implement two practical variants on two typical representation learning tasks in our experiments. Our promising results on seven datasets which outperform the existing methods show our great superiority. We will release our code on a companion link: https://github.com/YutingHe-list/GEMINI.

Gaze-Assisted Human-Centric Domain Adaptation for Cardiac Ultrasound Image Segmentation

Feb 06, 2025

Abstract:Domain adaptation (DA) for cardiac ultrasound image segmentation is clinically significant and valuable. However, previous domain adaptation methods are prone to be affected by the incomplete pseudo-label and low-quality target to source images. Human-centric domain adaptation has great advantages of human cognitive guidance to help model adapt to target domain and reduce reliance on labels. Doctor gaze trajectories contains a large amount of cross-domain human guidance. To leverage gaze information and human cognition for guiding domain adaptation, we propose gaze-assisted human-centric domain adaptation (GAHCDA), which reliably guides the domain adaptation of cardiac ultrasound images. GAHCDA includes following modules: (1) Gaze Augment Alignment (GAA): GAA enables the model to obtain human cognition general features to recognize segmentation target in different domain of cardiac ultrasound images like humans. (2) Gaze Balance Loss (GBL): GBL fused gaze heatmap with outputs which makes the segmentation result structurally closer to the target domain. The experimental results illustrate that our proposed framework is able to segment cardiac ultrasound images more effectively in the target domain than GAN-based methods and other self-train based methods, showing great potential in clinical application.

Imaging foundation model for universal enhancement of non-ideal measurement CT

Oct 02, 2024Abstract:Non-ideal measurement computed tomography (NICT), which sacrifices optimal imaging standards for new advantages in CT imaging, is expanding the clinical application scope of CT images. However, with the reduction of imaging standards, the image quality has also been reduced, extremely limiting the clinical acceptability. Although numerous studies have demonstrated the feasibility of deep learning for the NICT enhancement in specific scenarios, their high data cost and limited generalizability have become large obstacles. The recent research on the foundation model has brought new opportunities for building a universal NICT enhancement model - bridging the image quality degradation with minimal data cost. However, owing to the challenges in the collection of large pre-training datasets and the compatibility of data variation, no success has been reported. In this paper, we propose a multi-scale integrated Transformer AMPlifier (TAMP), the first imaging foundation model for universal NICT enhancement. It has been pre-trained on a large-scale physical-driven simulation dataset with 3.6 million NICT-ICT image pairs, and is able to directly generalize to the NICT enhancement tasks with various non-ideal settings and body regions. Via the adaptation with few data, it can further achieve professional performance in real-world specific scenarios. Our extensive experiments have demonstrated that the proposed TAMP has significant potential for promoting the exploration and application of NICT and serving a wider range of medical scenarios.

Foundation Model for Advancing Healthcare: Challenges, Opportunities, and Future Directions

Apr 04, 2024

Abstract:Foundation model, which is pre-trained on broad data and is able to adapt to a wide range of tasks, is advancing healthcare. It promotes the development of healthcare artificial intelligence (AI) models, breaking the contradiction between limited AI models and diverse healthcare practices. Much more widespread healthcare scenarios will benefit from the development of a healthcare foundation model (HFM), improving their advanced intelligent healthcare services. Despite the impending widespread deployment of HFMs, there is currently a lack of clear understanding about how they work in the healthcare field, their current challenges, and where they are headed in the future. To answer these questions, a comprehensive and deep survey of the challenges, opportunities, and future directions of HFMs is presented in this survey. It first conducted a comprehensive overview of the HFM including the methods, data, and applications for a quick grasp of the current progress. Then, it made an in-depth exploration of the challenges present in data, algorithms, and computing infrastructures for constructing and widespread application of foundation models in healthcare. This survey also identifies emerging and promising directions in this field for future development. We believe that this survey will enhance the community's comprehension of the current progress of HFM and serve as a valuable source of guidance for future development in this field. The latest HFM papers and related resources are maintained on our website: https://github.com/YutingHe-list/Awesome-Foundation-Models-for-Advancing-Healthcare.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge