Yingda Yin

Large Material Gaussian Model for Relightable 3D Generation

Sep 26, 2025

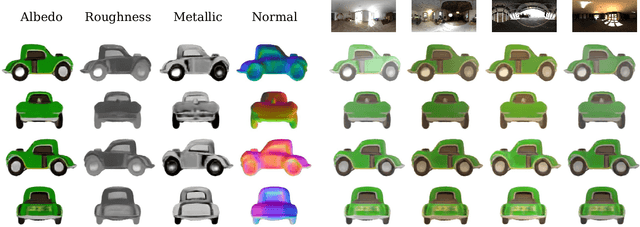

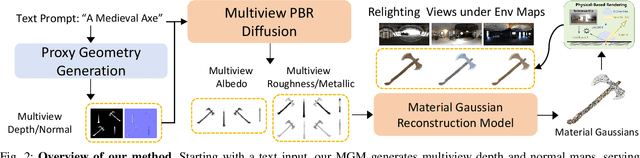

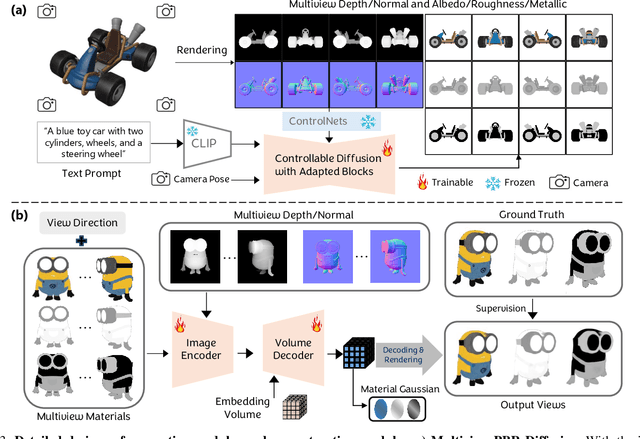

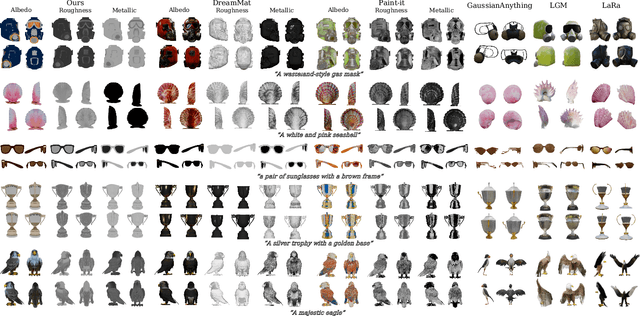

Abstract: The increasing demand for 3D assets across various industries necessitates efficient and automated methods for 3D content creation. Leveraging 3D Gaussian Splatting, recent large reconstruction models (LRMs) have demonstrated the ability to efficiently achieve high-quality 3D rendering by integrating multiview diffusion for generation and scalable transformers for reconstruction. However, existing models fail to produce the material properties of assets, which are crucial for realistic rendering in diverse lighting environments. In this paper, we introduce the Large Material Gaussian Model (MGM), a novel framework designed to generate high-quality 3D content with Physically Based Rendering (PBR) materials, i.e., albedo, roughness, and metallic properties, rather than merely producing RGB textures with uncontrolled light baking. Specifically, we first fine-tune a new multiview material diffusion model conditioned on input depth and normal maps. Utilizing the generated multiview PBR images, we explore a Gaussian material representation that not only aligns with 2D Gaussian Splatting but also models each channel of the PBR materials. The reconstructed point clouds can then be rendered to acquire PBR attributes, enabling dynamic relighting by applying various ambient light maps. Extensive experiments demonstrate that the materials produced by our method not only exhibit greater visual appeal compared to baseline methods but also enhance material modeling, thereby enabling practical downstream rendering applications.

SLAM3R: Real-Time Dense Scene Reconstruction from Monocular RGB Videos

Dec 12, 2024

Abstract: In this paper, we introduce SLAM3R, a novel and effective monocular RGB SLAM system for real-time and high-quality dense 3D reconstruction. SLAM3R provides an end-to-end solution by seamlessly integrating local 3D reconstruction and global coordinate registration through feed-forward neural networks. Given an input video, the system first converts it into overlapping clips using a sliding window mechanism. Unlike traditional pose optimization-based methods, SLAM3R directly regresses 3D pointmaps from RGB images in each window and progressively aligns and deforms these local pointmaps to create a globally consistent scene reconstruction, all without explicitly solving any camera parameters. Experiments across datasets consistently show that SLAM3R achieves state-of-the-art reconstruction accuracy and completeness while maintaining real-time performance at 20+ FPS. Code and weights at: https://github.com/PKU-VCL-3DV/SLAM3R.
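The sliding-window step mentioned in the abstract can be illustrated with a minimal sketch. It is not taken from the SLAM3R code base: the function name `sliding_window_clips` and the `window` and `stride` parameters are hypothetical, and the abstract does not state the actual window length or overlap.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_window_clips(frames: Sequence[T], window: int, stride: int) -> List[List[T]]:
    """Split a frame sequence into overlapping fixed-length clips.

    `window` and `stride` are illustrative parameters (assumptions);
    consecutive clips overlap only when stride < window.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if stride >= window:
        raise ValueError("stride must be smaller than window for clips to overlap")
    if len(frames) == 0:
        return []
    if len(frames) < window:
        # A video shorter than one window yields a single, shorter clip.
        return [list(frames)]
    clips = []
    # Trailing frames that do not fill a complete window are dropped.
    for start in range(0, len(frames) - window + 1, stride):
        clips.append(list(frames[start:start + window]))
    return clips


# Ten frame indices, clips of four frames, advancing two frames at a time.
clips = sliding_window_clips(list(range(10)), window=4, stride=2)
```

Each clip shares `window - stride` frames with its neighbor, which is what gives a later alignment stage common content to register against.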

SAI3D: Segment Any Instance in 3D Scenes

Dec 17, 2023

Abstract: Advancements in 3D instance segmentation have traditionally been tethered to the availability of annotated datasets, limiting their application to a narrow spectrum of object categories. Recent efforts have sought to harness vision-language models like CLIP for open-set semantic reasoning, yet these methods struggle to distinguish between objects of the same category and rely on specific prompts that are not universally applicable. In this paper, we introduce SAI3D, a novel zero-shot 3D instance segmentation approach that synergistically leverages geometric priors and semantic cues derived from the Segment Anything Model (SAM). Our method partitions a 3D scene into geometric primitives, which are then progressively merged into 3D instance segmentations that are consistent with the multi-view SAM masks. Moreover, we design a hierarchical region-growing algorithm with a dynamic thresholding mechanism, which largely improves the robustness of fine-grained 3D scene parsing. Empirical evaluations on ScanNet and the more challenging ScanNet++ datasets demonstrate the superiority of our approach. Notably, SAI3D outperforms existing open-vocabulary baselines and even surpasses fully-supervised methods in class-agnostic segmentation on ScanNet++.

Towards Robust Probabilistic Modeling on SO(3) via Rotation Laplace Distribution

May 17, 2023

Abstract: Estimating the 3DoF rotation from a single RGB image is an important yet challenging problem. As a popular approach, probabilistic rotation modeling additionally carries prediction uncertainty information, compared to single-prediction rotation regression. For modeling probabilistic distributions over SO(3), it is natural to use the Gaussian-like Bingham and matrix Fisher distributions; however, they are shown to be sensitive to outlier predictions, e.g., $180^\circ$ errors, and thus are unlikely to converge to optimal performance. In this paper, we draw inspiration from the multivariate Laplace distribution and propose a novel rotation Laplace distribution on SO(3). Our rotation Laplace distribution is robust to the disturbance of outliers and directs substantial gradient to the low-error region, where predictions can still be improved. In addition, we show that our method also exhibits robustness to small noise and thus tolerates imperfect annotations. With this benefit, we demonstrate its advantages in semi-supervised rotation regression, where the pseudo labels are noisy. To further capture the multi-modal rotation solution space for symmetric objects, we extend our distribution to a rotation Laplace mixture model and demonstrate its effectiveness. Our extensive experiments show that our proposed distribution and the mixture model achieve state-of-the-art performance in all the rotation regression experiments over both probabilistic and non-probabilistic baselines.

Delving into Discrete Normalizing Flows on SO(3) Manifold for Probabilistic Rotation Modeling

Apr 08, 2023

Abstract: Normalizing flows (NFs) provide a powerful tool to construct an expressive distribution by a sequence of tractable transformations of a base distribution and form a probabilistic model of underlying data. Rotation, as an important quantity in computer vision, graphics, and robotics, can exhibit many ambiguities when occlusion and symmetry occur and thus demands such probabilistic models. Though much progress has been made for NFs in Euclidean space, there are no effective normalizing flows without discontinuity or many-to-one mapping tailored for the SO(3) manifold. Given the unique non-Euclidean properties of the rotation manifold, adapting the existing NFs to the SO(3) manifold is non-trivial. In this paper, we propose a novel normalizing flow on SO(3) by combining a Möbius transformation-based coupling layer and a quaternion affine transformation. With our proposed rotation normalizing flows, one can not only effectively express arbitrary distributions on SO(3), but also conditionally build the target distribution given input observations. Extensive experiments show that our rotation normalizing flows significantly outperform the baselines on both unconditional and conditional tasks.

A Laplace-inspired Distribution on SO(3) for Probabilistic Rotation Estimation

Mar 03, 2023

Abstract: Estimating the 3DoF rotation from a single RGB image is an important yet challenging problem. Probabilistic rotation regression has attracted increasing attention because it expresses uncertainty information along with the prediction. Though modeling noise using the Gaussian-resembling Bingham distribution and matrix Fisher distribution is natural, they are shown to be sensitive to outliers because they penalize deviations quadratically. In this paper, we draw inspiration from the multivariate Laplace distribution and propose a novel Rotation Laplace distribution on SO(3). The Rotation Laplace distribution is robust to the disturbance of outliers and directs substantial gradient to the low-error region, resulting in better convergence. Our extensive experiments show that our proposed distribution achieves state-of-the-art performance for rotation regression tasks over both probabilistic and non-probabilistic baselines. Our project page is at https://pku-epic.github.io/RotationLaplace.

FisherMatch: Semi-Supervised Rotation Regression via Entropy-based Filtering

Mar 29, 2022

Abstract: Estimating the 3DoF rotation from a single RGB image is an important yet challenging problem. Recent works achieve good performance relying on a large amount of expensive-to-obtain labeled data. To reduce the amount of supervision, we propose, for the first time, a general framework, FisherMatch, for semi-supervised rotation regression, without assuming any domain-specific knowledge or paired data. Inspired by the popular semi-supervised approach FixMatch, we propose to leverage pseudo label filtering to facilitate the information flow from labeled data to unlabeled data in a teacher-student mutual learning framework. However, incorporating the pseudo label filtering mechanism into semi-supervised rotation regression is highly non-trivial, mainly due to the lack of a reliable confidence measure for rotation prediction. In this work, we propose to leverage the matrix Fisher distribution to build a probabilistic model of rotation and devise a matrix Fisher-based regressor for jointly predicting rotation along with its prediction uncertainty. We then propose to use the entropy of the predicted distribution as a confidence measure, which enables us to perform pseudo label filtering for rotation regression. For supervising such distribution-like pseudo labels, we further investigate the problem of how to enforce a loss between two matrix Fisher distributions. Our extensive experiments show that our method can work well even under very low labeled data ratios on different benchmarks, achieving significant and consistent performance improvement over supervised learning and other semi-supervised learning baselines. Our project page is at https://yd-yin.github.io/FisherMatch.
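The entropy-based filtering idea can be sketched in a few lines. This is only an illustration of thresholding on entropy, not the FisherMatch implementation: the function name `keep_confident` and the threshold value are hypothetical, and the entropies are assumed to have been computed elsewhere from the teacher's predicted distributions (computing the matrix Fisher entropy itself is not shown).

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def keep_confident(pseudo_labels: Sequence[T],
                   entropies: Sequence[float],
                   threshold: float) -> List[T]:
    """Keep only pseudo labels whose predicted distribution has low entropy.

    Lower entropy means a more peaked distribution, i.e. a more confident
    prediction. `threshold` is an illustrative hyperparameter (assumption).
    """
    if len(pseudo_labels) != len(entropies):
        raise ValueError("pseudo_labels and entropies must have the same length")
    return [label for label, h in zip(pseudo_labels, entropies) if h < threshold]


# Placeholder labels with made-up entropies; differential entropy of a
# continuous distribution can be negative, so a negative threshold is valid.
kept = keep_confident(["rot_a", "rot_b", "rot_c"], [-3.0, 1.5, -0.2], threshold=-1.0)
```

Only the retained pseudo labels would then supervise the student on unlabeled data.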

Projective Manifold Gradient Layer for Deep Rotation Regression

Oct 22, 2021

Abstract: Regressing rotations on the SO(3) manifold using deep neural networks is an important yet unsolved problem. The gap between the Euclidean network output space and the non-Euclidean SO(3) manifold imposes a severe challenge for neural network learning in both forward and backward passes. While several works have proposed different regression-friendly rotation representations, very few works have been devoted to improving gradient backpropagation in the backward pass. In this paper, we propose a manifold-aware gradient that directly backpropagates into deep network weights. Leveraging the Riemannian gradient and a novel projective gradient, our proposed regularized projective manifold gradient (RPMG) helps networks achieve new state-of-the-art performance in a variety of rotation estimation tasks. The proposed gradient layer can also be applied to other smooth manifolds such as the unit sphere.
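To make the gap between Euclidean outputs and SO(3) concrete, the sketch below maps an arbitrary 3x3 matrix to the nearest rotation matrix using the standard SVD-based projection. This is a well-known generic technique shown for illustration only; it is not the RPMG layer described in the abstract, and the function name `project_to_so3` is hypothetical.

```python
import numpy as np


def project_to_so3(m: np.ndarray) -> np.ndarray:
    """Project a 3x3 matrix onto SO(3) via SVD (nearest rotation in Frobenius norm)."""
    u, _, vt = np.linalg.svd(m)
    # u @ vt is orthogonal, so its determinant is +1 or -1.
    d = np.sign(np.linalg.det(u @ vt))
    # Flip the last singular direction if needed so the result is a proper
    # rotation (determinant +1) rather than a reflection.
    return u @ np.diag([1.0, 1.0, d]) @ vt


# A raw, unconstrained network-style output (values are arbitrary).
raw = np.array([[0.9, -0.2, 0.1],
                [0.3, 1.1, -0.1],
                [0.0, 0.2, 0.8]])
rotation = project_to_so3(raw)
```

The result satisfies `rotation @ rotation.T ≈ I` with determinant 1, whereas the raw matrix generally does not.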

Active Visual Localization in Partially Calibrated Environments

Dec 08, 2020

Abstract: Humans can robustly localize themselves without a map after they get lost, by following prominent visual cues or landmarks. In this work, we aim at endowing autonomous agents with the same ability. Such an ability is important in robotics applications yet very challenging when an agent is exposed to partially calibrated environments, where camera images with accurate 6 Degree-of-Freedom pose labels only cover part of the scene. To address the above challenge, we explore using Reinforcement Learning to search for a policy to generate intelligent motions so as to actively localize the agent given visual information in partially calibrated environments. Our core contribution is to formulate the active visual localization problem as a Partially Observable Markov Decision Process and propose an algorithmic framework based on Deep Reinforcement Learning to solve it. We further propose an indoor scene dataset, ACR-6, which consists of both synthetic and real data and simulates challenging scenarios for active visual localization. We benchmark our algorithm against handcrafted baselines for localization and demonstrate that our approach significantly outperforms them on localization success rate.

Deep Reflection Prior

Dec 08, 2019

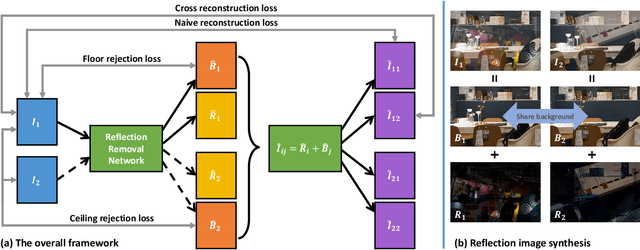

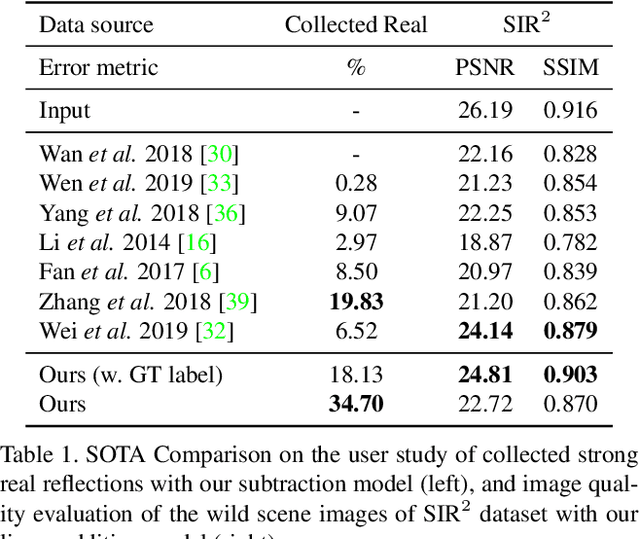

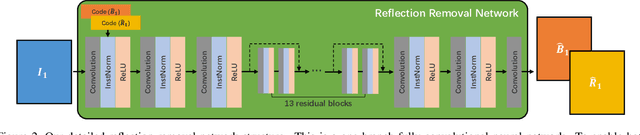

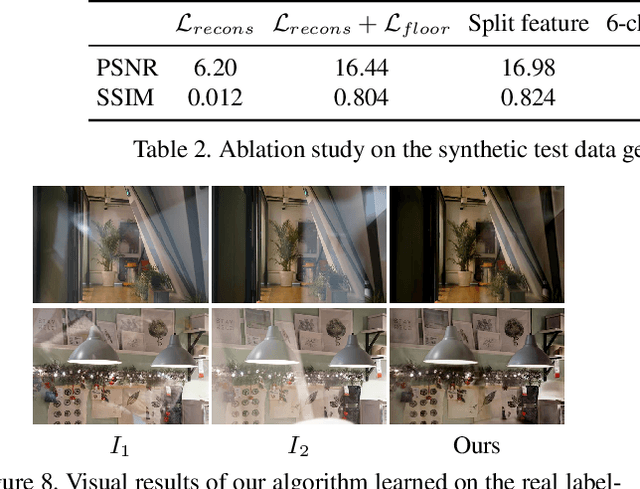

Abstract: Reflections are very common phenomena in our daily photography, which distract people's attention from the scene behind the glass. The problem of removing reflection artifacts is important but challenging due to its ill-posed nature. Recent learning-based approaches have demonstrated a significant improvement in removing reflections. However, these methods are limited as they require a large number of synthetic reflection/clean image pairs for supervision, at the risk of overfitting in the synthetic image domain. In this paper, we propose a learning-based approach that captures the reflection statistical prior for single image reflection removal. Our algorithm is driven by optimizing the target with joint constraints enhanced between multiple input images during the training stage, but is able to eliminate reflections from only a single input for evaluation. Our framework predicts both background and reflection via a one-branch deep neural network, which is implemented by a controllable latent code that indicates either the background or reflection output. We demonstrate superior performance over the state-of-the-art methods on a large range of real-world images. We further provide insightful analysis behind the learned latent code, which may inspire more future work.
