Yibo Chen

IVC-Prune: Revealing the Implicit Visual Coordinates in LVLMs for Vision Token Pruning

Feb 03, 2026

Abstract:Large Vision-Language Models (LVLMs) achieve impressive performance across multiple tasks. A significant challenge, however, is their prohibitive inference cost when processing high-resolution visual inputs. While visual token pruning has emerged as a promising solution, existing methods that primarily focus on semantic relevance often discard tokens that are crucial for spatial reasoning. We address this gap through a novel insight into how LVLMs process spatial reasoning. Specifically, we reveal that LVLMs implicitly establish visual coordinate systems through Rotary Position Embeddings (RoPE), where specific token positions serve as implicit visual coordinates (IVC tokens) that are essential for spatial reasoning. Based on this insight, we propose IVC-Prune, a training-free, prompt-aware pruning strategy that retains both IVC tokens and semantically relevant foreground tokens. IVC tokens are identified by theoretically analyzing the mathematical properties of RoPE, targeting positions at which its rotation matrices approximate the identity matrix or the 90° rotation matrix. Foreground tokens are identified through a robust two-stage process: semantic seed discovery followed by contextual refinement via value-vector similarity. Extensive evaluations across four representative LVLMs and twenty diverse benchmarks show that IVC-Prune reduces visual tokens by approximately 50% while maintaining at least 99% of the original performance and even achieving improvements on several benchmarks. Source code is available at https://github.com/FireRedTeam/IVC-Prune.
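The RoPE property mentioned in the abstract can be illustrated with a small sketch. It assumes the standard RoPE parameterization, in which the 2x2 rotation for position m and frequency pair i uses the angle m · base^(-2i/d). The function names, the tolerance `tol`, and the use of a single frequency pair are assumptions made for illustration; the abstract does not state the paper's actual selection rule.

```python
import math


def rope_angle(position, pair_index, head_dim, base=10000.0):
    """Rotation angle (radians, wrapped to [0, 2*pi)) of standard RoPE
    for one token position and one 2-D frequency pair."""
    theta = base ** (-2.0 * pair_index / head_dim)
    return (position * theta) % (2.0 * math.pi)


def find_candidate_positions(num_positions, pair_index, head_dim, tol=0.05):
    """Return positions whose rotation matrix [[c, -s], [s, c]] is close to
    the identity (c ~ 1, s ~ 0) or to a 90-degree rotation (c ~ 0, s ~ 1).

    The tolerance and the single-frequency test are illustrative
    assumptions, not the criterion used by IVC-Prune."""
    near_identity, near_quarter_turn = [], []
    for m in range(num_positions):
        angle = rope_angle(m, pair_index, head_dim)
        c, s = math.cos(angle), math.sin(angle)
        if abs(c - 1.0) < tol and abs(s) < tol:
            near_identity.append(m)
        elif abs(c) < tol and abs(s - 1.0) < tol:
            near_quarter_turn.append(m)
    return near_identity, near_quarter_turn


if __name__ == "__main__":
    identity_like, quarter_like = find_candidate_positions(
        num_positions=64, pair_index=0, head_dim=64
    )
    print("near identity:", identity_like)
    print("near 90 degrees:", quarter_like)
```

Position 0 always yields the identity rotation; other positions qualify only when their angle happens to land near a multiple of 2π or near π/2 for the chosen frequency.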

Toward Super Agent System with Hybrid AI Routers

Apr 11, 2025

Abstract:AI Agents powered by Large Language Models are transforming the world through a vast range of applications. A super agent has the potential to fulfill diverse user needs, such as summarization, coding, and research, by accurately understanding user intent and leveraging the appropriate tools to solve tasks. However, to make such an agent viable for real-world deployment and accessible at scale, significant optimizations are required to ensure high efficiency and low cost. This paper presents a design of the Super Agent System. Upon receiving a user prompt, the system first detects the intent of the user, then routes the request to specialized task agents with the necessary tools or automatically generates agentic workflows. In practice, most applications directly serve as AI assistants on edge devices such as phones and robots. As different language models vary in capability and cloud-based models often entail high computational costs, latency, and privacy concerns, we then explore the hybrid mode where the router dynamically selects between local and cloud models based on task complexity. Finally, we introduce the blueprint of an on-device super agent enhanced with cloud. With advances in multi-modality models and edge hardware, we envision that most computations can be handled locally, with cloud collaboration only as needed. Such an architecture paves the way for super agents to be seamlessly integrated into everyday life in the near future.
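The two routing steps described in the abstract (intent detection, then a local-versus-cloud choice based on task complexity) might be sketched as follows. Everything here is hypothetical: the keyword table, the word-count complexity score, and the 0.5 threshold are placeholders chosen for illustration, and the paper's routers are presumably learned models rather than hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class Route:
    intent: str   # detected user intent
    target: str   # "local" or "cloud"


# Hypothetical keyword table; a real system would use a trained classifier.
INTENT_KEYWORDS = {
    "summarization": ("summarize", "tl;dr"),
    "coding": ("code", "function", "bug"),
    "research": ("research", "survey", "compare"),
}


def detect_intent(prompt):
    """Return the first intent whose keyword appears in the prompt."""
    text = prompt.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "general"


def estimate_complexity(prompt, intent):
    """Toy complexity score in [0, 1]: longer prompts score higher, and
    research requests are assumed to need a stronger model."""
    score = min(1.0, len(prompt.split()) / 200.0)
    if intent == "research":
        score = max(score, 0.8)
    return score


def route(prompt, threshold=0.5):
    """Send simple requests to the on-device model, complex ones to the cloud."""
    intent = detect_intent(prompt)
    score = estimate_complexity(prompt, intent)
    target = "cloud" if score >= threshold else "local"
    return Route(intent=intent, target=target)


if __name__ == "__main__":
    print(route("Summarize this note"))
    print(route("Research and compare edge LLM runtimes"))
```

Keeping the threshold as a parameter makes the cost, latency, and privacy trade-off explicit: raising it keeps more requests on the device.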

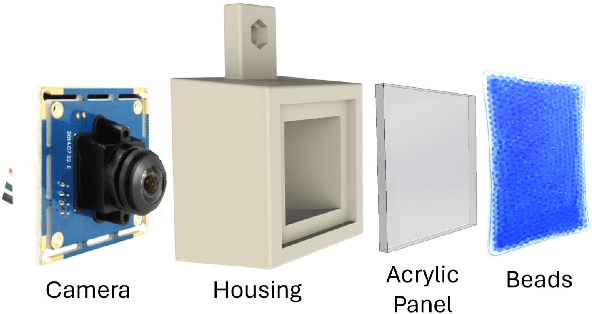

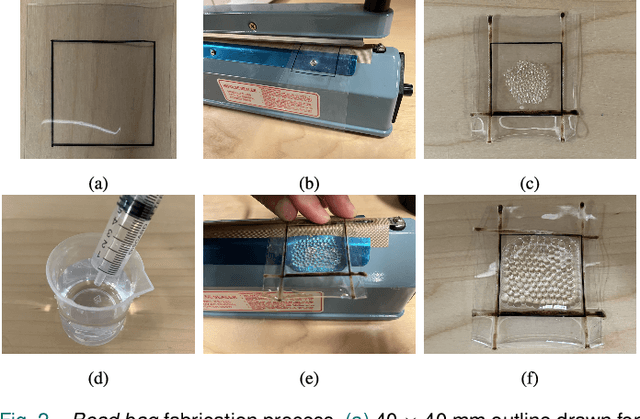

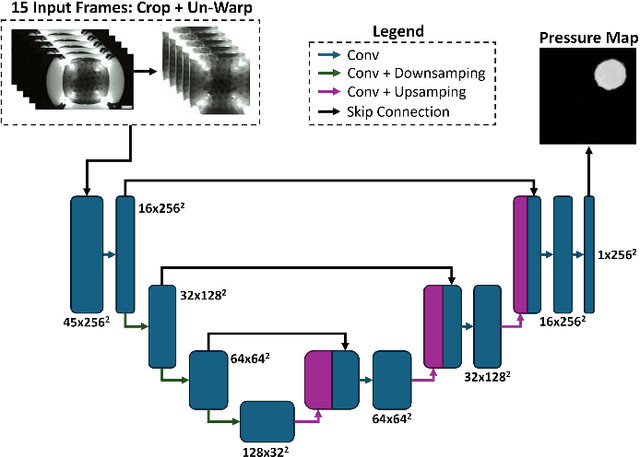

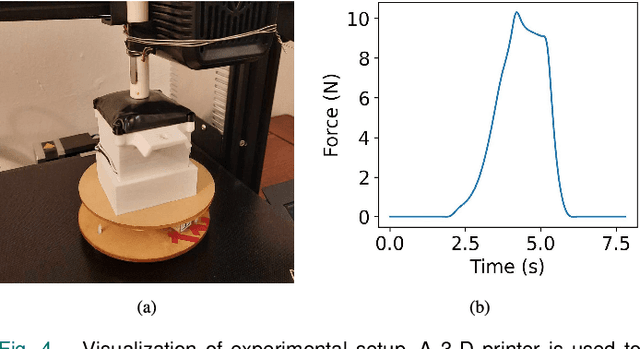

BeadSight: An Inexpensive Tactile Sensor Using Hydro-Gel Beads

May 21, 2024

Abstract:In robotic manipulation, tactile sensors are indispensable, especially when dealing with soft objects, objects of varying dimensions, or those out of the robot's direct line of sight. Traditional tactile sensors often grapple with challenges related to cost and durability. To address these issues, our study introduces a novel approach to visuo-tactile sensing with an emphasis on economy and replaceability. Our proposed sensor, BeadSight, uses hydro-gel beads encased in a vinyl bag as an economical, easily replaceable sensing medium. When the sensor makes contact with a surface, the deformation of the hydro-gel beads is observed using a rear camera. This observation is then passed through a U-Net neural network to predict the forces acting on the surface of the bead bag, in the form of a pressure map. Our results show that the sensor can accurately predict these pressure maps, detecting the location and magnitude of forces applied to the surface. These abilities make BeadSight an effective, inexpensive, and easily replaceable tactile sensor, ideal for many robotics applications.

GraphPAS: Parallel Architecture Search for Graph Neural Networks

Dec 07, 2021

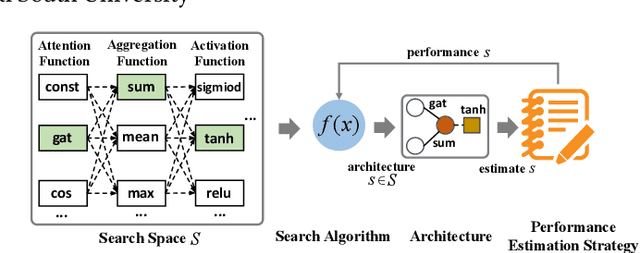

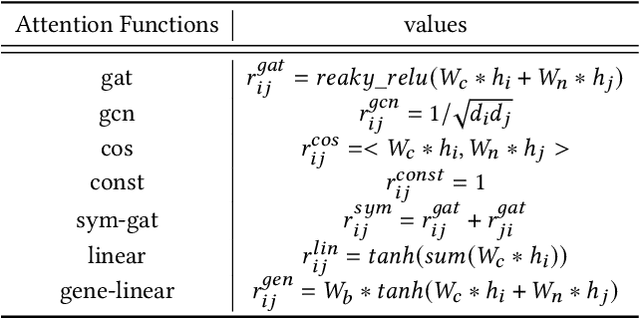

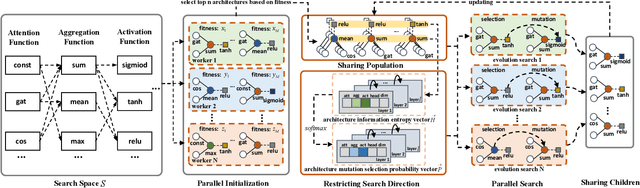

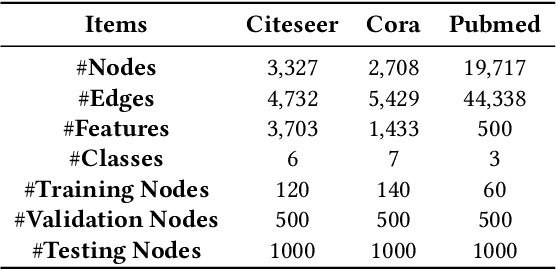

Abstract:Graph neural architecture search has received a lot of attention as Graph Neural Networks (GNNs) have recently been successfully applied to non-Euclidean data. However, exploring all possible GNN architectures in the huge search space is too time-consuming or impossible for big graph data. In this paper, we propose a parallel graph architecture search (GraphPAS) framework for graph neural networks. In GraphPAS, we explore the search space in parallel by designing a sharing-based evolution learning, which can improve the search efficiency without losing accuracy. Additionally, architecture information entropy is adopted dynamically for mutation selection probability, which can reduce space exploration. The experimental results show that GraphPAS outperforms state-of-the-art models in both efficiency and accuracy.
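One way to read the entropy-based mutation step in the abstract is sketched below: compute the Shannon entropy of each architecture component over a population of candidates, then normalize the entropies into per-component mutation probabilities. The assumption that higher-entropy components are mutated more often, the tuple encoding of an architecture, and the function names are all illustrative choices; the abstract does not specify them.

```python
import math
from collections import Counter


def component_entropy(values):
    """Shannon entropy (in bits) of the choices observed for one component."""
    counts = Counter(values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def mutation_probabilities(population):
    """Map a population of architectures (equal-length tuples of component
    choices) to one mutation probability per component.

    Assumption: probability is proportional to the component's entropy,
    so components the population already agrees on are rarely mutated."""
    num_components = len(population[0])
    entropies = [
        component_entropy([arch[i] for arch in population])
        for i in range(num_components)
    ]
    total = sum(entropies)
    if total == 0:
        # Every component is identical across the population: fall back to uniform.
        return [1.0 / num_components] * num_components
    return [h / total for h in entropies]


if __name__ == "__main__":
    # Hypothetical encoding: (layer type, activation, aggregator).
    population = [
        ("gcn", "relu", "sum"),
        ("gat", "relu", "sum"),
        ("gcn", "relu", "mean"),
        ("gat", "relu", "sum"),
    ]
    print(mutation_probabilities(population))
```

In this toy population the activation is always `relu`, so its probability is zero and the search effort is concentrated on the layer type and aggregator.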

Zoom-In-to-Check: Boosting Video Interpolation via Instance-level Discrimination

Dec 04, 2018

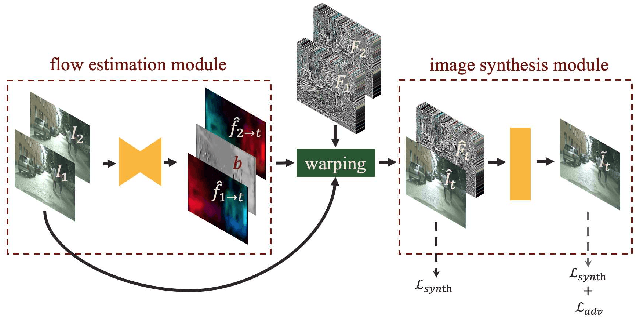

Abstract:We propose a light-weight video frame interpolation algorithm. Our key innovation is an instance-level supervision that allows information to be learned from the high-resolution version of similar objects. Our experiments show that the proposed method can generate state-of-the-art results across different datasets, using a fraction of the computation resources (time and memory) of competing methods. Given two image frames, a cascade network creates an intermediate frame with 1) a flow-warping module that computes large bi-directional optical flow and creates an interpolated image via flow-based warping, followed by 2) an image synthesis module to make fine-scale corrections. In the learning stage, object detection proposals are generated on the interpolated image. Lower-resolution objects are zoomed into, and an adversarial loss trained on high-resolution objects guides the system towards instance-level refinement that corrects details of object shape and boundaries. As all our proposed network modules are fully convolutional, our proposed system can be trained end-to-end.

Realtime Time Synchronized Event-based Stereo

Oct 18, 2018

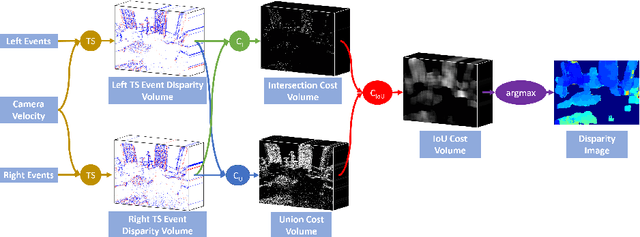

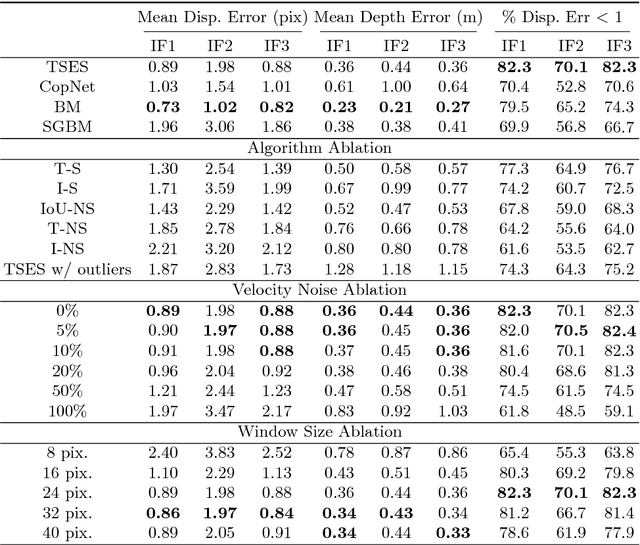

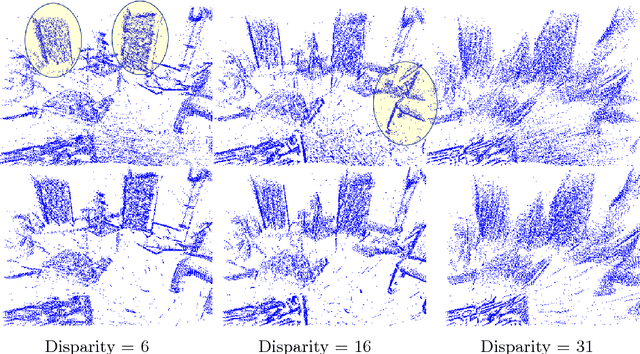

Abstract:In this work, we propose a novel event-based stereo method which addresses the problem of motion blur for a moving event camera. Our method uses the velocity of the camera and a range of disparities to synchronize the positions of the events, as if they were captured at a single point in time. We represent these events using a pair of novel time-synchronized event disparity volumes, which we show remove motion blur for pixels at the correct disparity in the volume, while further blurring pixels at the wrong disparity. We then apply a novel matching cost over these time-synchronized event disparity volumes, which rewards similarity between the volumes while penalizing blurriness. We show that our method outperforms more expensive, smoothing-based event stereo methods, by evaluating on the Multi Vehicle Stereo Event Camera dataset.
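The synchronization idea in the abstract can be sketched under a strongly simplified model: a camera translating purely along the x axis with known speed, so that a point at disparity d (depth Z = f·b/d) moves in the image at -v_x·d/b pixels per second. The pure-lateral-motion model, the function names, and the list-based "volume" are assumptions for illustration; the paper's formulation handles more general motion.

```python
def synchronize_events(events, velocity_x, baseline, disparities, t_ref):
    """Warp events (x, y, t) to a common time t_ref, once per candidate disparity.

    Simplifying assumption: the camera translates only along x, so the image
    flow of a point at disparity d is -velocity_x * d / baseline (px/s)."""
    volume = {}
    for d in disparities:
        flow_x = -velocity_x * d / baseline
        volume[d] = [(x + flow_x * (t_ref - t), y) for (x, y, t) in events]
    return volume


def horizontal_spread(points):
    """Width of the warped events along x; a simple proxy for motion blur."""
    xs = [x for x, _ in points]
    return max(xs) - min(xs)


if __name__ == "__main__":
    # Events from one scene point at true disparity 10 px, seen by a camera
    # moving at 0.5 m/s with a 0.1 m baseline (image flow of -50 px/s).
    events = [(100.0, 20.0, 0.00), (99.5, 20.0, 0.01), (99.0, 20.0, 0.02)]
    volume = synchronize_events(
        events, velocity_x=0.5, baseline=0.1, disparities=(10, 30), t_ref=0.02
    )
    for d, points in volume.items():
        print(d, horizontal_spread(points))
```

At the correct disparity the warped events collapse onto one pixel column, while at the wrong disparity they spread further apart than the raw events, which is the behavior the matching cost in the abstract is described as exploiting.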

* 13 pages, 3 figures, 1 table. Video: https://youtu.be/4oa7e4hsrYo. Updated with final version with additional experiments

DoraPicker: An Autonomous Picking System for General Objects

Mar 21, 2016

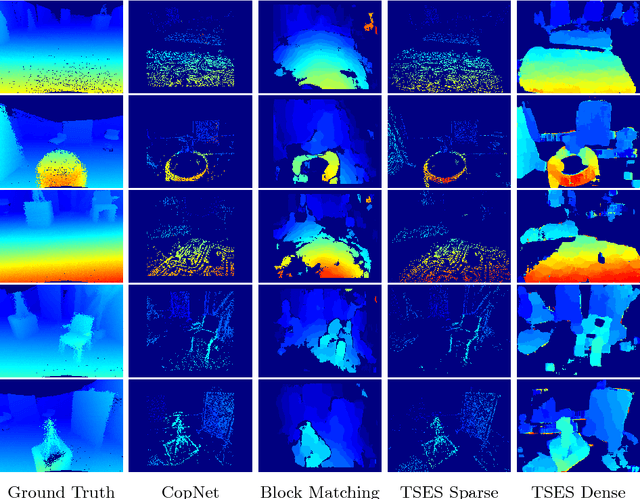

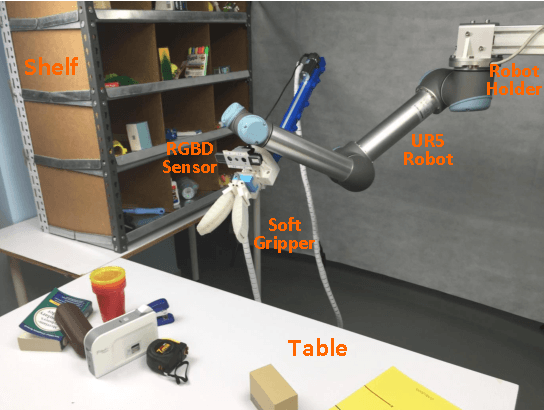

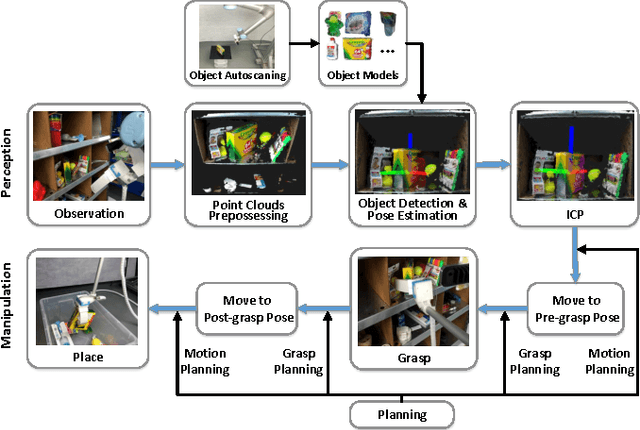

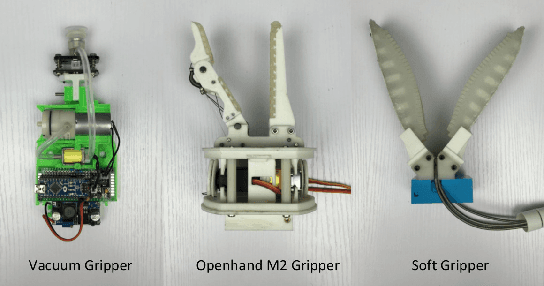

Abstract:Robots that autonomously manipulate objects within warehouses have the potential to shorten the package delivery time and improve the efficiency of the e-commerce industry. In this paper, we present a robotic system that is capable of both picking and placing general objects in warehouse scenarios. Given a target object, the robot autonomously detects it from a shelf or a table and estimates its full 6D pose. With this pose information, the robot picks the object using its gripper, and then places it into a container or at a specified location. We describe our pick-and-place system in detail while highlighting our design principles for the warehouse settings, including the perception method that leverages knowledge about its workspace, three grippers designed to handle a large variety of different objects in terms of shape, weight and material, and grasp planning in cluttered scenarios. We also present extensive experiments to evaluate the performance of our picking system and demonstrate that the robot is competent to accomplish various tasks in warehouse settings, such as picking a target item from a tight space, grasping different objects from the shelf, and performing pick-and-place tasks on the table.
