Peter Pak

AdditiveLLM: Large Language Models Predict Defects in Additive Manufacturing

Jan 29, 2025

Abstract: In this work we investigate the ability of large language models to predict additive manufacturing defect regimes given a set of process parameter inputs. For this task we use a process parameter defect dataset to fine-tune a collection of models, titled AdditiveLLM, to predict potential defect regimes including Keyholing, Lack of Fusion, and Balling. We compare different methods of input formatting to gauge how accurately the model predicts defect regimes on our sparse Baseline dataset and our natural language Prompt dataset. The model displays robust predictive capability, achieving an accuracy of 93% when asked to provide the defect regimes associated with a set of process parameters. The incorporation of natural language input further simplifies the task of process parameter selection, enabling users to identify optimal settings specific to their build.
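As an illustration of what comparing input formats could look like, here is a minimal sketch that renders one set of process parameters in a sparse style and in a natural language style. The field names, units, and wording of both formats are assumptions made for this example; the abstract does not specify the actual parameters or templates used for the Baseline and Prompt datasets.

```python
from dataclasses import dataclass

# Defect regimes named in the abstract.
DEFECT_REGIMES = ("Keyholing", "Lack of Fusion", "Balling")


@dataclass
class ProcessParameters:
    # Hypothetical fields and units; not taken from the paper.
    material: str
    power_w: float
    velocity_mm_s: float
    hatch_spacing_um: float
    layer_height_um: float


def format_baseline(p: ProcessParameters) -> str:
    """Sparse, value-only input (assumed layout)."""
    return (
        f"{p.material} {p.power_w:g} {p.velocity_mm_s:g} "
        f"{p.hatch_spacing_um:g} {p.layer_height_um:g}"
    )


def format_prompt(p: ProcessParameters) -> str:
    """Natural language input (assumed wording)."""
    return (
        f"What defects may occur when printing {p.material} at "
        f"{p.power_w:g} W laser power, {p.velocity_mm_s:g} mm/s scan velocity, "
        f"{p.hatch_spacing_um:g} um hatch spacing, and "
        f"{p.layer_height_um:g} um layer height?"
    )


example = ProcessParameters("Ti-6Al-4V", 280.0, 1200.0, 100.0, 30.0)
baseline_text = format_baseline(example)
prompt_text = format_prompt(example)
```

Either string would then be passed to a fine-tuned classifier whose output labels are drawn from `DEFECT_REGIMES`; the classifier itself is outside the scope of this sketch.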

LLM-3D Print: Large Language Models To Monitor and Control 3D Printing

Aug 26, 2024

Abstract: Industry 4.0 has revolutionized manufacturing by driving digitalization and shifting the paradigm toward additive manufacturing (AM). Fused Deposition Modeling (FDM), a key AM technology, enables the creation of highly customized, cost-effective products with minimal material waste through layer-by-layer extrusion, posing a significant challenge to traditional subtractive methods. However, the susceptibility of material extrusion techniques to errors often requires expert intervention to detect and mitigate defects that can severely compromise product quality. While automated error detection and machine learning models exist, their generalizability across diverse 3D printer setups, firmware, and sensors is limited, and deep learning methods require extensive labeled datasets, hindering scalability and adaptability. To address these challenges, we present a process monitoring and control framework that leverages pre-trained Large Language Models (LLMs) alongside 3D printers to detect and address printing defects. The LLM evaluates print quality by analyzing images captured after each layer or print segment, identifying failure modes and querying the printer for relevant parameters. It then generates and executes a corrective action plan. We validated the effectiveness of the proposed framework in identifying defects by comparing it against a control group of engineers with diverse AM expertise. Our evaluation demonstrated that LLM-based agents not only accurately identify common 3D printing errors, such as inconsistent extrusion, stringing, warping, and layer adhesion, but also effectively determine the parameters causing these failures and autonomously correct them without any need for human intervention.
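The per-layer sequence described in the abstract (analyze an image, identify failure modes, query the printer, then plan and execute corrections) can be sketched as a small control step. This is only a skeleton under stated assumptions: the function name `monitor_layer` and all four callables are hypothetical placeholders for the LLM and printer interfaces, which the abstract does not detail.

```python
from typing import Callable, Dict, List


def monitor_layer(
    image: bytes,
    detect_failures: Callable[[bytes], List[str]],
    query_parameters: Callable[[List[str]], Dict[str, float]],
    plan_corrections: Callable[[List[str], Dict[str, float]], Dict[str, float]],
    apply_corrections: Callable[[Dict[str, float]], None],
) -> Dict[str, float]:
    """Run one monitoring step; all callables are hypothetical stand-ins."""
    # 1. Identify failure modes from the image of the latest layer.
    failures = detect_failures(image)
    if not failures:
        return {}  # Nothing to correct for this layer.
    # 2. Ask the printer for the parameters relevant to those failures.
    current = query_parameters(failures)
    # 3. Generate a corrective plan, then 4. execute it on the printer.
    corrections = plan_corrections(failures, current)
    apply_corrections(corrections)
    return corrections


# Usage with stub callables standing in for the LLM and the printer.
applied: List[Dict[str, float]] = []
result = monitor_layer(
    b"fake-image-bytes",
    detect_failures=lambda img: ["stringing"],
    query_parameters=lambda failures: {"nozzle_temp_c": 215.0},
    plan_corrections=lambda failures, params: {
        "nozzle_temp_c": params["nozzle_temp_c"] - 5.0
    },
    apply_corrections=applied.append,
)
```

Passing the LLM and printer calls in as arguments keeps the step independent of any particular printer firmware or model API, and makes it easy to test with stubs as shown.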

BeadSight: An Inexpensive Tactile Sensor Using Hydro-Gel Beads

May 21, 2024

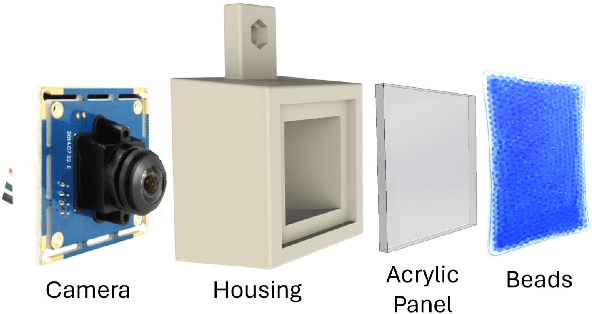

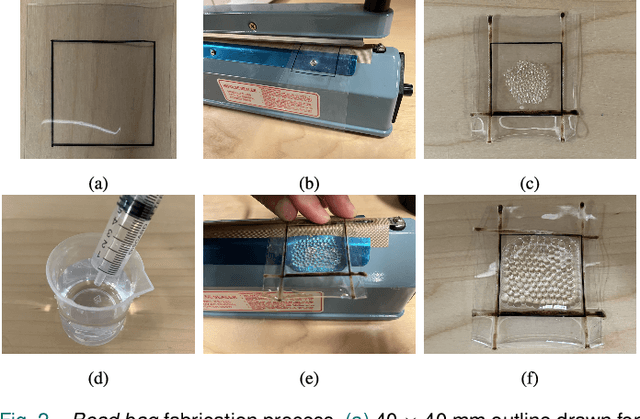

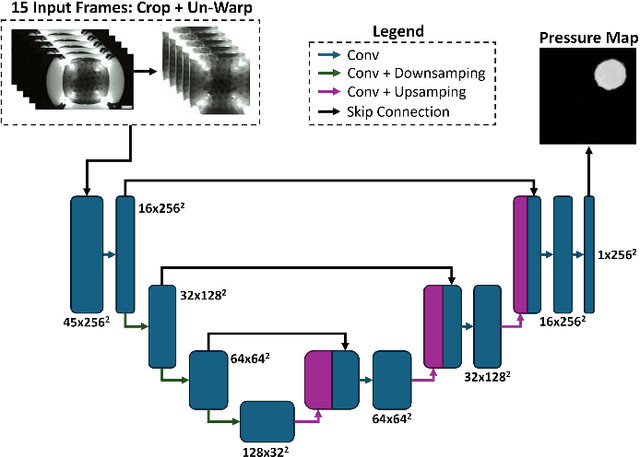

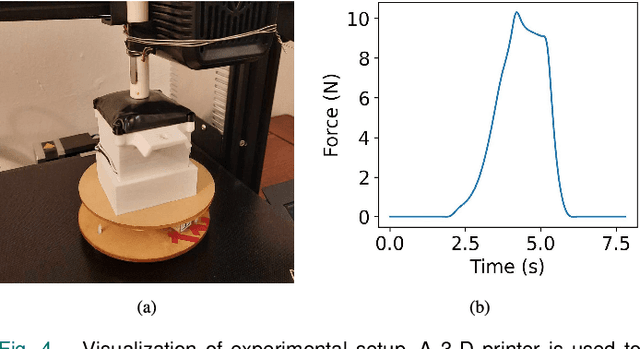

Abstract: In robotic manipulation, tactile sensors are indispensable, especially when dealing with soft objects, objects of varying dimensions, or those out of the robot's direct line of sight. Traditional tactile sensors often grapple with challenges related to cost and durability. To address these issues, our study introduces a novel approach to visuo-tactile sensing with an emphasis on economy and replaceability. Our proposed sensor, BeadSight, uses hydrogel beads encased in a vinyl bag as an economical, easily replaceable sensing medium. When the sensor makes contact with a surface, the deformation of the hydrogel beads is observed using a rear camera. This observation is then passed through a U-Net neural network to predict the forces acting on the surface of the bead bag, in the form of a pressure map. Our results show that the sensor can accurately predict these pressure maps, detecting the location and magnitude of forces applied to the surface. These abilities make BeadSight an effective, inexpensive, and easily replaceable tactile sensor, ideal for many robotics applications.
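To make concrete how a predicted pressure map yields both a contact location and a force magnitude, here is a minimal sketch of one possible post-processing step. It is not the authors' method: the function name, the use of the peak cell as the contact location, and the `pixel_area_mm2` scale factor are assumptions for illustration.

```python
from typing import List, Tuple


def summarize_pressure_map(
    pressure_map: List[List[float]],
    pixel_area_mm2: float = 1.0,  # Hypothetical area covered by one map cell.
) -> Tuple[Tuple[int, int], float]:
    """Return the (row, col) of peak pressure and the total force.

    Total force is the sum of pressure times cell area over the map,
    so pressure in N/mm^2 gives a force in N.
    """
    peak_value = float("-inf")
    peak_location = (0, 0)
    total_force = 0.0
    for r, row in enumerate(pressure_map):
        for c, value in enumerate(row):
            total_force += value * pixel_area_mm2
            if value > peak_value:
                peak_value, peak_location = value, (r, c)
    return peak_location, total_force


# A tiny 3x3 map with contact centered on the middle cell.
demo_map = [
    [0.0, 0.0, 0.0],
    [0.0, 2.0, 1.0],
    [0.0, 0.0, 0.0],
]
location, force = summarize_pressure_map(demo_map, pixel_area_mm2=0.5)
```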
