Xueming Li

InterChat: Enhancing Generative Visual Analytics using Multimodal Interactions

Mar 06, 2025

Abstract: The rise of Large Language Models (LLMs) and generative visual analytics systems has transformed data-driven insights, yet significant challenges persist in accurately interpreting users' analytical and interaction intents. While language inputs offer flexibility, they often lack precision, making the expression of complex intents inefficient, error-prone, and time-intensive. To address these limitations, we investigate the design space of multimodal interactions for generative visual analytics through a literature review and pilot brainstorming sessions. Building on these insights, we introduce a highly extensible workflow that integrates multiple LLM agents for intent inference and visualization generation. We develop InterChat, a generative visual analytics system that combines direct manipulation of visual elements with natural language inputs. This integration enables precise intent communication and supports progressive, visually driven exploratory data analyses. By employing effective prompt engineering and contextual interaction linking, alongside intuitive visualization and interaction designs, InterChat bridges the gap between user interactions and LLM-driven visualizations, enhancing both interpretability and usability. Extensive evaluations, including two usage scenarios, a user study, and expert feedback, demonstrate the effectiveness of InterChat. Results show significant improvements in the accuracy and efficiency of handling complex visual analytics tasks, highlighting the potential of multimodal interactions to redefine user engagement and analytical depth in generative visual analytics.

Sketch-1-to-3: One Single Sketch to 3D Detailed Face Reconstruction

Feb 25, 2025

Abstract: 3D face reconstruction from a single sketch is a critical yet underexplored task with significant practical applications. The primary challenges stem from the substantial modality gap between 2D sketches and 3D facial structures, including: (1) accurately extracting facial keypoints from 2D sketches; (2) preserving diverse facial expressions and fine-grained texture details; and (3) training a high-performing model with limited data. In this paper, we propose Sketch-1-to-3, a novel framework for realistic 3D face reconstruction from a single sketch, to address these challenges. Specifically, we first introduce the Geometric Contour and Texture Detail (GCTD) module, which enhances the extraction of geometric contours and texture details from facial sketches. Additionally, we design a deep learning architecture with a domain adaptation module and a tailored loss function to align sketches with the 3D facial space, enabling high-fidelity expression and texture reconstruction. To facilitate evaluation and further research, we construct SketchFaces, a real hand-drawn facial sketch dataset, and Syn-SketchFaces, a synthetic facial sketch dataset. Extensive experiments demonstrate that Sketch-1-to-3 achieves state-of-the-art performance in sketch-based 3D face reconstruction.

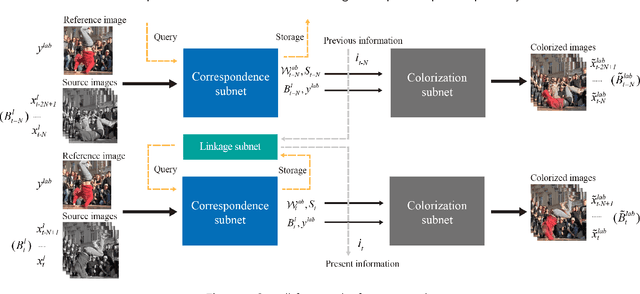

Temporal Consistent Automatic Video Colorization via Semantic Correspondence

May 13, 2023

Abstract: The video colorization task has recently attracted wide attention. Recent methods mainly address temporal consistency between adjacent frames or frames separated by a small interval. However, they still face the severe challenge of inconsistency between frames separated by a large interval. To address this issue, we propose a novel video colorization framework that incorporates semantic correspondence into automatic video colorization to maintain long-range consistency. First, a reference colorization network is designed to automatically colorize the first frame of each video, obtaining a reference image to supervise the entire subsequent colorization process. Such an automatically colorized reference image not only avoids labor-intensive and time-consuming manual selection, but also enhances the similarity between the reference and grayscale images. Afterwards, a semantic correspondence network and an image colorization network are introduced to colorize the remaining frames with the help of the reference. Each frame is supervised by both the reference image and the immediately preceding colorized frame to improve both short-range and long-range temporal consistency. Extensive experiments demonstrate that our method outperforms other methods in maintaining temporal consistency both qualitatively and quantitatively. In the NTIRE 2023 Video Colorization Challenge, our method ranked 3rd in the Color Distribution Consistency (CDC) Optimization track.

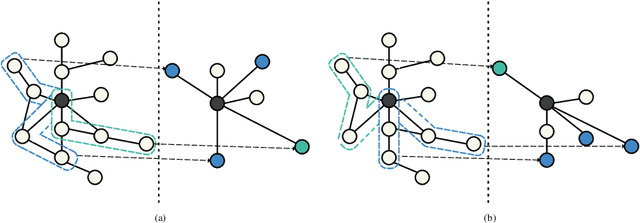

SPColor: Semantic Prior Guided Exemplar-based Image Colorization

Apr 14, 2023

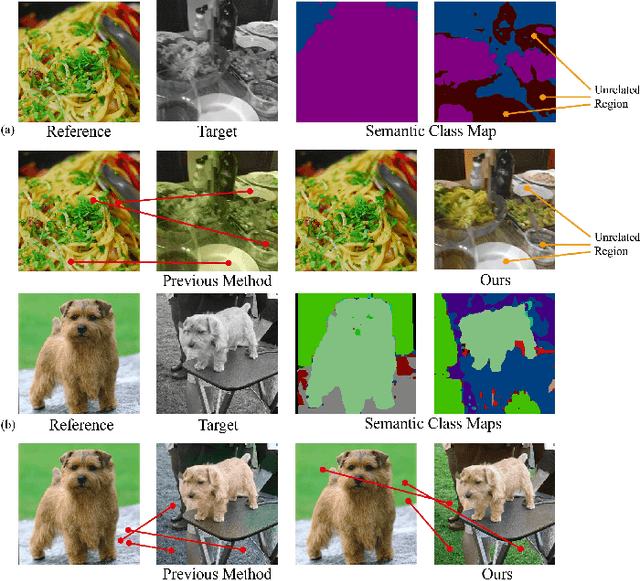

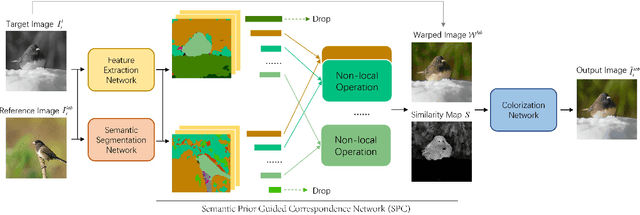

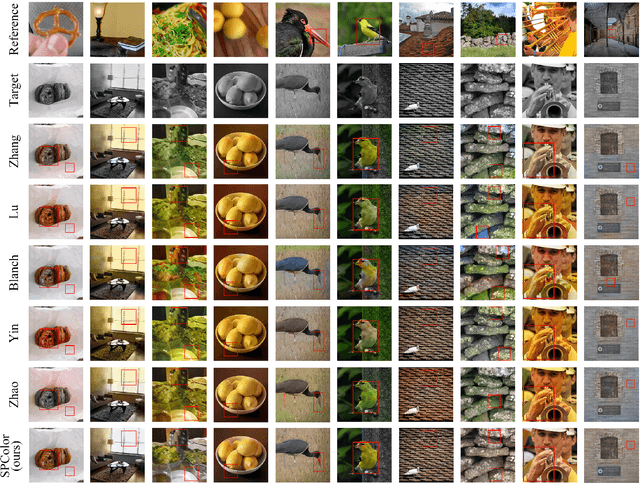

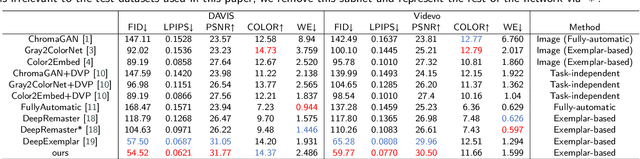

Abstract: Exemplar-based image colorization aims to colorize a target grayscale image based on a color reference image, and the key is to establish accurate pixel-level semantic correspondence between these two images. Previous methods search for correspondence across the entire reference image, and this type of global matching is prone to mismatches. We summarize the difficulties in two aspects: (1) When the reference image contains only some of the objects related to the target image, improper correspondence will be established in unrelated regions. (2) Mismatches are likely in regions where the shape or texture of the object is easily confused. To overcome these issues, we propose SPColor, a semantic prior guided exemplar-based image colorization framework. Unlike previous methods, SPColor first coarsely classifies the pixels of the reference and target images into several pseudo-classes under the guidance of a semantic prior; correspondences are then established only locally, between pixels in the same class, via the newly designed semantic prior guided correspondence network. In this way, improper correspondence between different semantic classes is explicitly excluded, and mismatches are clearly alleviated. In addition, to better preserve the color from the reference, a similarity masked perceptual loss is designed. Note that SPColor uses the semantic prior provided by an unsupervised segmentation model, so it requires no additional manual semantic annotations. Experiments demonstrate that our model outperforms recent state-of-the-art methods both quantitatively and qualitatively on a public dataset.
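The idea of restricting correspondence to pixels of the same pseudo-class can be illustrated with a small sketch. This is not the SPColor correspondence network: the function name `match_within_class`, the toy feature vectors, and the use of cosine similarity with a hard argmax are all assumptions made purely for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def match_within_class(tgt_feats, tgt_cls, ref_feats, ref_cls):
    """For each target pixel, pick the most similar reference pixel
    among those sharing its pseudo-class (hypothetical helper).
    Returns a reference index per target pixel, or None when the
    reference has no pixel of that class."""
    matches = []
    for feat, cls in zip(tgt_feats, tgt_cls):
        candidates = [j for j, rc in enumerate(ref_cls) if rc == cls]
        if not candidates:
            matches.append(None)  # no related region in the reference
            continue
        matches.append(max(candidates, key=lambda j: cosine(feat, ref_feats[j])))
    return matches

# Toy data: three target pixels, three reference pixels.
tgt_feats = [[1.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
tgt_cls = [0, 1, 2]
ref_feats = [[0.5, 0.5], [1.0, 0.0], [0.9, 0.1]]
ref_cls = [1, 0, 0]

matches = match_within_class(tgt_feats, tgt_cls, ref_feats, ref_cls)
print(matches)  # [1, 0, None]
```

In this toy case the second target pixel would match reference pixel 1 under global matching, since their features are identical, but the class constraint forces it to reference pixel 0, the only pixel of class 1. The third target pixel has no counterpart class in the reference and is left unmatched.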

Exemplar-based Video Colorization with Long-term Spatiotemporal Dependency

Mar 27, 2023

Abstract: Exemplar-based video colorization is an essential technique for applications like old movie restoration. Although recent methods perform well in still scenes or scenes with regular movement, they lack robustness in moving scenes due to their weak ability to model long-term dependency both spatially and temporally, leading to color fading, color discontinuity or other artifacts. To solve this problem, we propose an exemplar-based video colorization framework with long-term spatiotemporal dependency. To enhance the long-term spatial dependency, a parallelized CNN-Transformer block and a double head non-local operation are designed. The proposed CNN-Transformer block can better incorporate long-term spatial dependency with local texture and structural features, and the double head non-local operation further leverages the augmented features. For long-term temporal dependency enhancement, we further introduce a novel linkage subnet. The linkage subnet propagates motion information across adjacent frame blocks and helps to maintain temporal continuity. Experiments demonstrate that our model outperforms recent state-of-the-art methods both quantitatively and qualitatively. Also, our model can generate more colorful, realistic and stabilized results, especially for scenes where objects change greatly and irregularly.

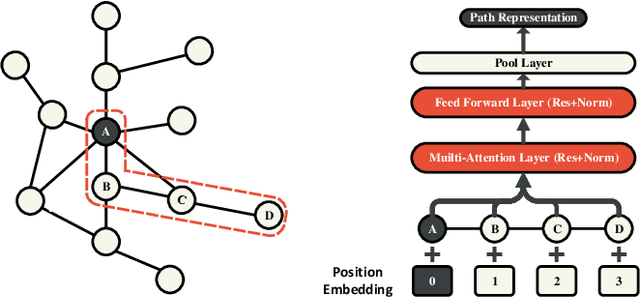

PathSAGE: Spatial Graph Attention Neural Networks With Random Path Sampling

Mar 11, 2022

Abstract: Graph Convolutional Networks (GCNs) have recently achieved great success in processing non-Euclidean structured data. In existing studies, deeper layers are used in CNNs to extract deeper features of Euclidean structured data. However, for non-Euclidean structured data, overly deep GCNs encounter problems such as "neighbor explosion" and "over-smoothing", and they cannot be applied to large datasets. To address these problems, we propose a model called PathSAGE, which can learn high-order topological information and improve the model's performance by expanding the receptive field. The model randomly samples paths starting from the central node and aggregates them with a Transformer encoder. PathSAGE uses only a single layer to aggregate nodes, which avoids the problems above. The evaluation results show that our model achieves performance comparable to state-of-the-art models in inductive learning tasks.
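The path sampling step described in this abstract can be sketched as a simple random walk from the central node. This is a minimal illustration only: the abstract does not specify the sampling distribution, path length, or number of paths, so the function `sample_paths`, its parameters, and the uniform neighbor choice are assumptions, and the Transformer aggregation is omitted.

```python
import random

def sample_paths(adj, center, num_paths, path_len, seed=None):
    """Sample `num_paths` random paths of up to `path_len` hops,
    each starting at `center` (hypothetical helper, uniform sampling).
    `adj` maps a node to the list of its neighbors."""
    rng = random.Random(seed)
    paths = []
    for _ in range(num_paths):
        node = center
        path = [node]
        for _ in range(path_len):
            neighbors = adj.get(node, [])
            if not neighbors:
                break  # dead end: stop this path early
            node = rng.choice(neighbors)
            path.append(node)
        paths.append(path)
    return paths

# Toy undirected graph given as an adjacency list.
adj = {0: [1, 2], 1: [0, 3], 2: [0], 3: [1]}
paths = sample_paths(adj, center=0, num_paths=4, path_len=3, seed=0)
for p in paths:
    print(p)
```

Each sampled path is a node sequence that a sequence encoder could then consume, which is how a single aggregation layer can still reach nodes several hops from the center.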

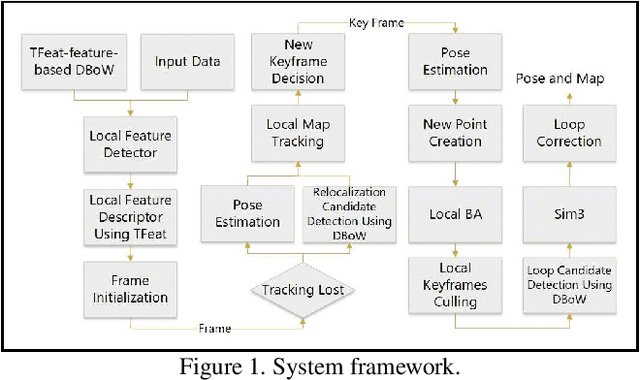

DF-SLAM: A Deep-Learning Enhanced Visual SLAM System based on Deep Local Features

Jan 24, 2019

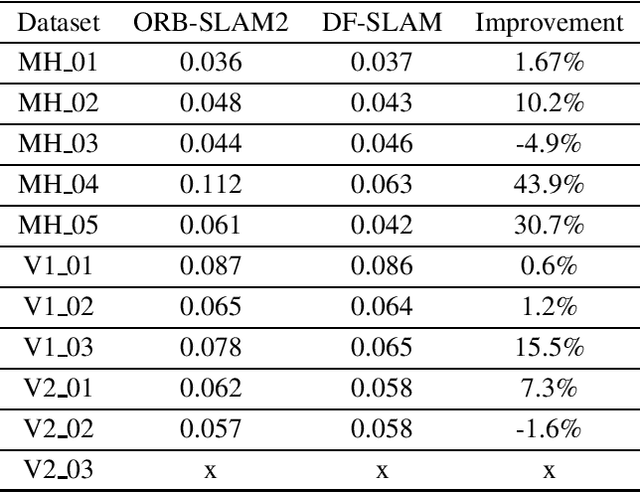

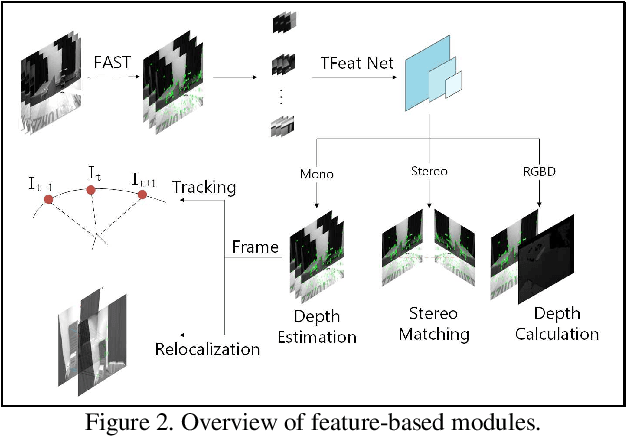

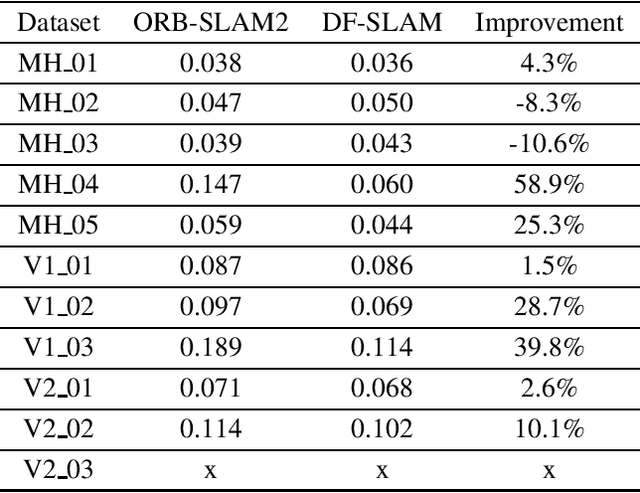

Abstract: As the foundation of driverless vehicles and intelligent robots, Simultaneous Localization and Mapping (SLAM) has attracted much attention in recent years. However, the non-geometric modules of traditional SLAM algorithms are limited by data association tasks and have become a bottleneck preventing the development of SLAM. To deal with such problems, many researchers have turned to deep learning. But most of these studies are limited to virtual datasets or specific environments, and even sacrifice efficiency for accuracy. Thus, they are not practical enough. We propose the DF-SLAM system, which uses deep local feature descriptors obtained by a neural network as a substitute for traditional hand-crafted features. Experimental results demonstrate its improvements in efficiency and stability. DF-SLAM outperforms popular traditional SLAM systems in various scenes, including challenging scenes with intense illumination changes. Its versatility and mobility fit well with the need to explore new environments. Since we adopt a shallow network to extract local descriptors and keep the other components the same as in the original SLAM systems, DF-SLAM can still run in real time on a GPU.

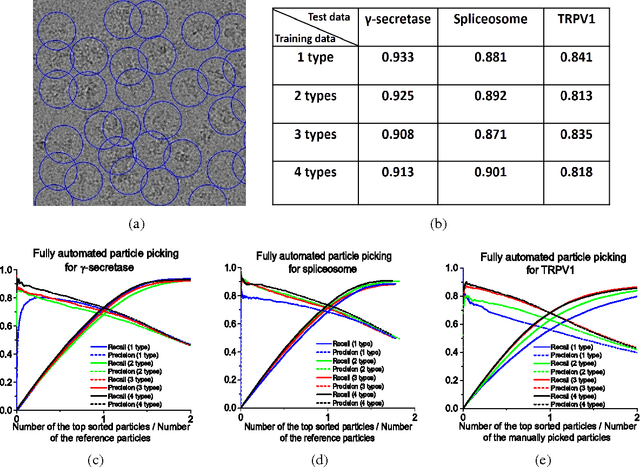

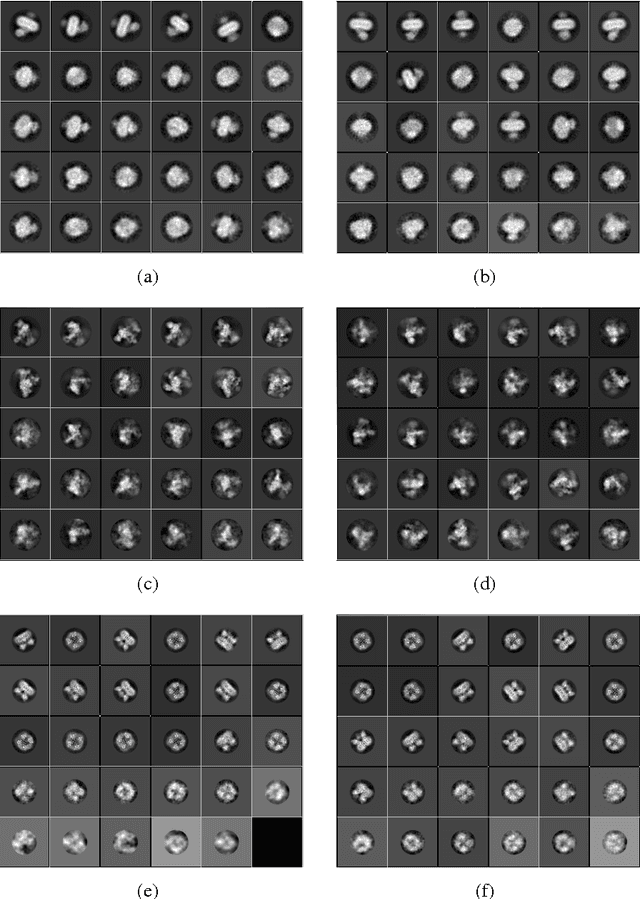

DeepPicker: a Deep Learning Approach for Fully Automated Particle Picking in Cryo-EM

May 06, 2016

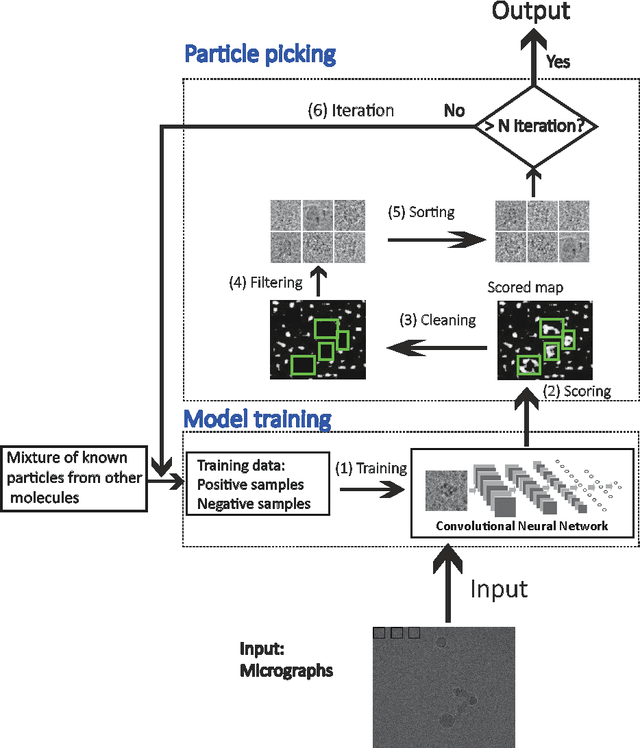

Abstract: Particle picking is a time-consuming step in single-particle analysis and often requires significant interventions from users, which has become a bottleneck for future automated electron cryo-microscopy (cryo-EM). Here we report a deep learning framework, called DeepPicker, to address this problem and fill the current gaps toward a fully automated cryo-EM pipeline. DeepPicker employs a novel cross-molecule training strategy to capture common features of particles from previously analyzed micrographs, and thus does not require any human intervention during particle picking. Tests on the recently published cryo-EM data of three complexes have demonstrated that our deep learning based scheme can successfully accomplish the human-level particle picking process and identify a sufficient number of particles that are comparable to those picked manually by human experts. These results indicate that DeepPicker can provide a practically useful tool to significantly reduce the time and manual effort spent in single-particle analysis and thus greatly facilitate high-resolution cryo-EM structure determination.

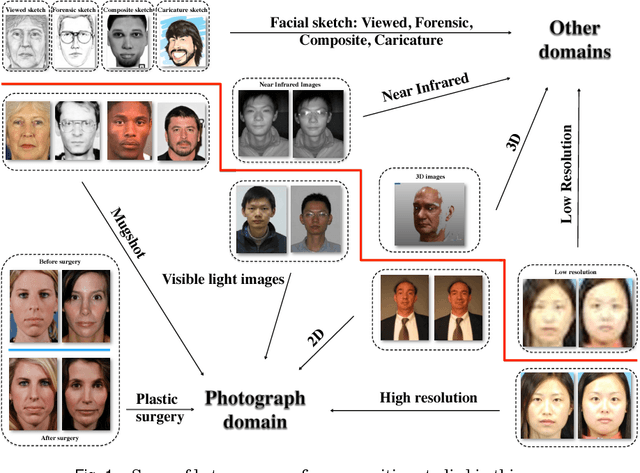

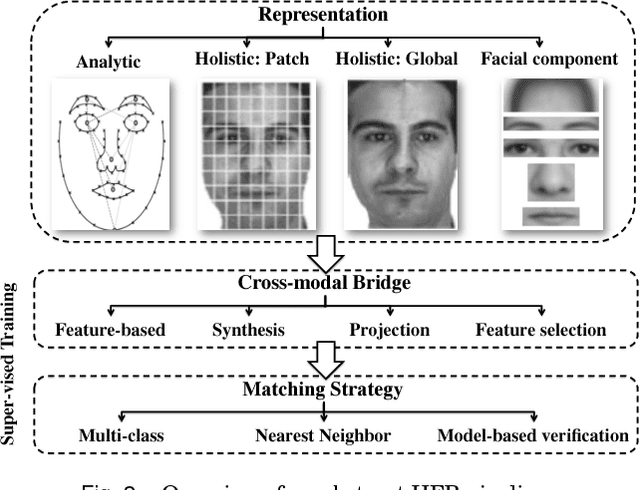

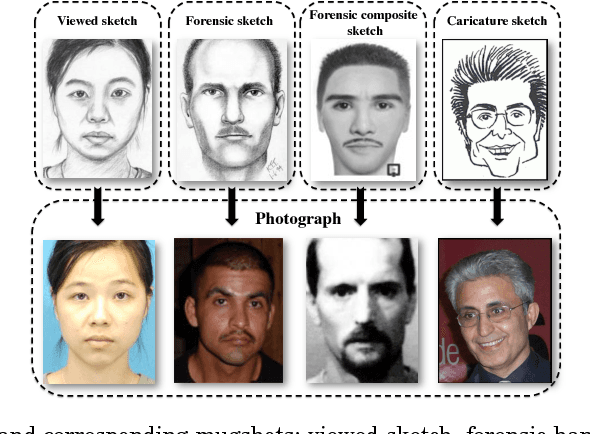

A Survey on Heterogeneous Face Recognition: Sketch, Infra-red, 3D and Low-resolution

Oct 10, 2014

Abstract: Heterogeneous face recognition (HFR) refers to matching face imagery across different domains. It has received much interest from the research community as a result of its profound implications in law enforcement. A wide variety of new invariant features, cross-modality matching models and heterogeneous datasets have been established in recent years. This survey provides a comprehensive review of established techniques and recent developments in HFR. Moreover, we offer a detailed account of datasets and benchmarks commonly used for evaluation. We finish by assessing the state of the field and discussing promising directions for future research.
