Xiangjun Wang

Parameters Inference for Nonlinear Wave Equations with Markovian Switching

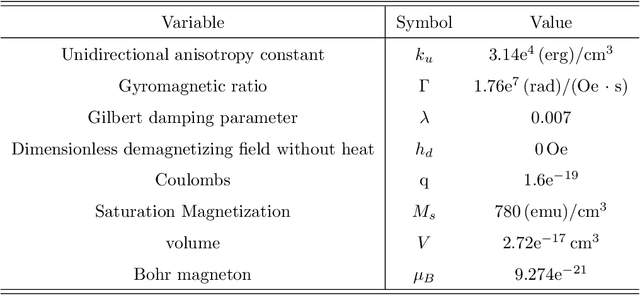

Aug 12, 2024

Abstract:Traditional partial differential equations with constant coefficients often struggle to capture abrupt changes in real-world phenomena, which has led to the development of variable coefficient PDEs and Markovian switching models. Recent research has introduced PDEs with Markov switching, established their well-posedness, and presented numerical methods. However, there has been limited discussion of parameter estimation for the jump coefficients in these models. This paper addresses this gap by focusing on parameter inference for the wave equation with Markovian switching. We propose a Bayesian statistical framework based on discrete sparse Bayesian learning and establish its convergence and a uniform error bound. Our method requires fewer assumptions and enables independent parameter inference for each segment by allowing different underlying structures for the parameter estimation problem within each segmented time interval. The effectiveness of our approach is demonstrated through three numerical cases, which involve noisy spatiotemporal data from different wave equations with Markovian switching. The results show strong performance in parameter estimation for variable coefficient PDEs.

Machine Learning for Complex Systems with Abnormal Pattern by Exception Maximization Outlier Detection Method

Jul 05, 2024

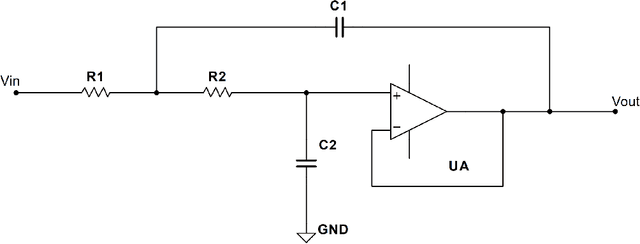

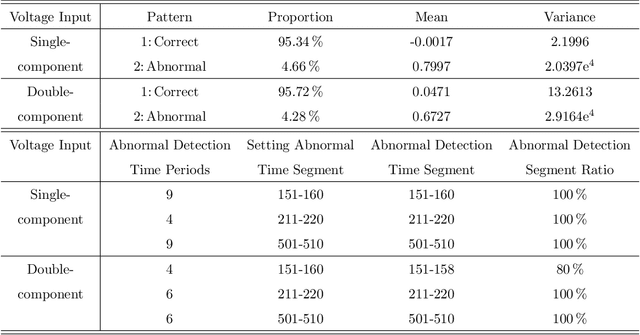

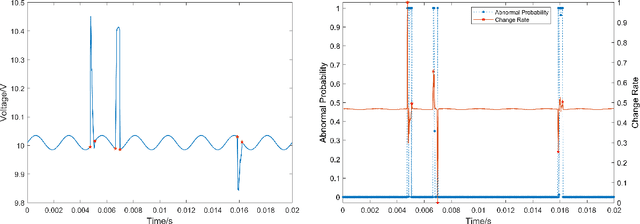

Abstract:This paper proposes a novel fast online methodology for outlier detection called the exception maximization outlier detection method (EMODM), which employs probabilistic models and statistical algorithms to detect abnormal patterns in the outputs of complex systems. The EMODM is based on a two-state Gaussian mixture model and demonstrates strong performance in probabilistic anomaly detection, working on real-time raw data rather than relying on special prior distribution information. We confirm this using synthetic data from two numerical cases. For real-world data, we used EMODM to detect the short-circuit pattern of a circuit system from the current and voltage output of a three-phase inverter. The EMODM also found an abnormal period due to COVID-19 in the insured unemployment data of 53 regions in the United States from 2000 to 2024. The application of EMODM to these two real-life datasets demonstrated the effectiveness and accuracy of our algorithm.
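As a rough illustration of the two-state Gaussian mixture idea mentioned in this abstract, the sketch below fits a one-dimensional, two-component mixture with ordinary expectation-maximization (EM) and reads the posterior of the second component as an "abnormal" score. It is not the EMODM algorithm itself, whose details are not given here; the function names, the initialization, and the variance floor are all assumptions made for the example.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_two_state_gmm(data, iters=50):
    """Plain EM for a 1-D mixture of two Gaussians (illustrative, not EMODM)."""
    n = len(data)
    mean_all = sum(data) / n
    var_all = sum((x - mean_all) ** 2 for x in data) / n
    # Assumed initialization: one state at each extreme of the data.
    means = [min(data), max(data)]
    variances = [var_all, var_all]
    weights = [0.5, 0.5]
    resp = []
    for _ in range(iters):
        # E-step: posterior probability of each state for each observation.
        resp = []
        for x in data:
            p = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
            total = p[0] + p[1]
            resp.append([p[0] / total, p[1] / total])
        # M-step: re-estimate weights, means, and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var_k, 1e-6)  # floor avoids a degenerate state
    return weights, means, variances, resp

# Toy data: five readings near 0 and two readings near 10.
readings = [0.0, 0.1, -0.1, 0.05, -0.05, 10.0, 10.1]
weights, means, variances, resp = fit_two_state_gmm(readings)
abnormal = [x for x, r in zip(readings, resp) if r[1] > 0.5]
```

Here `resp[i][1]` is the estimated probability that reading `i` belongs to the second state, so thresholding it at 0.5 gives a simple outlier flag.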

Entity Divider with Language Grounding in Multi-Agent Reinforcement Learning

Oct 25, 2022

Abstract:We investigate the use of natural language to drive the generalization of policies in multi-agent settings. Unlike in single-agent settings, the generalization of policies must also account for the influence of other agents. In addition, as the number of entities in multi-agent settings grows, more agent-entity interactions are needed for language grounding, and the enormous search space can impede the learning process. Moreover, given a simple general instruction, e.g., beating all enemies, agents are required to decompose it into multiple subgoals and figure out the right one to focus on. Inspired by previous work, we try to address these issues at the entity level and propose a novel framework for language grounding in multi-agent reinforcement learning, entity divider (EnDi). EnDi enables agents to independently learn subgoal division at the entity level and act in the environment based on the associated entities. The subgoal division is regularized by opponent modeling to avoid subgoal conflicts and promote coordinated strategies. Empirically, EnDi demonstrates strong generalization to unseen games with new dynamics and outperforms existing methods.

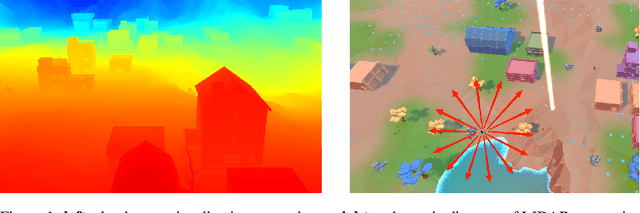

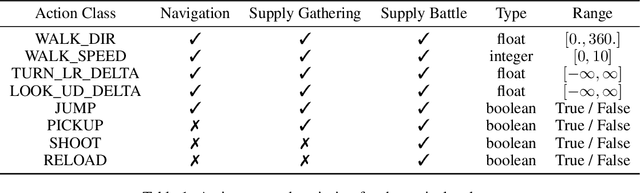

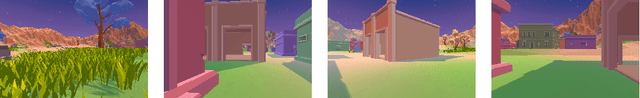

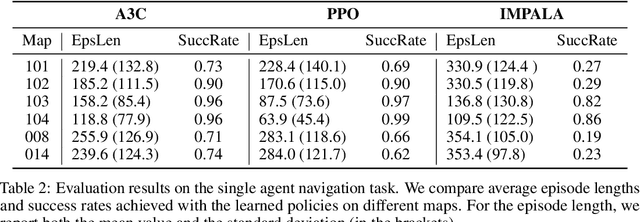

WILD-SCAV: Benchmarking FPS Gaming AI on Unity3D-based Environments

Oct 14, 2022

Abstract:Recent advances in deep reinforcement learning (RL) have demonstrated complex decision-making capabilities in simulation environments such as Arcade Learning Environment, MuJoCo, and ViZDoom. However, they are hardly extensible to more complicated problems, mainly due to the lack of complexity and variation in the environments they are trained and tested on. Furthermore, they are not extensible to an open-world environment to facilitate long-term exploration research. To learn realistic task-solving capabilities, we need to develop an environment with greater diversity and complexity. We developed WILD-SCAV, a powerful and extensible environment based on a 3D open-world FPS (First-Person Shooter) game, to bridge the gap. It provides realistic 3D environments of variable complexity, various tasks, and multiple modes of interaction, where agents can learn to perceive 3D environments, navigate and plan, and compete and cooperate in a human-like manner. WILD-SCAV also supports different complexities, such as configurable maps with different terrains, building structures and distributions, and multi-agent settings with cooperative and competitive tasks. The experimental results on configurable complexity, multi-tasking, and multi-agent scenarios demonstrate the effectiveness of WILD-SCAV in benchmarking various RL algorithms, as well as its potential to give rise to intelligent agents with generalized task-solving abilities. Our open-sourced code is available at https://github.com/inspirai/wilderness-scavenger.

MA2QL: A Minimalist Approach to Fully Decentralized Multi-Agent Reinforcement Learning

Sep 17, 2022

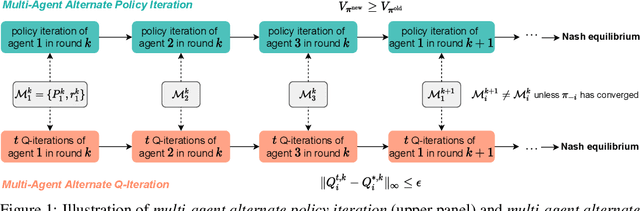

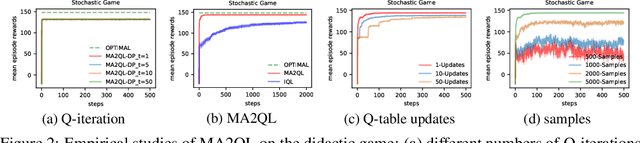

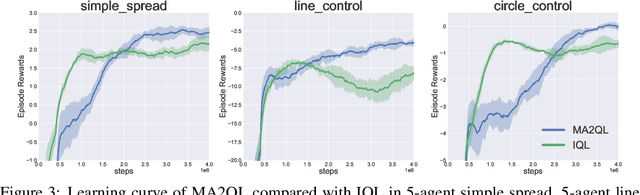

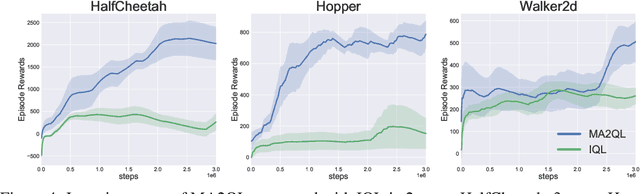

Abstract:Decentralized learning has shown great promise for cooperative multi-agent reinforcement learning (MARL). However, non-stationarity remains a significant challenge in decentralized learning. In this paper, we tackle the non-stationarity problem in the simplest and most fundamental way and propose *multi-agent alternate Q-learning* (MA2QL), where agents take turns updating their Q-functions by Q-learning. MA2QL is a *minimalist* approach to fully decentralized cooperative MARL but is theoretically grounded. We prove that when each agent guarantees an $\varepsilon$-convergence at each turn, their joint policy converges to a Nash equilibrium. In practice, MA2QL requires only minimal changes to independent Q-learning (IQL). We empirically evaluate MA2QL on a variety of cooperative multi-agent tasks. Results show that MA2QL consistently outperforms IQL, which verifies the effectiveness of MA2QL despite such minimal changes.
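The turn-taking idea described in this abstract can be illustrated on a tiny cooperative matrix game. The sketch below is not the authors' implementation: it assumes a stateless two-agent game with a shared payoff, so the Q-learning update has no next-state term, and the function name, payoff matrix, and hyperparameters are all hypothetical choices for the example.

```python
import random

def alternate_q_learning(payoff, turns=6, steps_per_turn=200,
                         alpha=0.1, epsilon=0.2, seed=0):
    """Two agents take turns learning; the non-learning agent stays frozen."""
    rng = random.Random(seed)
    n_actions = [len(payoff), len(payoff[0])]
    q = [[0.0] * n_actions[0], [0.0] * n_actions[1]]

    def greedy(agent):
        return max(range(n_actions[agent]), key=lambda a: q[agent][a])

    for turn in range(turns):
        learner = turn % 2          # agents alternate turn by turn
        other = 1 - learner
        fixed_action = greedy(other)  # the other agent's policy is held fixed
        for _ in range(steps_per_turn):
            # Epsilon-greedy exploration for the learning agent only.
            if rng.random() < epsilon:
                action = rng.randrange(n_actions[learner])
            else:
                action = greedy(learner)
            if learner == 0:
                reward = payoff[action][fixed_action]
            else:
                reward = payoff[fixed_action][action]
            # Stateless Q-learning update toward the shared reward.
            q[learner][action] += alpha * (reward - q[learner][action])
    return greedy(0), greedy(1), q

# Shared-payoff game with two pure Nash equilibria: (0, 0) and (1, 1).
payoff = [[1.0, 0.0],
          [0.0, 2.0]]
a0, a1, q_values = alternate_q_learning(payoff)
```

Because only one agent updates at a time, each learner faces a stationary problem during its turn, which is the property the abstract relies on. In this toy game the joint action the agents settle on is a Nash equilibrium, though not necessarily the one with the highest payoff.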

SCC: an efficient deep reinforcement learning agent mastering the game of StarCraft II

Dec 24, 2020

Abstract:AlphaStar, the AI that reaches GrandMaster level in StarCraft II, is a remarkable milestone demonstrating what deep reinforcement learning can achieve in complex Real-Time Strategy (RTS) games. However, the complexities of the game, algorithms and systems, and especially the tremendous amount of computation needed are big obstacles for the community to conduct further research in this direction. We propose a deep reinforcement learning agent, StarCraft Commander (SCC). With an order of magnitude less computation, it demonstrates top human performance, defeating GrandMaster players in test matches and top professional players in a live event. Moreover, it shows strong robustness to various human strategies and discovers novel strategies unseen in human play. In this paper, we share the key insights and optimizations on efficient imitation learning and reinforcement learning for the StarCraft II full game.

State estimation under non-Gaussian Levy noise: A modified Kalman filtering method

Mar 10, 2013

Abstract:The Kalman filter is extensively used for state estimation for linear systems under Gaussian noise. When non-Gaussian Lévy noise is present, the conventional Kalman filter may fail to be effective due to the fact that the non-Gaussian Lévy noise may have infinite variance. A modified Kalman filter for linear systems with non-Gaussian Lévy noise is devised. It works effectively with reasonable computational cost. Simulation results are presented to illustrate this non-Gaussian filtering method.
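For reference, one predict-and-update step of the conventional Kalman filter that this abstract takes as its starting point can be sketched for a scalar linear system. This is the standard Gaussian-noise filter, not the modified method the paper devises; the function name `kalman_step` and the parameter names `a`, `c`, `q`, and `r` are illustrative assumptions.

```python
def kalman_step(x_est, p_est, y, a=1.0, c=1.0, q=1e-3, r=0.1):
    """One step of the standard scalar Kalman filter.

    Assumed model: x_k = a * x_{k-1} + w_k,  y_k = c * x_k + v_k,
    with Var(w_k) = q and Var(v_k) = r (both finite, i.e. Gaussian-style noise).
    """
    # Predict: propagate the estimate and its variance through the dynamics.
    x_pred = a * x_est
    p_pred = a * p_est * a + q
    # Update: blend the prediction with the new measurement y.
    gain = p_pred * c / (c * p_pred * c + r)
    x_new = x_pred + gain * (y - c * x_pred)
    p_new = (1.0 - gain * c) * p_pred
    return x_new, p_new

# Filter a short sequence of noisy measurements of a roughly constant state.
x, p = 0.0, 1.0
for measurement in [1.1, 0.9, 1.05, 0.95]:
    x, p = kalman_step(x, p, measurement)
```

The gain depends on the measurement noise variance `r` being finite, which is where heavy-tailed Lévy noise with infinite variance causes trouble for this standard form.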
