Weimin Li

A Transformer-based representation-learning model with unified processing of multimodal input for clinical diagnostics

Jun 01, 2023

Abstract: During the diagnostic process, clinicians leverage multimodal information, such as chief complaints, medical images, and laboratory-test results. Deep-learning models for aiding diagnosis have yet to meet this requirement. Here we report a Transformer-based representation-learning model as a clinical diagnostic aid that processes multimodal input in a unified manner. Rather than learning modality-specific features, the model uses embedding layers to convert images and unstructured and structured text into visual tokens and text tokens, and bidirectional blocks with intramodal and intermodal attention to learn a holistic representation of radiographs, the unstructured chief complaint and clinical history, and structured clinical information such as laboratory-test results and patient demographic information. The unified model outperformed an image-only model and non-unified multimodal diagnosis models in the identification of pulmonary diseases (by 12% and 9%, respectively) and in the prediction of adverse clinical outcomes in patients with COVID-19 (by 29% and 7%, respectively). Leveraging unified multimodal Transformer-based models may help streamline triage of patients and facilitate the clinical decision process.

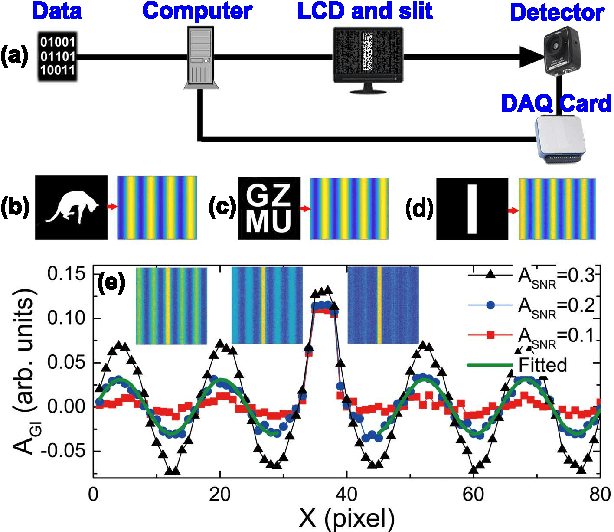

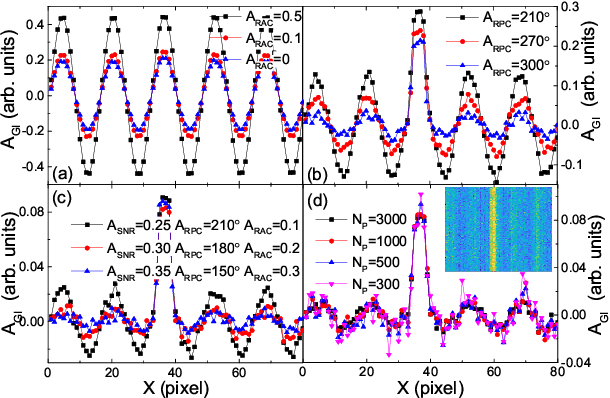

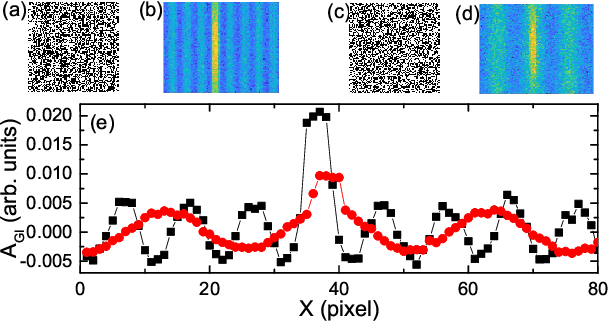

Robust data analysis and imaging with computational ghost imaging

Nov 06, 2021

Abstract: The world has entered the digital age, in which data analysis and visualization have become increasingly important. By analogy with imaging a real object, we demonstrate that computational ghost imaging can image digital data to reveal their characteristics, such as periodicity. Furthermore, our experimental results show that using optical imaging methods to analyse data offers unique advantages, especially in resistance to interference. Data analysis with computational ghost imaging performs well against strong noise and against random amplitude and phase changes in the binarized signals. Such robust data analysis and imaging has important application prospects in big data analysis, meteorology, astronomy, economics and many other fields.
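
As a rough illustration of the idea in this abstract, the following is a minimal numerical sketch of the standard correlation reconstruction used in computational ghost imaging, applied to a one-dimensional binarized periodic signal. It is not the authors' experimental setup: the random binary patterns, the Gaussian noise added to the bucket signal, and the names `ghost_reconstruct` and `simulate` are all assumptions made for this example.

```python
import numpy as np


def ghost_reconstruct(patterns, bucket):
    """Correlation reconstruction G(x) = <B * I(x)> - <B> * <I(x)>.

    patterns: array of shape (n_patterns, n_pixels), the illumination patterns I.
    bucket:   array of shape (n_patterns,), the single-pixel (bucket) readings B.
    """
    patterns = np.asarray(patterns, dtype=float)
    bucket = np.asarray(bucket, dtype=float)
    mean_product = (bucket[:, None] * patterns).mean(axis=0)
    return mean_product - bucket.mean() * patterns.mean(axis=0)


def simulate(signal, n_patterns=4000, noise_std=2.0, seed=0):
    """Simulate noisy bucket measurements of `signal` and reconstruct it.

    The noise model (additive Gaussian on the bucket signal) is an assumption
    chosen for illustration only.
    """
    rng = np.random.default_rng(seed)
    # Random binary illumination patterns, one row per measurement.
    patterns = rng.integers(0, 2, size=(n_patterns, signal.size)).astype(float)
    # Each bucket value is the total light passed by the "object" plus noise.
    bucket = patterns @ signal + rng.normal(0.0, noise_std, size=n_patterns)
    return ghost_reconstruct(patterns, bucket)


# Binarized data with period 8 samples (4 ones followed by 4 zeros).
signal = (np.arange(64) % 8 < 4).astype(float)
recovered = simulate(signal)

# Similarity between the data and its ghost image.
corr = np.corrcoef(signal, recovered)[0, 1]

# The dominant non-zero frequency bin of the ghost image reveals the periodicity.
spectrum = np.abs(np.fft.rfft(recovered - recovered.mean()))
dominant_bin = int(np.argmax(spectrum[1:]) + 1)
```

In this sketch the period of the data can be read off as `signal.size / dominant_bin`; increasing `n_patterns` reduces the reconstruction noise at the cost of more measurements.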

Contention-based Grant-free Transmission with Extremely Sparse Orthogonal Pilot Scheme

Jun 08, 2021

Abstract: Due to the limited number of traditional orthogonal pilots, pilot collisions severely degrade the performance of contention-based grant-free transmission. To alleviate pilot collisions and exploit the spatial degrees of freedom as much as possible, an extremely sparse orthogonal pilot scheme is proposed for uplink grant-free transmission. The proposed sparse pilot is used to perform active user detection and estimate the spatial channel. Then, inter-user interference suppression is performed by spatially combining the received data symbols using the estimated spatial channel. After that, the estimation and compensation of the wireless channel and time/frequency offset are performed utilizing the geometric characteristics of the combined data symbols. Because the pilot's task is greatly lightened, the extremely sparse orthogonal pilot can occupy minimal resources, and the number of orthogonal pilots can be increased significantly, which greatly reduces the probability of pilot collision. The numerical results show that the proposed extremely sparse orthogonal pilot scheme significantly improves performance in highly overloaded grant-free scenarios.

SSMD: Semi-Supervised Medical Image Detection with Adaptive Consistency and Heterogeneous Perturbation

Jun 03, 2021

Abstract: Semi-supervised classification and segmentation methods have been widely investigated in medical image analysis. Both approaches can improve the performance of fully-supervised methods with additional unlabeled data. However, as a fundamental task, semi-supervised object detection has not gained enough attention in the field of medical image analysis. In this paper, we propose a novel Semi-Supervised Medical image Detector (SSMD). The motivation behind SSMD is to provide free yet effective supervision for unlabeled data, by regularizing the predictions at each position to be consistent. To achieve this, we develop a novel adaptive consistency cost function to regularize different components in the predictions. Moreover, we introduce heterogeneous perturbation strategies that work in both feature space and image space, so that the proposed detector promises to produce powerful image representations and robust predictions. Extensive experimental results show that the proposed SSMD achieves state-of-the-art performance across a wide range of settings. We also demonstrate the strength of each proposed module with comprehensive ablation studies.

PDRS: A Fast Non-iterative Scheme for Massive Grant-free Access in Massive MIMO

Dec 25, 2020

Abstract: Grant-free multiple-input multiple-output (MIMO) usually employs non-orthogonal pilots for joint user detection and channel estimation. However, existing methods are too complex for massive grant-free access in massive MIMO. This letter proposes a pilot detection reference signal (PDRS) to greatly reduce the complexity. In the PDRS scheme, no iteration is required. Direct weight estimation is also proposed to calculate combining weights without channel estimation. After combining, PDRS recovery errors are used to decide the pilot activity. The simulation results show that the proposed grant-free scheme performs well with a complexity reduced by orders of magnitude.

SCC: an efficient deep reinforcement learning agent mastering the game of StarCraft II

Dec 24, 2020

Abstract: AlphaStar, the AI that reaches GrandMaster level in StarCraft II, is a remarkable milestone demonstrating what deep reinforcement learning can achieve in complex Real-Time Strategy (RTS) games. However, the complexities of the game, algorithms and systems, and especially the tremendous amount of computation needed, are big obstacles for the community to conduct further research in this direction. We propose a deep reinforcement learning agent, StarCraft Commander (SCC). With an order of magnitude less computation, it demonstrates top human performance, defeating GrandMaster players in test matches and top professional players in a live event. Moreover, it shows strong robustness to various human strategies and discovers novel strategies unseen in human play. In this paper, we share the key insights and optimizations on efficient imitation learning and reinforcement learning for the StarCraft II full game.
