Tiantian Zhang

AirHunt: Bridging VLM Semantics and Continuous Planning for Efficient Aerial Object Navigation

Jan 19, 2026

Abstract:Recent advances in large Vision-Language Models (VLMs) have provided rich semantic understanding that empowers drones to search for open-set objects via natural language instructions. However, prior systems struggle to integrate VLMs into practical aerial systems due to orders-of-magnitude frequency mismatch between VLM inference and real-time planning, as well as VLMs' limited 3D scene understanding. They also lack a unified mechanism to balance semantic guidance with motion efficiency in large-scale environments. To address these challenges, we present AirHunt, an aerial object navigation system that efficiently locates open-set objects with zero-shot generalization in outdoor environments by seamlessly fusing VLM semantic reasoning with continuous path planning. AirHunt features a dual-pathway asynchronous architecture that establishes a synergistic interface between VLM reasoning and path planning, enabling continuous flight with adaptive semantic guidance that evolves through motion. Moreover, we propose an active dual-task reasoning module that exploits geometric and semantic redundancy to enable selective VLM querying, and a semantic-geometric coherent planning module that dynamically reconciles semantic priorities and motion efficiency in a unified framework, enabling seamless adaptation to environmental heterogeneity. We evaluate AirHunt across diverse object navigation tasks and environments, demonstrating a higher success rate with lower navigation error and reduced flight time compared to state-of-the-art methods. Real-world experiments further validate AirHunt's practical capability in complex and challenging environments. Code and dataset will be made publicly available before publication.

UACER: An Uncertainty-Aware Critic Ensemble Framework for Robust Adversarial Reinforcement Learning

Dec 11, 2025

Abstract:Robust adversarial reinforcement learning has emerged as an effective paradigm for training agents to handle uncertain disturbances in real environments, with critical applications in sequential decision-making domains such as autonomous driving and robotic control. Within this paradigm, agent training is typically formulated as a zero-sum Markov game between a protagonist and an adversary to enhance policy robustness. However, the trainable nature of the adversary inevitably induces non-stationarity in the learning dynamics, leading to exacerbated training instability and convergence difficulties, particularly in high-dimensional complex environments. In this paper, we propose a novel approach, Uncertainty-Aware Critic Ensemble for robust adversarial Reinforcement learning (UACER), which consists of two strategies: 1) Diversified critic ensemble: a diverse set of K critic networks is used in parallel, in place of the conventional single-critic architecture, to stabilize Q-value estimation, which both reduces variance and enhances robustness. 2) Time-varying Decay Uncertainty (TDU) mechanism: advancing beyond simple linear combinations, we develop a variance-derived Q-value aggregation strategy that explicitly incorporates epistemic uncertainty to dynamically regulate the exploration-exploitation trade-off while simultaneously stabilizing the training process. Comprehensive experiments across several MuJoCo control problems validate the superior effectiveness of UACER, outperforming state-of-the-art methods in terms of overall performance, stability, and efficiency.
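
The abstract does not give the TDU formula, so the following is only a minimal sketch of what a variance-derived aggregation over K critics could look like. The function names, the linear decay schedule, the `lam0` coefficient, and the choice to add (rather than subtract) the ensemble standard deviation are all assumptions made for illustration, not the authors' method.

```python
import statistics


def decay_coefficient(step, total_steps, lam0=1.0):
    """Hypothetical schedule: decay linearly from lam0 to 0 over training."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return lam0 * (1.0 - frac)


def aggregate_q(q_values, step, total_steps, lam0=1.0):
    """Combine K critic estimates into a single Q-value.

    Assumed form: ensemble mean plus a time-decaying multiple of the
    ensemble standard deviation, used as a proxy for epistemic uncertainty.
    """
    mean_q = statistics.fmean(q_values)
    std_q = statistics.pstdev(q_values)  # disagreement across critics
    lam = decay_coefficient(step, total_steps, lam0)
    return mean_q + lam * std_q


# Usage: two critics that disagree early in training, then late in training.
early = aggregate_q([1.0, 3.0], step=0, total_steps=100)
late = aggregate_q([1.0, 3.0], step=100, total_steps=100)
```

Under this assumed form, critic disagreement shifts the aggregate early on and has no effect once the coefficient has decayed to zero, at which point the aggregate reduces to the plain ensemble mean.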

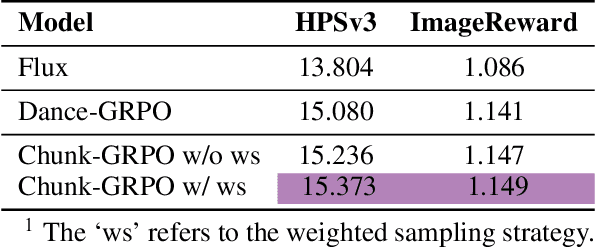

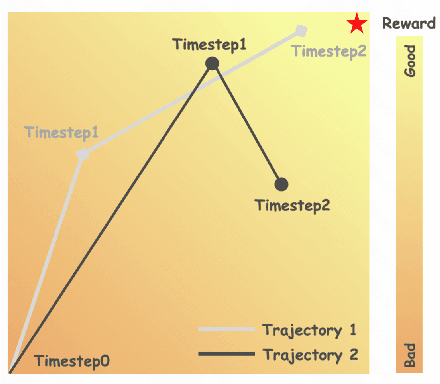

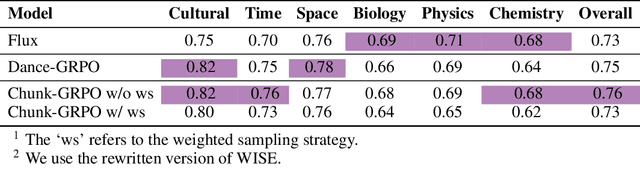

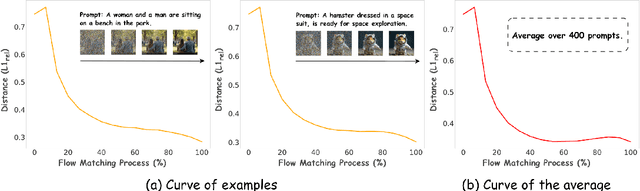

Sample By Step, Optimize By Chunk: Chunk-Level GRPO For Text-to-Image Generation

Oct 24, 2025

Abstract:Group Relative Policy Optimization (GRPO) has shown strong potential for flow-matching-based text-to-image (T2I) generation, but it faces two key limitations: inaccurate advantage attribution, and the neglect of temporal dynamics of generation. In this work, we argue that shifting the optimization paradigm from the step level to the chunk level can effectively alleviate these issues. Building on this idea, we propose Chunk-GRPO, the first chunk-level GRPO-based approach for T2I generation. The insight is to group consecutive steps into coherent 'chunks' that capture the intrinsic temporal dynamics of flow matching, and to optimize policies at the chunk level. In addition, we introduce an optional weighted sampling strategy to further enhance performance. Extensive experiments show that Chunk-GRPO achieves superior results in both preference alignment and image quality, highlighting the promise of chunk-level optimization for GRPO-based methods.
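
To make the step-versus-chunk distinction concrete, here is a small sketch under stated assumptions. The group-relative advantage (reward standardized within a group of samples for the same prompt) is the usual GRPO construction. The chunk boundaries, the helper names, and the choice to sum per-step log-probability differences within a chunk are illustrative assumptions, since the abstract does not specify how Chunk-GRPO forms its chunk-level objective.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards within one group of samples for the same prompt."""
    mean_r = statistics.fmean(rewards)
    std_r = statistics.pstdev(rewards)  # population std; a sketch-level choice
    return [(r - mean_r) / (std_r + eps) for r in rewards]


def split_into_chunks(num_steps, chunk_sizes):
    """Partition step indices 0..num_steps-1 into consecutive chunks."""
    if sum(chunk_sizes) != num_steps:
        raise ValueError("chunk sizes must sum to the number of steps")
    chunks, start = [], 0
    for size in chunk_sizes:
        chunks.append(list(range(start, start + size)))
        start += size
    return chunks


def chunk_log_ratios(new_logps, old_logps, chunks):
    """Assumed chunk-level quantity: sum of per-step log-ratios in each chunk."""
    return [sum(new_logps[t] - old_logps[t] for t in chunk) for chunk in chunks]


# Usage: a group of 4 sampled images, each generated in 5 denoising steps
# that are grouped into chunks of 2 and 3 steps (hypothetical sizes).
advantages = group_relative_advantages([0.2, 0.8, 0.5, 0.5])
chunks = split_into_chunks(5, [2, 3])
ratios = chunk_log_ratios([0.1, 0.2, 0.3, 0.4, 0.5], [0.0] * 5, chunks)
```

In this sketch each sample's advantage would be applied once per chunk instead of once per step, which is the change in granularity the abstract describes.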

Distribution Preference Optimization: A Fine-grained Perspective for LLM Unlearning

Oct 06, 2025

Abstract:As Large Language Models (LLMs) demonstrate remarkable capabilities learned from vast corpora, concerns regarding data privacy and safety are receiving increasing attention. LLM unlearning, which aims to remove the influence of specific data while preserving overall model utility, is becoming an important research area. One of the mainstream unlearning classes is optimization-based methods, which achieve forgetting directly through fine-tuning, exemplified by Negative Preference Optimization (NPO). However, NPO's effectiveness is limited by its inherent lack of explicit positive preference signals. Attempts to introduce such signals by constructing preferred responses often necessitate domain-specific knowledge or well-designed prompts, fundamentally restricting their generalizability. In this paper, we shift the focus to the distribution level, directly targeting the next-token probability distribution instead of entire responses, and derive a novel unlearning algorithm termed Distribution Preference Optimization (DiPO). We show that the requisite preference distribution pairs for DiPO, which are distributions over the model's output tokens, can be constructed by selectively amplifying or suppressing the model's high-confidence output logits, thereby effectively overcoming NPO's limitations. We theoretically prove the consistency of DiPO's loss function with the desired unlearning direction. Extensive experiments demonstrate that DiPO achieves a strong trade-off between model utility and forget quality. Notably, DiPO attains the highest forget quality on the TOFU benchmark, and maintains leading scalability and sustainability in utility preservation on the MUSE benchmark.

Reinforcement Fine-Tuning Powers Reasoning Capability of Multimodal Large Language Models

May 24, 2025

Abstract:Standing in 2025, at a critical juncture in the pursuit of Artificial General Intelligence (AGI), reinforcement fine-tuning (RFT) has demonstrated significant potential in enhancing the reasoning capability of large language models (LLMs) and has led to the development of cutting-edge AI models such as OpenAI-o1 and DeepSeek-R1. Moreover, the efficient application of RFT to enhance the reasoning capability of multimodal large language models (MLLMs) has attracted widespread attention from the community. In this position paper, we argue that reinforcement fine-tuning powers the reasoning capability of multimodal large language models. To begin with, we provide a detailed introduction to the fundamental background knowledge that researchers interested in this field should be familiar with. Furthermore, we meticulously summarize the improvements of RFT in powering the reasoning capability of MLLMs into five key points: diverse modalities, diverse tasks and domains, better training algorithms, abundant benchmarks, and thriving engineering frameworks. Finally, we propose five promising directions for future research that the community might consider. We hope that this position paper will provide valuable insights to the community at this pivotal stage in the advancement toward AGI. A summary of work on RFT for MLLMs is available at https://github.com/Sun-Haoyuan23/Awesome-RL-based-Reasoning-MLLMs.

Evaluation Study on SAM 2 for Class-agnostic Instance-level Segmentation

Sep 04, 2024

Abstract:Segment Anything Model (SAM) has demonstrated powerful zero-shot segmentation performance in natural scenes. The recently released Segment Anything Model 2 (SAM2) has further heightened researchers' expectations towards image segmentation capabilities. To evaluate the performance of SAM2 on class-agnostic instance-level segmentation tasks, we adopt different prompt strategies for SAM2 to cope with instance-level tasks for three relevant scenarios: Salient Instance Segmentation (SIS), Camouflaged Instance Segmentation (CIS), and Shadow Instance Detection (SID). In addition, to further explore the effectiveness of SAM2 in segmenting granular object structures, we also conduct detailed tests on the high-resolution Dichotomous Image Segmentation (DIS) benchmark to assess the fine-grained segmentation capability. Qualitative and quantitative experimental results indicate that the performance of SAM2 varies significantly across different scenarios. Besides, SAM2 is not particularly sensitive to segmenting high-resolution fine details. We hope this technical report can drive the emergence of SAM2-based adapters, aiming to enhance the performance ceiling of large vision models on class-agnostic instance segmentation tasks.

Incorporating Clinical Guidelines through Adapting Multi-modal Large Language Model for Prostate Cancer PI-RADS Scoring

May 14, 2024

Abstract:The Prostate Imaging Reporting and Data System (PI-RADS) is pivotal in the diagnosis of clinically significant prostate cancer through MRI. Current deep learning-based PI-RADS scoring methods often lack the incorporation of essential PI-RADS clinical guidelines (PICG) utilized by radiologists, potentially compromising scoring accuracy. This paper introduces a novel approach that adapts a multi-modal large language model (MLLM) to incorporate PICG into PI-RADS scoring without additional annotations or network parameters. We present a two-stage fine-tuning process aimed at adapting MLLMs originally trained on natural images to the MRI data domain while effectively integrating the PICG. In the first stage, we develop a domain adapter layer specifically tailored for processing 3D MRI image inputs and design the MLLM instructions to differentiate MRI modalities effectively. In the second stage, we translate PICG into guiding instructions for the model to generate PICG-guided image features. Through feature distillation, we align scoring network features with the PICG-guided image features, enabling the scoring network to effectively incorporate the PICG information. We develop our model on a public dataset and evaluate it on a challenging real-world in-house dataset. Experimental results demonstrate that our approach improves the performance of current scoring networks.

Adaptive Intra-Class Variation Contrastive Learning for Unsupervised Person Re-Identification

Apr 06, 2024

Abstract:The memory dictionary-based contrastive learning method has achieved remarkable results in the field of unsupervised person Re-ID. However, the method of updating memory based on all samples does not fully utilize the hardest sample to improve the generalization ability of the model, and the method based on hardest sample mining will inevitably introduce false-positive samples that are incorrectly clustered in the early stages of the model. Clustering-based methods usually discard a significant number of outliers, leading to the loss of valuable information. To address these issues, we propose an adaptive intra-class variation contrastive learning algorithm for unsupervised Re-ID, called AdaInCV. The algorithm quantitatively evaluates the learning ability of the model for each class by considering the intra-class variations after clustering, which helps in selecting appropriate samples during the training process of the model. To be more specific, two new strategies are proposed: Adaptive Sample Mining (AdaSaM) and Adaptive Outlier Filter (AdaOF). The first one gradually creates more reliable clusters to dynamically refine the memory, while the second can identify and filter out valuable outliers as negative samples.

Replay-enhanced Continual Reinforcement Learning

Nov 20, 2023

Abstract:Replaying past experiences has proven to be a highly effective approach for averting catastrophic forgetting in supervised continual learning. However, some crucial factors are still largely ignored, making replay vulnerable to serious failure when used as a solution to forgetting in continual reinforcement learning, even in the context of perfect memory where all data of previous tasks are accessible in the current task. On the one hand, since most reinforcement learning algorithms are not invariant to the reward scale, the previously well-learned tasks (with high rewards) may appear to be more salient to the current learning process than the current task (with small initial rewards). This causes the agent to concentrate on those salient tasks at the expense of generality on the current task. On the other hand, offline learning on replayed tasks while learning a new task may induce a distributional shift between the dataset and the learned policy on old tasks, resulting in forgetting. In this paper, we introduce RECALL, a replay-enhanced method that greatly improves the plasticity of existing replay-based methods on new tasks while effectively avoiding the recurrence of catastrophic forgetting in continual reinforcement learning. RECALL leverages adaptive normalization on approximate targets and policy distillation on old tasks to enhance generality and stability, respectively. Extensive experiments on the Continual World benchmark show that RECALL performs significantly better than purely perfect memory replay, and achieves comparable or better overall performance against state-of-the-art continual learning methods.

Implementation and Evaluation of Physical Layer Key Generation on SDR based LoRa Platform

Aug 30, 2023

Abstract:Physical layer key generation technology, which leverages channel randomness to generate secret keys, has recently attracted extensive attention in long range (LoRa)-based networks. In this paper, we develop a software-defined radio (SDR) based LoRa communications platform using GNU Radio on universal software radio peripheral (USRP) to implement and evaluate typical physical layer key generation schemes. Thanks to the flexibility and configurability of GNU Radio to extract LoRa packets, we are able to obtain the fine-grained channel frequency response (CFR) through LoRa preamble based channel estimation for key generation. Besides, we propose a low-complexity preprocessing method to enhance the randomness of quantization while reducing the secret key disagreement ratio. The results indicate that we can achieve 367 key bits with a high level of randomness through just a single effective channel probing in an indoor environment at a distance of 2 meters, with a spreading factor (SF) of 7, a preamble length of 8, a signal bandwidth of 250 kHz, and a sampling rate of 1 MHz.
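
As a rough illustration of the quantization step and the key disagreement ratio mentioned above, the sketch below turns two parties' channel measurements into bit strings and compares them. The median-threshold quantizer, the function names, and the sample values are assumptions chosen for simplicity. They do not reproduce the paper's preprocessing method or its CFR-based pipeline.

```python
import statistics


def quantize_to_bits(measurements):
    """Single-threshold quantizer: 1 above the median, 0 otherwise (assumed)."""
    threshold = statistics.median(measurements)
    return [1 if m > threshold else 0 for m in measurements]


def key_disagreement_ratio(key_a, key_b):
    """Fraction of bit positions where the two parties' keys differ."""
    if len(key_a) != len(key_b):
        raise ValueError("keys must have the same length")
    mismatches = sum(a != b for a, b in zip(key_a, key_b))
    return mismatches / len(key_a)


# Usage with made-up channel magnitudes observed by the two parties.
alice = quantize_to_bits([0.9, 0.1, 0.8, 0.2])
bob = quantize_to_bits([0.85, 0.15, 0.4, 0.25])
kdr = key_disagreement_ratio(alice, bob)
```

A lower ratio means less information has to be exchanged later to reconcile the two keys, which is why preprocessing that reduces disagreement without hurting randomness is useful.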
