Shaoshan Liu

Context and Diversity Matter: The Emergence of In-Context Learning in World Models

Sep 26, 2025

Abstract:The capability of predicting environmental dynamics underpins both biological neural systems and general embodied AI in adapting to their surroundings. Yet prevailing approaches rest on static world models that falter when confronted with novel or rare configurations. We investigate in-context environment learning (ICEL), shifting attention from zero-shot performance to the growth and asymptotic limits of the world model. Our contributions are three-fold: (1) we formalize in-context learning of a world model and identify two core mechanisms: environment recognition and environment learning; (2) we derive error upper-bounds for both mechanisms that expose how the mechanisms emerge; and (3) we empirically confirm that distinct ICL mechanisms exist in the world model, and we further investigate how data distribution and model architecture affect ICL in a manner consistent with theory. These findings demonstrate the potential of self-adapting world models and highlight the key factors behind the emergence of ICEL, most notably the necessity of long context and diverse environments.

Conceptual Framework Toward Embodied Collective Adaptive Intelligence

May 29, 2025

Abstract:Collective Adaptive Intelligence (CAI) represents a transformative approach in artificial intelligence, wherein numerous autonomous agents collaborate, adapt, and self-organize to navigate complex, dynamic environments. This paradigm is particularly impactful in embodied AI applications, where adaptability and resilience are paramount. By enabling systems to reconfigure themselves in response to unforeseen challenges, CAI facilitates robust performance in real-world scenarios. This article introduces a conceptual framework for designing and analyzing CAI. It delineates key attributes including task generalization, resilience, scalability, and self-assembly, aiming to bridge theoretical foundations with practical methodologies for engineering adaptive, emergent intelligence. By providing a structured foundation for understanding and implementing CAI, this work seeks to guide researchers and practitioners in developing more resilient, scalable, and adaptable AI systems across various domains.

ADDT -- A Digital Twin Framework for Proactive Safety Validation in Autonomous Driving Systems

Apr 13, 2025

Abstract:Autonomous driving systems continue to face safety-critical failures, often triggered by rare and unpredictable corner cases that evade conventional testing. We present the Autonomous Driving Digital Twin (ADDT) framework, a high-fidelity simulation platform designed to proactively identify hidden faults, evaluate real-time performance, and validate safety before deployment. ADDT combines realistic digital models of driving environments, vehicle dynamics, sensor behavior, and fault conditions to enable scalable, scenario-rich stress-testing under diverse and adverse conditions. It supports adaptive exploration of edge cases using reinforcement-driven techniques, uncovering failure modes that physical road testing often misses. By shifting from reactive debugging to proactive simulation-driven validation, ADDT enables a more rigorous and transparent approach to autonomous vehicle safety engineering. To accelerate adoption and facilitate industry-wide safety improvements, the entire ADDT framework has been released as open-source software, providing developers with an accessible and extensible tool for comprehensive safety testing at scale.

OmniRL: In-Context Reinforcement Learning by Large-Scale Meta-Training in Randomized Worlds

Feb 05, 2025

Abstract:We introduce OmniRL, a highly generalizable in-context reinforcement learning (ICRL) model that is meta-trained on hundreds of thousands of diverse tasks. These tasks are procedurally generated by randomizing state transitions and rewards within Markov Decision Processes. To facilitate this extensive meta-training, we propose two key innovations: (1) an efficient data synthesis pipeline for ICRL, which leverages the interaction histories of diverse behavior policies; and (2) a novel modeling framework that integrates both imitation learning and reinforcement learning (RL) within the context, by incorporating prior knowledge. For the first time, we demonstrate that in-context learning (ICL) alone, without any gradient-based fine-tuning, can successfully tackle unseen Gymnasium tasks through imitation learning, online RL, or offline RL. Additionally, we show that achieving generalized ICRL capabilities, unlike task identification-oriented few-shot learning, critically depends on long trajectories generated by variant tasks and diverse behavior policies. By emphasizing the potential of ICL and departing from pre-training focused on acquiring specific skills, we further underscore the significance of meta-training aimed at cultivating the ability of ICL itself.
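To make the idea of procedurally generated tasks concrete, here is a minimal sketch of sampling a tabular MDP with random transitions and rewards and recording an interaction history under a behavior policy. It is not the OmniRL pipeline: the function names `random_mdp` and `rollout`, the uniform sampling of transition weights, and the Gaussian rewards are all assumptions chosen for illustration.

```python
import random


def random_mdp(n_states, n_actions, seed=None):
    """Sample a tabular MDP (hypothetical helper, illustration only).

    transitions[s][a] is a probability distribution over next states;
    rewards[s][a] is a scalar reward drawn from a standard normal.
    """
    rng = random.Random(seed)
    transitions, rewards = [], []
    for _ in range(n_states):
        t_row, r_row = [], []
        for _ in range(n_actions):
            # Random positive weights, normalized into a distribution.
            weights = [rng.random() + 1e-9 for _ in range(n_states)]
            total = sum(weights)
            t_row.append([w / total for w in weights])
            r_row.append(rng.gauss(0.0, 1.0))
        transitions.append(t_row)
        rewards.append(r_row)
    return transitions, rewards


def rollout(transitions, rewards, policy, steps, seed=None):
    """Record (state, action, reward, next_state) tuples from state 0."""
    rng = random.Random(seed)
    n_states = len(transitions)
    state, history = 0, []
    for _ in range(steps):
        action = policy(state, rng)
        reward = rewards[state][action]
        next_state = rng.choices(
            range(n_states), weights=transitions[state][action]
        )[0]
        history.append((state, action, reward, next_state))
        state = next_state
    return history


# A uniformly random behavior policy over 3 actions (an assumption).
T, R = random_mdp(n_states=4, n_actions=3, seed=0)
history = rollout(T, R, lambda s, rng: rng.randrange(3), steps=10, seed=1)
```

Sampling many such MDPs with different seeds and different behavior policies would yield a varied set of trajectories, which is the kind of diversity the abstract says generalized ICRL depends on.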

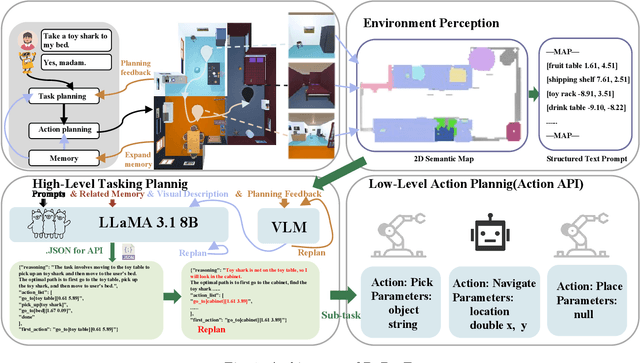

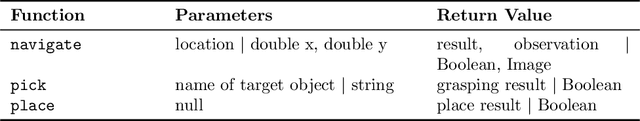

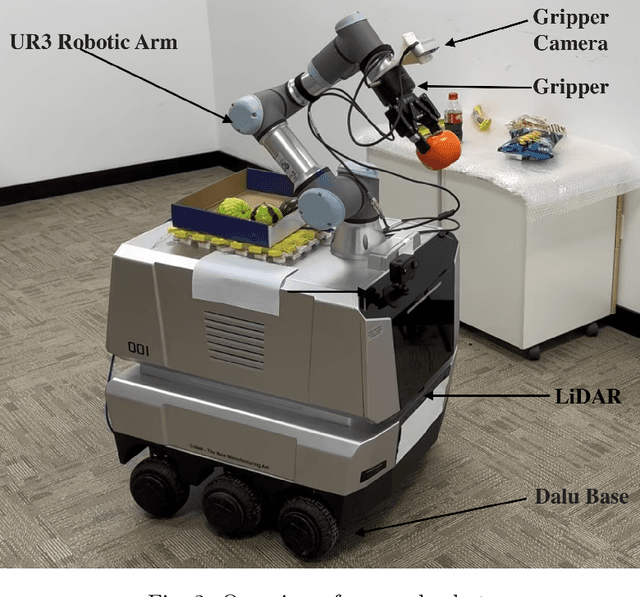

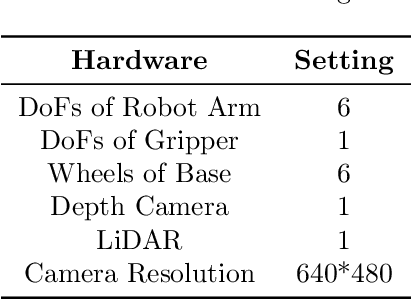

DaDu-E: Rethinking the Role of Large Language Model in Robotic Computing Pipeline

Dec 02, 2024

Abstract:Performing complex tasks in open environments remains challenging for robots, even when using large language models (LLMs) as the core planner. Many LLM-based planners are inefficient due to their large number of parameters and prone to inaccuracies because they operate in open-loop systems. We think the reason is that only applying LLMs as planners is insufficient. In this work, we propose DaDu-E, a robust closed-loop planning framework for embodied AI robots. Specifically, DaDu-E is equipped with a relatively lightweight LLM, a set of encapsulated robot skill instructions, a robust feedback system, and memory augmentation. Together, these components enable DaDu-E to (i) actively perceive and adapt to dynamic environments, (ii) optimize computational costs while maintaining high performance, and (iii) recover from execution failures using its memory and feedback mechanisms. Extensive experiments on real-world and simulated tasks show that DaDu-E achieves task success rates comparable to embodied AI robots with larger models as planners like COME-Robot, while reducing computational requirements by 6.6x. Users are encouraged to explore our system at: https://rlc-lab.github.io/dadu-e/

VAP: The Vulnerability-Adaptive Protection Paradigm Toward Reliable Autonomous Machines

Sep 30, 2024

Abstract:The next ubiquitous computing platform, following personal computers and smartphones, is poised to be inherently autonomous, encompassing technologies like drones, robots, and self-driving cars. Ensuring reliability for these autonomous machines is critical. However, current resiliency solutions make fundamental trade-offs between reliability and cost, resulting in significant overhead in performance, energy consumption, and chip area. This is due to the "one-size-fits-all" approach commonly used, where the same protection scheme is applied throughout the entire software computing stack. This paper presents the key insight that to achieve high protection coverage with minimal cost, we must leverage the inherent variations in robustness across different layers of the autonomous machine software stack. Specifically, we demonstrate that various nodes in this complex stack exhibit different levels of robustness against hardware faults. Our findings reveal that the front-end of an autonomous machine's software stack tends to be more robust, whereas the back-end is generally more vulnerable. Building on these inherent robustness differences, we propose a Vulnerability-Adaptive Protection (VAP) design paradigm. In this paradigm, the allocation of protection resources - whether spatially (e.g., through modular redundancy) or temporally (e.g., via re-execution) - is made inversely proportional to the inherent robustness of tasks or algorithms within the autonomous machine system. Experimental results show that VAP provides high protection coverage while maintaining low overhead in both autonomous vehicle and drone systems.
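The allocation rule described above, protection inversely proportional to inherent robustness, can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `allocate_protection`, the idea of a single scalar budget, and the robustness scores in the example are hypothetical and do not come from the paper.

```python
def allocate_protection(robustness, budget):
    """Split a protection budget across nodes in proportion to 1/robustness.

    robustness: dict mapping node name -> positive robustness score
                (higher means more tolerant of hardware faults).
    budget:     total protection resource to distribute (arbitrary units).
    """
    if any(score <= 0 for score in robustness.values()):
        raise ValueError("robustness scores must be positive")
    inverse = {name: 1.0 / score for name, score in robustness.items()}
    total = sum(inverse.values())
    return {name: budget * weight / total for name, weight in inverse.items()}


# Hypothetical scores: a robust front-end and a more vulnerable back-end.
scores = {"perception": 0.8, "planning": 0.4, "control": 0.2}
plan = allocate_protection(scores, budget=100.0)
```

With these made-up scores, the least robust node (`control`) receives the largest share and the most robust node (`perception`) the smallest, matching the direction of the paradigm described in the abstract.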

Corki: Enabling Real-time Embodied AI Robots via Algorithm-Architecture Co-Design

Jul 05, 2024

Abstract:Embodied AI robots have the potential to fundamentally improve the way human beings live and manufacture. Continued progress in the burgeoning field of using large language models to control robots depends critically on an efficient computing substrate. In particular, today's computing systems for embodied AI robots are designed purely based on the interest of algorithm developers, where robot actions are divided into a discrete frame-basis. Such an execution pipeline creates high latency and energy consumption. This paper proposes Corki, an algorithm-architecture co-design framework for real-time embodied AI robot control. Our idea is to decouple LLM inference, robotic control, and data communication in the embodied AI robot compute pipeline. Instead of predicting the action for a single frame, Corki predicts the trajectory for the near future to reduce the frequency of LLM inference. The algorithm is coupled with hardware that accelerates transforming the trajectory into the actual torque signals used to control robots, and with an execution pipeline that parallelizes data communication with computation. Corki reduces LLM inference frequency by up to 8.0x, resulting in up to 3.6x speedup. The success rate improvement can be up to 17.3%. Code is provided for re-implementation. https://github.com/hyy0613/Corki

ICE-SEARCH: A Language Model-Driven Feature Selection Approach

Mar 09, 2024

Abstract:This study unveils the In-Context Evolutionary Search (ICE-SEARCH) method, the first work that melds language models (LMs) with evolutionary algorithms for feature selection (FS) tasks and demonstrates its effectiveness in Medical Predictive Analytics (MPA) applications. ICE-SEARCH harnesses the crossover and mutation capabilities inherent in LMs within an evolutionary framework, significantly improving FS through the model's comprehensive world knowledge and its adaptability to a variety of roles. Our evaluation of this methodology spans three crucial MPA tasks: stroke, cardiovascular disease, and diabetes, where ICE-SEARCH outperforms traditional FS methods in pinpointing essential features for medical applications. ICE-SEARCH achieves State-of-the-Art (SOTA) performance in stroke prediction and diabetes prediction; the Decision-Randomized ICE-SEARCH ranks as SOTA in cardiovascular disease prediction. Our results not only demonstrate the efficacy of ICE-SEARCH in medical FS but also underscore the versatility, efficiency, and scalability of integrating LMs in FS tasks. The study emphasizes the critical role of incorporating domain-specific insights, illustrating ICE-SEARCH's robustness, generalizability, and swift convergence. This opens avenues for further research into comprehensive and intricate FS landscapes, marking a significant stride in the application of artificial intelligence in medical predictive analytics.

Timely Fusion of Surround Radar/Lidar for Object Detection in Autonomous Driving Systems

Sep 09, 2023

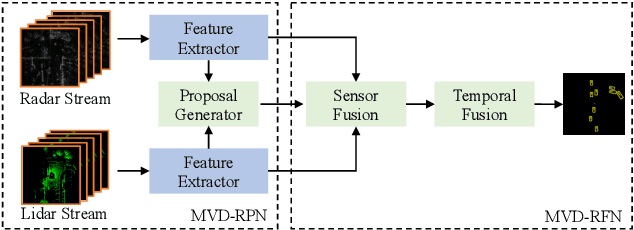

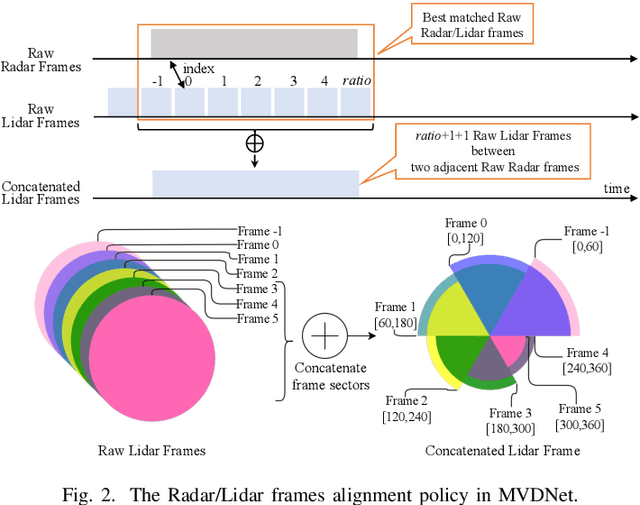

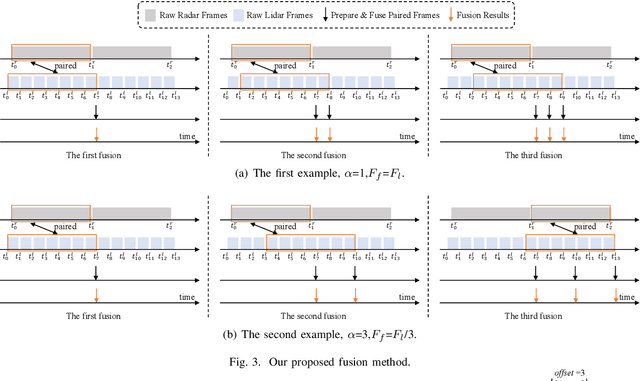

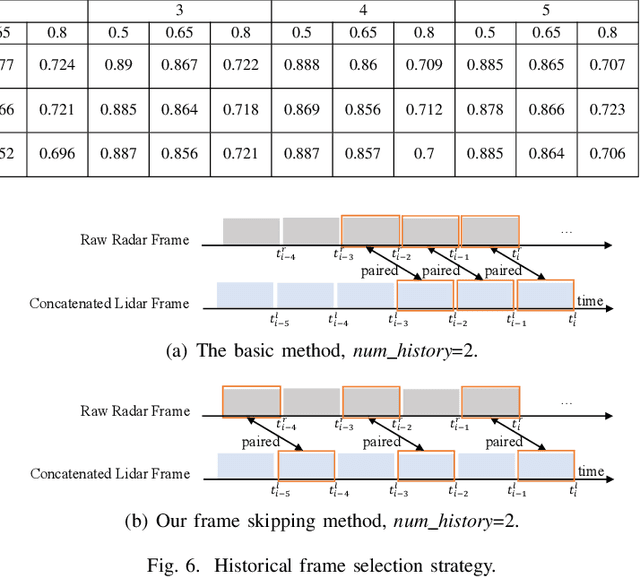

Abstract:Fusing Radar and Lidar sensor data can fully utilize their complementary advantages and provide more accurate reconstruction of the surroundings for autonomous driving systems. Surround Radar/Lidar can provide 360-degree view sampling at minimal cost, making them promising sensing hardware solutions for autonomous driving systems. However, due to intrinsic physical constraints, the rotating speed of surround Radar, and thus the frequency at which it generates Radar data frames, is much lower than that of surround Lidar. Existing Radar/Lidar fusion methods have to work at the low frequency of surround Radar, which cannot meet the high responsiveness requirement of autonomous driving systems. This paper develops techniques to fuse surround Radar/Lidar with a working frequency limited only by the faster surround Lidar instead of the slower surround Radar, based on the state-of-the-art object detection model MVDNet. The basic idea of our approach is simple: we let MVDNet work with temporally unaligned data from Radar/Lidar, so that fusion can take place whenever a new Lidar data frame arrives, instead of waiting for the slow Radar data frame. However, directly applying MVDNet to temporally unaligned Radar/Lidar data greatly degrades its object detection accuracy. The key insight of this paper is that we can achieve high output frequency with little accuracy loss by enhancing the training procedure to explore the temporal redundancy in MVDNet so that it can tolerate the temporal unalignment of input data. We explore several different ways of training enhancement and compare them quantitatively with experiments.
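The scheduling idea, fusing whenever a Lidar frame arrives rather than waiting for the next Radar frame, can be illustrated by pairing each Lidar timestamp with the most recent Radar timestamp. This sketch covers only the frame pairing, not the training enhancements that are the paper's contribution. The function name `pair_lidar_with_latest_radar` and the frame rates in the example are assumptions.

```python
from bisect import bisect_right


def pair_lidar_with_latest_radar(lidar_ts, radar_ts):
    """Pair each Lidar frame with the latest Radar frame at or before it.

    lidar_ts, radar_ts: sorted lists of frame timestamps (same time unit).
    Returns a list of (lidar_time, radar_time) pairs; Lidar frames that
    arrive before any Radar frame exists are skipped.
    """
    pairs = []
    for t in lidar_ts:
        # Index of the last Radar timestamp that is <= t.
        idx = bisect_right(radar_ts, t) - 1
        if idx >= 0:
            pairs.append((t, radar_ts[idx]))
    return pairs


# Hypothetical rates: Lidar every 50 ms, Radar every 250 ms.
lidar = [0, 50, 100, 150, 200, 250]
radar = [0, 250]
pairs = pair_lidar_with_latest_radar(lidar, radar)
```

Here the fused output appears at the Lidar rate, but most pairs combine a fresh Lidar frame with a stale Radar frame. That staleness is the temporal unalignment the abstract says the detector must be trained to tolerate.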

A Comprehensive Review and Systematic Analysis of Artificial Intelligence Regulation Policies

Jul 23, 2023

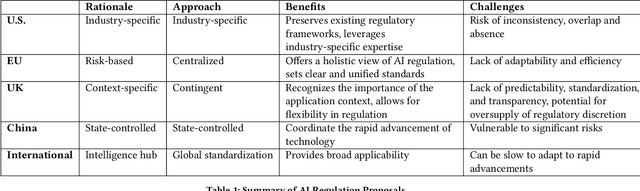

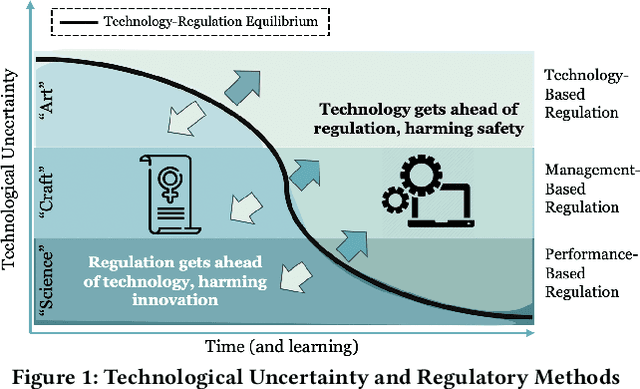

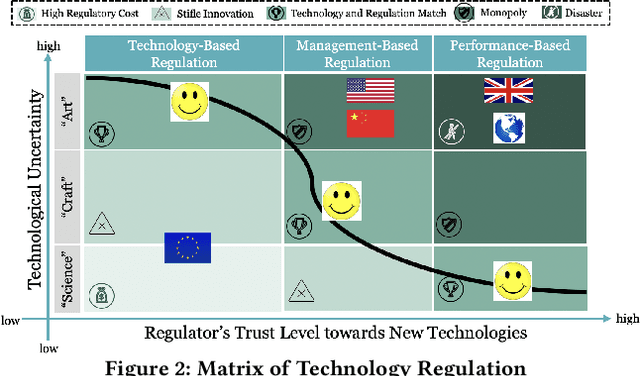

Abstract:Due to the cultural and governance differences among countries around the world, there currently exists a wide spectrum of AI regulation policy proposals, which has created chaos in the global AI regulatory space. Properly regulating AI technologies is extremely challenging, as it requires a delicate balance between legal restrictions and technological developments. In this article, we first present a comprehensive review of AI regulation proposals from different geographical locations and cultural backgrounds. Then, drawing from historical lessons, we develop a framework to facilitate a thorough analysis of AI regulation proposals. Finally, we perform a systematic analysis of these AI regulation proposals to understand how each proposal may fail. This study, containing historical lessons and analysis methods, aims to help governing bodies untangle the AI regulatory chaos in a divide-and-conquer manner.
