Shahid Mumtaz

UAV-Enabled Joint Sensing, Communication, Powering and Backhaul Transmission in Maritime Monitoring Networks

May 18, 2025

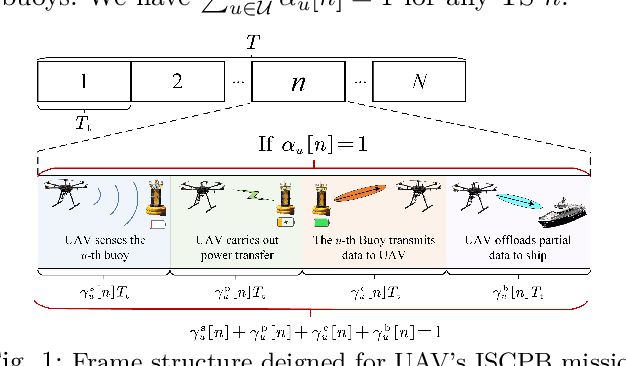

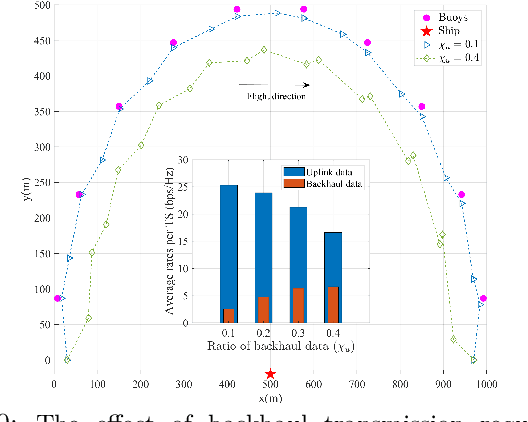

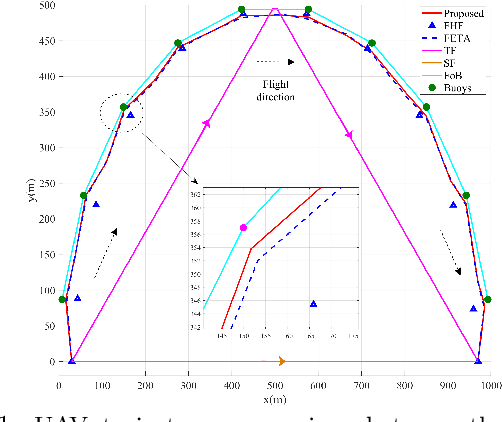

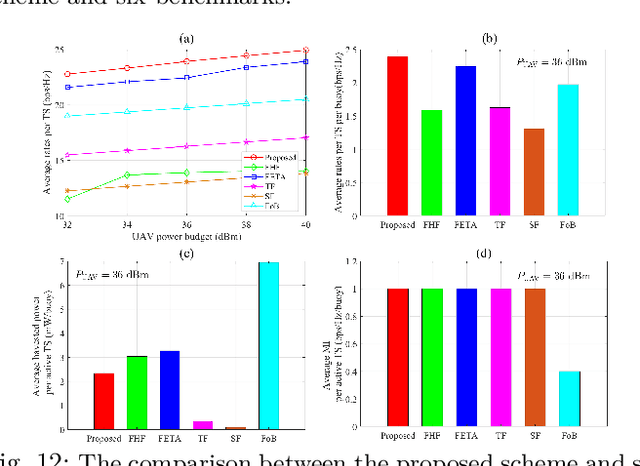

Abstract: This paper addresses the challenge of energy-constrained maritime monitoring networks by proposing an unmanned aerial vehicle (UAV)-enabled integrated sensing, communication, powering and backhaul transmission scheme with a tailored time-division duplex frame structure. Within each time slot, the UAV sequentially performs sensing, wireless charging of the buoys and uplink reception from them, and finally forwards part of the collected data to the central ship via backhaul links. Because these functions are tightly coupled, we jointly optimize time allocation, UAV trajectory, UAV-buoy association, and power scheduling to maximize data-collection performance, while accounting for sea clutter effects during UAV sensing. A novel optimization framework combining alternating optimization, the quadratic transform and an augmented first-order Taylor approximation is developed, and it demonstrates good convergence behavior and robustness. Simulation results show that, under a sensing quality-of-service constraint, buoys achieve an average data rate above 22 bps/Hz using around 2 mW of harvested power per active time slot, validating the scheme's effectiveness for open-sea monitoring. Additionally, under the influence of sea clutter, the optimal UAV trajectory always keeps a certain distance from the buoys to balance sensing against the other transmission functions.
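The quadratic transform named in the abstract above is a standard fractional-programming tool. The sketch below illustrates only the single-ratio identity behind it, not the paper's actual problem: a ratio `a / b` is replaced by the surrogate `2*y*sqrt(a) - y**2 * b`, which equals the ratio when the auxiliary variable is `y = sqrt(a) / b`. The function names are hypothetical.

```python
import math


def quadratic_transform_surrogate(a: float, b: float, y: float) -> float:
    """Surrogate 2*y*sqrt(a) - y^2*b for the ratio a/b (requires a >= 0, b > 0)."""
    return 2.0 * y * math.sqrt(a) - y * y * b


def optimal_auxiliary(a: float, b: float) -> float:
    """Auxiliary variable that maximizes the surrogate for fixed a and b."""
    return math.sqrt(a) / b


if __name__ == "__main__":
    a, b = 4.0, 2.0  # illustrative numerator and denominator values
    y_star = optimal_auxiliary(a, b)
    # At y_star the surrogate is tight; any other y gives a lower bound on a/b.
    print(quadratic_transform_surrogate(a, b, y_star), a / b)
    print(quadratic_transform_surrogate(a, b, 0.5 * y_star))
```

In an alternating scheme, one would typically fix `y` at its optimal value, solve the resulting easier problem in the original variables, and repeat.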

QFDNN: A Resource-Efficient Variational Quantum Feature Deep Neural Networks for Fraud Detection and Loan Prediction

Apr 28, 2025

Abstract: Social financial technology focuses on trust, sustainability, and social responsibility, which require advanced technologies to address complex financial tasks in the digital era. With the rapid growth in online transactions, automating credit card fraud detection and loan eligibility prediction has become increasingly challenging. Classical machine learning (ML) models have been used to solve these challenges; however, these approaches often suffer from poor scalability, overfitting, and high computational cost due to complex, high-dimensional financial data. Quantum computing (QC) and quantum machine learning (QML) offer a promising way to process high-dimensional datasets efficiently and to identify subtle fraud patterns in real time. However, existing quantum algorithms lack robustness in noisy environments and fail to optimize performance with reduced feature sets. To address these limitations, we propose a quantum feature deep neural network (QFDNN), a novel, resource-efficient, and noise-resilient quantum model that optimizes feature representation while requiring fewer qubits and simpler variational circuits. The model is evaluated on credit card fraud detection and loan eligibility prediction datasets, achieving competitive accuracies of 82.2% and 74.4%, respectively, with reduced computational overhead. Furthermore, we test QFDNN against six noise models, demonstrating its robustness across various error conditions. Our findings highlight QFDNN's potential to enhance trust and security in social financial technology by accurately detecting fraudulent transactions while supporting sustainability through its resource-efficient design and minimal computational overhead.

Pre-trained Molecular Language Models with Random Functional Group Masking

Nov 03, 2024

Abstract: Recent advancements in computational chemistry have leveraged the power of transformer-based language models, such as MoLFormer, pre-trained on vast amounts of simplified molecular-input line-entry system (SMILES) sequences, to understand and predict molecular properties and activities, a critical step in fields like drug discovery and materials science. To further improve performance, researchers have introduced graph neural networks with graph-based molecular representations, such as GEM, incorporating the topology, geometry, and 2D or even 3D structures of molecules into pre-training. Since most molecular graphs in existing studies were automatically converted from SMILES sequences, it is reasonable to assume that transformer-based language models might be able to implicitly learn structure-aware representations from SMILES sequences. In this paper, we propose MLM-FG, a SMILES-based Molecular Language Model that randomly masks SMILES subsequences corresponding to specific molecular Functional Groups to incorporate structure information of atoms during the pre-training phase. This technique aims to compel the model to better infer molecular structures and properties, thus enhancing its predictive capabilities. Extensive experimental evaluations across 11 benchmark classification and regression tasks in the chemical domain demonstrate the robustness and superiority of MLM-FG. Our findings reveal that MLM-FG outperforms existing pre-training models, whether based on SMILES or graphs, in 9 out of the 11 downstream tasks, ranking a close second in the remaining ones.
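As a rough illustration of the masking idea described above, the following sketch replaces functional-group substrings in a SMILES string with a mask token. It is not the authors' implementation: the group list, the `[MASK]` token, and the plain substring matching are all assumptions made for illustration, and a real pipeline would more likely use proper substructure matching from a cheminformatics toolkit.

```python
import random

# Hypothetical SMILES fragments standing in for functional groups (illustrative only).
FUNCTIONAL_GROUPS = ["C(=O)O", "C(=O)N", "C#N"]
MASK = "[MASK]"  # assumed mask token


def find_group_spans(smiles, groups=FUNCTIONAL_GROUPS):
    """Return sorted, non-overlapping (start, end) spans of group substrings."""
    spans = []
    for group in sorted(groups, key=len, reverse=True):  # prefer longer fragments
        start = smiles.find(group)
        while start != -1:
            end = start + len(group)
            if all(end <= s or start >= e for s, e in spans):
                spans.append((start, end))
            start = smiles.find(group, start + 1)
    return sorted(spans)


def mask_functional_groups(smiles, mask_prob=0.5, rng=None):
    """Replace each matched group with MASK independently with probability mask_prob."""
    rng = rng or random.Random()
    pieces, cursor = [], 0
    for start, end in find_group_spans(smiles):
        pieces.append(smiles[cursor:start])
        pieces.append(MASK if rng.random() < mask_prob else smiles[start:end])
        cursor = end
    pieces.append(smiles[cursor:])
    return "".join(pieces)


if __name__ == "__main__":
    aspirin = "CC(=O)Oc1ccccc1C(=O)O"
    print(mask_functional_groups(aspirin, mask_prob=0.5, rng=random.Random(0)))
```

Masking whole groups rather than random single tokens forces a model to reconstruct a chemically meaningful unit from its context, which is the intuition the abstract describes.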

An Efficient Privacy-aware Split Learning Framework for Satellite Communications

Sep 13, 2024

Abstract: In the rapidly evolving domain of satellite communications, integrating advanced machine learning (ML) techniques, particularly split learning (SL), is crucial for enhancing data processing and model training efficiency across satellites, space stations, and ground stations. Traditional ML approaches often face significant challenges within satellite networks due to constraints such as limited bandwidth and computational resources. To address this gap, we propose a novel framework for more efficient SL in satellite communications. Our approach, Dynamic Topology Informed Pruning (DTIP), combines differential privacy with graph and model pruning to optimize graph neural networks (GNNs) for distributed learning. DTIP strategically applies differential privacy to raw graph data and prunes GNNs, thereby optimizing both model size and communication load across network tiers. Extensive experiments across diverse datasets demonstrate DTIP's efficacy in enhancing privacy, accuracy, and computational efficiency. Specifically, on the Amazon2M dataset, DTIP maintains an accuracy of 0.82 while achieving a 50% reduction in floating-point operations per second. Similarly, on the ArXiv dataset, DTIP achieves an accuracy of 0.85 under comparable conditions. Our framework not only significantly improves the operational efficiency of satellite communications but also establishes a new benchmark in privacy-aware distributed learning, potentially revolutionizing data handling in space-based networks.
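The abstract above does not say which privacy mechanism or pruning rule DTIP uses, so the sketch below swaps in two common stand-ins purely for illustration: randomized response on the edges of an undirected graph (a standard edge-level local differential privacy mechanism) and magnitude pruning of a weight matrix. All function names and parameters are hypothetical.

```python
import math
import random


def randomized_response_edges(adj, epsilon, rng=None):
    """Flip each undirected edge bit with probability 1 / (1 + e^epsilon)."""
    rng = rng or random.Random()
    keep_prob = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    n = len(adj)
    noisy = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only, then mirror
            bit = adj[i][j]
            if rng.random() >= keep_prob:
                bit = 1 - bit
            noisy[i][j] = noisy[j][i] = bit
    return noisy


def magnitude_prune(weights, sparsity):
    """Zero out roughly the `sparsity` fraction of weights with smallest magnitude."""
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(sparsity * len(flat))
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]  # ties at the threshold are pruned as well
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


if __name__ == "__main__":
    adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
    print(randomized_response_edges(adj, epsilon=1.0, rng=random.Random(0)))
    print(magnitude_prune([[0.1, -0.5], [0.9, -0.05]], sparsity=0.5))
```

A smaller `epsilon` flips more edges (stronger privacy, noisier graph), while a larger `sparsity` removes more weights (smaller model, lower communication load).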

Converging Paradigms: The Synergy of Symbolic and Connectionist AI in LLM-Empowered Autonomous Agents

Jul 11, 2024

Abstract: This article explores the convergence of connectionist and symbolic artificial intelligence (AI), from historical debates to contemporary advancements. Traditionally considered distinct paradigms, connectionist AI focuses on neural networks, while symbolic AI emphasizes symbolic representation and logic. Recent advancements in large language models (LLMs), exemplified by ChatGPT and GPT-4, highlight the potential of connectionist architectures in handling human language as a form of symbols. The study argues that LLM-empowered Autonomous Agents (LAAs) embody this paradigm convergence. By utilizing LLMs for text-based knowledge modeling and representation, LAAs integrate neuro-symbolic AI principles, showcasing enhanced reasoning and decision-making capabilities. Comparing LAAs with Knowledge Graphs within the neuro-symbolic AI theme highlights the unique strengths of LAAs in mimicking human-like reasoning processes, scaling effectively with large datasets, and leveraging in-context samples without explicit re-training. The research underscores promising avenues in neuro-vector-symbolic integration, instructional encoding, and implicit reasoning, aimed at further enhancing LAA capabilities. By exploring the progression of neuro-symbolic AI and proposing future research trajectories, this work advances the understanding and development of AI technologies.

A 3D Modeling Method for Scattering on Rough Surfaces at the Terahertz Band

May 05, 2023

Abstract: The terahertz (THz) band (0.1-10 THz) is widely considered a candidate band for sixth-generation (6G) mobile communication technology. However, due to its short wavelength (less than 1 mm), scattering becomes a particularly significant propagation mechanism. In previous studies, we proposed a model to characterize scattering in THz bands, but it can only reconstruct the scattering in the incidence plane. In this paper, a three-dimensional (3D) stochastic model is proposed to characterize THz scattering on rough surfaces. Using the proposed model, we then reconstruct the scattering on rough surfaces with different shapes and under different incidence angles. Good agreement is achieved between the proposed model and full-wave simulation results. This stochastic 3D scattering model can be integrated into the standard channel modeling framework to generate more realistic THz channel data for the evaluation of 6G.

Technology Trends for Massive MIMO towards 6G

Jan 05, 2023

Abstract: At the dawn of next-generation wireless systems and networks, massive multiple-input multiple-output (MIMO) has been envisioned as one of the enabling technologies. With its continued success in 5G and beyond, massive MIMO technology has demonstrated its advantages, integrability, and extendibility. Moreover, several evolutionary features and revolutionary trends for massive MIMO have gradually emerged in recent years, which are expected to reshape future 6G wireless systems and networks. Specifically, the functions and performance of future massive MIMO systems will be enabled and enhanced by combining other innovative technologies, architectures, and strategies such as intelligent omni-surfaces (IOSs)/intelligent reflecting surfaces (IRSs), artificial intelligence (AI), THz communications, and cell-free architectures. Also, more diverse vertical applications based on massive MIMO will emerge and prosper, such as wireless localization and sensing, vehicular communications, non-terrestrial communications, remote sensing, and inter-planetary communications.

Secure Performance Analysis of RIS-aided Wireless Communication Systems

May 26, 2022

Abstract: Since the Internet of Things (IoT) is suggested as the fundamental platform to support massive connections and secure transmission, we study physical-layer security in point-to-point wireless systems relying on the reconfigurable intelligent surface (RIS) technique. Because there is no direct link from the IoT devices (both legitimate and illegitimate) to the access point, we exploit the RIS and consider two main secure performance metrics. As the main goal, we examine the secrecy performance of a RIS-aided wireless communication system in the presence of an eavesdropping IoT device. In this setting, the RIS is placed between the access point and the legitimate devices and is designed to enhance link security. To specify the secure system performance metrics, we first present analytical results for the secrecy outage probability. Then, the secrecy rate is examined. Interestingly, we can control both the average signal-to-noise ratio at the source and the number of metasurface elements of the RIS to achieve improved system performance. We verify the derived expressions by matching Monte-Carlo simulation and analytical results.
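The two metrics named above have standard definitions: the secrecy rate is the positive part of the difference between the legitimate and eavesdropper link capacities, and the secrecy outage probability is the chance that this rate falls below a target. The sketch below estimates the latter by Monte-Carlo simulation. The exponentially distributed SNRs are an assumption chosen for simplicity, not the RIS channel model analyzed in the paper, and all names are hypothetical.

```python
import math
import random


def secrecy_rate(snr_legit: float, snr_eve: float) -> float:
    """Secrecy rate in bps/Hz: [log2(1 + snr_legit) - log2(1 + snr_eve)]^+."""
    return max(math.log2(1.0 + snr_legit) - math.log2(1.0 + snr_eve), 0.0)


def secrecy_outage_probability(mean_snr_legit, mean_snr_eve, target_rate,
                               trials=100_000, seed=0):
    """Monte-Carlo estimate of P(secrecy rate < target_rate).

    Assumes exponentially distributed instantaneous SNRs on both links,
    which is an illustrative stand-in for the actual channel statistics.
    """
    rng = random.Random(seed)
    outages = 0
    for _ in range(trials):
        snr_d = rng.expovariate(1.0 / mean_snr_legit)
        snr_e = rng.expovariate(1.0 / mean_snr_eve)
        if secrecy_rate(snr_d, snr_e) < target_rate:
            outages += 1
    return outages / trials


if __name__ == "__main__":
    for mean_snr in (10.0, 100.0):
        print(mean_snr, secrecy_outage_probability(mean_snr, 1.0, target_rate=1.0))
```

Comparing such an estimate against a closed-form expression is the kind of validation the abstract refers to when it mentions matching simulation and analytical results.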

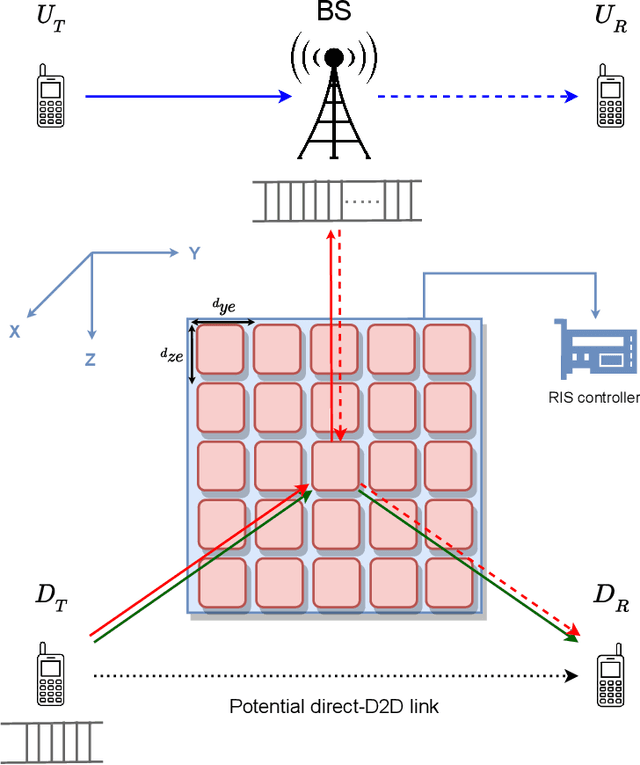

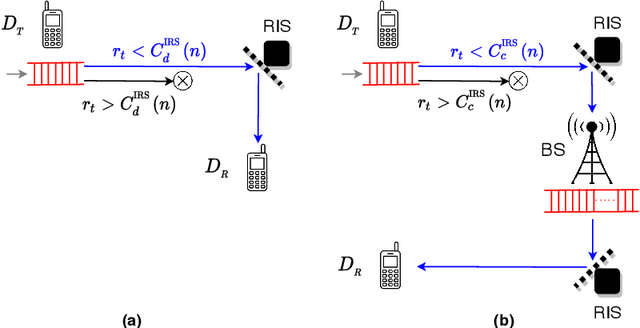

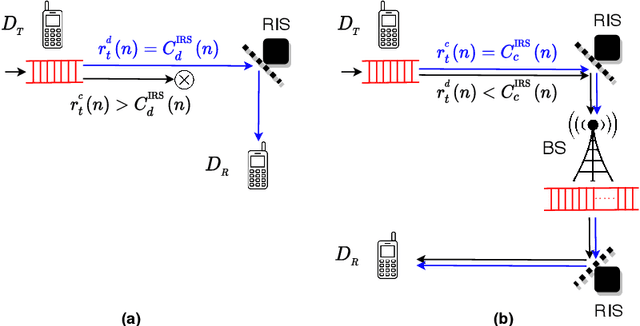

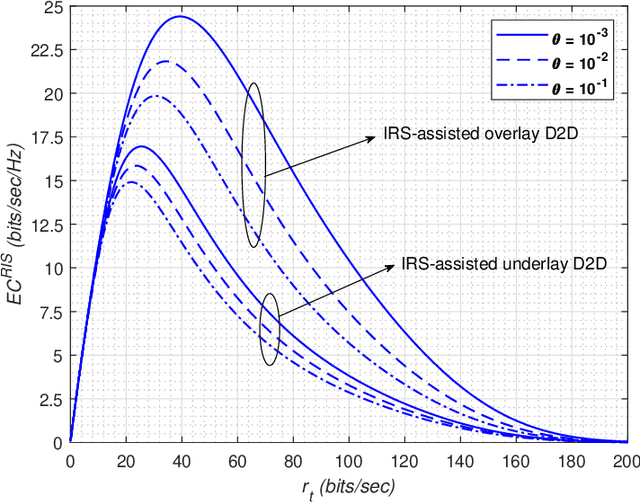

Statistical QoS Analysis of Reconfigurable Intelligent Surface-assisted D2D Communication

Apr 07, 2022

Abstract: This work performs the statistical QoS analysis of a Rician block-fading reconfigurable intelligent surface (RIS)-assisted D2D link in which the transmit node operates under delay QoS constraints. First, we perform mode selection for the D2D link, in which the D2D pair can communicate either directly, with data relayed via RISs, or through a base station (BS). Next, we provide closed-form expressions for the effective capacity (EC) of the RIS-assisted D2D link. When channel state information at the transmitter (CSIT) is available, the transmit D2D node communicates at a variable rate $r_t(n)$ (adjustable according to the channel conditions); otherwise, it uses a fixed rate $r_t$. This allows us to model the RIS-assisted D2D link as a Markov system in both cases. We also extend our analysis to overlay and underlay D2D settings. To improve the throughput of the RIS-assisted D2D link when CSIT is unknown, we use the HARQ retransmission scheme and provide the EC analysis of the HARQ-enabled RIS-assisted D2D link. Finally, simulation results demonstrate that: i) the EC increases with the number of RIS elements, ii) the EC decreases when strict QoS constraints are imposed at the transmit node, iii) the EC decreases as the variance of the path loss estimation error increases, iv) the EC increases with the probability of ON states, and v) the EC increases when HARQ is used with unknown CSIT, reaching up to $5\times$ the usual EC (with no HARQ and without CSIT) under the optimal number of retransmissions.
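For intuition about trends ii) and iv) above, the sketch below evaluates the textbook effective capacity of a fixed-rate ON-OFF link, $EC(\theta) = -\frac{1}{\theta}\ln\left(1 - p + p\,e^{-\theta r}\right)$. It assumes the ON/OFF state is independent from block to block, which is a simplification of the Markov model in the abstract, and the function and parameter names are hypothetical.

```python
import math


def effective_capacity_on_off(rate: float, p_on: float, theta: float) -> float:
    """Effective capacity of a fixed-rate link that is ON with probability p_on.

    rate  : bits delivered per block when the link is ON
    p_on  : probability of the ON state (assumed i.i.d. across blocks)
    theta : QoS exponent (> 0); larger values mean stricter delay constraints
    """
    moment = 1.0 - p_on + p_on * math.exp(-theta * rate)
    return -math.log(moment) / theta


if __name__ == "__main__":
    for theta in (0.01, 0.1, 1.0):
        for p_on in (0.5, 0.9):
            ec = effective_capacity_on_off(rate=2.0, p_on=p_on, theta=theta)
            print(f"theta={theta}, p_on={p_on}: EC={ec:.4f}")
```

As `theta` approaches zero the value approaches the average throughput `p_on * rate`, and it shrinks as `theta` grows, matching the qualitative behavior reported in the abstract.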

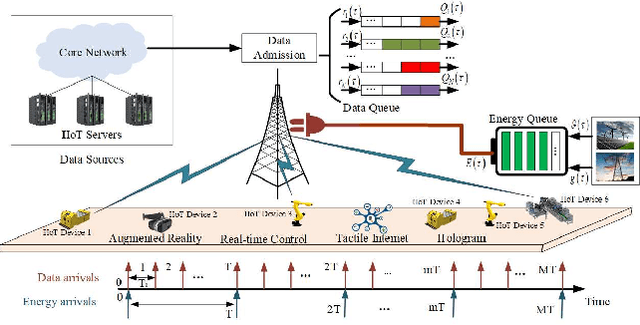

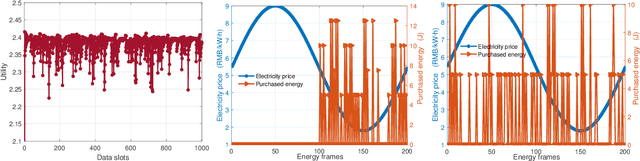

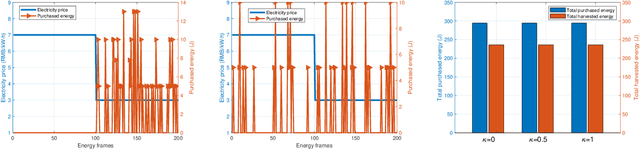

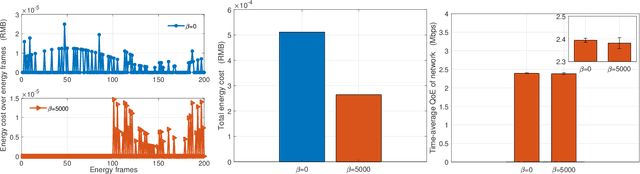

Two-timescale Resource Allocation for Automated Networks in IIoT

Mar 24, 2022

Abstract: Rapid advances in cellular technologies will revolutionize network automation in the industrial internet of things (IIoT). In this paper, we investigate the two-timescale resource allocation problem in IIoT networks with hybrid energy supply, where the temporal variations of energy harvesting (EH), electricity price, channel state, and data arrival exhibit different granularities. The formulated problem consists of energy management at a large timescale, as well as rate control, channel selection, and power allocation at a small timescale. To address this challenge, we develop an online solution that guarantees bounded performance deviation using only causal information. Specifically, Lyapunov optimization is leveraged to transform the long-term stochastic optimization problem into a series of short-term deterministic optimization problems. Then, a low-complexity rate control algorithm is developed based on the alternating direction method of multipliers (ADMM), which accelerates convergence via the decomposition-coordination approach. Next, the joint channel selection and power allocation problem is transformed into a one-to-many matching problem and solved by the proposed price-based matching with quota restriction. Finally, the proposed algorithm is verified through simulations under various system configurations.
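The Lyapunov step described above is commonly realized as a drift-plus-penalty rule: in each slot, pick the action that minimizes `V * cost - queue * service`, then update the queue. The sketch below is a generic single-queue illustration of that pattern, not the paper's formulation; the cost model (price times power), the Shannon-style rate, and all names are assumptions.

```python
import math


def drift_plus_penalty_power(queue, price, channel_gain, power_levels, V):
    """Pick the power level minimizing V * price * p - queue * rate(p)."""
    def objective(p):
        rate = math.log2(1.0 + channel_gain * p)  # assumed rate model
        return V * price * p - queue * rate
    return min(power_levels, key=objective)


def update_queue(queue, arrivals, service):
    """Standard backlog recursion: Q(t+1) = max(Q(t) - service, 0) + arrivals."""
    return max(queue - service, 0.0) + arrivals


if __name__ == "__main__":
    queue, levels = 0.0, [0.0, 1.0, 2.0]
    for t in range(5):
        p = drift_plus_penalty_power(queue, price=1.0, channel_gain=1.0,
                                     power_levels=levels, V=2.0)
        service = math.log2(1.0 + p)
        queue = update_queue(queue, arrivals=1.0, service=service)
        print(f"slot {t}: power={p}, backlog={queue:.3f}")
```

The weight `V` trades cost against backlog: a larger `V` favors cheaper actions and tolerates longer queues, which is how this class of methods bounds the deviation from the optimal long-term cost using only current-slot information.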
