Jon Crowcroft

EIA-SEC: Improved Actor-Critic Framework for Multi-UAV Collaborative Control in Smart Agriculture

Dec 21, 2025

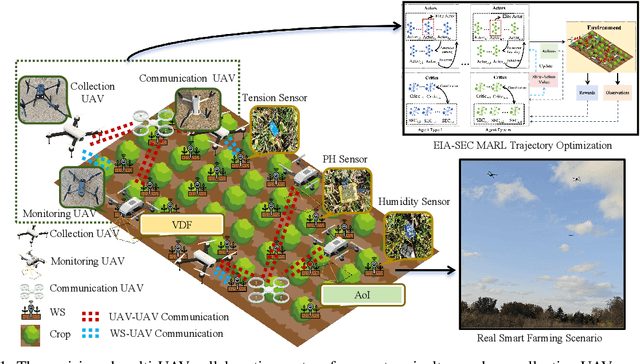

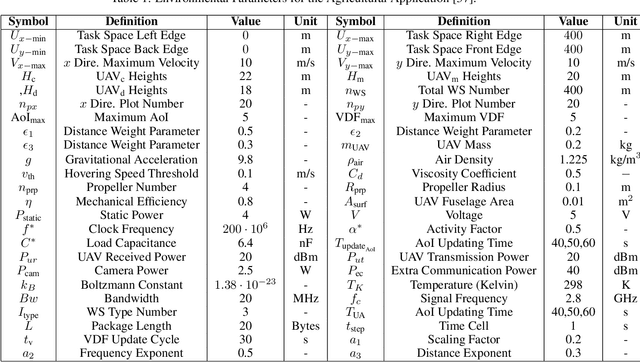

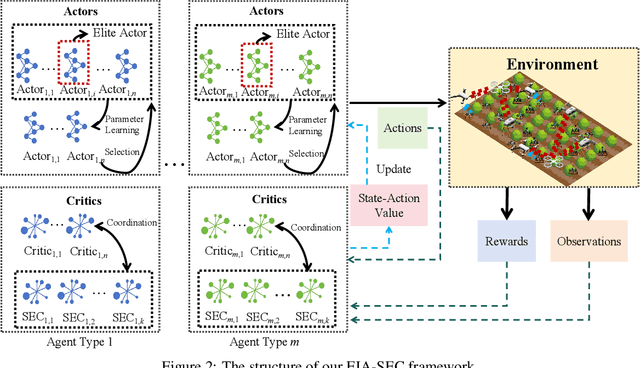

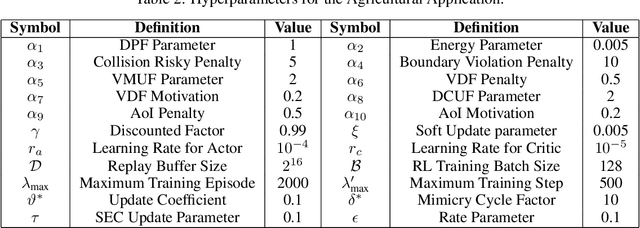

Abstract: The widespread application of wireless communication technology has promoted the development of smart agriculture, where unmanned aerial vehicles (UAVs) play a multifunctional role. We target a multi-UAV smart agriculture system where UAVs cooperatively perform data collection, image acquisition, and communication tasks. In this context, we model a Markov decision process to solve the multi-UAV trajectory planning problem. Moreover, we propose a novel Elite Imitation Actor-Shared Ensemble Critic (EIA-SEC) framework, where agents adaptively learn from the elite agent to reduce trial-and-error costs, and a shared ensemble critic collaborates with each agent's local critic to ensure unbiased objective value estimates and prevent overestimation. Experimental results demonstrate that EIA-SEC outperforms state-of-the-art baselines in terms of reward performance, training stability, and convergence speed.

Should AI Become an Intergenerational Civil Right?

Dec 09, 2025

Abstract: Artificial Intelligence (AI) is rapidly becoming a foundational layer of social, economic, and cognitive infrastructure. At the same time, the training and large-scale deployment of AI systems rely on finite and unevenly distributed energy, networking, and computational resources. This tension exposes a largely unexamined problem in current AI governance: while expanding access to AI is essential for social inclusion and equal opportunity, unconstrained growth in AI use risks unsustainable resource consumption, whereas restricting access threatens to entrench inequality and undermine basic rights. This paper argues that access to AI outputs largely derived from publicly produced knowledge should not be treated solely as a commercial service, but as a fundamental civil interest requiring explicit protection. We show that existing regulatory frameworks largely ignore the coupling between equitable access and resource constraints, leaving critical questions of fairness, sustainability, and long-term societal impact unresolved. To address this gap, we propose recognizing access to AI as an *Intergenerational Civil Right*, establishing a legal and ethical framework that simultaneously safeguards present-day inclusion and the rights of future generations. Beyond normative analysis, we explore how this principle can be technically realized. Drawing on emerging paradigms in IoT-Edge-Cloud computing, decentralized inference, and energy-aware networking, we outline technological trajectories and a strawman architecture for AI Delivery Networks that support equitable access under strict resource constraints. By framing AI as a shared social infrastructure rather than a discretionary market commodity, this work connects governance principles with concrete system design choices, offering a pathway toward AI deployment that is both socially just and environmentally sustainable.

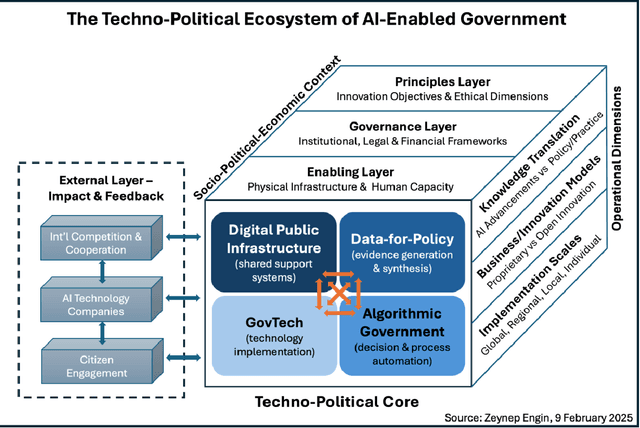

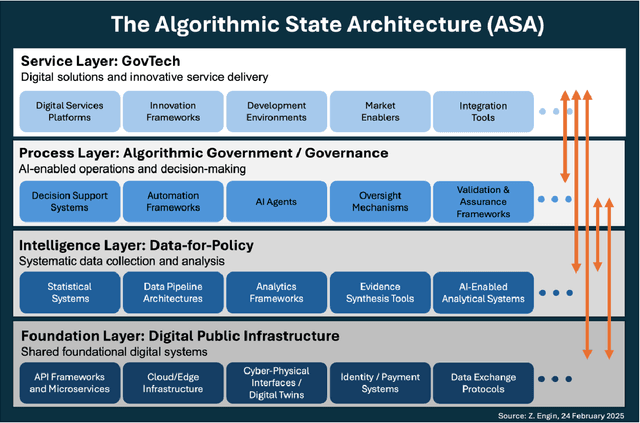

The Algorithmic State Architecture (ASA): An Integrated Framework for AI-Enabled Government

Mar 13, 2025

Abstract: As artificial intelligence transforms public sector operations, governments struggle to integrate technological innovations into coherent systems for effective service delivery. This paper introduces the Algorithmic State Architecture (ASA), a novel four-layer framework conceptualising how Digital Public Infrastructure, Data-for-Policy, Algorithmic Government/Governance, and GovTech interact as an integrated system in AI-enabled states. Unlike approaches that treat these as parallel developments, ASA positions them as interdependent layers with specific enabling relationships and feedback mechanisms. Through comparative analysis of implementations in Estonia, Singapore, India, and the UK, we demonstrate how foundational digital infrastructure enables systematic data collection, which powers algorithmic decision-making processes, ultimately manifesting in user-facing services. Our analysis reveals that successful implementations require balanced development across all layers, with particular attention to integration mechanisms between them. The framework contributes to both theory and practice by bridging previously disconnected domains of digital government research, identifying critical dependencies that influence implementation success, and providing a structured approach for analysing the maturity and development pathways of AI-enabled government systems.

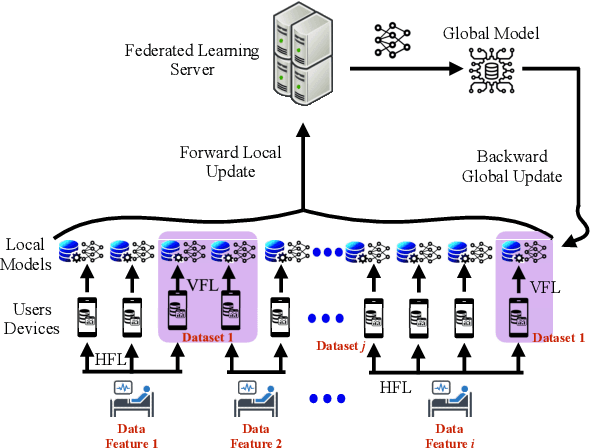

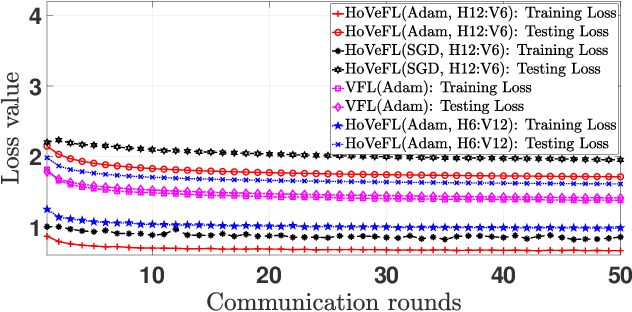

A Novel Framework of Horizontal-Vertical Hybrid Federated Learning for EdgeIoT

Oct 02, 2024

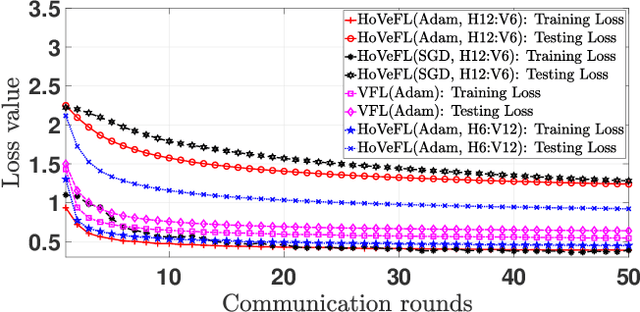

Abstract: This letter puts forth a new hybrid horizontal-vertical federated learning (HoVeFL) framework for mobile edge computing-enabled Internet of Things (EdgeIoT). In this framework, certain EdgeIoT devices train local models using the same data samples but analyze disparate data features, while the others focus on the same features using non-independent and identically distributed (non-IID) data samples. For this second group, the data features are consistent but the data samples vary across devices. The proposed HoVeFL formulates the training of local and global models to minimize the global loss function. Performance evaluations on the CIFAR-10 and SVHN datasets reveal that the testing loss of HoVeFL with 12 horizontal FL devices and six vertical FL devices is 5.5% and 25.2% higher, respectively, compared to a setup with six horizontal FL devices and 12 vertical FL devices.
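To make the two data layouts in this abstract concrete, the following is a minimal Python sketch of how a dataset could be partitioned for the horizontal and the vertical devices. It is only an illustration under stated assumptions, not the authors' implementation: the function names `horizontal_split` and `vertical_split` are hypothetical, the round-robin assignment is chosen for simplicity, and the non-IID skew of the horizontal devices is not modelled.

```python
def horizontal_split(rows, n_devices):
    """Horizontal FL layout: each device holds different samples, all features.

    Round-robin assignment is an assumption made for this sketch.
    """
    return [rows[d::n_devices] for d in range(n_devices)]


def vertical_split(rows, n_devices):
    """Vertical FL layout: each device holds every sample, but only a
    disjoint subset of the feature columns."""
    n_features = len(rows[0])
    return [
        [[row[j] for j in range(d, n_features, n_devices)] for row in rows]
        for d in range(n_devices)
    ]


# Toy dataset: 6 samples with 4 features each (value = 10 * sample + feature).
rows = [[10 * i + j for j in range(4)] for i in range(6)]

h_parts = horizontal_split(rows, 3)  # 3 horizontal devices
v_parts = vertical_split(rows, 2)    # 2 vertical devices
```

In this toy layout each horizontal device ends up with two complete samples, while each vertical device sees all six samples restricted to two of the four feature columns.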

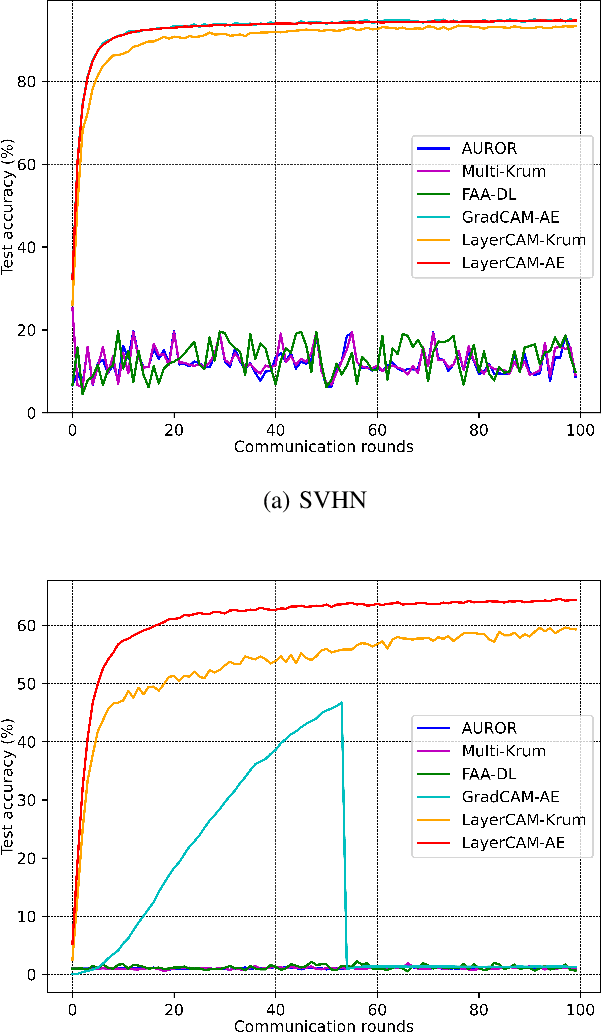

A Novel Defense Against Poisoning Attacks on Federated Learning: LayerCAM Augmented with Autoencoder

Jun 02, 2024

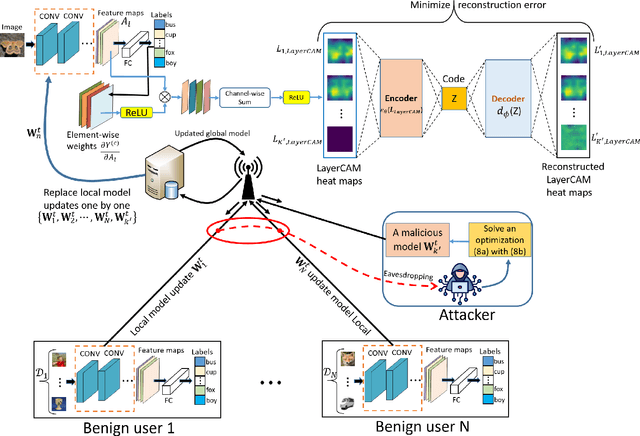

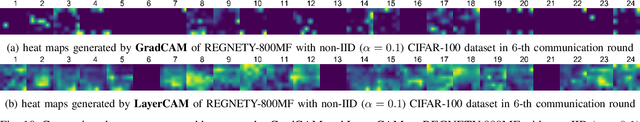

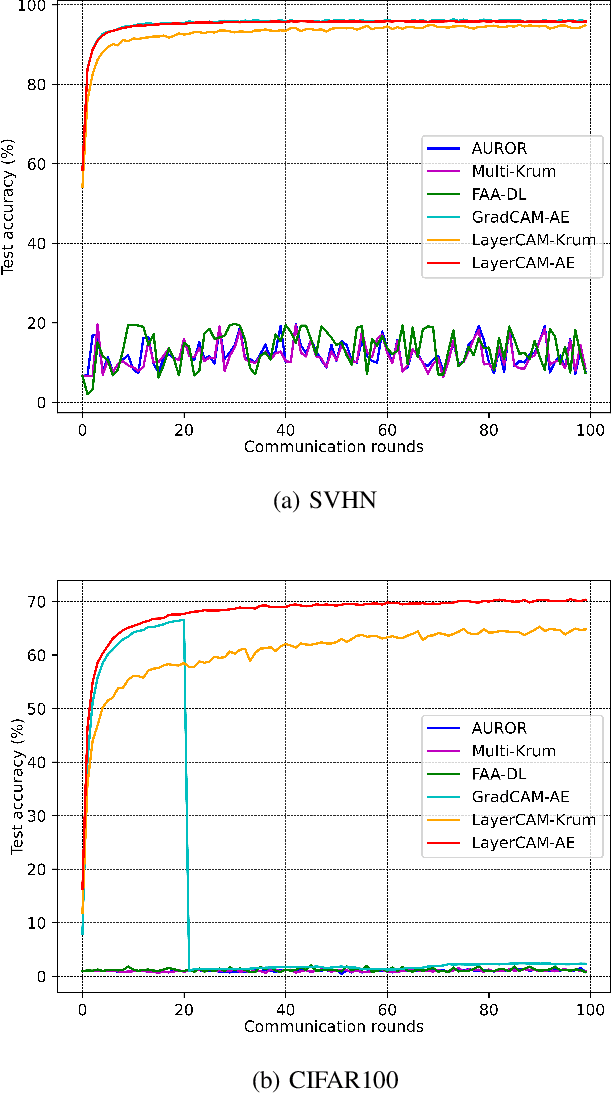

Abstract: Recent attacks on federated learning (FL) can introduce malicious model updates that circumvent widely adopted Euclidean distance-based detection methods. This paper proposes a novel defense strategy, referred to as LayerCAM-AE, designed to counteract model poisoning in federated learning. LayerCAM-AE integrates Layer Class Activation Mapping (LayerCAM) with an autoencoder (AE), significantly enhancing detection capabilities. Specifically, LayerCAM-AE generates a heat map for each local model update, which is then transformed into a more compact visual format. The autoencoder is designed to process the LayerCAM heat maps from the local model updates, improving their distinctiveness and thereby increasing the accuracy in spotting anomalous maps and malicious local models. To address the risk of misclassifications with LayerCAM-AE, a voting algorithm is developed, where a local model update is flagged as malicious if its heat maps are consistently suspicious over several rounds of communication. Extensive tests of LayerCAM-AE on the SVHN and CIFAR-100 datasets are performed under both Independent and Identically Distributed (IID) and non-IID settings in comparison with existing ResNet-50 and REGNETY-800MF defense models. Experimental results show that LayerCAM-AE increases detection rates (Recall: 1.0, Precision: 1.0, FPR: 0.0, Accuracy: 1.0, F1 score: 1.0, AUC: 1.0) and test accuracy in FL, surpassing the performance of both ResNet-50 and REGNETY-800MF. Our code is available at: https://github.com/jjzgeeks/LayerCAM-AE
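The voting step mentioned in this abstract can be pictured with a small Python sketch. This is a hedged illustration rather than the algorithm from the paper or its repository: the class name `RoundVoter`, the sliding window, and the `min_votes` threshold are all assumptions, and the per-round "suspicious" verdicts are taken as given inputs (in the paper they would come from the autoencoder's analysis of the heat maps).

```python
from collections import defaultdict, deque


class RoundVoter:
    """Flag a client once it has been judged suspicious in at least
    `min_votes` of its last `window` recorded rounds (hypothetical rule)."""

    def __init__(self, window=5, min_votes=3):
        self.min_votes = min_votes
        # Each client keeps only its most recent `window` verdicts.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def update(self, suspicious_by_client):
        """Record one round of verdicts and return the set of flagged clients."""
        for client, suspicious in suspicious_by_client.items():
            self.history[client].append(bool(suspicious))
        return {c for c, h in self.history.items() if sum(h) >= self.min_votes}


voter = RoundVoter(window=3, min_votes=2)
flagged_round1 = voter.update({"a": True, "b": False})
flagged_round2 = voter.update({"a": True, "b": True})
flagged_round3 = voter.update({"a": False, "b": False})
flagged_round4 = voter.update({"a": False, "b": False})
```

Requiring repeated suspicious verdicts trades detection latency for fewer false alarms: a single noisy round (client `b` above) never triggers a flag, while client `a` is flagged after two suspicious rounds and released once those verdicts slide out of the window.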

Architecture of Smart Certificates for Web3 Applications Against Cyberthreats in Financial Industry

Nov 03, 2023

Abstract: This study addresses the security challenges associated with the current internet transformations, specifically focusing on emerging technologies such as blockchain and decentralized storage. It also investigates the role of Web3 applications in shaping the future of the internet. The primary objective is to propose a novel design for 'smart certificates,' which are digital certificates that can be programmatically enforced. Utilizing such certificates, an enterprise can better protect itself from cyberattacks and ensure the security of its data and systems. Recent Web3 security solutions from companies and projects such as Certik, Forta, Slither, and Securify are the equivalent of the code-scanning tools originally developed for Web1 and Web2 applications; they are not certificates that help enterprises feel safe against cyberthreats. We aim to improve the resilience of enterprises' digital infrastructure by building on top of Web3 applications and putting methodologies in place for vulnerability analysis and attack correlation, focusing on the architecture of different layers (Wallet/Client, Application, and Smart Contract), where specific components are provided to identify and predict threats and risks. Furthermore, Certificate Transparency is used for enhancing the security, trustworthiness, and decentralized management of the certificates, and for detecting misuses, compromises, and malfeasances.

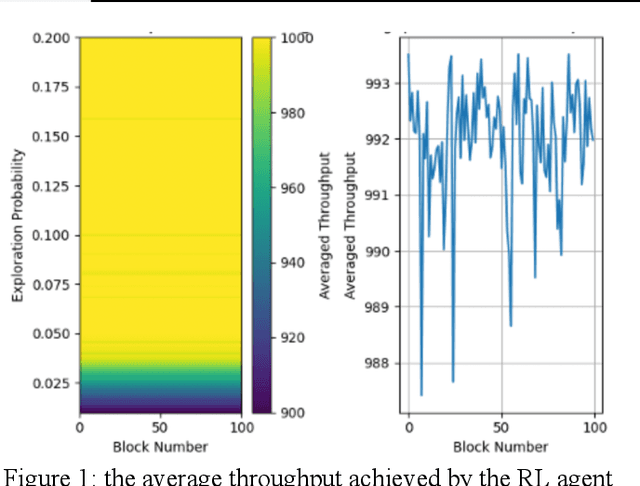

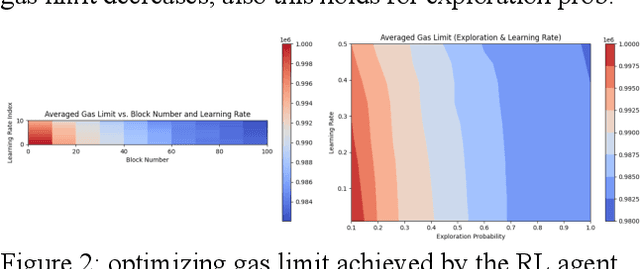

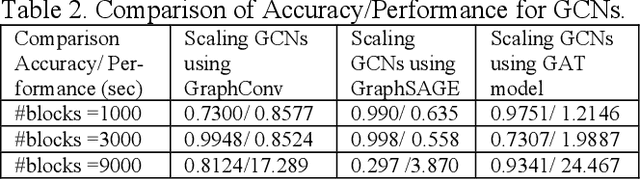

Analysis of Information Propagation in Ethereum Network Using Combined Graph Attention Network and Reinforcement Learning to Optimize Network Efficiency and Scalability

Nov 02, 2023

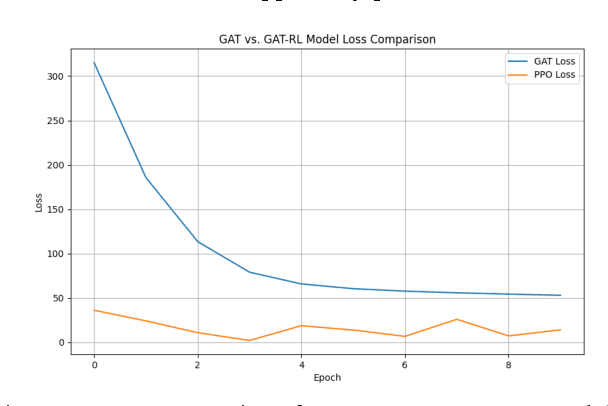

Abstract: Blockchain technology has revolutionized the way information is propagated in decentralized networks. Ethereum plays a pivotal role in facilitating smart contracts and decentralized applications. Understanding information propagation dynamics in Ethereum is crucial for ensuring network efficiency, security, and scalability. In this study, we propose an innovative approach that utilizes Graph Convolutional Networks (GCNs) to analyze the information propagation patterns in the Ethereum network. The first phase of our research involves data collection from the Ethereum blockchain, consisting of blocks, transactions, and node degrees. We construct a transaction graph representation using adjacency matrices to capture the node embeddings. Our major contribution is a combined Graph Attention Network (GAT) and Reinforcement Learning (RL) model to optimize network efficiency and scalability. It learns the best actions to take in various network states, ultimately leading to improved network efficiency and throughput and to optimized gas limits for block processing. In the experimental evaluation, we analyze the performance of our model on a large-scale Ethereum dataset. We investigate how to effectively aggregate information from neighboring nodes, capture graph structure, and update node embeddings using GCN with the objective of transaction pattern prediction, accounting for varying network loads and numbers of blocks. We not only design a gas limit optimization model and provide the algorithm but also, to address scalability, demonstrate the use and implementation of sparse matrices in GraphConv, GraphSAGE, and GAT. The results indicate that our GAT-RL model outperforms the other GCN models. It effectively propagates information across the network, optimizing gas limits for block processing and improving network efficiency.

Probabilistic Sampling-Enhanced Temporal-Spatial GCN: A Scalable Framework for Transaction Anomaly Detection in Ethereum Networks

Sep 29, 2023

Abstract: The rapid evolution of the Ethereum network necessitates sophisticated techniques to ensure its robustness against potential threats and to maintain transparency. While Graph Neural Networks (GNNs) have pioneered anomaly detection in such platforms, capturing the intricacies of both spatial and temporal transactional patterns has remained a challenge. This study presents a fusion of Graph Convolutional Networks (GCNs) with Temporal Random Walks (TRW) enhanced by probabilistic sampling to bridge this gap. Our approach, unlike traditional GCNs, leverages the strengths of TRW to discern complex temporal sequences in Ethereum transactions, thereby providing a more nuanced transaction anomaly detection mechanism. Preliminary evaluations demonstrate that our TRW-GCN framework substantially improves on conventional GCNs in detecting anomalies and transaction bursts. This research not only underscores the potential of temporal cues in Ethereum transactional data but also offers a scalable and effective methodology for ensuring the security and transparency of decentralized platforms. By harnessing both spatial relationships and time-based transactional sequences as node features, our model introduces an additional layer of granularity, making the detection process more robust and less prone to false positives. This work lays the foundation for future research aimed at optimizing and enhancing the transparency of blockchain technologies, and serves as a testament to the significance of considering both time and space dimensions in the ever-evolving landscape of decentralized platforms.
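For readers unfamiliar with temporal random walks, the idea can be sketched in a few lines of Python. This is a generic illustration under assumptions, not the TRW-GCN sampler from the paper: the function name `temporal_random_walk` is hypothetical, the next edge is drawn uniformly among eligible edges (the paper's probabilistic sampling scheme is not specified in the abstract), and "eligible" is taken to mean a timestamp no earlier than that of the previous hop.

```python
import random


def temporal_random_walk(edges, start, max_len, rng):
    """Walk over (src, dst, timestamp) edges so that timestamps never decrease.

    Returns the list of visited nodes, beginning with `start`.
    """
    outgoing = {}
    for src, dst, t in edges:
        outgoing.setdefault(src, []).append((dst, t))

    walk, node, now = [start], start, float("-inf")
    for _ in range(max_len):
        # Only edges that occur at or after the current time are eligible.
        candidates = [(dst, t) for dst, t in outgoing.get(node, []) if t >= now]
        if not candidates:
            break
        node, now = rng.choice(candidates)  # uniform choice: an assumption
        walk.append(node)
    return walk


# Toy transaction graph; the edge b -> d at time 0 can never follow a -> b at time 1.
edges = [("a", "b", 1), ("b", "c", 2), ("b", "d", 0), ("c", "a", 3)]
walk = temporal_random_walk(edges, "a", max_len=5, rng=random.Random(0))
```

Because each hop must respect time order, the walk encodes which transactions could plausibly have influenced later ones, which is the kind of temporal cue the abstract describes feeding into the GCN as node features.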

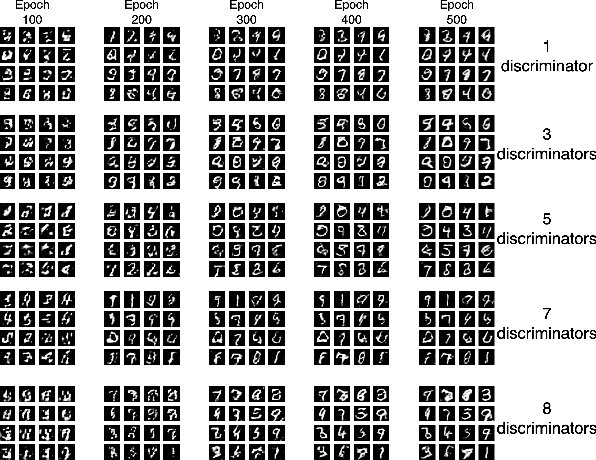

Federated Split GANs

Jul 04, 2022

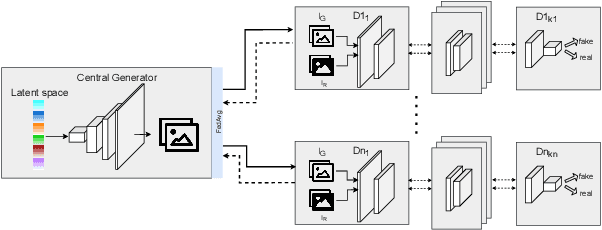

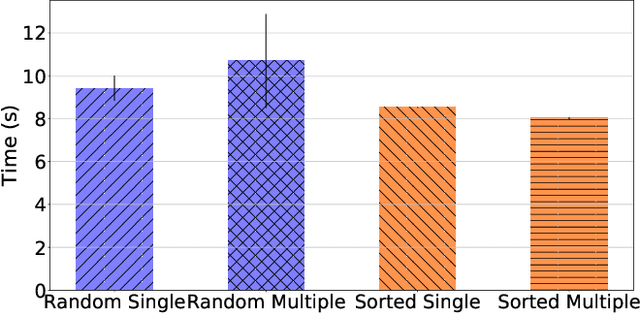

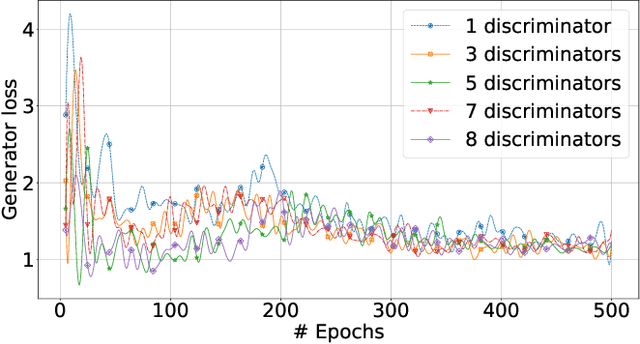

Abstract: Mobile devices and the immense amount and variety of data they generate are key enablers of machine learning (ML)-based applications. Traditional ML techniques have shifted toward new paradigms such as federated learning (FL) and split learning (SL) to improve the protection of users' data privacy. However, these paradigms often rely on servers located in the edge or cloud to train computationally heavy parts of an ML model to avoid draining the limited resources on client devices, which exposes device data to such third parties. This work proposes an alternative approach that trains computationally heavy ML models on users' devices themselves, where the corresponding device data resides. Specifically, we focus on GANs (generative adversarial networks) and leverage their inherent privacy-preserving attribute. We train the discriminative part of a GAN with raw data on users' devices, whereas the generative model is trained remotely (e.g., on a server), for which there is no need to access true sensor data. Moreover, our approach ensures that the computational load of training the discriminative model is shared among users' devices, in proportion to their computation capabilities, by means of SL. We implement our proposed collaborative training scheme of a computationally heavy GAN model on real resource-constrained devices. The results show that our system preserves data privacy, keeps training time short, and yields the same accuracy as model training on unconstrained devices (e.g., cloud). Our code can be found at https://github.com/YukariSonz/FSL-GAN
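One simple way to picture sharing the discriminator "in proportion to computation capabilities" is to cut its layers into contiguous blocks whose sizes follow each device's relative capability. The Python sketch below is an assumption-laden illustration, not the scheme from the paper or its repository: the function name `allocate_layers`, the scalar capability scores, and the largest-remainder rounding are all hypothetical choices.

```python
def allocate_layers(n_layers, capabilities):
    """Split layers 0..n_layers-1 into contiguous [start, end) slices,
    one per device, sized roughly in proportion to `capabilities`."""
    total = sum(capabilities)
    quotas = [n_layers * c / total for c in capabilities]
    counts = [int(q) for q in quotas]

    # Give any layers lost to rounding down to the largest fractional remainders.
    leftover = n_layers - sum(counts)
    by_remainder = sorted(
        range(len(quotas)), key=lambda i: quotas[i] - counts[i], reverse=True
    )
    for i in by_remainder[:leftover]:
        counts[i] += 1

    slices, start = [], 0
    for count in counts:
        slices.append((start, start + count))
        start += count
    return slices


# Three devices; the second and third are assumed twice as capable as the first.
slices = allocate_layers(10, [1, 2, 2])
```

A very weak device can receive an empty slice under this rule, so a real system would also need a policy for skipping such devices or enforcing a minimum share.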

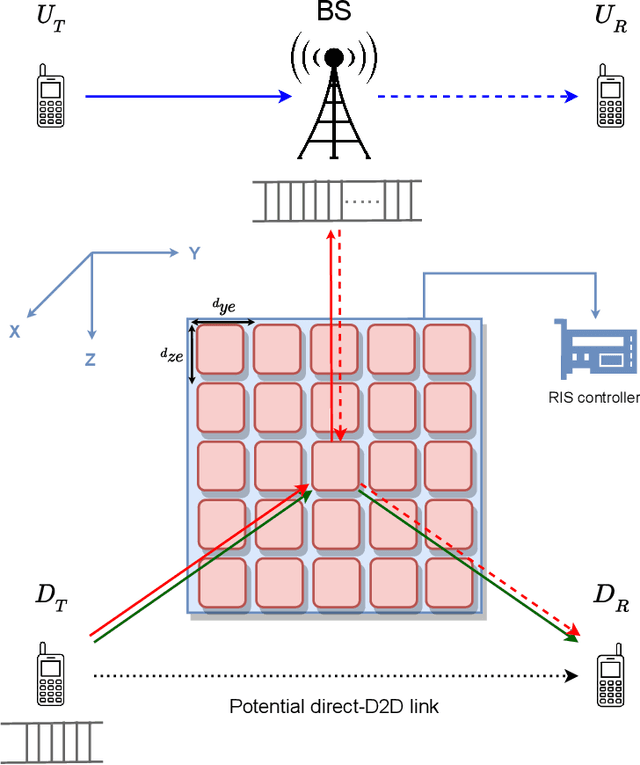

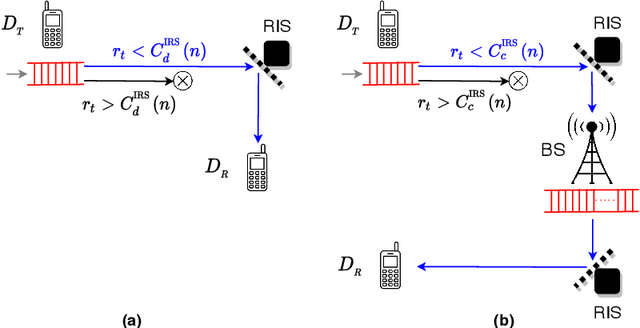

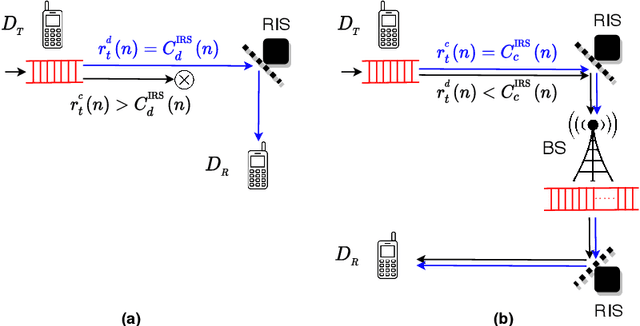

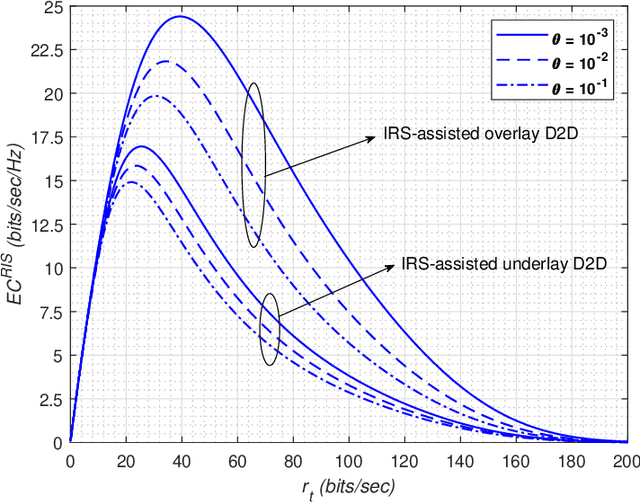

Statistical QoS Analysis of Reconfigurable Intelligent Surface-assisted D2D Communication

Apr 07, 2022

Abstract: This work performs the statistical QoS analysis of a Rician block-fading reconfigurable intelligent surface (RIS)-assisted D2D link in which the transmit node operates under delay QoS constraints. First, we perform mode selection for the D2D link, in which the D2D pair can either communicate directly by relaying data from RISs or through a base station (BS). Next, we provide closed-form expressions for the effective capacity (EC) of the RIS-assisted D2D link. When channel state information at the transmitter (CSIT) is available, the transmit D2D node communicates with the variable rate $r_t(n)$ (adjustable according to the channel conditions); otherwise, it uses a fixed rate $r_t$. This allows us to model the RIS-assisted D2D link as a Markov system in both cases. We also extend our analysis to overlay and underlay D2D settings. To improve the throughput of the RIS-assisted D2D link when CSIT is unknown, we use the HARQ retransmission scheme and provide the EC analysis of the HARQ-enabled RIS-assisted D2D link. Finally, simulation results demonstrate that: i) the EC increases with an increase in RIS elements, ii) the EC decreases when strict QoS constraints are imposed at the transmit node, iii) the EC decreases with an increase in the variance of the path loss estimation error, iv) the EC increases with an increase in the probability of ON states, v) the EC increases by using HARQ when CSIT is unknown, and it can reach up to $5\times$ the usual EC (with no HARQ and without CSIT) by using the optimal number of retransmissions.
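As background for the effective-capacity results in this abstract, the standard definition for a service process that is independent across blocks is $EC(\theta) = -\frac{1}{\theta}\ln \mathbb{E}\left[e^{-\theta r}\right]$, where $\theta > 0$ is the QoS exponent and $r$ the per-block service. The Python sketch below evaluates it for a simplified fixed-rate ON-OFF link. It is an illustration under assumptions, not the paper's closed-form expressions: the function name `effective_capacity_on_off` is hypothetical, the ON and OFF states are assumed independent from block to block, and neither the RIS channel model nor HARQ is represented.

```python
import math


def effective_capacity_on_off(theta, rate, p_on):
    """Effective capacity of a link that delivers `rate` per block with
    probability `p_on` (ON state) and nothing otherwise (OFF state),
    with independent states across blocks.

    theta is the QoS exponent (larger means stricter delay constraints).
    """
    expected = (1.0 - p_on) + p_on * math.exp(-theta * rate)
    return -math.log(expected) / theta


loose = effective_capacity_on_off(theta=0.1, rate=2.0, p_on=0.5)
strict = effective_capacity_on_off(theta=1.0, rate=2.0, p_on=0.5)
more_on = effective_capacity_on_off(theta=1.0, rate=2.0, p_on=0.8)
always_on = effective_capacity_on_off(theta=0.1, rate=2.0, p_on=1.0)
```

Even this toy model reproduces two of the qualitative trends listed in the abstract: the EC shrinks as the QoS exponent grows (stricter constraints), and it grows with the probability of the ON state, reaching the full rate when the link is always ON.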
