Pulak Purkait

SRSR: Enhancing Semantic Accuracy in Real-World Image Super-Resolution with Spatially Re-Focused Text-Conditioning

Oct 26, 2025

Abstract:Existing diffusion-based super-resolution approaches often exhibit semantic ambiguities due to inaccuracies and incompleteness in their text conditioning, coupled with the inherent tendency for cross-attention to divert towards irrelevant pixels. These limitations can lead to semantic misalignment and hallucinated details in the generated high-resolution outputs. To address these limitations, we propose a novel, plug-and-play spatially re-focused super-resolution (SRSR) framework that consists of two core components. First, we introduce Spatially Re-focused Cross-Attention (SRCA), which refines text conditioning at inference time by applying visually-grounded segmentation masks to guide cross-attention. Second, we introduce a Spatially Targeted Classifier-Free Guidance (STCFG) mechanism that selectively bypasses text influences on ungrounded pixels to prevent hallucinations. Extensive experiments on both synthetic and real-world datasets demonstrate that SRSR consistently outperforms seven state-of-the-art baselines in standard fidelity metrics (PSNR and SSIM) across all datasets, and in perceptual quality measures (LPIPS and DISTS) on two real-world benchmarks, underscoring its effectiveness in achieving both high semantic fidelity and perceptual quality in super-resolution.
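
As a rough illustration of the STCFG idea described above, the sketch below masks the guidance term of standard classifier-free guidance so that ungrounded pixels receive only the unconditional prediction. The abstract does not give the exact formula, so the blending rule, the function name `spatially_targeted_cfg`, and the flat-list image representation are all assumptions made for illustration.

```python
def spatially_targeted_cfg(eps_uncond, eps_cond, grounded_mask, scale):
    """Per-pixel classifier-free guidance with a spatial mask (illustrative only).

    eps_uncond, eps_cond: noise predictions without / with text conditioning.
    grounded_mask: 1.0 where the text is grounded in the image, 0.0 elsewhere.
    scale: guidance scale applied to grounded pixels.
    """
    guided = []
    for u, c, m in zip(eps_uncond, eps_cond, grounded_mask):
        # Where m == 0 the text-dependent term vanishes, so the output
        # equals the unconditional prediction for that pixel.
        guided.append(u + scale * m * (c - u))
    return guided


example = spatially_targeted_cfg(
    eps_uncond=[0.0, 1.0],
    eps_cond=[1.0, 3.0],
    grounded_mask=[1.0, 0.0],
    scale=2.0,
)
```

In this toy call the first pixel is grounded and is pushed towards the text-conditioned prediction, while the second pixel is ungrounded and keeps its unconditional value.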

Knowledge Combination to Learn Rotated Detection Without Rotated Annotation

Apr 05, 2023

Abstract:Rotated bounding boxes drastically reduce output ambiguity of elongated objects, making them superior to axis-aligned bounding boxes. Despite this effectiveness, rotated detectors are not widely employed. Annotating rotated bounding boxes is such a laborious process that they are not provided in many detection datasets, where axis-aligned annotations are used instead. In this paper, we propose a framework that allows the model to predict precise rotated boxes while requiring only cheaper axis-aligned annotation of the target dataset. To achieve this, we leverage the fact that neural networks are capable of learning a richer representation of the target domain than what is utilized by the task. The under-utilized representation can be exploited to address a more detailed task. Our framework combines task knowledge of an out-of-domain source dataset with stronger annotation and domain knowledge of the target dataset with weaker annotation. A novel assignment process and projection loss are used to enable co-training on the source and target datasets. As a result, the model is able to solve the more detailed task in the target domain, without additional computational overhead during inference. We extensively evaluate the method on various target datasets, including a fresh-produce dataset, HRSC2016 and SSDD. Results show that the proposed method consistently performs on par with the fully supervised approach.
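
One way to picture how a rotated prediction can be supervised by an axis-aligned label is to project the rotated box onto its enclosing axis-aligned box and compare that with the annotation. The sketch below does this with an L1 distance; the abstract does not define the projection loss, so the loss form, the box parameterisation `(cx, cy, w, h, theta)`, and both function names are assumptions.

```python
import math


def enclosing_axis_aligned_box(cx, cy, w, h, theta):
    """Return (x_min, y_min, x_max, y_max) of the tightest axis-aligned box
    around a rotated box with centre (cx, cy), size (w, h), angle theta (radians)."""
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    width = w * c + h * s
    height = w * s + h * c
    return (cx - width / 2, cy - height / 2, cx + width / 2, cy + height / 2)


def l1_projection_loss(rotated_box, axis_aligned_target):
    """Illustrative loss: L1 distance between the projected box and the label."""
    projected = enclosing_axis_aligned_box(*rotated_box)
    return sum(abs(p - t) for p, t in zip(projected, axis_aligned_target))


# An unrotated 4x2 box matches its own axis-aligned label exactly.
loss_aligned = l1_projection_loss((0.0, 0.0, 4.0, 2.0, 0.0), (-2.0, -1.0, 2.0, 1.0))
# Rotating the same box by 90 degrees swaps its projected width and height.
box_rotated = enclosing_axis_aligned_box(0.0, 0.0, 4.0, 2.0, math.pi / 2)
```

Note that many rotated boxes project to the same axis-aligned box, which is why a loss of this kind alone cannot determine the angle; the abstract attributes that knowledge to the source dataset with rotated annotation.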

Retrieval Augmented Classification for Long-Tail Visual Recognition

Feb 22, 2022

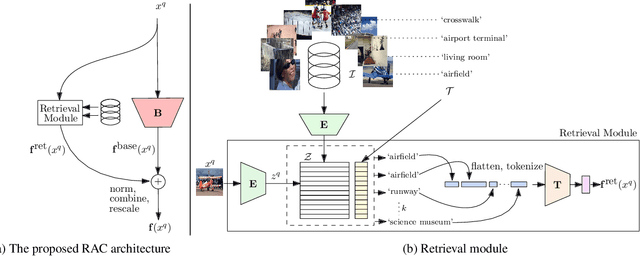

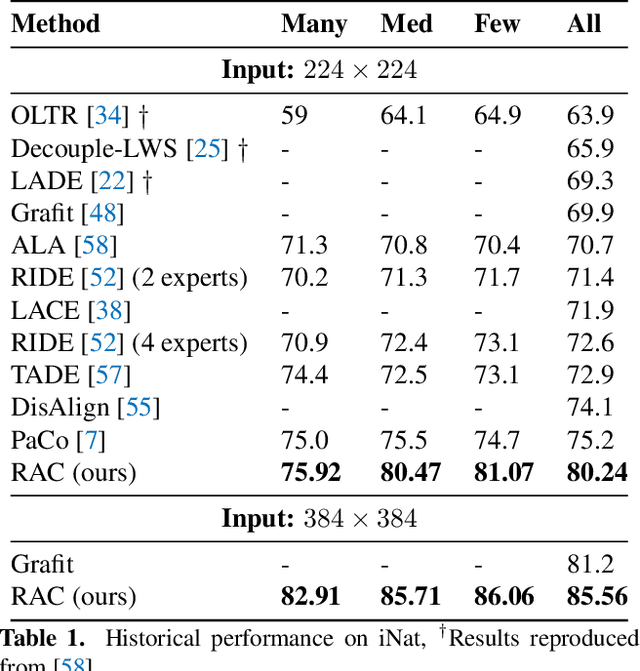

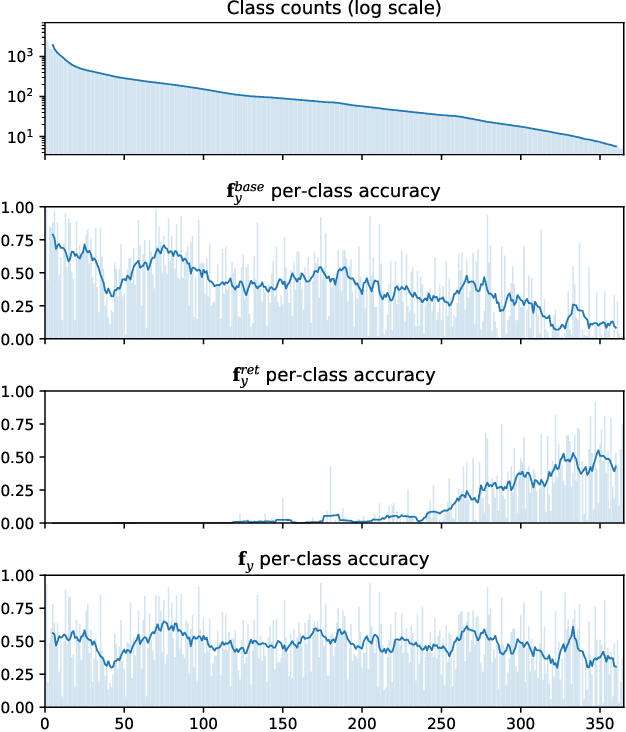

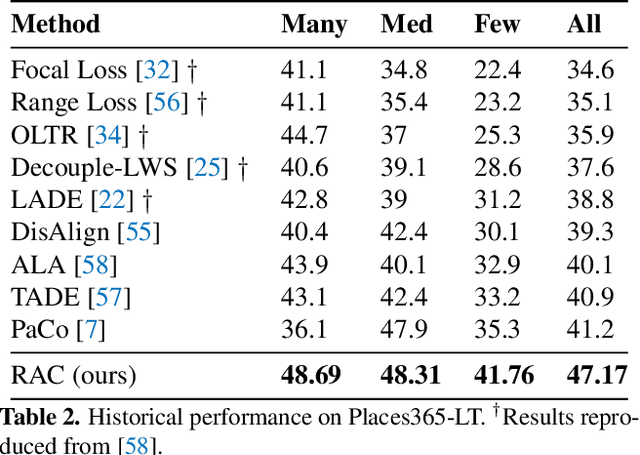

Abstract:We introduce Retrieval Augmented Classification (RAC), a generic approach to augmenting standard image classification pipelines with an explicit retrieval module. RAC consists of a standard base image encoder fused with a parallel retrieval branch that queries a non-parametric external memory of pre-encoded images and associated text snippets. We apply RAC to the problem of long-tail classification and demonstrate a significant improvement over previous state-of-the-art on Places365-LT and iNaturalist-2018 (14.5% and 6.7% respectively), despite using only the training datasets themselves as the external information source. We demonstrate that RAC's retrieval module, without prompting, learns a high level of accuracy on tail classes. This, in turn, frees the base encoder to focus on common classes, and improve its performance thereon. RAC represents an alternative approach to utilizing large, pretrained models without requiring fine-tuning, as well as a first step towards more effectively making use of external memory within common computer vision architectures.
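
The retrieval branch described above can be pictured as a nearest-neighbour lookup over pre-encoded images, returning the text snippets stored alongside them. The following is a minimal sketch under assumed details: cosine similarity as the ranking score, a memory held as a list of `(embedding, text)` pairs, and the hypothetical helper names `cosine_similarity` and `retrieve_snippets`. It does not reproduce the encoders or the fusion step of RAC.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve_snippets(query_embedding, memory, k):
    """Return the text snippets of the k memory entries most similar to the query.

    memory: list of (embedding, text) pairs, encoded ahead of time.
    """
    ranked = sorted(
        memory,
        key=lambda entry: cosine_similarity(query_embedding, entry[0]),
        reverse=True,
    )
    return [text for _, text in ranked[:k]]


toy_memory = [
    ([1.0, 0.0], "cat"),
    ([0.0, 1.0], "dog"),
    ([0.9, 0.1], "kitten"),
]
top_two = retrieve_snippets([1.0, 0.0], toy_memory, k=2)
```

In a full pipeline the retrieved snippets would be encoded and fused with the base encoder's output; a linear scan like this one would also normally be replaced by an approximate nearest-neighbour index for large memories.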

Learning to generate new indoor scenes

Dec 10, 2019

Abstract:Deep generative models have been used in recent years to learn coherent latent representations in order to synthesize high quality images. In this work we propose a neural network to learn a generative model for sampling consistent indoor scene layouts. Our method learns the co-occurrences, and appearance parameters such as shape and pose, for different object categories through a grammar-based auto-encoder, resulting in a compact and accurate representation for scene layouts. In contrast to existing grammar-based methods with a user-specified grammar, we construct the grammar automatically by extracting a set of production rules by reasoning about object co-occurrences in training data. The extracted grammar is able to represent a scene by an augmented parse tree. The proposed auto-encoder encodes these parse trees to a latent code, and decodes the latent code to a parse tree, thereby ensuring the generated scene is always valid. We experimentally demonstrate that the proposed auto-encoder learns not only to generate valid scenes (i.e. the arrangements and appearances of objects), but also coherent latent representations where nearby latent samples decode to similar scene outputs. The obtained generative model is applicable to several computer vision tasks such as 3D pose and layout estimation from RGB-D data.

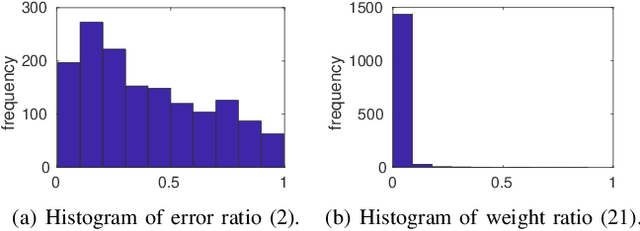

NeuRoRA: Neural Robust Rotation Averaging

Dec 10, 2019

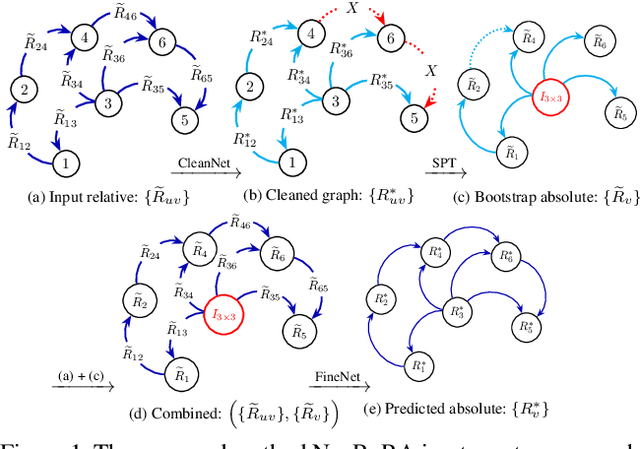

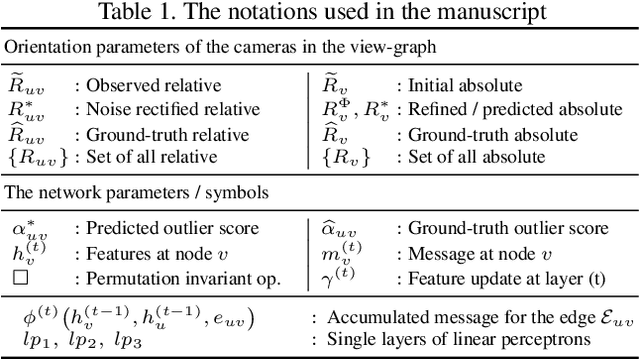

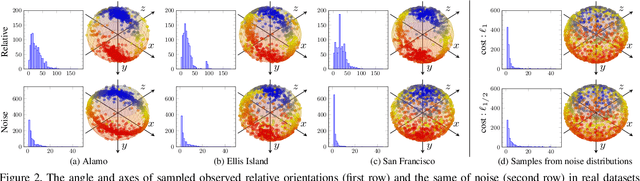

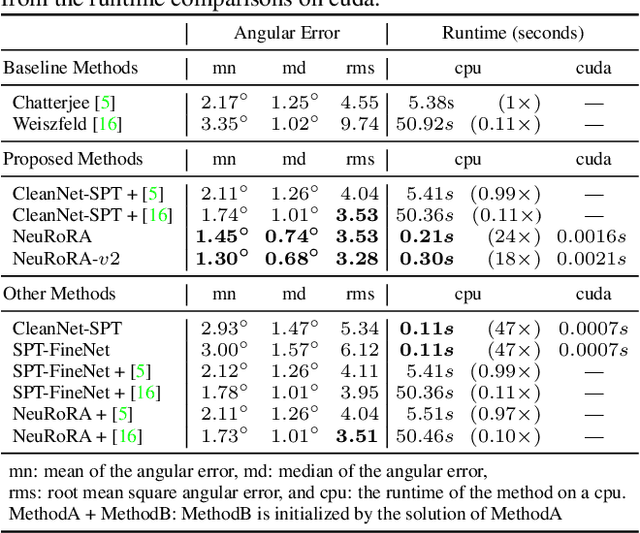

Abstract:Multiple rotation averaging is an essential task for structure from motion, mapping, and robot navigation. The task is to estimate the absolute orientations of several cameras given some of their noisy relative orientation measurements. The conventional methods for this task seek parameters of the absolute orientations that agree best with the observed noisy measurements according to a robust cost function. These robust cost functions are highly nonlinear and are designed based on certain assumptions about the noise and outlier distributions. In this work, we aim to build a neural network that learns the noise patterns from the data and predicts/regresses the model parameters from the noisy relative orientations. The proposed network is a combination of two networks: (1) a view-graph cleaning network, which detects outlier edges in the view-graph and rectifies noisy measurements; and (2) a fine-tuning network, which fine-tunes an initialization of absolute orientations bootstrapped from the cleaned graph, in a single step. The proposed combined network is very fast; moreover, being trained on a large number of synthetic graphs, it is more accurate than the conventional iterative optimization methods. Although the idea of replacing robust optimization methods by a graph-based network is demonstrated only for multiple rotation averaging, it could easily be extended to other graph-based geometric problems, for example, pose-graph optimization.
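
To make the problem setup concrete, the sketch below computes the angular disagreement between one measured relative orientation and the pair of absolute orientations it connects, which is the per-edge quantity that conventional rotation averaging penalises with a robust cost. The convention `R_ij = R_j R_i^T` and all helper names are assumptions chosen for illustration; the sketch does not implement NeuRoRA's networks.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a):
    """Transpose of a 3x3 matrix (the inverse, for a rotation)."""
    return [list(row) for row in zip(*a)]


def rot_z(angle):
    """Rotation by `angle` radians about the z-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rotation_angle(r):
    """Angle of a rotation matrix, recovered from its trace."""
    cos_angle = (r[0][0] + r[1][1] + r[2][2] - 1.0) / 2.0
    return math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp against rounding


def edge_residual(r_i, r_j, r_ij_measured):
    """Angular error (radians) between the measured relative rotation and
    the one implied by the absolute rotations, assuming R_ij = R_j R_i^T."""
    predicted = matmul(r_j, transpose(r_i))
    return rotation_angle(matmul(transpose(r_ij_measured), predicted))


consistent = edge_residual(rot_z(0.1), rot_z(0.4), rot_z(0.3))
noisy = edge_residual(rot_z(0.1), rot_z(0.4), rot_z(0.5))
```

Here `consistent` is close to zero because the measurement agrees with the absolute orientations, while `noisy` is close to 0.2 radians; an outlier edge in a view-graph would show up as a large residual of this kind.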

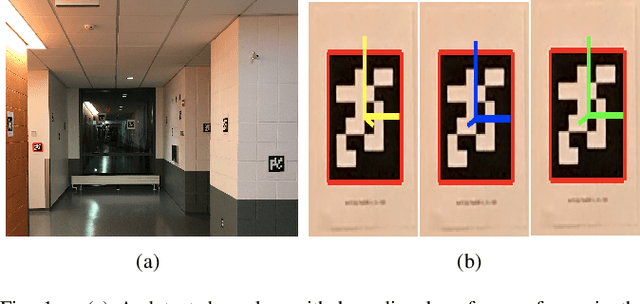

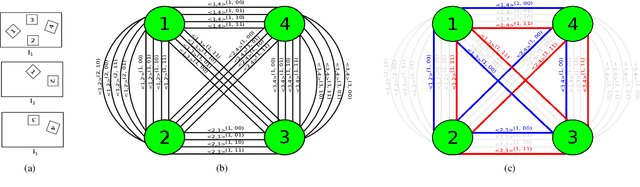

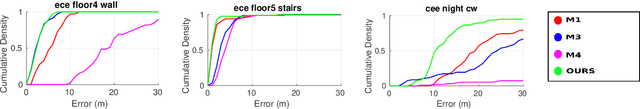

Resolving Marker Pose Ambiguity by Robust Rotation Averaging with Clique Constraints

Sep 26, 2019

Abstract:Planar markers are useful in robotics and computer vision for mapping and localisation. Given a detected marker in an image, a frequent task is to estimate the 6DOF pose of the marker relative to the camera, which is an instance of planar pose estimation (PPE). Although there are mature techniques, PPE suffers from a fundamental ambiguity problem, in that there can be more than one plausible pose solution for a PPE instance. Especially when localisation of the marker corners is noisy, it is often difficult to disambiguate the pose solutions based on reprojection error alone. Previous methods choose between the possible solutions using heuristic criteria, or simply ignore ambiguous markers. We propose to resolve the ambiguities by examining the consistencies of a set of markers across multiple views. Our specific contributions include a novel rotation averaging formulation that incorporates long-range dependencies between possible marker orientation solutions that arise from PPE ambiguities. We analyse the combinatorial complexity of the problem, and develop a novel lifted algorithm to effectively resolve marker pose ambiguities, without discarding any marker observations. Results on real and synthetic data show that our method is able to handle highly ambiguous inputs, and provides more accurate and/or complete marker-based mapping and localisation.

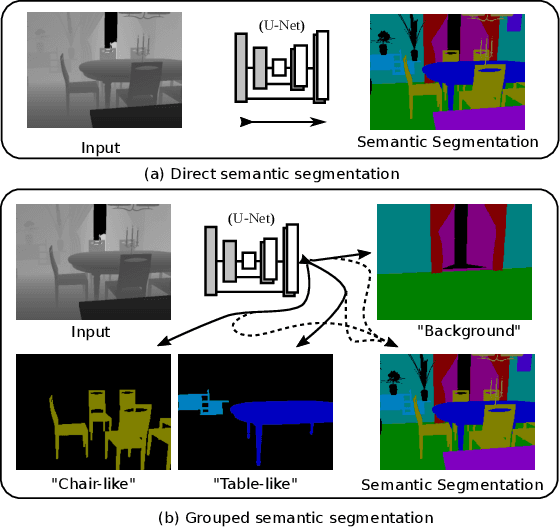

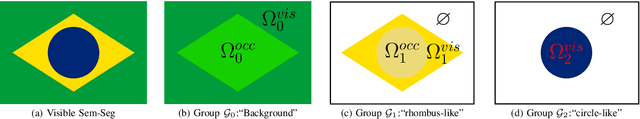

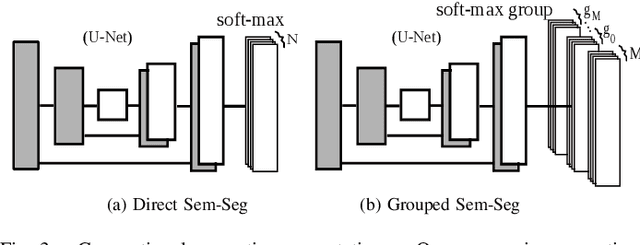

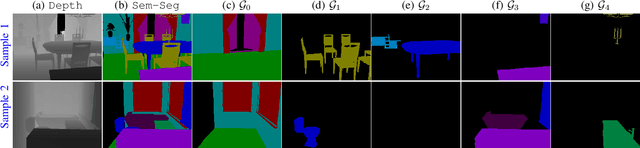

Seeing Behind Things: Extending Semantic Segmentation to Occluded Regions

Jun 07, 2019

Abstract:Semantic segmentation and instance level segmentation have made substantial progress in recent years due to the emergence of deep neural networks (DNNs). A number of deep architectures with Convolutional Neural Networks (CNNs) have been proposed that surpass the traditional machine learning approaches for segmentation by a large margin. These architectures predict the directly observable semantic category of each pixel, usually by optimizing a cross-entropy loss. In this work we push the limit of semantic segmentation towards predicting semantic labels of directly visible as well as occluded objects or object parts, where the network's input is a single depth image. We group the semantic categories into one background and multiple foreground object groups, and we propose a modification of the standard cross-entropy loss to cope with this setting. In our experiments we demonstrate that a CNN trained by minimizing the proposed loss is able to predict semantic categories for visible and occluded object parts without requiring an increase in network size (compared to a standard segmentation task). The results are validated on a newly generated dataset (augmented from SUNCG).

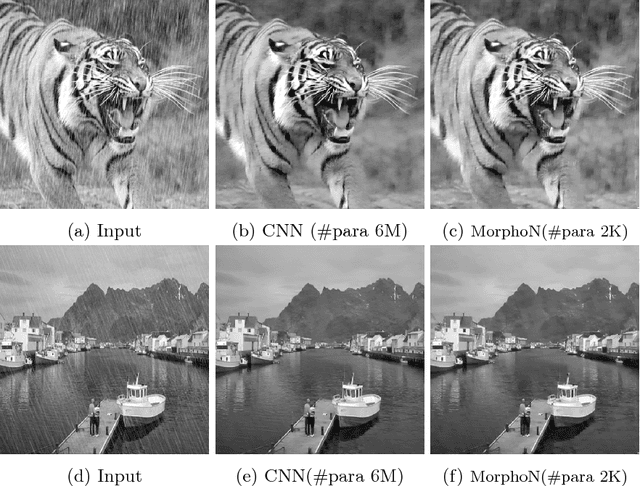

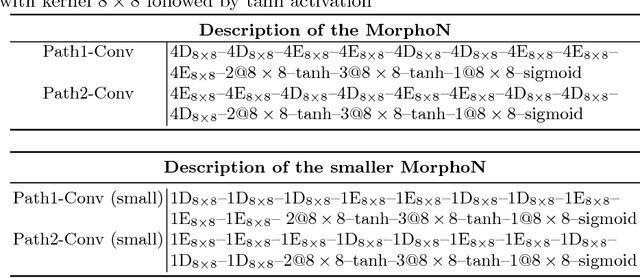

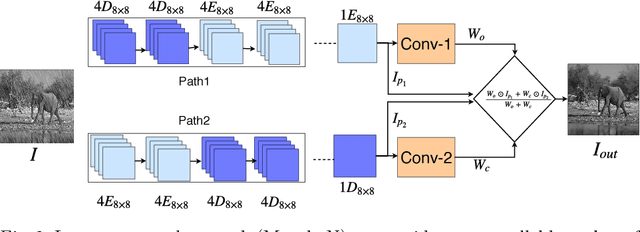

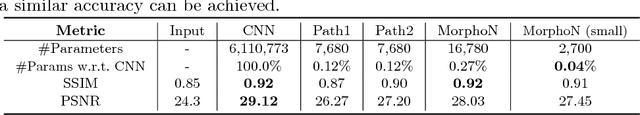

Morphological Networks for Image De-raining

Jan 08, 2019

Abstract:Mathematical morphological methods have successfully been applied to filter out (emphasize or remove) different structures of an image. However, it is argued that these methods are suitable for the task only if the type and order of the filter(s) as well as the shape and size of the operator kernel are designed properly. Thus the existing filtering operators are problem (instance) specific and are designed by domain experts. In this work we propose a morphological network that emulates classical morphological filtering, consisting of a series of erosion and dilation operators with trainable structuring elements. We evaluate the proposed network on the image de-raining task, where SSIM and mean absolute error (MAE) losses between the predicted and ground-truth clean images are back-propagated through the network to train the structuring elements. We observe that a single morphological network can de-rain an image with arbitrarily shaped rain droplets and achieves performance similar to contemporary CNNs for this task with a fraction of the trainable parameters (network size). The proposed morphological network (MorphoN) is not designed specifically for de-raining and can readily be applied to similar filtering / noise cleaning tasks. The source code can be found at https://github.com/ranjanZ/2D-Morphological-Network

* Mathematical Morphology, Optimization, Morphological Network, Image Filtering
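
For readers unfamiliar with the operators named in the abstract above, the sketch below implements grayscale dilation and erosion on a 1D signal with an additive structuring element. It is a simplified stand-in, assuming 1D data, an odd-length structuring element, and out-of-range samples being ignored at the borders; the function names are illustrative and no training is shown. In the network described above the structuring element values would be the trainable parameters.

```python
def dilate(signal, structuring_element):
    """Grayscale dilation: max over the window of signal[i - offset] + se[offset]."""
    radius = len(structuring_element) // 2
    out = []
    for i in range(len(signal)):
        candidates = [
            signal[i - (k - radius)] + structuring_element[k]
            for k in range(len(structuring_element))
            if 0 <= i - (k - radius) < len(signal)  # skip samples outside the signal
        ]
        out.append(max(candidates))
    return out


def erode(signal, structuring_element):
    """Grayscale erosion: min over the window of signal[i + offset] - se[offset]."""
    radius = len(structuring_element) // 2
    out = []
    for i in range(len(signal)):
        candidates = [
            signal[i + (k - radius)] - structuring_element[k]
            for k in range(len(structuring_element))
            if 0 <= i + (k - radius) < len(signal)
        ]
        out.append(min(candidates))
    return out


spike = [0, 0, 5, 0, 0]   # a thin bright structure on a flat background
flat_se = [0, 0, 0]       # flat structuring element of width 3
dilated = dilate(spike, flat_se)
opened = dilate(erode(spike, flat_se), flat_se)  # opening = erosion then dilation
```

With a flat element, dilation widens the spike while opening removes it entirely, which is the kind of behaviour that makes chained erosions and dilations a plausible tool for suppressing thin bright structures.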

Weakly supervised learning of indoor geometry by dual warping

Aug 10, 2018

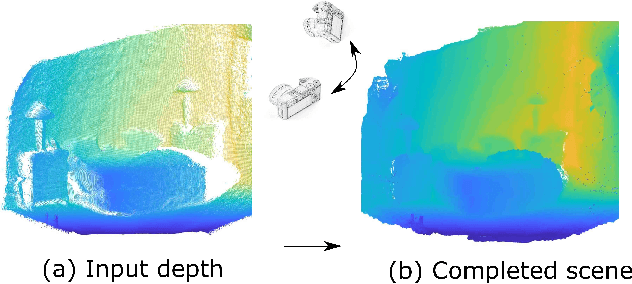

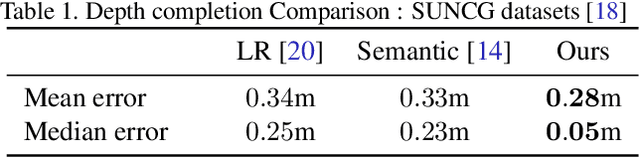

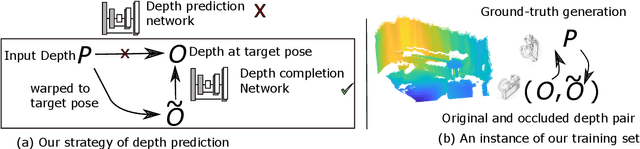

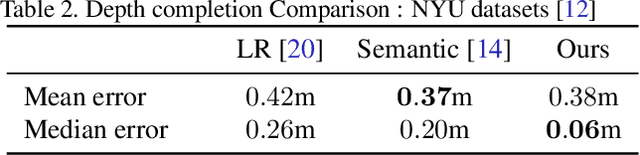

Abstract:A major element of depth perception and 3D understanding is the ability to predict the 3D layout of a scene and its contained objects for a novel pose. Indoor environments are particularly suitable for novel view prediction, since the set of objects in such environments is relatively restricted. In this work we address the task of 3D prediction, especially for indoor scenes, by leveraging only weak supervision. In the literature, 3D scene prediction is usually solved via a 3D voxel grid. However, such methods are limited to estimating rather coarse 3D voxel grids, since predicting entire voxel spaces has large computational costs. Hence, our method operates in image space rather than in voxel space, and the task of 3D estimation essentially becomes a depth image completion problem. We propose a novel approach to easily generate training data containing depth maps with realistic occlusions, and subsequently train a network for completing those occluded regions. Using multiple publicly available datasets [song2017semantic, Silberman:ECCV12], we benchmark our method against existing approaches and are able to obtain superior performance. We further demonstrate the flexibility of our method by presenting results for new view synthesis of RGB-D images.

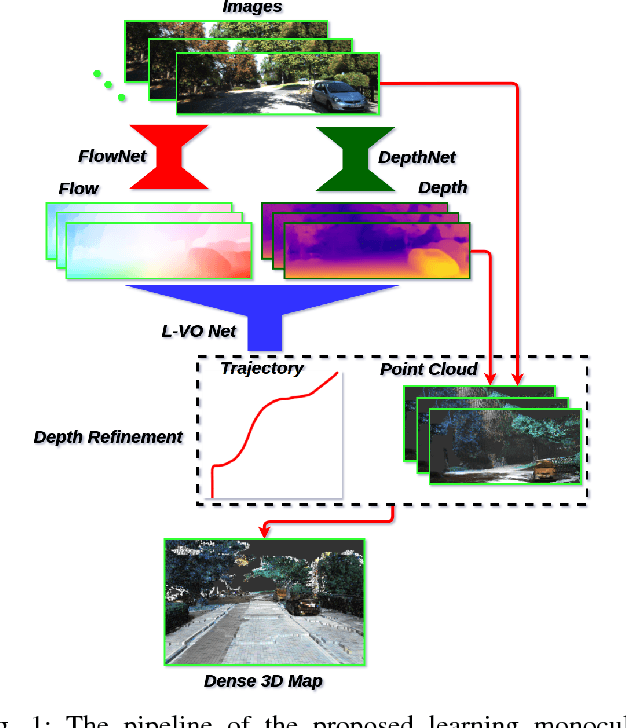

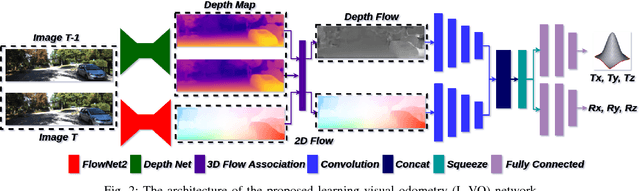

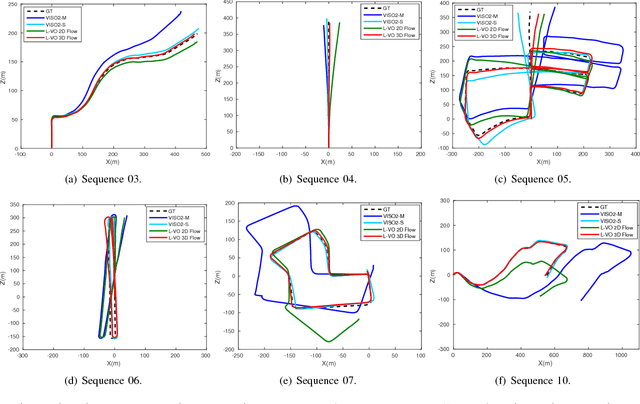

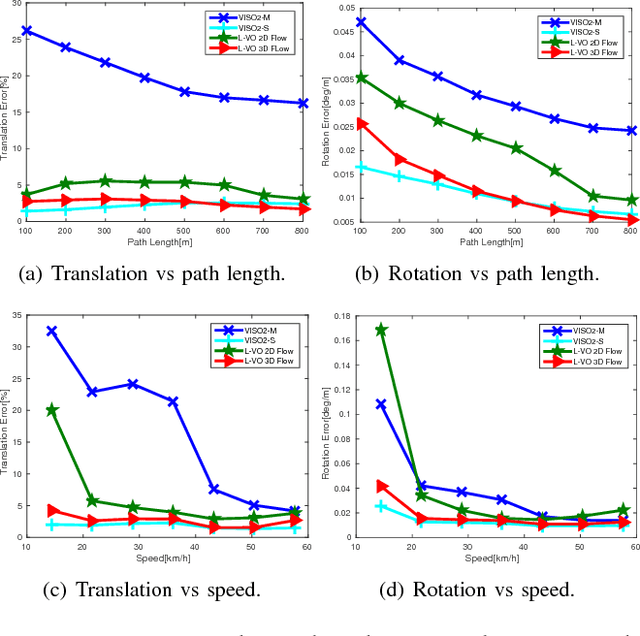

Learning monocular visual odometry with dense 3D mapping from dense 3D flow

Jul 25, 2018

Abstract:This paper introduces a fully deep learning approach to monocular SLAM, which can perform simultaneous localization using a neural network for learning visual odometry (L-VO) and dense 3D mapping. Dense 2D flow and a depth image are generated from monocular images by sub-networks, which are then used by a 3D flow associated layer in the L-VO network to generate dense 3D flow. Given this 3D flow, the dual-stream L-VO network can then predict the 6DOF relative pose and furthermore reconstruct the vehicle trajectory. In order to learn the correlation between motion directions, bivariate Gaussian modelling is employed in the loss function. The L-VO network achieves an average translational error of 2.68% and an average rotational error of 0.0143 deg/m on the KITTI odometry benchmark. Moreover, the learned depth is fully leveraged to generate a dense 3D map. As a result, an entire visual SLAM system, that is, learning monocular odometry combined with dense 3D mapping, is achieved.

* International Conference on Intelligent Robots and Systems (IROS 2018)
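
The abstract above mentions bivariate Gaussian modelling in the loss to capture correlation between motion directions. A generic form of such a loss is the negative log-likelihood of a 2D residual under a bivariate Gaussian with a correlation coefficient, sketched below. Which motion components are paired, and how the network parameterises the standard deviations and correlation, are not stated in the abstract, so the function name and arguments here are assumptions.

```python
import math


def bivariate_gaussian_nll(dx, dy, sigma_x, sigma_y, rho):
    """Negative log-likelihood of the residual (dx, dy) under a zero-mean
    bivariate Gaussian with standard deviations sigma_x, sigma_y (> 0)
    and correlation rho (strictly between -1 and 1)."""
    one_minus_rho_sq = 1.0 - rho * rho
    z = (
        (dx / sigma_x) ** 2
        + (dy / sigma_y) ** 2
        - 2.0 * rho * dx * dy / (sigma_x * sigma_y)
    )
    log_normaliser = math.log(
        2.0 * math.pi * sigma_x * sigma_y * math.sqrt(one_minus_rho_sq)
    )
    return log_normaliser + z / (2.0 * one_minus_rho_sq)


# Residuals that err in the same direction are better explained
# by a positive correlation than by independence.
nll_independent = bivariate_gaussian_nll(1.0, 1.0, 1.0, 1.0, rho=0.0)
nll_correlated = bivariate_gaussian_nll(1.0, 1.0, 1.0, 1.0, rho=0.5)
```

Minimising a loss of this form lets the predicted correlation adapt to how errors in the two components co-vary, rather than treating them as independent.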
