Alexander Long

AsyncMesh: Fully Asynchronous Optimization for Data and Pipeline Parallelism

Jan 30, 2026

Abstract:Data and pipeline parallelism are key strategies for scaling neural network training across distributed devices, but their high communication cost necessitates co-located computing clusters with fast interconnects, limiting their scalability. We address this communication bottleneck by introducing asynchronous updates across both parallelism axes, relaxing the co-location requirement at the expense of introducing staleness between pipeline stages and data parallel replicas. To mitigate staleness, for pipeline parallelism, we adopt a weight look-ahead approach, and for data parallelism, we introduce an asynchronous sparse averaging method equipped with an exponential moving average based correction mechanism. We provide convergence guarantees for both sparse averaging and asynchronous updates. Experiments on large-scale language models (up to 1B parameters) demonstrate that our approach matches the performance of the fully synchronous baseline, while significantly reducing communication overhead.

Nesterov Method for Asynchronous Pipeline Parallel Optimization

May 02, 2025

Abstract:Pipeline Parallelism (PP) enables large neural network training on small, interconnected devices by splitting the model into multiple stages. To maximize pipeline utilization, asynchronous optimization is appealing as it offers 100% pipeline utilization by construction. However, it is inherently challenging as the weights and gradients are no longer synchronized, leading to stale (or delayed) gradients. To alleviate this, we introduce a variant of Nesterov Accelerated Gradient (NAG) for asynchronous optimization in PP. Specifically, we modify the look-ahead step in NAG to effectively address the staleness in gradients. We theoretically prove that our approach converges at a sublinear rate in the presence of fixed delay in gradients. Our experiments on large-scale language modelling tasks using decoder-only architectures with up to 1B parameters demonstrate that our approach significantly outperforms existing asynchronous methods, even surpassing the synchronous baseline.
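
To make the "fixed delay in gradients" setting concrete, the following is a minimal sketch of standard Nesterov momentum on a one-dimensional quadratic, where the gradient applied at each step was computed at the look-ahead point from `delay` steps earlier. It is not the modified look-ahead proposed in the paper; the function name `delayed_nag`, the toy objective, and all hyperparameter values are assumptions chosen only for illustration.

```python
from collections import deque


def delayed_nag(grad, w0, lr=0.05, momentum=0.5, delay=2, steps=300):
    """Standard NAG where each applied gradient is `delay` steps stale.

    Illustrative only: this is plain NAG under a fixed delay, not the
    paper's variant. All names and defaults here are hypothetical.
    """
    w = float(w0)
    v = 0.0
    pending = deque()  # gradients that are still "in flight"
    for _ in range(steps):
        # Evaluate the gradient at the usual NAG look-ahead point.
        lookahead = w + momentum * v
        pending.append(grad(lookahead))
        # Apply a gradient only once it is `delay` steps old.
        if len(pending) > delay:
            stale_grad = pending.popleft()
            v = momentum * v - lr * stale_grad
            w = w + v
    return w


# Toy objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_final = delayed_nag(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

With these small step sizes the stale updates still settle at the minimizer `w = 3`; larger learning rates or delays can make the same loop oscillate or diverge, which is the kind of instability a staleness-aware look-ahead is meant to counter.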

Protocol Learning, Decentralized Frontier Risk and the No-Off Problem

Dec 10, 2024

Abstract:Frontier models are currently developed and distributed primarily through two channels: centralized proprietary APIs or open-sourcing of pre-trained weights. We identify a third paradigm - Protocol Learning - where models are trained across decentralized networks of incentivized participants. This approach has the potential to aggregate orders of magnitude more computational resources than any single centralized entity, enabling unprecedented model scales and capabilities. However, it also introduces novel challenges: heterogeneous and unreliable nodes, malicious participants, the need for unextractable models to preserve incentives, and complex governance dynamics. To date, no systematic analysis has been conducted to assess the feasibility of Protocol Learning or the associated risks, particularly the 'No-Off Problem' arising from the inability to unilaterally halt a collectively trained model. We survey recent technical advances that suggest decentralized training may be feasible - covering emerging communication-efficient strategies and fault-tolerant methods - while highlighting critical open problems that remain. Contrary to the notion that decentralization inherently amplifies frontier risks, we argue that Protocol Learning's transparency, distributed governance, and democratized access ultimately reduce these risks compared to today's centralized regimes.

A Sampling Theory Perspective on Activations for Implicit Neural Representations

Feb 08, 2024

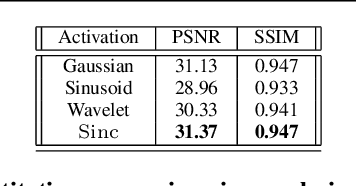

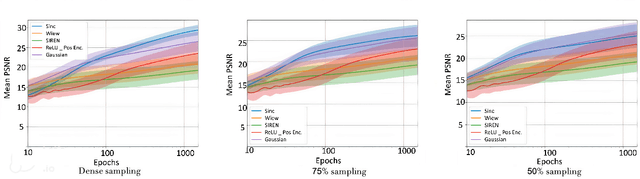

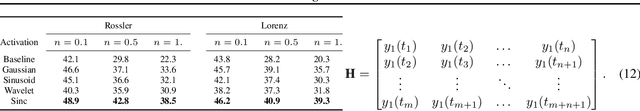

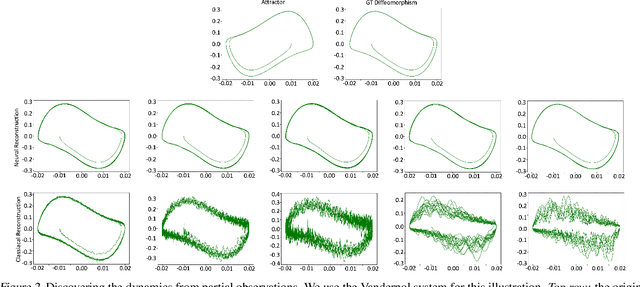

Abstract:Implicit Neural Representations (INRs) have gained popularity for encoding signals as compact, differentiable entities. While commonly using techniques like Fourier positional encodings or non-traditional activation functions (e.g., Gaussian, sinusoid, or wavelets) to capture high-frequency content, their properties lack exploration within a unified theoretical framework. Addressing this gap, we conduct a comprehensive analysis of these activations from a sampling theory perspective. Our investigation reveals that sinc activations, previously unused in conjunction with INRs, are theoretically optimal for signal encoding. Additionally, we establish a connection between dynamical systems and INRs, leveraging sampling theory to bridge these two paradigms.
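
As a rough illustration of what a sinc activation looks like in an INR layer, here is a minimal sketch of one fully connected layer followed by sinc. The abstract does not specify the exact form used, so the unnormalized definition `sin(x)/x`, the frequency scale `omega`, and the helper names `sinc` and `sinc_layer` are all assumptions.

```python
import math


def sinc(x: float) -> float:
    """Unnormalized sinc, sin(x)/x, with the limit value 1 at x = 0.

    Assumption: the normalized form sin(pi x)/(pi x) would be equally
    plausible; the source does not say which one is used.
    """
    if abs(x) < 1e-12:
        return 1.0
    return math.sin(x) / x


def sinc_layer(inputs, weights, biases, omega=1.0):
    """One dense layer followed by a sinc activation.

    `omega` is a hypothetical frequency scale applied to the
    pre-activation before sinc.
    """
    outputs = []
    for row, bias in zip(weights, biases):
        pre_activation = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(sinc(omega * pre_activation))
    return outputs


# A 1-D coordinate passed through a layer with two hidden units.
hidden = sinc_layer([1.0], weights=[[0.0], [math.pi]], biases=[0.0, 0.0])
```

The first unit has a zero pre-activation and so outputs 1, while the second lands on a zero crossing of sinc at `x = pi` and outputs a value that is zero up to floating-point error.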

Fast and Data Efficient Reinforcement Learning from Pixels via Non-Parametric Value Approximation

Mar 07, 2022

Abstract:We present Nonparametric Approximation of Inter-Trace returns (NAIT), a Reinforcement Learning algorithm for discrete action, pixel-based environments that is both highly sample and computation efficient. NAIT is a lazy-learning approach with an update that is equivalent to episodic Monte-Carlo on episode completion, but that allows the stable incorporation of rewards while an episode is ongoing. We make use of a fixed domain-agnostic representation, simple distance based exploration and a proximity graph-based lookup to facilitate extremely fast execution. We empirically evaluate NAIT on both the 26 and 57 game variants of ATARI100k where, despite its simplicity, it achieves competitive performance in the online setting with greater than 100x speedup in wall-time.

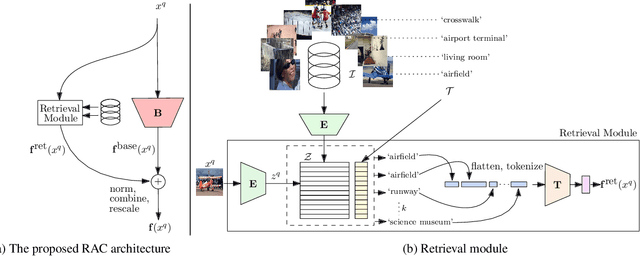

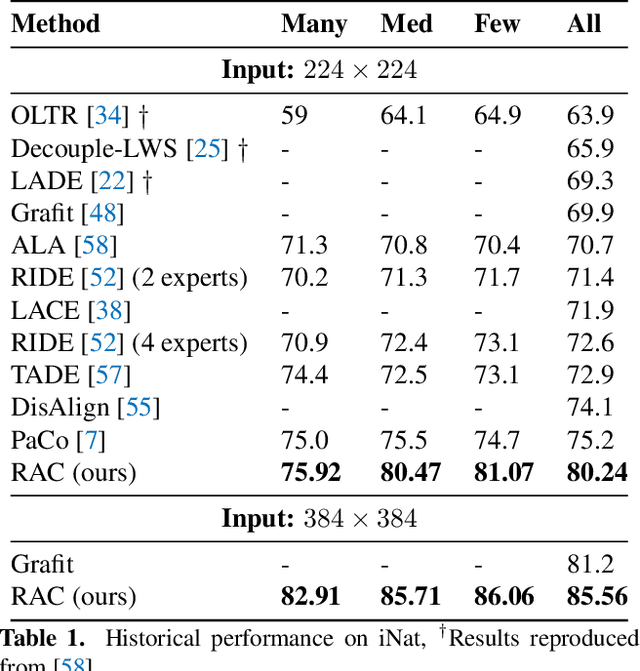

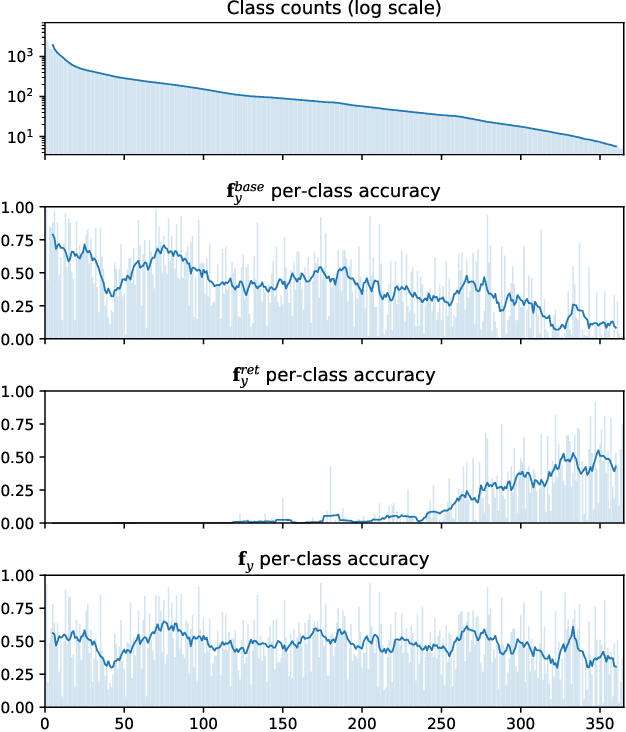

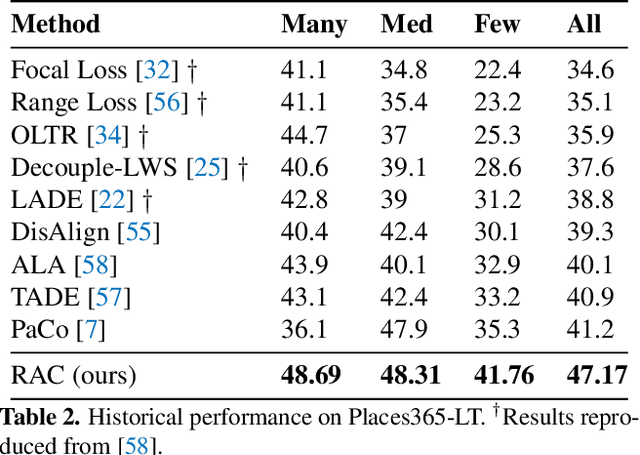

Retrieval Augmented Classification for Long-Tail Visual Recognition

Feb 22, 2022

Abstract:We introduce Retrieval Augmented Classification (RAC), a generic approach to augmenting standard image classification pipelines with an explicit retrieval module. RAC consists of a standard base image encoder fused with a parallel retrieval branch that queries a non-parametric external memory of pre-encoded images and associated text snippets. We apply RAC to the problem of long-tail classification and demonstrate a significant improvement over previous state-of-the-art on Places365-LT and iNaturalist-2018 (14.5% and 6.7% respectively), despite using only the training datasets themselves as the external information source. We demonstrate that RAC's retrieval module, without prompting, learns a high level of accuracy on tail classes. This, in turn, frees the base encoder to focus on common classes, and improve its performance thereon. RAC represents an alternative approach to utilizing large, pretrained models without requiring fine-tuning, as well as a first step towards more effectively making use of external memory within common computer vision architectures.
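
The idea of fusing a base classifier with a retrieval branch over an external memory can be sketched as follows. This is a simplified illustration, not RAC itself: the memory here stores (embedding, label) pairs rather than images with text snippets, retrieval is a plain cosine top-k vote, and fusion by adding logits is an assumption. The names `cosine`, `retrieval_logits`, and `fused_prediction` are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieval_logits(query, memory, num_classes, k=2):
    """Score classes by similarity-weighted votes from the top-k neighbors."""
    neighbors = sorted(memory, key=lambda item: cosine(query, item[0]),
                       reverse=True)[:k]
    logits = [0.0] * num_classes
    for embedding, label in neighbors:
        logits[label] += cosine(query, embedding)
    return logits


def fused_prediction(base_logits, query, memory, k=2):
    """Add retrieval logits to base logits (assumed fusion) and take argmax."""
    extra = retrieval_logits(query, memory, len(base_logits), k)
    fused = [b + r for b, r in zip(base_logits, extra)]
    return max(range(len(fused)), key=fused.__getitem__)


# Toy memory: class 0 plays the role of a head class, class 1 a tail class.
memory = [([1.0, 0.0], 0), ([0.9, 0.1], 0), ([0.0, 1.0], 1), ([0.1, 0.9], 1)]
base_logits = [0.6, 0.0]  # the base encoder alone prefers the head class
prediction = fused_prediction(base_logits, query=[0.05, 1.0], memory=memory)
```

In this toy setup the base logits alone would pick class 0, but both retrieved neighbors belong to class 1, so the fused prediction switches to the tail class.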
