Minsu Kim

Synthesizable Molecular Generation via Soft-constrained GFlowNets with Rich Chemical Priors

Feb 04, 2026

Abstract: The application of generative models for experimental drug discovery campaigns is severely limited by the difficulty of designing molecules de novo that can be synthesized in practice. Previous works have leveraged Generative Flow Networks (GFlowNets) to impose hard synthesizability constraints through the design of state and action spaces based on predefined reaction templates and building blocks. Despite the promising prospects of this approach, it currently lacks flexibility and scalability. As an alternative, we propose S3-GFN, which generates synthesizable SMILES molecules via simple soft regularization of a sequence-based GFlowNet. Our approach leverages rich molecular priors learned from large-scale SMILES corpora to steer molecular generation towards high-reward, synthesizable chemical spaces. The model induces constraints through off-policy replay training with a contrastive learning signal based on separate buffers of synthesizable and unsynthesizable samples. Our experiments show that S3-GFN learns to generate synthesizable molecules ($\geq 95\%$) with higher rewards in diverse tasks.

GFlowPO: Generative Flow Network as a Language Model Prompt Optimizer

Feb 03, 2026

Abstract: Finding effective prompts for language models (LMs) is critical yet notoriously difficult: the prompt space is combinatorially large, and rewards are sparse because target-LM evaluation is expensive. In addition, existing RL-based prompt optimizers often rely on on-policy updates and a meta-prompt sampled from a fixed distribution, leading to poor sample efficiency. We propose GFlowPO, a probabilistic prompt optimization framework that casts prompt search as a posterior inference problem over latent prompts regularized by a meta-prompted reference-LM prior. In the first step, we fine-tune a lightweight prompt-LM with an off-policy Generative Flow Network (GFlowNet) objective, using a replay-based training policy that reuses past prompt evaluations to enable sample-efficient exploration. In the second step, we introduce Dynamic Memory Update (DMU), a training-free mechanism that updates the meta-prompt by injecting both (i) diverse prompts from a replay buffer and (ii) top-performing prompts from a small priority queue, thereby progressively concentrating the search process on high-reward regions. Across few-shot text classification, instruction induction benchmarks, and question answering tasks, GFlowPO consistently outperforms recent discrete prompt optimization baselines.

A generalizable large-scale foundation model for musculoskeletal radiographs

Feb 03, 2026

Abstract: Artificial intelligence (AI) has shown promise in detecting and characterizing musculoskeletal diseases from radiographs. However, most existing models remain task-specific, annotation-dependent, and limited in generalizability across diseases and anatomical regions. Although a generalizable foundation model trained on large-scale musculoskeletal radiographs is clinically needed, publicly available datasets remain limited in size and lack sufficient diversity to enable training across a wide range of musculoskeletal conditions and anatomical sites. Here, we present SKELEX, a large-scale foundation model for musculoskeletal radiographs, trained using self-supervised learning on 1.2 million diverse, condition-rich images. The model was evaluated on 12 downstream diagnostic tasks and generally outperformed baselines in fracture detection, osteoarthritis grading, and bone tumor classification. Furthermore, SKELEX demonstrated zero-shot abnormality localization, producing error maps that identified pathologic regions without task-specific training. Building on this capability, we developed an interpretable, region-guided model for predicting bone tumors, which maintained robust performance on independent external datasets and was deployed as a publicly accessible web application. Overall, SKELEX provides a scalable, label-efficient, and generalizable AI framework for musculoskeletal imaging, establishing a foundation for both clinical translation and data-efficient research in musculoskeletal radiology.

Inlier-Centric Post-Training Quantization for Object Detection Models

Feb 03, 2026

Abstract: Object detection is pivotal in computer vision, yet its immense computational demands make deployment slow and power-hungry, motivating quantization. However, task-irrelevant morphologies such as background clutter and sensor noise induce redundant activations (or anomalies). These anomalies expand activation ranges and skew activation distributions toward task-irrelevant responses, complicating bit allocation and weakening the preservation of informative features. Without a clear criterion to distinguish anomalies, suppressing them can inadvertently discard useful information. To address this, we present InlierQ, an inlier-centric post-training quantization approach that separates anomalies from informative inliers. InlierQ computes gradient-aware volume saliency scores, classifies each volume as an inlier or anomaly, and fits a posterior distribution over these scores using the Expectation-Maximization (EM) algorithm. This design suppresses anomalies while preserving informative features. InlierQ is label-free, drop-in, and requires only 64 calibration samples. Experiments on the COCO and nuScenes benchmarks show consistent reductions in quantization error for camera-based (2D and 3D) and LiDAR-based (3D) object detection.

Efficient Domain Generalization in Wireless Networks with Scarce Multi-Modal Data

Oct 05, 2025

Abstract: In 6G wireless networks, multi-modal ML models can be leveraged to enable situation-aware network decisions in dynamic environments. However, trained ML models often fail to generalize under domain shifts, when training and test data distributions differ, because they often focus on modality-specific spurious features. In practical wireless systems, domain shifts occur frequently due to dynamic channel statistics, moving obstacles, or hardware configuration. Thus, there is a need for learning frameworks that can achieve robust generalization under scarce multi-modal data in wireless networks. In this paper, a novel and data-efficient two-phase learning framework is proposed to improve generalization performance in unseen and unfamiliar wireless environments with a minimal amount of multi-modal data. In the first stage, a physics-based loss function is employed to enable each BS to learn the physics underlying its wireless environment captured by multi-modal data. The data efficiency of the physics-based loss function is analytically investigated. In the second stage, collaborative domain adaptation is proposed to leverage the wireless environment knowledge of multiple BSs to guide under-performing BSs under domain shift. Specifically, domain-similarity-aware model aggregation is proposed to utilize the knowledge of BSs that experienced similar domains. To validate the proposed framework, a new dataset generation framework is developed by integrating CARLA and MATLAB-based mmWave channel modeling to predict mmWave RSS. Simulation results show that the proposed physics-based training requires only 13% of data samples to achieve the same performance as a state-of-the-art baseline that does not use physics-based training. Moreover, the proposed collaborative domain adaptation needs only 25% of data samples and 20% of FLOPs to reach convergence compared to baselines.

Diffusion Alignment as Variational Expectation-Maximization

Oct 01, 2025

Abstract: Diffusion alignment aims to optimize diffusion models for a downstream objective. While existing methods based on reinforcement learning or direct backpropagation achieve considerable success in maximizing rewards, they often suffer from reward over-optimization and mode collapse. We introduce Diffusion Alignment as Variational Expectation-Maximization (DAV), a framework that formulates diffusion alignment as an iterative process alternating between two complementary phases: the E-step and the M-step. In the E-step, we employ test-time search to generate diverse and reward-aligned samples. In the M-step, we refine the diffusion model using samples discovered by the E-step. We demonstrate that DAV can optimize reward while preserving diversity for both continuous and discrete tasks: text-to-image synthesis and DNA sequence design.

Active Attacks: Red-teaming LLMs via Adaptive Environments

Sep 26, 2025

Abstract: We address the challenge of generating diverse attack prompts for large language models (LLMs) that elicit harmful behaviors (e.g., insults, sexual content) and are used for safety fine-tuning. Rather than relying on manual prompt engineering, attacker LLMs can be trained with reinforcement learning (RL) to automatically generate such prompts using only a toxicity classifier as a reward. However, capturing a wide range of harmful behaviors is a significant challenge that requires explicit diversity objectives. Existing diversity-seeking RL methods often collapse to limited modes: once high-reward prompts are found, exploration of new regions is discouraged. Inspired by the active learning paradigm that encourages adaptive exploration, we introduce Active Attacks, a novel RL-based red-teaming algorithm that adapts its attacks as the victim evolves. By periodically safety fine-tuning the victim LLM with collected attack prompts, rewards in exploited regions diminish, which forces the attacker to seek unexplored vulnerabilities. This process naturally induces an easy-to-hard exploration curriculum, where the attacker progresses beyond easy modes toward increasingly difficult ones. As a result, Active Attacks uncovers a wide range of local attack modes step by step, and their combination achieves wide coverage of the multi-mode distribution. Active Attacks, a simple plug-and-play module that seamlessly integrates into existing RL objectives, unexpectedly outperformed prior RL-based methods (including GFlowNets, PPO, and REINFORCE) by improving cross-attack success rates against GFlowNets, the previous state-of-the-art, from 0.07% to 31.28% (a relative gain greater than $400\times$) with only a 6% increase in computation. Our code is publicly available at https://github.com/dbsxodud-11/active_attacks.
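
The relative gain quoted in this abstract can be checked from the two success rates it reports. The snippet below is only an arithmetic sanity check; the variable names are illustrative and do not come from the paper or its code.

```python
# Cross-attack success rates quoted in the abstract, in percent.
baseline_rate = 0.07   # previous state-of-the-art (GFlowNets)
active_rate = 31.28    # with Active Attacks

# Relative gain is the ratio of the two rates.
relative_gain = active_rate / baseline_rate
print(f"relative gain: {relative_gain:.1f}x")
```

The ratio is consistent with the abstract's statement that the gain exceeds $400\times$.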

Non-Adaptive Adversarial Face Generation

Jul 16, 2025

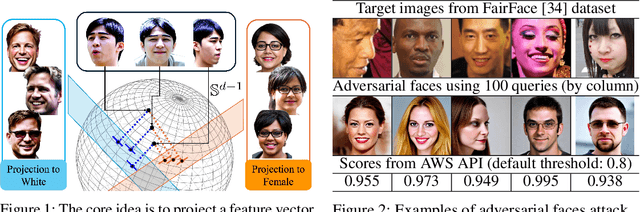

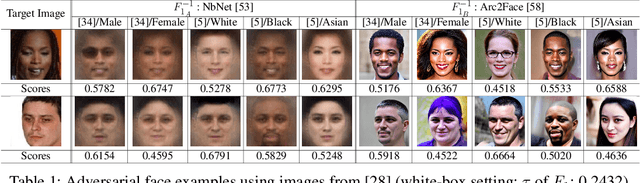

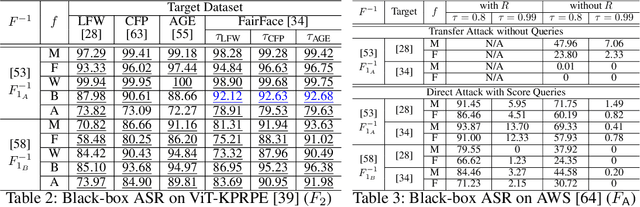

Abstract: Adversarial attacks on face recognition systems (FRSs) pose serious security and privacy threats, especially when these systems are used for identity verification. In this paper, we propose a novel method for generating adversarial faces, i.e., synthetic facial images that are visually distinct yet recognized as a target identity by the FRS. Unlike iterative optimization-based approaches (e.g., gradient descent or other iterative solvers), our method leverages the structural characteristics of the FRS feature space. We find that individuals sharing the same attribute (e.g., gender or race) form an attributed subsphere. By utilizing such subspheres, our method achieves both non-adaptiveness and a remarkably small number of queries. This eliminates the need for relying on transferability and open-source surrogate models, which have been a typical strategy when repeated adaptive queries to commercial FRSs are impossible. Despite requiring only a single non-adaptive query consisting of 100 face images, our method achieves a high success rate of over 93% against AWS's CompareFaces API at its default threshold. Furthermore, unlike many existing attacks that perturb a given image, our method can deliberately produce adversarial faces that impersonate the target identity while exhibiting high-level attributes chosen by the adversary.

Energy-based generator matching: A neural sampler for general state space

May 26, 2025

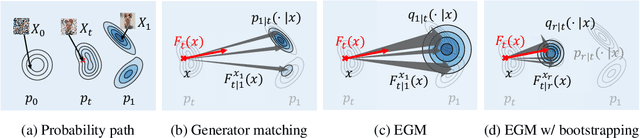

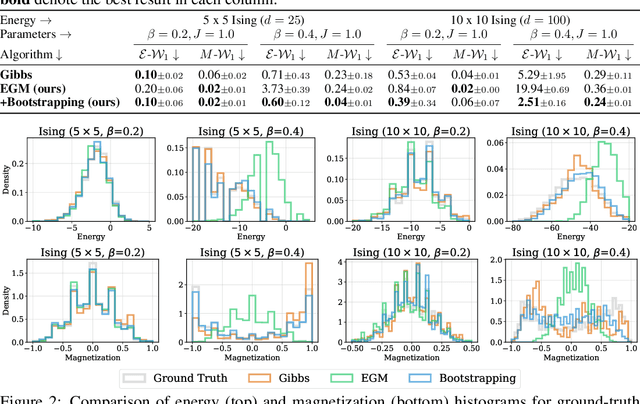

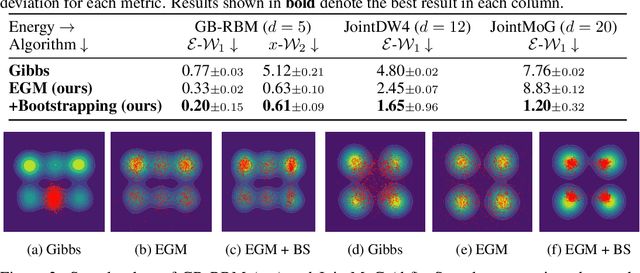

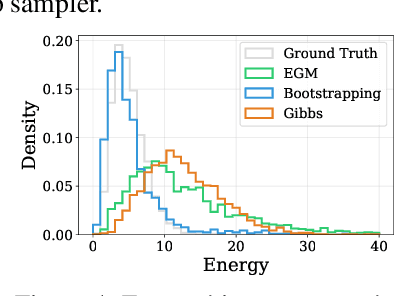

Abstract: We propose Energy-based generator matching (EGM), a modality-agnostic approach to train generative models from energy functions in the absence of data. Extending the recently proposed generator matching, EGM enables training of arbitrary continuous-time Markov processes, e.g., diffusion, flow, and jump, and can generate data from continuous, discrete, and a mixture of the two modalities. To this end, we propose estimating the generator matching loss using self-normalized importance sampling with an additional bootstrapping trick to reduce variance in the importance weight. We validate EGM on both discrete and multimodal tasks up to 100 and 20 dimensions, respectively.
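
For readers unfamiliar with self-normalized importance sampling (SNIS), the sketch below shows the generic estimator on a one-dimensional toy problem. It is not the EGM loss and omits the bootstrapping trick: the Gaussian proposal, the quadratic energy, and the function name `snis_expectation` are all assumptions chosen for illustration.

```python
import numpy as np

def snis_expectation(energy, f, n_samples=200_000, proposal_std=2.0, seed=0):
    """Estimate E_p[f(x)] for p(x) proportional to exp(-energy(x)) using SNIS.

    Samples come from a Gaussian proposal q = N(0, proposal_std^2); the
    unknown normalizing constant of p cancels when weights are normalized.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, proposal_std, size=n_samples)

    # Log-density of the Gaussian proposal at each sample.
    log_q = -0.5 * (x / proposal_std) ** 2 - np.log(proposal_std * np.sqrt(2 * np.pi))

    # Unnormalized log importance weights: log p~(x) - log q(x).
    log_w = -energy(x) - log_q
    log_w -= log_w.max()          # stabilize the exponentiation

    w = np.exp(log_w)
    w /= w.sum()                  # self-normalization step
    return float(np.sum(w * f(x)))

# Toy target: energy x^2 / 2 corresponds to a standard normal, so E[x^2] = 1.
estimate = snis_expectation(lambda x: 0.5 * x**2, lambda x: x**2)
print(estimate)
```

Because the weights are normalized by their own sum, the estimator is slightly biased for finite sample sizes, and its variance grows when the proposal poorly matches the target, which is the kind of issue a variance-reduction step is meant to address.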

On scalable and efficient training of diffusion samplers

May 26, 2025

Abstract: We address the challenge of training diffusion models to sample from unnormalized energy distributions in the absence of data, the so-called diffusion samplers. Although these approaches have shown promise, they struggle to scale in more demanding scenarios where energy evaluations are expensive and the sampling space is high-dimensional. To address this limitation, we propose a scalable and sample-efficient framework that harmonizes a powerful classical sampling method with the diffusion sampler. Specifically, we utilize Markov chain Monte Carlo (MCMC) samplers as a Searcher to collect off-policy samples, using a novelty-based auxiliary energy function to encourage exploration of modes the diffusion sampler rarely visits. These off-policy samples are then combined with on-policy data to train the diffusion sampler, thereby expanding its coverage of the energy landscape. Furthermore, we identify primacy bias, i.e., the preference of samplers for early experience during training, as the main cause of mode collapse, and introduce a periodic re-initialization trick to resolve this issue. Our method significantly improves sample efficiency on standard benchmarks for diffusion samplers and also excels at higher-dimensional problems and real-world molecular conformer generation.
