Mingliang Zhou

Learning Diverse Skills for Behavior Models with Mixture of Experts

Jan 18, 2026

Abstract:Imitation learning has demonstrated strong performance in robotic manipulation by learning from large-scale human demonstrations. While existing models excel at single-task learning, their performance in practical applications degrades in the multi-task setting, where interference across tasks leads to an averaging effect. To address this issue, we propose to learn diverse skills for behavior models with Mixture of Experts, referred to as Di-BM. Di-BM associates each expert with a distinct observation distribution, enabling experts to specialize in sub-regions of the observation space. Specifically, we employ energy-based models to represent expert-specific observation distributions and jointly train them alongside the corresponding action models. Our approach is plug-and-play and can be seamlessly integrated into standard imitation learning methods. Extensive experiments on multiple real-world robotic manipulation tasks demonstrate that Di-BM significantly outperforms state-of-the-art baselines. Moreover, fine-tuning the pretrained Di-BM on novel tasks exhibits superior data efficiency and the reusability of expert-learned knowledge. Code is available at https://github.com/robotnav-bot/Di-BM.
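As a rough illustration of the idea of tying each expert to an observation distribution, the sketch below turns per-expert energies into mixture weights and blends the experts' actions. It is not taken from the Di-BM code: the function names, the softmax-over-negative-energy weighting, and the weighted-average blending are all assumptions made for this example.

```python
import math

def expert_weights(energies):
    """Map per-expert energies E_k(o) to mixture weights via softmax(-E).

    Assumption for this sketch: a lower energy means the observation is
    more typical for that expert, so it receives a larger weight.
    """
    m = min(energies)  # shift by the minimum for numerical stability
    unnorm = [math.exp(-(e - m)) for e in energies]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def mix_actions(energies, expert_actions):
    """Blend per-expert action vectors using the energy-derived weights."""
    w = expert_weights(energies)
    dim = len(expert_actions[0])
    return [
        sum(w[k] * expert_actions[k][d] for k in range(len(w)))
        for d in range(dim)
    ]

if __name__ == "__main__":
    # Hypothetical energies for three experts on one observation.
    print(expert_weights([0.2, 1.5, 3.0]))
    print(mix_actions([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```

With energies that differ strongly, the weights concentrate on one expert, which is one simple way to picture specialization over sub-regions of the observation space.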

An Efficient and Multi-Modal Navigation System with One-Step World Model

Jan 18, 2026

Abstract:Navigation is a fundamental capability for mobile robots. While the current trend is to use learning-based approaches to replace traditional geometry-based methods, existing end-to-end learning-based policies often struggle with 3D spatial reasoning and lack a comprehensive understanding of physical world dynamics. Integrating world models, which predict future observations conditioned on given actions, with iterative optimization planning offers a promising solution due to their capacity for imagination and flexibility. However, current navigation world models, typically built on pure transformer architectures, often rely on multi-step diffusion processes and autoregressive frame-by-frame generation. These mechanisms result in prohibitive computational latency, rendering real-time deployment impossible. To address this bottleneck, we propose a lightweight navigation world model that adopts a one-step generation paradigm and a 3D U-Net backbone equipped with efficient spatial-temporal attention. This design drastically reduces inference latency, enabling high-frequency control while achieving superior predictive performance. We also integrate this model into an optimization-based planning framework utilizing anchor-based initialization to handle multi-modal goal navigation tasks. Extensive closed-loop experiments in both simulation and real-world environments demonstrate our system's superior efficiency and robustness compared to state-of-the-art baselines.

Implicit Kinodynamic Motion Retargeting for Human-to-humanoid Imitation Learning

Sep 18, 2025

Abstract:Human-to-humanoid imitation learning aims to learn a humanoid whole-body controller from human motion. Motion retargeting is a crucial step in enabling robots to acquire reference trajectories when exploring locomotion skills. However, current methods focus on motion retargeting frame by frame, which lacks scalability. Could we directly convert large-scale human motion into robot-executable motion through a more efficient approach? To address this issue, we propose Implicit Kinodynamic Motion Retargeting (IKMR), a novel, efficient, and scalable retargeting framework that considers both kinematics and dynamics. In kinematics, IKMR pretrains a motion topology feature representation and a dual encoder-decoder architecture to learn a motion domain mapping. In dynamics, IKMR integrates imitation learning with the motion retargeting network to refine motion into physically feasible trajectories. After fine-tuning using the tracking results, IKMR can achieve large-scale physically feasible motion retargeting in real time, and a whole-body controller can be directly trained and deployed for tracking its retargeted trajectories. We conduct our experiments both in simulation and on a real full-size humanoid robot. Extensive experiments and evaluation results verify the effectiveness of our proposed framework.

DFDNet: Dynamic Frequency-Guided De-Flare Network

Jul 23, 2025

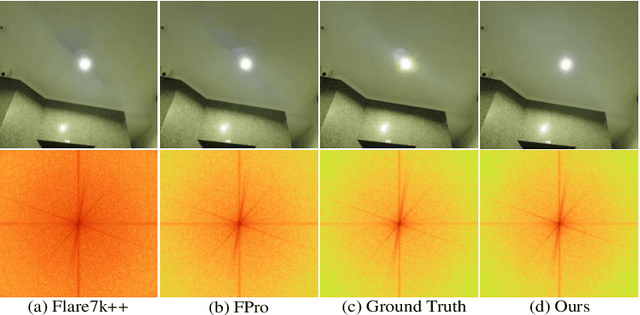

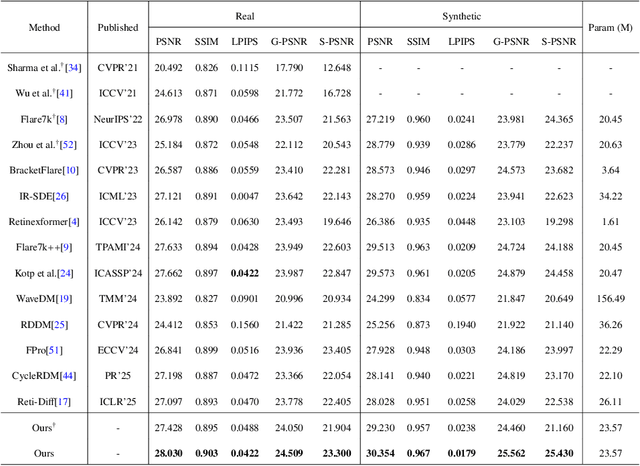

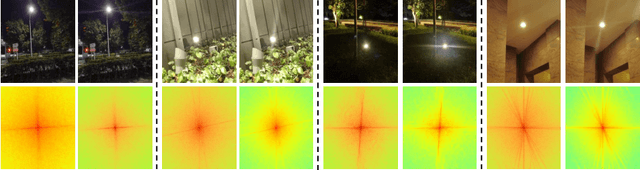

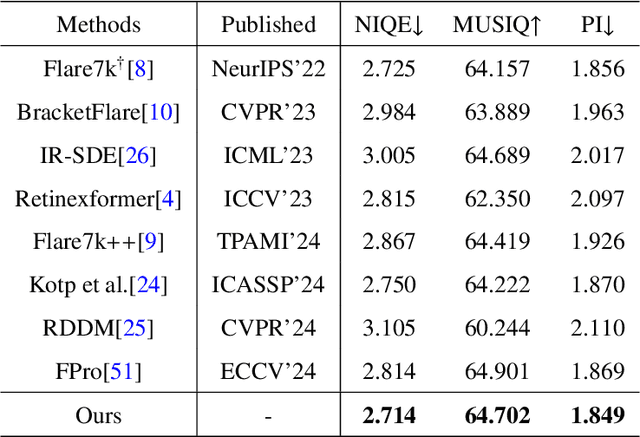

Abstract:Strong light sources in nighttime photography frequently produce flares in images, significantly degrading visual quality and impacting the performance of downstream tasks. While some progress has been made, existing methods continue to struggle with removing large-scale flare artifacts and repairing structural damage in regions near the light source. We observe that these challenging flare artifacts exhibit more significant discrepancies from the reference images in the frequency domain compared to the spatial domain. Therefore, this paper presents a novel dynamic frequency-guided deflare network (DFDNet) that decouples content information from flare artifacts in the frequency domain, effectively removing large-scale flare artifacts. Specifically, DFDNet consists mainly of a global dynamic frequency-domain guidance (GDFG) module and a local detail guidance module (LDGM). The GDFG module guides the network to perceive the frequency characteristics of flare artifacts by dynamically optimizing global frequency domain features, effectively separating flare information from content information. Additionally, we design an LDGM via a contrastive learning strategy that aligns the local features of the light source with the reference image, reduces local detail damage from flare removal, and improves fine-grained image restoration. The experimental results demonstrate that the proposed method outperforms existing state-of-the-art methods. The code is available at https://github.com/AXNing/DFDNet.

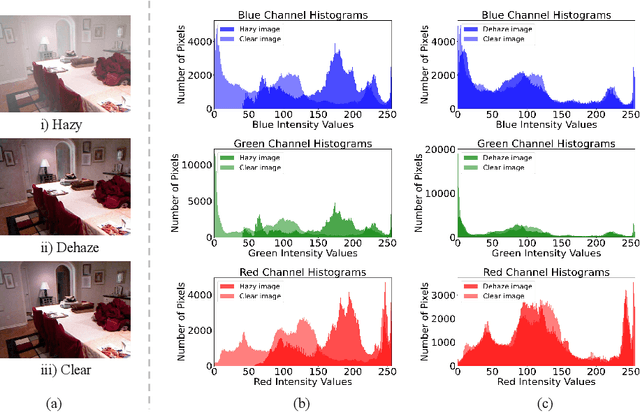

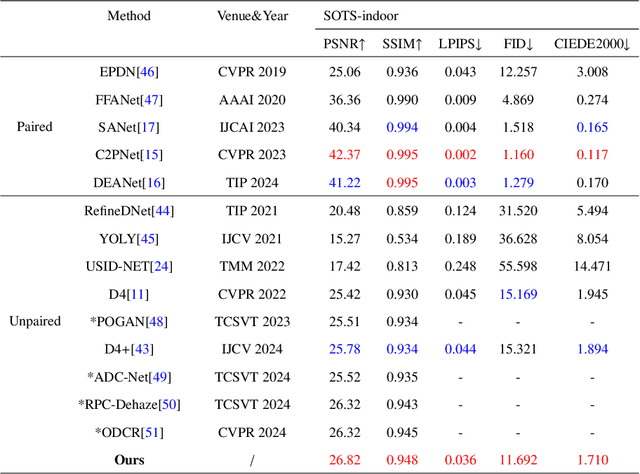

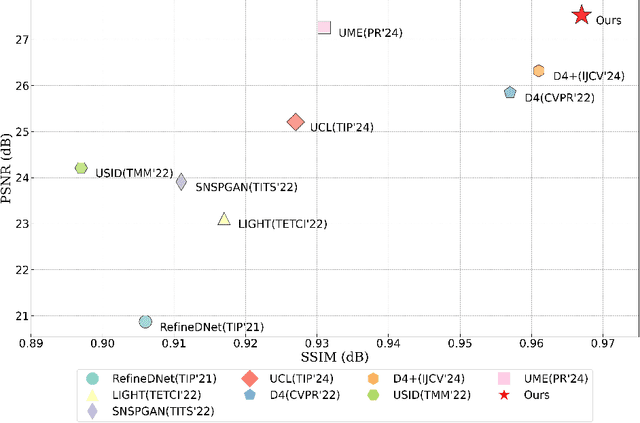

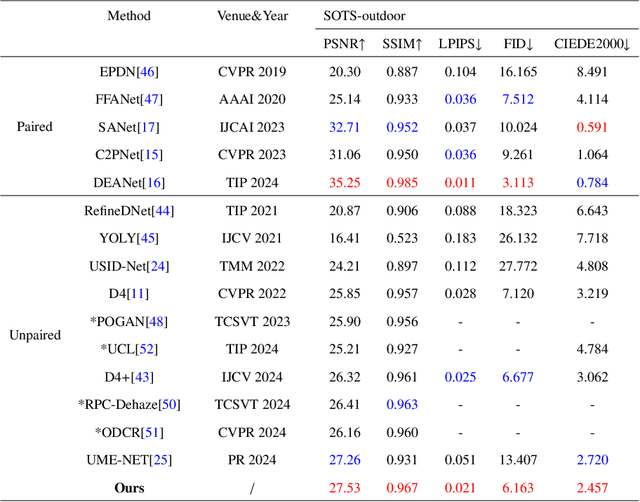

UR2P-Dehaze: Learning a Simple Image Dehaze Enhancer via Unpaired Rich Physical Prior

Jan 12, 2025

Abstract:Image dehazing techniques aim to enhance contrast and restore details, which are essential for preserving visual information and improving image processing accuracy. Existing methods rely on a single manual prior, which cannot effectively reveal image details. To overcome this limitation, we propose an unpaired image dehazing network, called the Simple Image Dehaze Enhancer via Unpaired Rich Physical Prior (UR2P-Dehaze). First, to accurately estimate the illumination, reflectance, and color information of the hazy image, we design a shared prior estimator (SPE) that is iteratively trained to ensure the consistency of illumination and reflectance, generating clear, high-quality images. Additionally, a self-monitoring mechanism is introduced to eliminate undesirable features, providing reliable priors for image reconstruction. Next, we propose Dynamic Wavelet Separable Convolution (DWSC), which effectively integrates key features across both low and high frequencies, significantly enhancing the preservation of image details and ensuring global consistency. Finally, to effectively restore the color information of the image, we propose an Adaptive Color Corrector that addresses the problem of unclear colors. The PSNR, SSIM, LPIPS, FID and CIEDE2000 metrics on the benchmark dataset show that our method achieves state-of-the-art performance. It also contributes to the performance improvement of downstream tasks. The project code will be available at https://github.com/Fan-pixel/UR2P-Dehaze.

Image Quality Assessment: Exploring Regional Heterogeneity via Response of Adaptive Multiple Quality Factors in Dictionary Space

Dec 24, 2024

Abstract:Given that the factors influencing image quality vary significantly with scene, content, and distortion type, particularly in the context of regional heterogeneity, we propose an adaptive multi-quality factor (AMqF) framework to represent image quality in a dictionary space, enabling the precise capture of quality features in non-uniformly distorted regions. By designing an adapter, the framework can flexibly decompose quality factors (such as brightness, structure, and contrast) that best align with human visual perception and quantify them into discrete visual words. These visual words respond to the constructed dictionary basis vector, and by obtaining the corresponding coordinate vectors, we can measure visual similarity. Our method offers two key contributions. First, an adaptive mechanism that extracts and decomposes quality factors according to human visual perception principles enhances their representation ability through reconstruction constraints. Second, the construction of a comprehensive and discriminative dictionary space and basis vector allows quality factors to respond effectively to the dictionary basis vector and capture non-uniform distortion patterns in images, significantly improving the accuracy of visual similarity measurement. The experimental results demonstrate that the proposed method outperforms existing state-of-the-art approaches in handling various types of distorted images. The source code is available at https://anonymous.4open.science/r/AMqF-44B2.

Image Quality Assessment: Investigating Causal Perceptual Effects with Abductive Counterfactual Inference

Dec 22, 2024

Abstract:Existing full-reference image quality assessment (FR-IQA) methods often fail to capture the complex causal mechanisms that underlie human perceptual responses to image distortions, limiting their ability to generalize across diverse scenarios. In this paper, we propose an FR-IQA method based on abductive counterfactual inference to investigate the causal relationships between deep network features and perceptual distortions. First, we explore the causal effects of deep features on perception and integrate causal reasoning with feature comparison, constructing a model that effectively handles complex distortion types across different IQA scenarios. Second, the analysis of the perceptual causal correlations of our proposed method is independent of the backbone architecture and thus can be applied to a variety of deep networks. Through abductive counterfactual experiments, we validate the proposed causal relationships, confirming the model's superior perceptual relevance and interpretability of quality scores. The experimental results demonstrate the robustness and effectiveness of the method, providing competitive quality predictions across multiple benchmarks. The source code is available at https://anonymous.4open.science/r/DeepCausalQuality-25BC.

Underwater Image Quality Assessment: A Perceptual Framework Guided by Physical Imaging

Dec 20, 2024

Abstract:In this paper, we propose a physical imaging-guided framework for underwater image quality assessment (UIQA), called PIGUIQA. First, we formulate UIQA as a comprehensive problem that considers the combined effects of direct transmission attenuation and backward scattering on image perception. On this basis, we incorporate advanced physics-based underwater imaging estimation into our method and define distortion metrics that measure the impact of direct transmission attenuation and backward scattering on image quality. Second, acknowledging the significant content differences across various regions of an image and the varying perceptual sensitivity to distortions in these regions, we design a local perceptual module on the basis of the neighborhood attention mechanism. This module effectively captures subtle features in images, thereby enhancing the adaptive perception of distortions on the basis of local information. Finally, by employing a global perceptual module to further integrate the original image content with underwater image distortion information, the proposed model can accurately predict the image quality score. Comprehensive experiments demonstrate that PIGUIQA achieves state-of-the-art performance in underwater image quality prediction and exhibits strong generalizability. The code for PIGUIQA is available at https://anonymous.4open.science/r/PIGUIQA-A465/

Unified Image Restoration and Enhancement: Degradation Calibrated Cycle Reconstruction Diffusion Model

Dec 19, 2024

Abstract:Image restoration and enhancement are pivotal for numerous computer vision applications, yet unifying these tasks efficiently remains a significant challenge. Inspired by the iterative refinement capabilities of diffusion models, we propose CycleRDM, a novel framework designed to unify restoration and enhancement tasks while achieving high-quality mapping. Specifically, CycleRDM first learns the mapping relationships among the degraded domain, the rough normal domain, and the normal domain through a two-stage diffusion inference process. Subsequently, we transfer the final calibration process to the wavelet low-frequency domain using discrete wavelet transform, performing fine-grained calibration from a frequency domain perspective by leveraging task-specific frequency spaces. To improve restoration quality, we design a feature gain module for the decomposed wavelet high-frequency domain to eliminate redundant features. Additionally, we employ multimodal textual prompts and Fourier transform to drive stable denoising and reduce randomness during the inference process. Extensive validation shows that CycleRDM generalizes effectively to a wide range of image restoration and enhancement tasks and, with only a small number of training samples, achieves significantly superior results on various benchmarks of reconstruction quality and perceptual quality. The source code will be available at https://github.com/hejh8/CycleRDM.

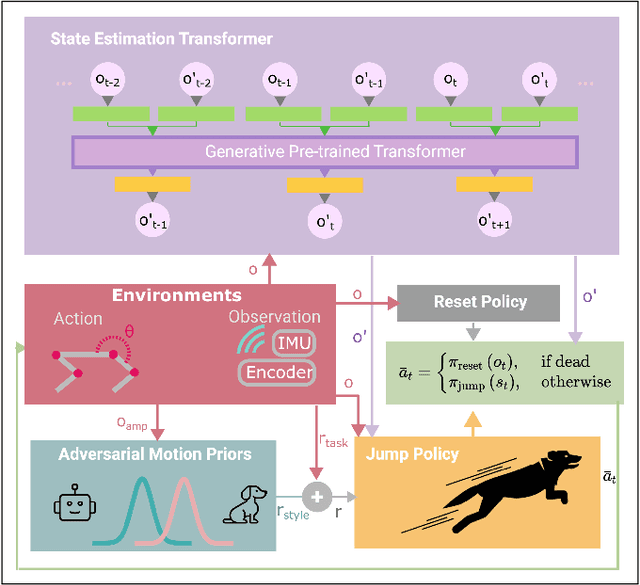

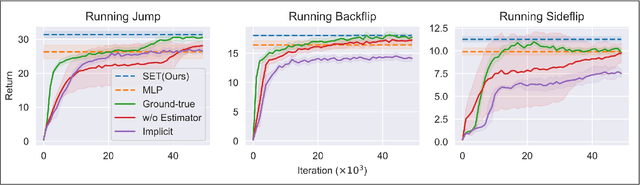

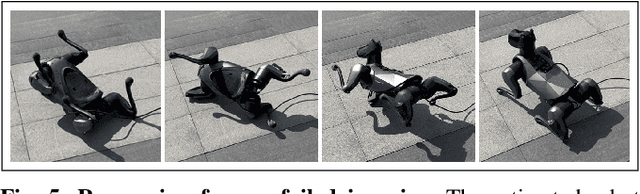

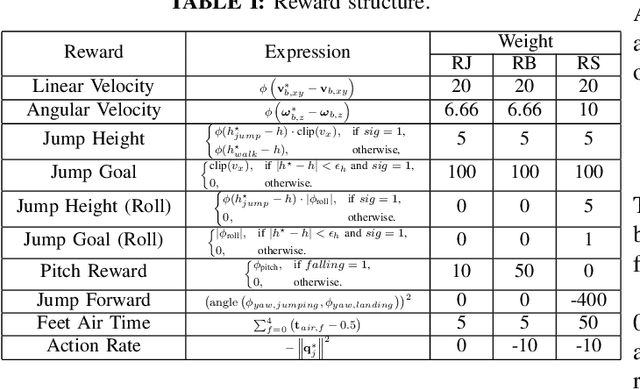

State Estimation Transformers for Agile Legged Locomotion

Oct 17, 2024

Abstract:We propose a state estimation method that can accurately predict the robot's privileged states to push the limits of quadruped robots in executing advanced skills such as jumping in the wild. In particular, we present the State Estimation Transformers (SET), an architecture that casts the state estimation problem as conditional sequence modeling. SET outputs the robot states that are hard to obtain directly in the real world, such as the body height and velocities, by leveraging a causally masked Transformer. By conditioning an autoregressive model on the robot's past states, our SET model can predict these privileged observations accurately even in highly dynamic locomotion. We evaluate our methods on three tasks (running jumping, running backflipping, and running sideslipping) on a low-cost quadruped robot, Cyberdog2. Results show that SET can outperform other methods in estimation accuracy and transferability in simulation, as well as in success rates of jumping and triggering a recovery controller in the real world, suggesting the superiority of such a Transformer-based explicit state estimator in highly dynamic locomotion tasks.
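To make the notion of a causally masked Transformer concrete, the sketch below builds a causal mask and applies it in a row-wise softmax, so that each time step attends only to itself and earlier steps. This is a generic illustration in plain Python, not the SET implementation; the function names and the toy score matrix are assumptions for this example.

```python
import math

def causal_mask(seq_len):
    """mask[i][j] is True when step i may attend to step j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def masked_attention_weights(scores):
    """Row-wise softmax over a square score matrix, ignoring future steps."""
    n = len(scores)
    mask = causal_mask(n)
    weights = []
    for i in range(n):
        # Only past and current positions are visible to step i.
        visible = [scores[i][j] for j in range(n) if mask[i][j]]
        m = max(visible)  # subtract the max for numerical stability
        exps = [
            math.exp(scores[i][j] - m) if mask[i][j] else 0.0
            for j in range(n)
        ]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

if __name__ == "__main__":
    # Toy scores: the large value at [0][1] is a future step and is ignored.
    for row in masked_attention_weights([[0.0, 5.0], [0.0, 0.0]]):
        print(row)
```

Because future positions receive zero weight, a prediction at step t depends only on the history up to t, which is what lets an autoregressive estimator run online from past states.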
