Zhipu Liu

Debiased Dual-Invariant Defense for Adversarially Robust Person Re-Identification

Nov 13, 2025

Abstract: Person re-identification (ReID) is a fundamental task in many real-world applications such as pedestrian trajectory tracking. However, advanced deep learning-based ReID models are highly susceptible to adversarial attacks, where imperceptible perturbations to pedestrian images can cause entirely incorrect predictions, posing significant security threats. Although numerous adversarial defense strategies have been proposed for classification tasks, their extension to metric learning tasks such as person ReID remains relatively unexplored. Moreover, the few existing defenses for person ReID fail to address the unique challenges inherent to adversarially robust ReID. In this paper, we systematically distill the challenges of adversarial defense in person ReID into two key issues: model bias and composite generalization requirements. To address them, we propose a debiased dual-invariant defense framework composed of two main phases. In the data balancing phase, we mitigate model bias using a diffusion-model-based data resampling strategy that promotes fairness and diversity in training data. In the bi-adversarial self-meta defense phase, we introduce a novel metric adversarial training approach incorporating farthest negative extension softening to overcome the robustness degradation caused by the absence of a classifier. Additionally, we introduce an adversarially-enhanced self-meta mechanism to achieve dual-generalization for both unseen identities and unseen attack types. Experiments demonstrate that our method significantly outperforms existing state-of-the-art defenses.
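To make the threat model concrete, here is a minimal sketch of how a small perturbation can push a query embedding away from a same-identity embedding in a metric-learning setting. It is an illustration only, not the paper's attack or defense: the linear map `W` is a hypothetical stand-in for a deep ReID network, and `metric_fgsm` is an assumed name for a single FGSM-style signed-gradient step.

```python
def embed(W, x):
    """Toy linear embedding; a stand-in for a deep ReID feature extractor."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sq_dist(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def metric_fgsm(W, query, positive, eps):
    """One signed-gradient step that increases the query-to-positive distance."""
    diff = [q - p for q, p in zip(embed(W, query), embed(W, positive))]
    # Gradient of ||W q - W p||^2 with respect to q is 2 * W^T (W q - W p).
    grad = [
        2.0 * sum(W[i][j] * diff[i] for i in range(len(W)))
        for j in range(len(query))
    ]
    sign = lambda g: (g > 0) - (g < 0)
    # Each input coordinate moves by at most eps (an L-infinity budget).
    return [q + eps * sign(g) for q, g in zip(query, grad)]


# Hypothetical toy data: two "images" of the same identity as 3-dim vectors.
W = [[1.0, 0.5, 0.0], [0.0, 1.0, -1.0]]
query = [0.2, 0.4, 0.6]
positive = [0.25, 0.35, 0.6]

adv = metric_fgsm(W, query, positive, eps=0.03)
before = sq_dist(embed(W, query), embed(W, positive))
after = sq_dist(embed(W, adv), embed(W, positive))
```

Because there is no classifier output to attack, the step targets the embedding distance directly; with these toy values, `after` is larger than `before` even though no coordinate of the input changed by more than `eps`.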

Zero-LED: Zero-Reference Lighting Estimation Diffusion Model for Low-Light Image Enhancement

Mar 05, 2024

Abstract: Diffusion model-based low-light image enhancement methods rely heavily on paired training data, which limits their broad application. Meanwhile, existing unsupervised methods lack effective bridging capabilities for unknown degradation. To address these limitations, we propose a novel zero-reference lighting estimation diffusion model for low-light image enhancement called Zero-LED. It utilizes the stable convergence ability of diffusion models to bridge the gap between low-light domains and real normal-light domains and successfully alleviates the dependence on paired training data via zero-reference learning. Specifically, we first design an initial optimization network to preprocess the input image and implement bidirectional constraints between the diffusion model and the initial optimization network through multiple objective functions. Subsequently, the degradation factors of the real-world scene are optimized iteratively to achieve effective light enhancement. In addition, we explore a frequency-domain based and semantically guided appearance reconstruction module that encourages feature alignment of the recovered image at a fine-grained level and satisfies subjective expectations. Finally, extensive experiments demonstrate the superiority of our approach over other state-of-the-art methods and its stronger generalization capabilities. We will open the source code upon acceptance of the paper.

Low-light Image Enhancement via CLIP-Fourier Guided Wavelet Diffusion

Jan 08, 2024

Abstract: Low-light image enhancement techniques have progressed significantly, but unstable image quality recovery and unsatisfactory visual perception remain significant challenges. To solve these problems, we propose a novel and robust low-light image enhancement method via CLIP-Fourier Guided Wavelet Diffusion, abbreviated as CFWD. Specifically, we design a guided network with a multiscale visual language in the frequency domain based on the wavelet transform to achieve effective image enhancement iteratively. In addition, we combine the advantages of the Fourier transform in detail perception to construct a hybrid frequency domain space with significant perceptual capabilities (HFDPM). This operation guides wavelet diffusion to recover the fine-grained structure of the image and avoid diversity confusion. Extensive quantitative and qualitative experiments on publicly available real-world benchmarks show that our method outperforms existing state-of-the-art methods and more faithfully reproduces images similar to normal-light images. Code is available at https://github.com/He-Jinhong/CFWD.

Hierarchical Bi-Directional Feature Perception Network for Person Re-Identification

Aug 08, 2020

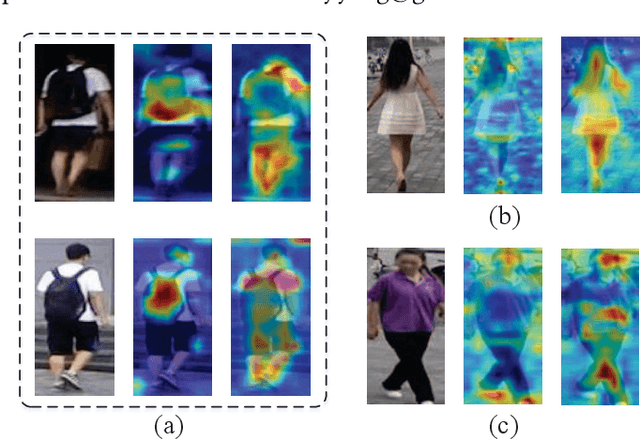

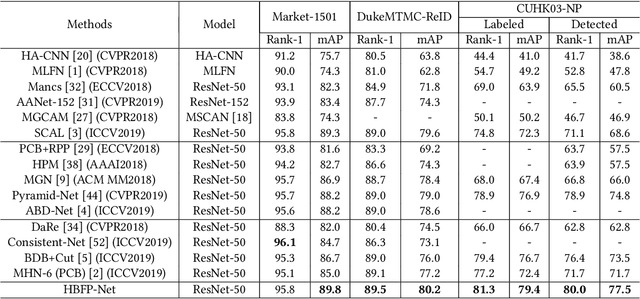

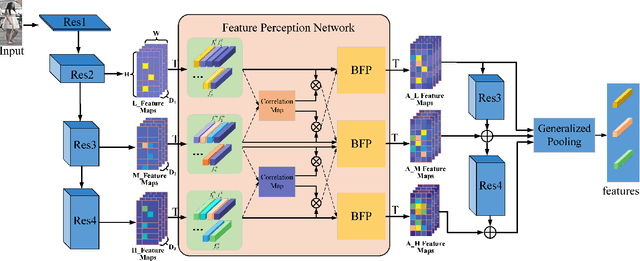

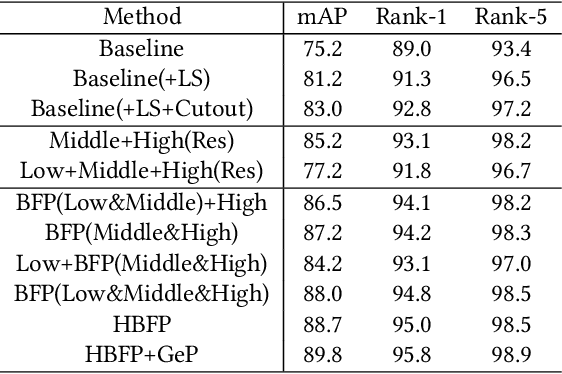

Abstract: Previous Person Re-Identification (Re-ID) models aim to focus on the most discriminative region of an image, but their performance may be compromised when that region is missing due to camera viewpoint changes or occlusion. To solve this issue, we propose a novel model named Hierarchical Bi-directional Feature Perception Network (HBFP-Net) to correlate multi-level information so that the levels reinforce each other. First, the correlation maps of cross-level feature pairs are modeled via low-rank bilinear pooling. Then, based on the correlation maps, a Bi-directional Feature Perception (BFP) module is employed to enrich the attention regions of high-level features and to learn abstract and specific information in low-level features. Next, we propose a novel end-to-end hierarchical network that integrates multi-level augmented features and feeds the augmented low- and middle-level features to the following layers to retrain a new, powerful network. Furthermore, we propose a novel trainable generalized pooling, which can dynamically select any value of all locations in feature maps to be activated. Extensive experiments on mainstream evaluation datasets including Market-1501, CUHK03 and DukeMTMC-ReID show that our method outperforms recent SOTA Re-ID models.
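The abstract does not give the formula for its trainable generalized pooling, so the sketch below swaps in a well-known related technique, generalized-mean (GeM) pooling, purely to illustrate how a single exponent can interpolate between average and max pooling. The function name `gem_pool` and the toy activations are assumptions; in a trainable variant the exponent `p` would be a learned parameter rather than a fixed argument.

```python
def gem_pool(values, p=3.0, eps=1e-6):
    """Generalized-mean pooling over a flat list of non-negative activations.

    p = 1 gives average pooling; as p grows, the result approaches max pooling.
    This is GeM pooling, not necessarily the pooling proposed in the paper.
    """
    # Clamp to a small positive value so fractional powers stay well defined.
    clamped = [max(v, eps) for v in values]
    return (sum(v ** p for v in clamped) / len(clamped)) ** (1.0 / p)


# Hypothetical activations from one channel of a feature map, flattened.
feature_map = [0.1, 0.5, 2.0, 0.4]

avg_like = gem_pool(feature_map, p=1.0)   # equals the arithmetic mean
mid = gem_pool(feature_map, p=3.0)        # between mean and max
max_like = gem_pool(feature_map, p=50.0)  # close to the maximum activation
```

A small `p` lets every location contribute, while a large `p` concentrates the output on the strongest response, which is one way a pooling layer can adapt which locations are effectively activated.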
