Martijn Wisse

Unwieldy Object Delivery with Nonholonomic Mobile Base: A Stable Pushing Approach

Sep 25, 2023

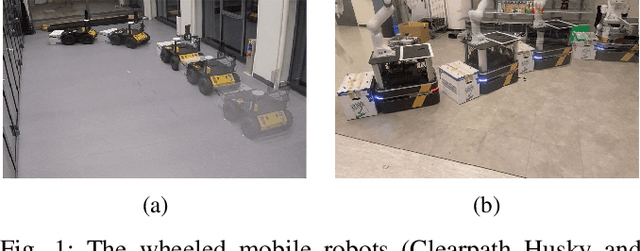

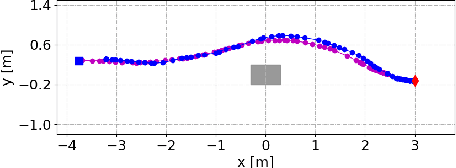

Abstract: This paper addresses the problem of pushing manipulation with nonholonomic mobile robots. Pushing is a fundamental skill that enables robots to move unwieldy objects that cannot be grasped. We propose a stable pushing method that maintains stiff contact between the robot and the object to avoid time-consuming repositioning actions. We prove that a line contact, rather than a single point contact, is necessary for nonholonomic robots to achieve stable pushing. We also show that the stable pushing constraint and the nonholonomic constraint of the robot can be simplified into a concise linear motion constraint. The pushing planning problem can then be formulated as a constrained optimization problem using nonlinear model predictive control (NMPC). In experiments, our NMPC-based planner outperforms a reactive pushing strategy in efficiency, reducing the robot's traveled distance by 23.8% and the time by 77.4%. Furthermore, our method requires four fewer hyperparameters and decision variables than the Linear Time-Varying (LTV) MPC approach, making it easier to implement. Real-world experiments with two differential-drive robots, Husky and Boxer, under different friction conditions validate the proposed method.
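The two kinematic ingredients named above can be made concrete with a short sketch. It assumes standard differential-drive (unicycle) kinematics and models stable pushing as the object being rigidly carried at a fixed distance `d` ahead of the base; the function names are hypothetical, and this is not the paper's linear motion constraint or its NMPC formulation. It checks that the base satisfies the nonholonomic constraint while a rigidly pushed point can still move sideways at rate `d * omega`.

```python
import numpy as np

def unicycle_step(state, v, omega, dt):
    """One forward-Euler step of differential-drive (unicycle) kinematics."""
    x, y, theta = state
    return np.array([x + v * np.cos(theta) * dt,
                     y + v * np.sin(theta) * dt,
                     theta + omega * dt])

def lateral_velocity(theta, vel):
    """Velocity component perpendicular to heading theta.

    The robot's nonholonomic constraint is -sin(theta) * xdot + cos(theta) * ydot = 0.
    """
    return -np.sin(theta) * vel[0] + np.cos(theta) * vel[1]

def pushed_object_velocity(theta, v, omega, d):
    """Velocity of a point held at distance d ahead of the base.

    Assumes stiff contact without slip, so the point moves as if welded to the base.
    """
    heading = np.array([np.cos(theta), np.sin(theta)])
    normal = np.array([-np.sin(theta), np.cos(theta)])
    return v * heading + d * omega * normal

state = np.array([0.0, 0.0, 0.3])
nxt = unicycle_step(state, v=0.5, omega=0.2, dt=0.01)
robot_vel = (nxt[:2] - state[:2]) / 0.01
print(lateral_velocity(state[2], robot_vel))                              # ~0: base cannot slide sideways
print(lateral_velocity(0.3, pushed_object_velocity(0.3, 0.5, 0.2, 0.6)))  # approximately d * omega = 0.12
```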

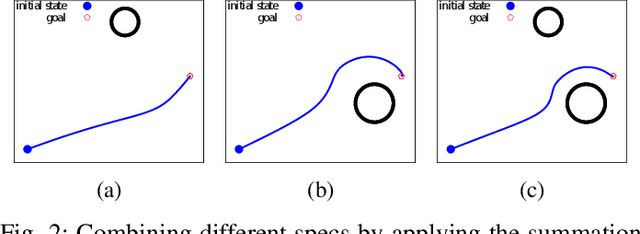

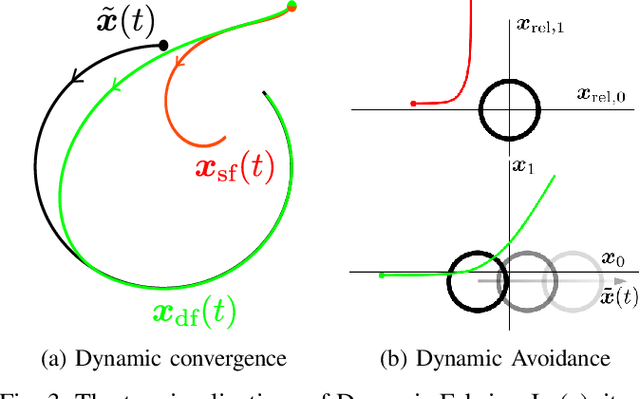

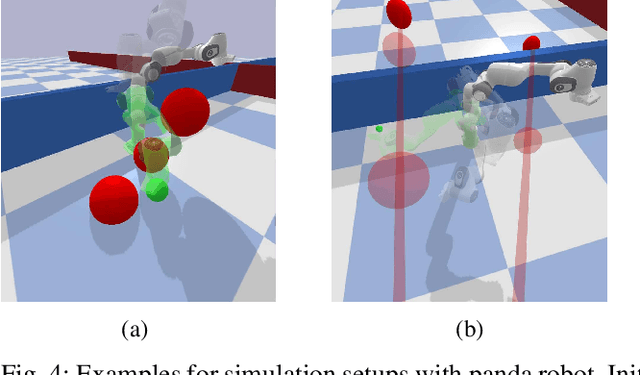

Dynamic Optimization Fabrics for Motion Generation

May 17, 2022

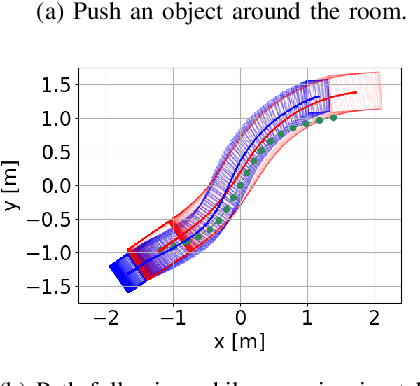

Abstract: Optimization fabrics are a geometric approach to real-time motion planning in which trajectories are generated by composing several differential equations that exhibit desired motion behaviors. We generalize this framework to dynamic scenarios and prove that its fundamental properties are preserved. We show that convergence to trajectories and avoidance of moving obstacles can be guaranteed using simple construction rules for the components. Additionally, we present the first quantitative comparisons between optimization fabrics and model predictive control, showing that optimization fabrics generate similar trajectories with better scalability and thus much higher replanning frequencies (up to 500 Hz with a 7-degree-of-freedom robotic arm). Finally, we present empirical results on several robots, including a nonholonomic mobile manipulator with 10 degrees of freedom, that support the theoretical findings.
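To make "composition" concrete, the sketch below combines second-order components in the spirit of Riemannian motion policies and optimization fabrics: each component returns a metric `M_i` and a desired acceleration `a_i`, and the result is the metric-weighted average `pinv(sum M_i) @ sum(M_i @ a_i)`. This is a simplified assumption, not the paper's dynamic fabrics: curvature terms from the pullback and the energization step are omitted, and the gains, the `1 / gap**2` metric, and all function names are hypothetical. The obstacle metric acts only along the obstacle normal and only while the robot approaches the obstacle, so the attractor is left untouched in the perpendicular direction.

```python
import numpy as np

def combine(components):
    """Metric-weighted combination of (metric, acceleration) pairs.

    Returns pinv(sum_i M_i) @ sum_i M_i @ a_i. Curvature terms and the
    energization used by optimization fabrics are deliberately omitted.
    """
    M_sum = sum(M for M, _ in components)
    f_sum = sum(M @ a for M, a in components)
    return np.linalg.pinv(M_sum) @ f_sum

def attractor(x, xd, goal, k=4.0, damping=4.0):
    """Goal-reaching component with a constant identity metric (hypothetical gains)."""
    return np.eye(len(x)), -k * (x - goal) - damping * xd

def moving_obstacle(x, xd, obs_pos, obs_vel, radius, gain=1.0):
    """Repulsive component along the obstacle normal, active only while approaching."""
    diff = x - obs_pos
    n = diff / np.linalg.norm(diff)          # unit normal pointing away from the obstacle
    gap = max(np.linalg.norm(diff) - radius, 1e-3)
    approaching = n @ (xd - obs_vel) < 0     # relative velocity points toward the obstacle
    w = 1.0 / gap**2 if approaching else 0.0
    return w * np.outer(n, n), (gain / gap**2) * n

x, xd = np.array([0.0, 0.0]), np.array([1.0, 0.0])
parts = [attractor(x, xd, goal=np.array([2.0, 1.0])),
         moving_obstacle(x, xd, obs_pos=np.array([1.0, 0.0]),
                         obs_vel=np.zeros(2), radius=0.5)]
# The x-component now points away from the obstacle; the y-component equals the attractor's.
print(combine(parts))
```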

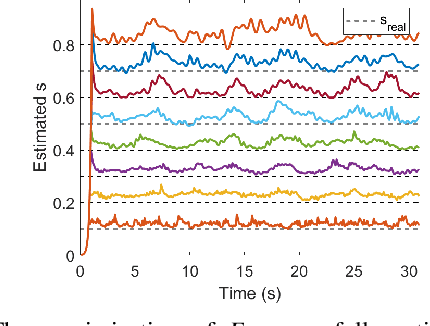

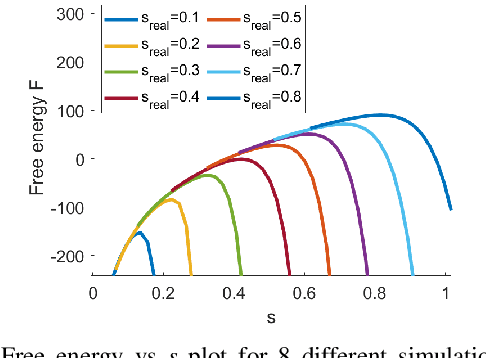

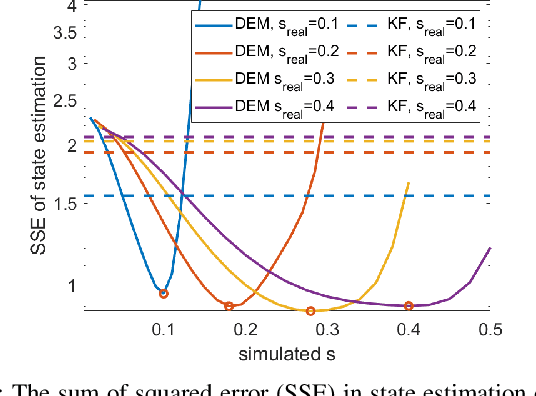

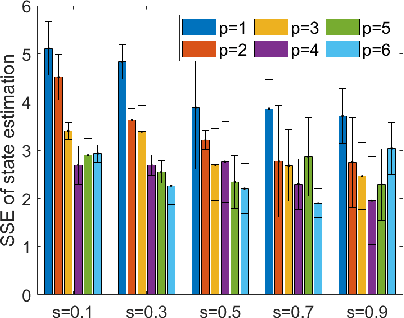

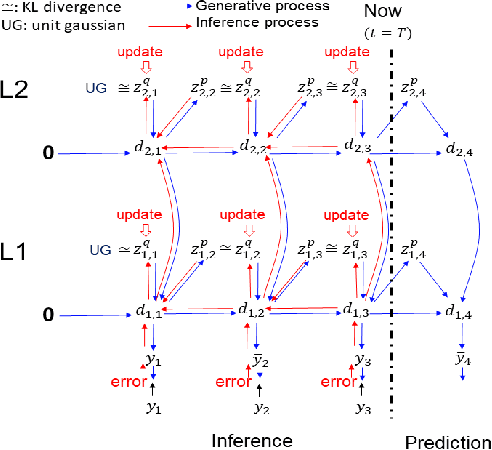

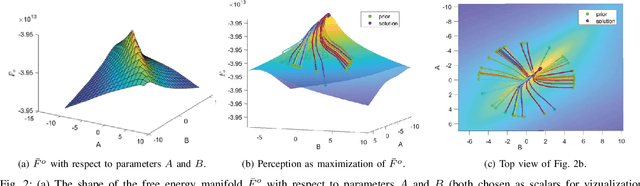

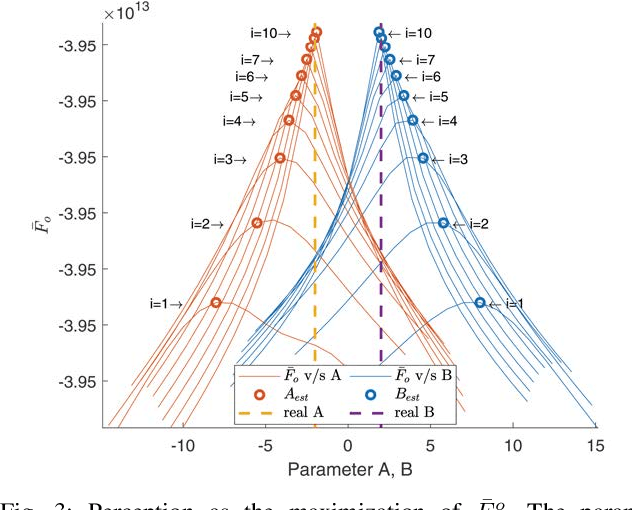

Free Energy Principle for the Noise Smoothness Estimation of Linear Systems with Colored Noise

Apr 04, 2022

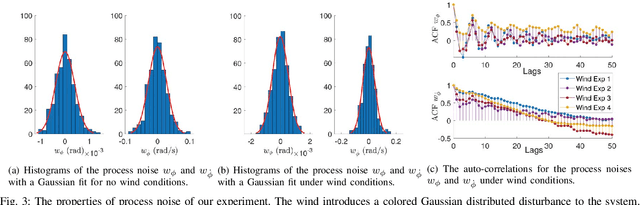

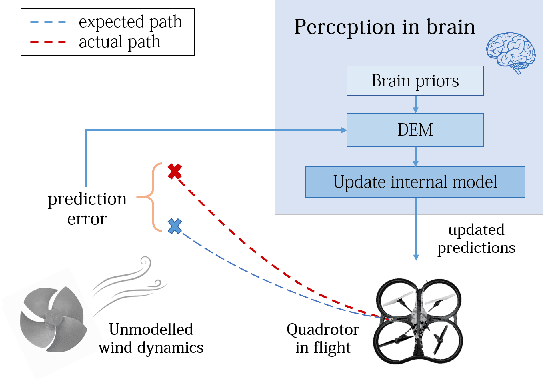

Abstract: The free energy principle (FEP) from neuroscience provides a framework called active inference for the joint estimation and control of state-space systems subject to colored noise. However, the active inference community has faced the critical task of manually tuning the noise smoothness parameter. To solve this problem, we introduce a novel online noise smoothness estimator based on the free energy principle. We show mathematically that our estimator can converge to the free energy optimum during smoothness estimation. Using this formulation, we introduce a joint state and noise smoothness observer design called DEMs. Through rigorous simulations, we show that DEMs outperforms state-of-the-art state observers, achieving the lowest state estimation error. Finally, we provide a proof of concept for DEMs by applying it to a real-life robotics problem, state estimation of a quadrotor hovering in wind, demonstrating its practical use.
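What the smoothness parameter `s` means can be illustrated with a short sketch. It assumes the convention used in much of the DEM literature, that colored noise is white noise convolved with a Gaussian kernel `exp(-t^2 / (2 s^2))`, which gives the autocorrelation `rho(h) = exp(-h^2 / (4 s^2))`. The estimator here is a simple moment-based baseline that inverts this autocorrelation at a single lag; it is not the free-energy-based online estimator proposed in the paper, and the function names are hypothetical.

```python
import numpy as np

def colored_noise(n, s, dt, rng):
    """White noise convolved with a Gaussian kernel exp(-t^2 / (2 s^2))."""
    half = int(np.ceil(4 * s / dt))                  # truncate the kernel at about +/- 4 s
    t = np.arange(-half, half + 1) * dt
    kernel = np.exp(-t**2 / (2 * s**2))
    white = rng.standard_normal(n + 2 * half)
    return np.convolve(white, kernel, mode="valid")  # exactly n samples, no edge transients

def estimate_smoothness(x, dt, lag):
    """Moment estimate of s from the sample autocorrelation at one lag.

    With the kernel above, rho(h) = exp(-h^2 / (4 s^2)), so s = h / sqrt(-4 ln rho(h)).
    """
    x = x - x.mean()
    rho = np.dot(x[:-lag], x[lag:]) / np.dot(x, x)
    h = lag * dt
    return h / np.sqrt(-4.0 * np.log(rho))

rng = np.random.default_rng(0)
noise = colored_noise(200_000, s=0.1, dt=0.01, rng=rng)
print(estimate_smoothness(noise, dt=0.01, lag=10))   # close to the true s = 0.1
```

The lag must be chosen so that the sample autocorrelation lies strictly between 0 and 1; otherwise the logarithm is undefined.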

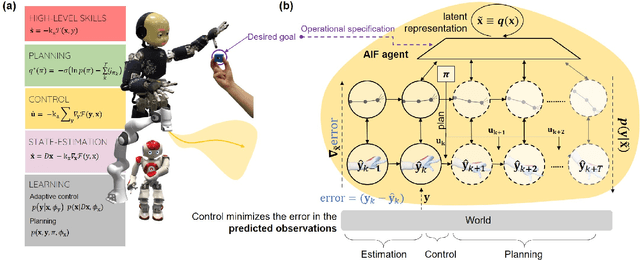

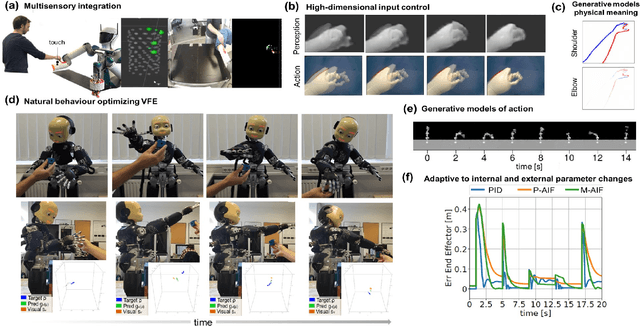

Active Inference in Robotics and Artificial Agents: Survey and Challenges

Dec 03, 2021

Abstract: Active inference is a mathematical framework that originated in computational neuroscience as a theory of how the brain implements action, perception, and learning. Recently, it has been shown to be a promising approach to state estimation and control under uncertainty, as well as a foundation for constructing goal-driven behaviors in robotics and artificial agents in general. Here, we review the state-of-the-art theory and implementations of active inference for state estimation, control, planning, and learning, describing current achievements with a particular focus on robotics. We showcase relevant experiments that illustrate its potential in terms of adaptation, generalization, and robustness. Furthermore, we connect this approach with other frameworks and discuss its expected benefits and challenges; its central promise is a unified framework with functional biological plausibility based on variational Bayesian inference.
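A one-dimensional toy example shows the core loop of active inference: perception and action both descend the same free energy. It assumes a Laplace-style quadratic free energy with a Gaussian likelihood and a Gaussian prior whose mean acts as the goal, a noiseless observation `y = x`, and hand-picked variances and step size; the function names are hypothetical, and the model is far simpler than the implementations a survey of this kind covers.

```python
def perceive(y, prior_mean, var_obs=1.0, var_prior=4.0, lr=0.1, steps=500):
    """Perception only: gradient descent of F over the belief mu for a fixed observation y.

    F(mu) = (y - mu)^2 / (2 var_obs) + (mu - prior_mean)^2 / (2 var_prior)
    """
    mu = prior_mean
    for _ in range(steps):
        grad = -(y - mu) / var_obs + (mu - prior_mean) / var_prior
        mu -= lr * grad
    return mu

def active_inference_1d(x0, mu0, goal, var_obs=1.0, var_prior=4.0, lr=0.1, steps=2000):
    """Perception and action descend the same F; the prior mean plays the role of a goal."""
    x, mu = x0, mu0
    for _ in range(steps):
        y = x                                # noiseless observation of the world state
        eps_y = (y - mu) / var_obs           # precision-weighted sensory prediction error
        eps_p = (mu - goal) / var_prior      # precision-weighted prior prediction error
        mu -= lr * (eps_p - eps_y)           # perception: dF/dmu = eps_p - eps_y
        x -= lr * eps_y                      # action: dF/dy * dy/dx, with dy/dx = 1
    return x, mu

print(perceive(2.0, prior_mean=0.0))         # converges to the posterior mean (4 * 2 + 1 * 0) / 5 = 1.6
print(active_inference_1d(x0=3.0, mu0=0.0, goal=1.0))
```

With perception alone, the belief settles at the precision-weighted posterior mean; once action is added, the world state is also driven to the prior mean, which is how a prior encodes a goal in this setting.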

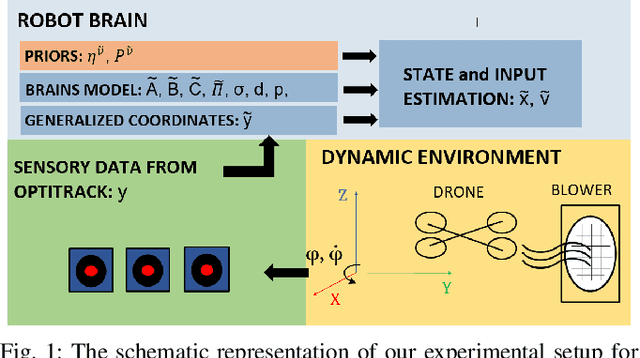

Free Energy Principle for State and Input Estimation of a Quadcopter Flying in Wind

Sep 24, 2021

Abstract: The free energy principle from neuroscience provides a brain-inspired perception scheme through a data-driven model learning algorithm called Dynamic Expectation Maximization (DEM). This paper introduces an experimental design that provides the first experimental confirmation of the usefulness of DEM as a state and input estimator for real robots. Through a series of quadcopter flight experiments under unmodelled wind dynamics, we show that DEM can leverage the information in colored noise for accurate state and input estimation through the use of generalized coordinates. For state estimation under colored noise, DEM outperforms benchmarks such as State Augmentation, SMIKF, and Kalman filtering, achieving the lowest estimation error. For input estimation, DEM performs similarly to an Unknown Input Observer (UIO). The paper concludes by showing how prior beliefs shape the accuracy-complexity trade-off during DEM's estimation.
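Generalized coordinates stack a signal with its temporal derivatives, and under colored noise those derivatives are correlated with each other. A sketch of the resulting temporal covariance `S` for embedding order `p` follows, assuming the noise autocorrelation `rho(h) = exp(-h^2 / (4 s^2))` (white noise smoothed by a Gaussian kernel of width `s`); entry `(i, j)` is `(-1)^i rho^(i+j)(0)`, which for `p = 2` reduces to `[[1, 0, -1/(2 s^2)], [0, 1/(2 s^2), 0], [-1/(2 s^2), 0, 3/(4 s^4)]]`. Its Kronecker product with an assumed spatial noise covariance `Sigma` gives the precision that weights generalized prediction errors. The function names are hypothetical and this is not the paper's code.

```python
import math
import numpy as np

def generalized_noise_covariance(p, s):
    """Temporal covariance of [z, z', ..., z^(p)] for Gaussian-smoothed noise.

    Assumes rho(h) = exp(-h^2 / (4 s^2)); entry (i, j) is (-1)^i * rho^(i+j)(0).
    """
    b = 1.0 / (4.0 * s**2)
    S = np.zeros((p + 1, p + 1))
    for i in range(p + 1):
        for j in range(p + 1):
            n = i + j
            if n % 2 == 0:                   # odd derivatives of an even rho vanish at 0
                k = n // 2
                rho_n = math.factorial(n) * (-b) ** k / math.factorial(k)
                S[i, j] = (-1) ** i * rho_n
    return S

def generalized_precision(p, s, Sigma):
    """Precision of the generalized noise vector: inv(S) kron inv(Sigma)."""
    S = generalized_noise_covariance(p, s)
    return np.kron(np.linalg.inv(S), np.linalg.inv(Sigma))

print(generalized_noise_covariance(2, 0.5))  # rows 1, 0, -2 / 0, 2, 0 / -2, 0, 12 for s = 0.5
```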

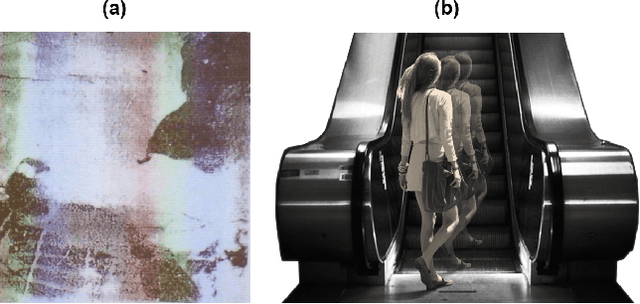

A Brain Inspired Learning Algorithm for the Perception of a Quadrotor in Wind

Sep 24, 2021

Abstract: The quest for a brain-inspired learning algorithm for robots has culminated in the free energy principle from neuroscience, which models the brain's perception and action as an optimization of its free energy objectives. Based on this idea, we propose an estimation algorithm for accurate output prediction of a quadrotor flying under unmodelled wind conditions. The key idea behind this work is to treat the unmodelled wind dynamics and the model's nonlinearity errors as coloured noise in the system and to leverage this noise for accurate output predictions. This paper provides the first experimental validation of the usefulness of generalized coordinates for robot perception using Dynamic Expectation Maximization (DEM). Through real flight experiments, we show that the estimator outperforms classical estimators, achieving the lowest output prediction error. Based on the experimental results, we extend the DEM algorithm to model order selection for complete black-box identification. With this paper, we provide the first experimental validation of DEM applied to robot learning.
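Before generalized coordinates can be used, sampled outputs have to be turned into estimates of `[y, y', ..., y^(p)]`. One simple way, sketched below under the assumption of equally spaced samples and a signal that is locally well approximated by a polynomial, is to invert a truncated Taylor expansion over a window of `p + 1` samples. The helper name is hypothetical, and this is not necessarily the embedding used in the paper.

```python
import math
import numpy as np

def embed_generalized(y_window, dt):
    """Estimate [y, y', ..., y^(p)] at the centre of p + 1 equally spaced samples.

    Inverts the truncated Taylor expansion y(t + tau) = sum_i y^(i)(t) tau^i / i!.
    """
    m = len(y_window)
    p = m - 1
    offsets = (np.arange(m) - p / 2.0) * dt  # sample times relative to the window centre
    T = np.array([[tau**i / math.factorial(i) for i in range(m)] for tau in offsets])
    return np.linalg.solve(T, np.asarray(y_window, dtype=float))

t = np.array([0.4, 0.5, 0.6])
print(embed_generalized(1 + 2 * t + 3 * t**2, dt=0.1))  # approximately [2.75, 5.0, 6.0] at t = 0.5
```

For a multi-output system the function can be applied to each output channel separately. Higher orders `p` and smaller `dt` amplify measurement noise, because each derivative estimate effectively divides by a higher power of `dt`.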

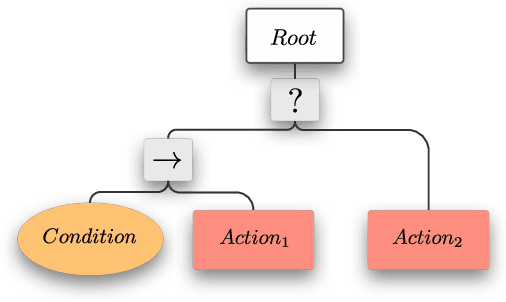

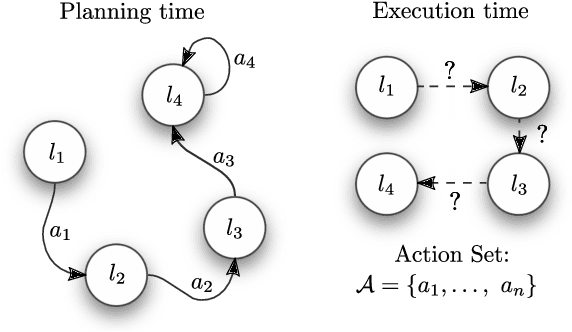

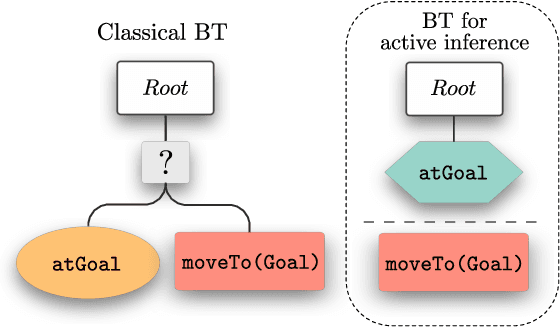

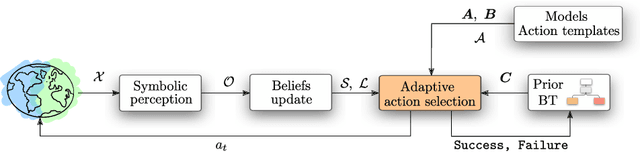

Active Inference and Behavior Trees for Reactive Action Planning and Execution in Robotics

Nov 19, 2020

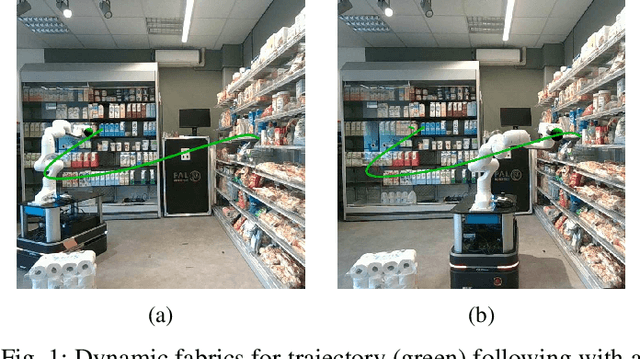

Abstract: This paper presents how the hybrid combination of behavior trees and the neuroscientific principle of active inference can be used for action planning and execution for reactive robot behaviors in dynamic environments. We show how complex robotic tasks can be formulated as a free-energy minimisation problem, and how state estimation and symbolic decision making are handled within the same framework. The general behavior is specified offline through behavior trees, where the leaf nodes represent desired states, not actions as in classical behavior trees. The decision of which action to execute to reach a state is left to the online active inference routine, in order to resolve unexpected contingencies. This hybrid combination improves the robustness of plans specified through behavior trees while also coping with the curse of dimensionality in active inference. The properties of the proposed algorithm are analysed in terms of robustness and convergence, and the theoretical results are validated using a mobile manipulator in a retail environment.
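The structural idea, leaves that encode desired states and an online routine that chooses the action, can be sketched in a few lines. Everything here is a simplifying assumption: the world is a dictionary of boolean facts, actions apply their effects instantly, and the online decision is a greedy lookup over applicable actions rather than the free-energy minimisation used in the paper. The node classes, actions, and the retail-style scenario are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

State = Dict[str, bool]
SUCCESS, RUNNING, FAILURE = "SUCCESS", "RUNNING", "FAILURE"

@dataclass
class Action:
    name: str
    precondition: Callable[[State], bool]
    effects: State

@dataclass
class DesiredStateLeaf:
    """Leaf that encodes a desired state (key == value) rather than an action."""
    key: str
    value: bool
    actions: List[Action]

    def tick(self, state: State, log: List[str]) -> str:
        if state.get(self.key) == self.value:
            return SUCCESS                   # desired state already holds
        applicable = [a for a in self.actions if a.precondition(state)]
        if not applicable:
            return FAILURE
        # Greedy stand-in for the online decision: prefer an applicable action
        # whose effects are predicted to reach the desired value of `key`.
        best = min(applicable,
                   key=lambda a: a.effects.get(self.key, state.get(self.key)) != self.value)
        state.update(best.effects)           # simulated world: effects apply at once
        log.append(best.name)
        return RUNNING

@dataclass
class Sequence:
    children: list = field(default_factory=list)

    def tick(self, state: State, log: List[str]) -> str:
        # Re-check every child from the left on each tick, so a lost goal is re-achieved.
        for child in self.children:
            status = child.tick(state, log)
            if status != SUCCESS:
                return status
        return SUCCESS

def run(tree, state, disturb_at=None, max_ticks=20):
    log: List[str] = []
    for t in range(max_ticks):
        if t == disturb_at:
            state["holding"] = False         # unexpected contingency: the item is dropped
        if tree.tick(state, log) == SUCCESS:
            return log
    raise RuntimeError("goal not reached within max_ticks")

move_to_item = Action("move_to_item", lambda s: True, {"at_item": True, "at_shelf": False})
pick = Action("pick", lambda s: s.get("at_item", False), {"holding": True})
move_to_shelf = Action("move_to_shelf", lambda s: True, {"at_shelf": True, "at_item": False})

tree = Sequence([
    DesiredStateLeaf("holding", True, [pick, move_to_item]),
    DesiredStateLeaf("at_shelf", True, [move_to_shelf]),
])

print(run(tree, {}))                # ['move_to_item', 'pick', 'move_to_shelf']
print(run(tree, {}, disturb_at=3))  # the same three actions, repeated after the drop
```

Because the `Sequence` re-checks its children from the left on every tick, dropping the item at the shelf makes the first leaf fail again, and the tree recovers without any explicit recovery branch.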

RRT-CoLearn: towards kinodynamic planning without numerical trajectory optimization

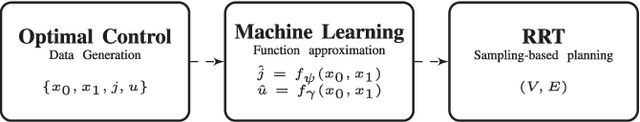

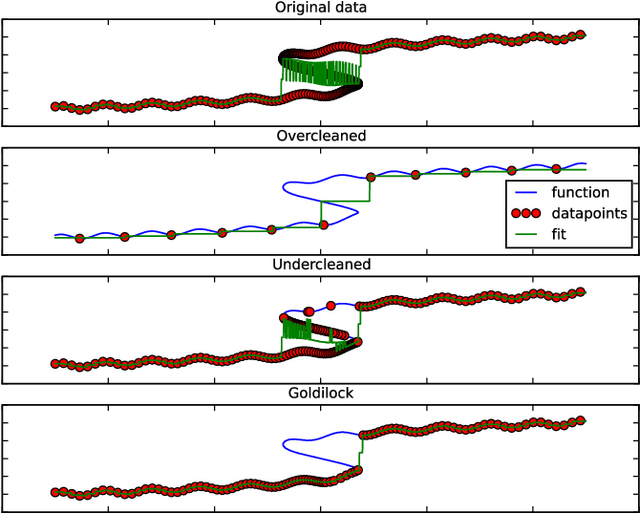

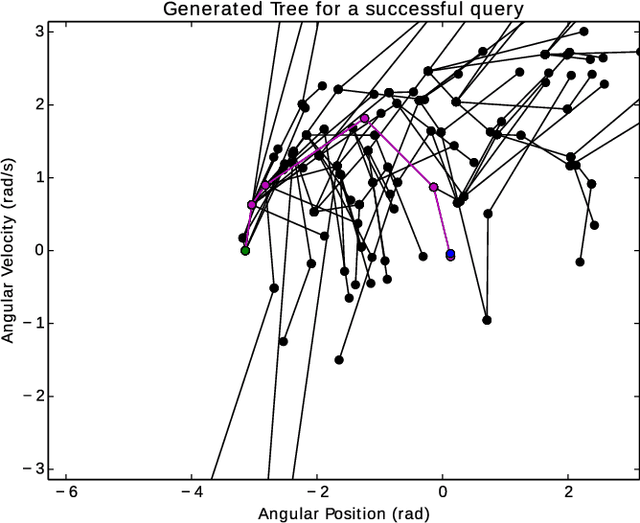

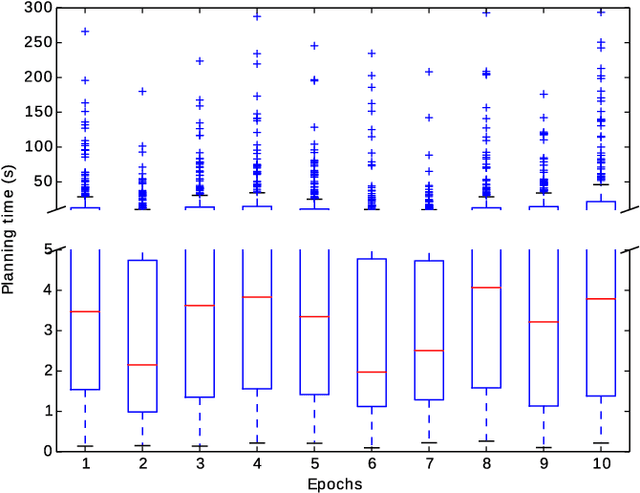

Oct 27, 2017

Abstract: Sampling-based kinodynamic planners, such as Rapidly-exploring Random Trees (RRTs), pose two fundamental challenges: computing a reliable (pseudo-)metric for the distance between two randomly sampled nodes, and computing a steering input to connect the nodes. At the core of both challenges is a Two-Point Boundary Value Problem, which is known to be NP-hard. Recently, the distance metric has been approximated using supervised learning, drastically reducing computation time. Previous work on such learning RRTs uses direct optimal control to generate the data for supervised learning. This paper proposes to use indirect optimal control instead, because it provides two benefits: it reduces the computational effort of generating the data, and it provides a low-dimensional parametrization of the action space. The latter allows us to learn both the distance metric and the steering input that connects two nodes, which eliminates the need for a local planner in learning RRTs. Experimental results on a pendulum swing-up show a 10-fold speed-up in both offline data generation and online planning time, leading to at least a 10-fold speed-up in overall planning time.
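The data-generation advantage of indirect optimal control can be seen on a system simpler than the pendulum used in the paper. Assuming a double integrator `x'' = u` with minimum-energy cost `integral of u^2 / 2` over a fixed horizon `T`, Pontryagin's conditions give `u = -lambda_v`, a constant `lambda_x`, and `lambda_v(t) = lambda_v0 - lambda_x t`, so every extremal is parametrized by two initial costates. Sampling those costates and rolling forward yields trajectories that are optimal between their own endpoints (for this linear-quadratic problem the conditions are also sufficient), so no two-point boundary value problem has to be solved. The pairs `(start, end) -> (cost, costates, T)` are what a regressor could learn as a distance metric and steering input; the regressor and the RRT are not shown, and all names and sampling ranges are hypothetical.

```python
import numpy as np

def costate_rollout(x0, v0, lam_x, lam_v0, T):
    """Closed-form extremal of a double integrator with cost int_0^T u^2 / 2 dt.

    Pontryagin: u = -lam_v, lam_x is constant, lam_v(t) = lam_v0 - lam_x * t,
    hence u(t) = -lam_v0 + lam_x * t. Returns (x(T), v(T), cost).
    """
    xT = x0 + v0 * T - lam_v0 * T**2 / 2 + lam_x * T**3 / 6
    vT = v0 - lam_v0 * T + lam_x * T**2 / 2
    cost = 0.5 * (lam_x**2 * T**3 / 3 - lam_x * lam_v0 * T**2 + lam_v0**2 * T)
    return xT, vT, cost

def generate_dataset(n, rng):
    """Sample (start, costates, T); every rollout is an extremal, so no TPBVP is solved."""
    X, Y = [], []
    for _ in range(n):
        x0, v0 = rng.uniform(-1, 1, size=2)
        lam_x, lam_v0 = rng.uniform(-2, 2, size=2)
        T = rng.uniform(0.5, 2.0)
        xT, vT, cost = costate_rollout(x0, v0, lam_x, lam_v0, T)
        X.append([x0, v0, xT, vT])           # regressor input: start and end states
        Y.append([cost, lam_x, lam_v0, T])   # targets: distance metric and steering parameters
    return np.array(X), np.array(Y)

X, Y = generate_dataset(1000, np.random.default_rng(0))
print(X.shape, Y.shape)                      # (1000, 4) (1000, 4)
```

As a check, moving a distance `d` from rest to rest in time `T` with `lambda_x = -12 d / T^3` and `lambda_v0 = -6 d / T^2` reproduces the known minimum-energy cost `6 d^2 / T^3`.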

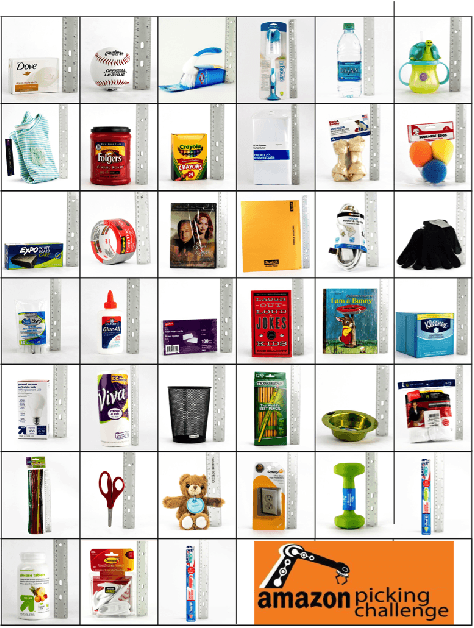

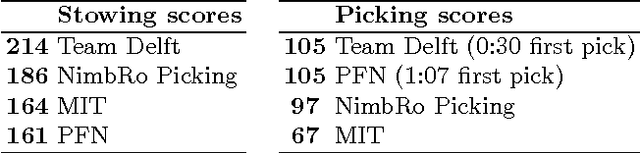

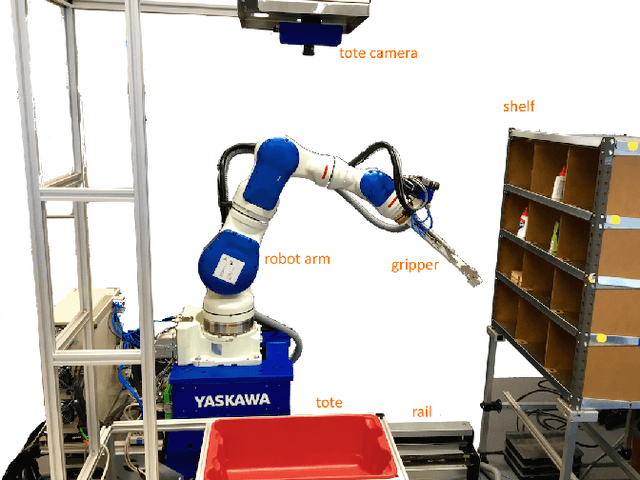

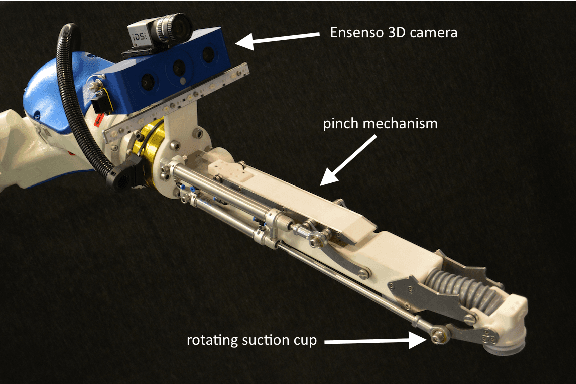

Team Delft's Robot Winner of the Amazon Picking Challenge 2016

Oct 18, 2016

Abstract: This paper describes Team Delft's robot, which won the Amazon Picking Challenge 2016, including both the Picking and the Stowing competitions. The goal of the challenge is to automate pick and place operations in unstructured environments, specifically the shelves in an Amazon warehouse. Team Delft's robot is based on an industrial robot arm, 3D cameras and a customized gripper. The robot's software uses ROS to integrate off-the-shelf components and modules developed specifically for the competition, implementing Deep Learning and other AI techniques for object recognition and pose estimation, grasp planning and motion planning. This paper describes the main components in the system, and discusses its performance and results at the Amazon Picking Challenge 2016 finals.
