Thomas Moerland

A Unified Framework for Zero-Shot Reinforcement Learning

Oct 23, 2025

Abstract:Zero-shot reinforcement learning (RL) has emerged as a setting for developing general agents in an unsupervised manner, capable of solving downstream tasks without additional training or planning at test-time. Unlike conventional RL, which optimizes policies for a fixed reward, zero-shot RL requires agents to encode representations rich enough to support immediate adaptation to any objective, drawing parallels to vision and language foundation models. Despite growing interest, the field lacks a common analytical lens. We present the first unified framework for zero-shot RL. Our formulation introduces a consistent notation and taxonomy that organizes existing approaches and allows direct comparison between them. Central to our framework is the classification of algorithms into two families: direct representations, which learn end-to-end mappings from rewards to policies, and compositional representations, which decompose the representation leveraging the substructure of the value function. Within this framework, we highlight shared principles and key differences across methods, and we derive an extended bound for successor-feature methods, offering a new perspective on their performance in the zero-shot regime. By consolidating existing work under a common lens, our framework provides a principled foundation for future research in zero-shot RL and outlines a clear path toward developing more general agents.
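As a rough illustration of the successor-feature idea mentioned in the abstract, the sketch below uses the standard decomposition Q(s, a) = psi(s, a) . w, where the reward is assumed to be linear in some features with task weights w. It is not taken from the paper: the names `q_values` and `greedy_action`, the action labels, and all numbers are hypothetical and chosen only to show how a new task vector can be evaluated without further training.

```python
def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def q_values(successor_features, task_weights):
    """Q(s, a) = psi(s, a) . w for every action a in one fixed state s."""
    return {
        action: dot(psi, task_weights)
        for action, psi in successor_features.items()
    }


def greedy_action(successor_features, task_weights):
    """Pick the action with the largest Q-value under the given task."""
    q = q_values(successor_features, task_weights)
    return max(q, key=q.get)


# Hypothetical successor features for one state with two actions and
# two-dimensional features (made-up numbers, for illustration only).
psi = {"left": [2.0, 0.5], "right": [0.5, 3.0]}

print(greedy_action(psi, [1.0, 0.0]))  # task that rewards feature 0
print(greedy_action(psi, [0.0, 1.0]))  # task that rewards feature 1
```

With these made-up numbers the first task selects `left` and the second selects `right`: the same learned quantities are reused, and only the task vector changes.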

A KL-regularization framework for learning to plan with adaptive priors

Oct 05, 2025

Abstract:Effective exploration remains a central challenge in model-based reinforcement learning (MBRL), particularly in high-dimensional continuous control tasks where sample efficiency is crucial. A prominent line of recent work leverages learned policies as proposal distributions for Model-Predictive Path Integral (MPPI) planning. Initial approaches update the sampling policy independently of the planner distribution, typically maximizing a learned value function with deterministic policy gradient and entropy regularization. However, because the states encountered during training depend on the MPPI planner, aligning the sampling policy with the planner improves the accuracy of value estimation and long-term performance. To this end, recent methods update the sampling policy by minimizing KL divergence to the planner distribution or by introducing planner-guided regularization into the policy update. In this work, we unify these MPPI-based reinforcement learning methods under a single framework by introducing Policy Optimization-Model Predictive Control (PO-MPC), a family of KL-regularized MBRL methods that integrate the planner's action distribution as a prior in policy optimization. By aligning the learned policy with the planner's behavior, PO-MPC allows more flexibility in the policy updates to trade off return maximization and KL divergence minimization. We clarify how prior approaches emerge as special cases of this family, and we explore previously unstudied variations. Our experiments show that these extended configurations yield significant performance improvements, advancing the state of the art in MPPI-based RL.
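The trade-off described in the abstract can be sketched in a few lines. The code below is a minimal sketch under stated assumptions, not the PO-MPC method itself: it uses scalar actions, the usual MPPI-style exponential weighting of sampled returns, a Gaussian fit to the weighted samples as the "planner distribution", and a generic objective of the form return minus a weighted KL term. The function names, the direction of the KL term, the `kl_weight` parameter, and all numbers are assumptions made for illustration.

```python
import math


def mppi_weights(returns, temperature=1.0):
    """Normalized weights proportional to exp(R_i / temperature)."""
    best = max(returns)  # subtract the max for numerical stability
    unnorm = [math.exp((r - best) / temperature) for r in returns]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def weighted_gaussian(samples, weights, min_std=1e-6):
    """Mean and standard deviation of scalar samples under the weights."""
    mean = sum(w * a for w, a in zip(weights, samples))
    var = sum(w * (a - mean) ** 2 for w, a in zip(weights, samples))
    return mean, max(math.sqrt(var), min_std)


def gaussian_kl(mu_p, sigma_p, mu_q, sigma_q):
    """KL(N(mu_p, sigma_p^2) || N(mu_q, sigma_q^2)) for scalar Gaussians."""
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2.0 * sigma_q ** 2)
        - 0.5
    )


def kl_regularized_objective(expected_return, kl_to_planner, kl_weight):
    """Quantity to maximize: return minus a weighted KL penalty."""
    return expected_return - kl_weight * kl_to_planner


# Hypothetical sampled actions and their estimated returns.
actions = [-1.0, 0.0, 1.0, 2.0]
returns = [0.0, 1.0, 3.0, 2.0]

weights = mppi_weights(returns, temperature=1.0)
planner_mu, planner_sigma = weighted_gaussian(actions, weights)

# Hypothetical current policy: a scalar Gaussian with mean 0 and std 1.
kl = gaussian_kl(0.0, 1.0, planner_mu, planner_sigma)
objective = kl_regularized_objective(expected_return=1.5, kl_to_planner=kl, kl_weight=0.1)
```

In this sketch, setting `kl_weight` to zero recovers pure return maximization, while a large value pushes the policy toward the planner's distribution.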

Chargax: A JAX Accelerated EV Charging Simulator

Jul 02, 2025

Abstract:Deep Reinforcement Learning can play a key role in addressing sustainable energy challenges. For instance, many grid systems are heavily congested, highlighting the urgent need to enhance operational efficiency. However, reinforcement learning approaches have traditionally been slow due to the high sample complexity and expensive simulation requirements. While recent works have effectively used GPUs to accelerate data generation by converting environments to JAX, these works have largely focussed on classical toy problems. This paper introduces Chargax, a JAX-based environment for realistic simulation of electric vehicle charging stations designed for accelerated training of RL agents. We validate our environment in a variety of scenarios based on real data, comparing reinforcement learning agents against baselines. Chargax delivers substantial computational performance improvements of over 100x-1000x over existing environments. Additionally, Chargax' modular architecture enables the representation of diverse real-world charging station configurations.

Reinforcement learning for Quantum Tiq-Taq-Toe

Nov 10, 2024

Abstract:Quantum Tiq-Taq-Toe is a well-known benchmark and playground for both quantum computing and machine learning. Despite its popularity, no reinforcement learning (RL) methods have been applied to Quantum Tiq-Taq-Toe. Although there has been some research on Quantum Chess, that game is significantly more complex in terms of computation and analysis. Therefore, we study the combination of quantum computing and reinforcement learning in Quantum Tiq-Taq-Toe, which may serve as an accessible testbed for the integration of both fields. Quantum games are challenging to represent classically due to their inherent partial observability and the potential for exponential state complexity. In Quantum Tiq-Taq-Toe, states are observed through Measurement (a 3x3 matrix of state probabilities) and Move History (a 9x9 matrix of entanglement relations), making strategy complex as each move can collapse the quantum state.

Reinforcement Learning for Sustainable Energy: A Survey

Jul 26, 2024

Abstract:The transition to sustainable energy is a key challenge of our time, requiring modifications in the entire pipeline of energy production, storage, transmission, and consumption. At every stage, new sequential decision-making challenges emerge, ranging from the operation of wind farms to the management of electrical grids or the scheduling of electric vehicle charging stations. All such problems are well suited for reinforcement learning, the branch of machine learning that learns behavior from data. Therefore, numerous studies have explored the use of reinforcement learning for sustainable energy. This paper surveys this literature with the intention of bridging both the underlying research communities: energy and machine learning. After a brief introduction of both fields, we systematically list relevant sustainability challenges, how they can be modeled as a reinforcement learning problem, and what solution approaches currently exist in the literature. Afterwards, we zoom out and identify overarching reinforcement learning themes that appear throughout sustainability, such as multi-agent, offline, and safe reinforcement learning. Lastly, we also cover standardization of environments, which will be crucial for connecting both research fields, and highlight potential directions for future work. In summary, this survey provides an extensive overview of reinforcement learning methods for sustainable energy, which may play a vital role in the energy transition.

RRT-CoLearn: towards kinodynamic planning without numerical trajectory optimization

Oct 27, 2017

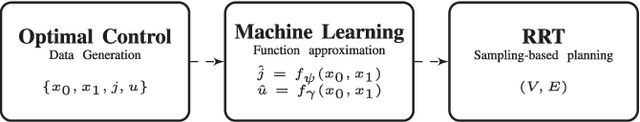

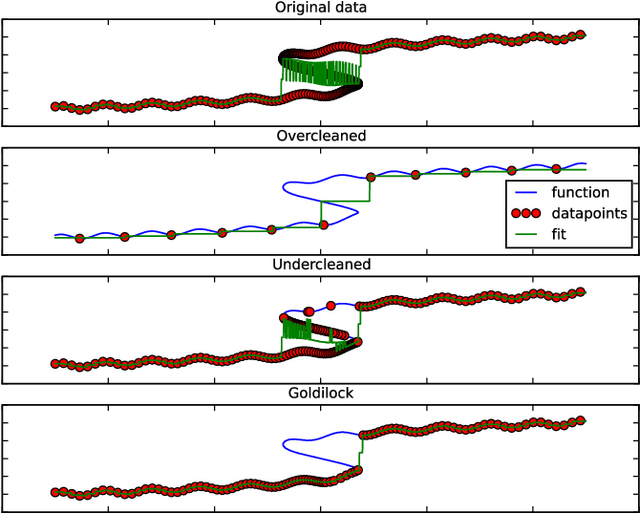

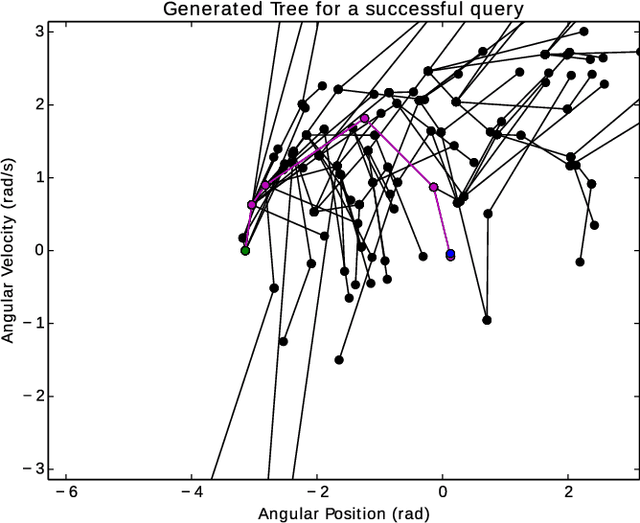

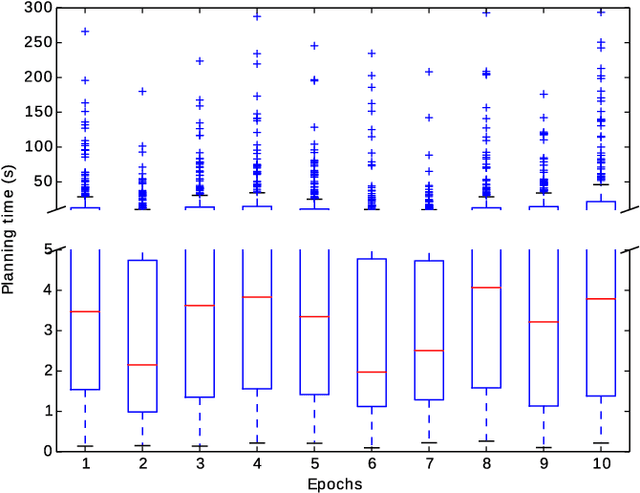

Abstract:Sampling-based kinodynamic planners, such as Rapidly-exploring Random Trees (RRTs), pose two fundamental challenges: computing a reliable (pseudo-)metric for the distance between two randomly sampled nodes, and computing a steering input to connect the nodes. The core of these challenges is a Two Point Boundary Value Problem, which is known to be NP-hard. Recently, the distance metric has been approximated using supervised learning, reducing computation time drastically. Previous work on such learning RRTs uses direct optimal control to generate the data for supervised learning. This paper proposes to use indirect optimal control instead, because it provides two benefits: it reduces the computational effort to generate the data, and it provides a low-dimensional parametrization of the action space. The latter allows us to learn both the distance metric and the steering input to connect two nodes. This eliminates the need for a local planner in learning RRTs. Experimental results on a pendulum swing-up show a 10-fold speed-up in both the offline data generation and the online planning time, leading to at least a 10-fold speed-up in the overall planning time.
