Wataru Ohata

Human-Robot Kinaesthetic Interaction Based on Free Energy Principle

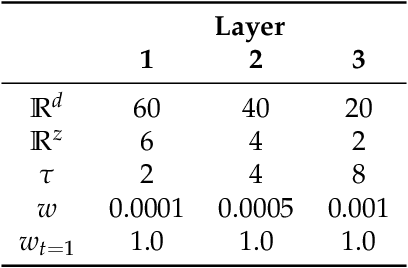

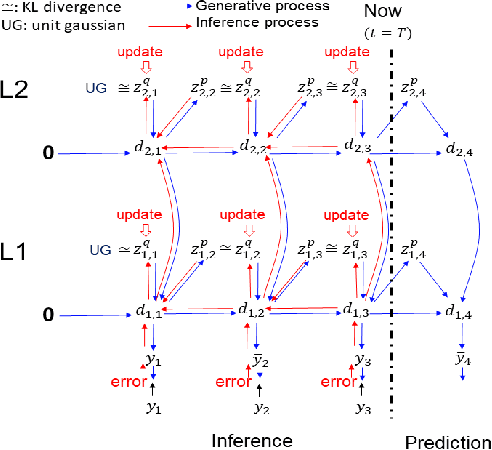

Mar 27, 2023

Abstract: The current study investigated possible human-robot kinaesthetic interaction using a variational recurrent neural network model, called PV-RNN, which is based on the free energy principle. Our prior robotic studies using PV-RNN showed that the nature of interactions between top-down expectation and bottom-up inference is strongly affected by a parameter, called the meta-prior $w$, which regulates the complexity term in free energy. The study also compares the counter force generated when trained transitions are induced by a human experimenter and when untrained transitions are induced. Our experimental results indicated that (1) the human experimenter needs more/less force to induce trained transitions when $w$ is set to larger/smaller values, and (2) the human experimenter needs more force to act on the robot when he attempts to induce untrained, as opposed to trained, movement pattern transitions. Our analysis of the time development of essential variables and values in PV-RNN during bodily interaction clarified the mechanism by which gaps in actional intentions between the human experimenter and the robot can be manifested as reaction forces between them.
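To make the role of the meta-prior concrete, the following is a minimal illustrative sketch, not the PV-RNN implementation. It assumes diagonal Gaussian prior and posterior distributions and a squared-error accuracy term; the function names `gaussian_kl` and `weighted_free_energy` are hypothetical. It only shows how a weight `w` on the complexity (KL divergence) term changes the cost of letting the posterior deviate from the prior.

```python
import math

def gaussian_kl(mu_q, sigma_q, mu_p, sigma_p):
    """KL[q || p] between diagonal Gaussians, summed over dimensions."""
    return sum(
        math.log(sp / sq) + (sq ** 2 + (mq - mp) ** 2) / (2.0 * sp ** 2) - 0.5
        for mq, sq, mp, sp in zip(mu_q, sigma_q, mu_p, sigma_p)
    )

def weighted_free_energy(x_obs, x_pred, mu_q, sigma_q, mu_p, sigma_p, w):
    """Accuracy cost plus complexity weighted by w (assumed meta-prior role)."""
    # Accuracy term: squared prediction error (an assumed Gaussian likelihood).
    accuracy_cost = 0.5 * sum((o - p) ** 2 for o, p in zip(x_obs, x_pred))
    # Complexity term: divergence of the posterior from the prior.
    complexity = gaussian_kl(mu_q, sigma_q, mu_p, sigma_p)
    return accuracy_cost + w * complexity

# Same prediction error and same posterior shift, two settings of w.
args = ([1.0], [0.5], [1.0], [1.0], [0.0], [1.0])
f_small_w = weighted_free_energy(*args, w=0.1)
f_large_w = weighted_free_energy(*args, w=1.0)
print(f_small_w, f_large_w)
```

Under these assumptions, a larger `w` makes the same shift of the posterior away from the prior more costly, so the top-down expectation is harder to revise from bottom-up error. That is consistent with the abstract's report that more force is needed when `w` is larger.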

Goal-directed Planning and Goal Understanding by Active Inference: Evaluation Through Simulated and Physical Robot Experiments

Feb 21, 2022

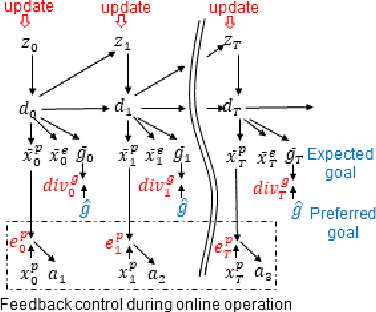

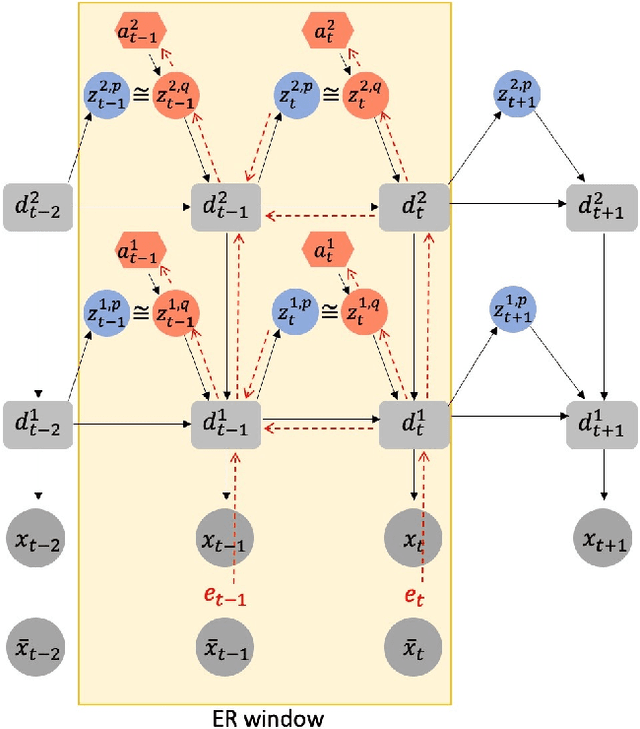

Abstract: We show that goal-directed action planning and generation in a teleological framework can be formulated using the free energy principle. The proposed model, which is built on a variational recurrent neural network model, is characterized by three essential features. These are that (1) goals can be specified for both static sensory states, e.g., for goal images to be reached, and dynamic processes, e.g., for moving around an object, (2) the model can not only generate goal-directed action plans, but can also understand goals by sensory observation, and (3) the model generates future action plans for given goals based on the best estimate of the current state, inferred using past sensory observations. The proposed model is evaluated by conducting experiments on a simulated mobile agent as well as on a real humanoid robot performing object manipulation.

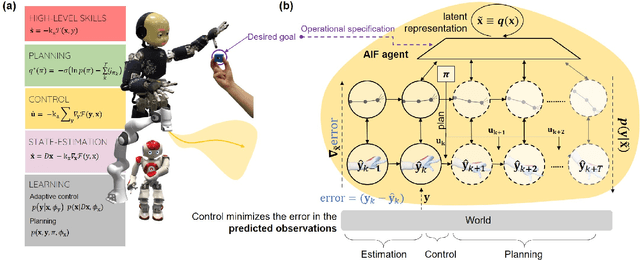

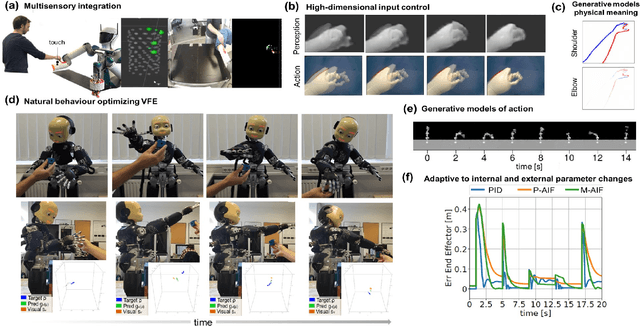

Active Inference in Robotics and Artificial Agents: Survey and Challenges

Dec 03, 2021

Abstract: Active inference is a mathematical framework which originated in computational neuroscience as a theory of how the brain implements action, perception and learning. Recently, it has been shown to be a promising approach to the problems of state-estimation and control under uncertainty, as well as a foundation for the construction of goal-driven behaviours in robotics and artificial agents in general. Here, we review the state-of-the-art theory and implementations of active inference for state-estimation, control, planning and learning; describing current achievements with a particular focus on robotics. We showcase relevant experiments that illustrate its potential in terms of adaptation, generalization and robustness. Furthermore, we connect this approach with other frameworks and discuss its expected benefits and challenges: a unified framework with functional biological plausibility using variational Bayesian inference.

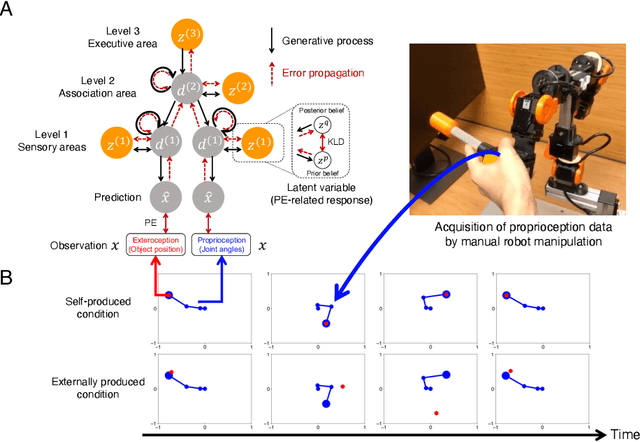

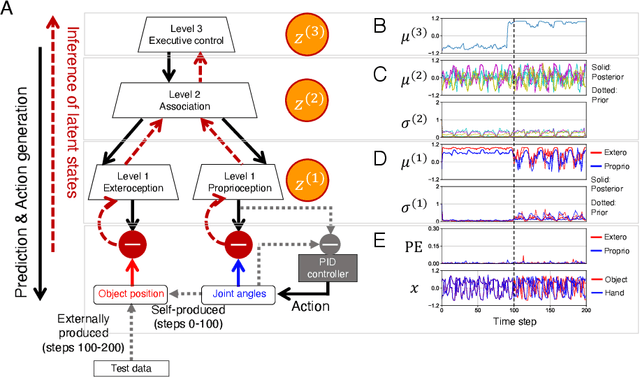

Sensory attenuation develops as a result of sensorimotor experience

Dec 01, 2021

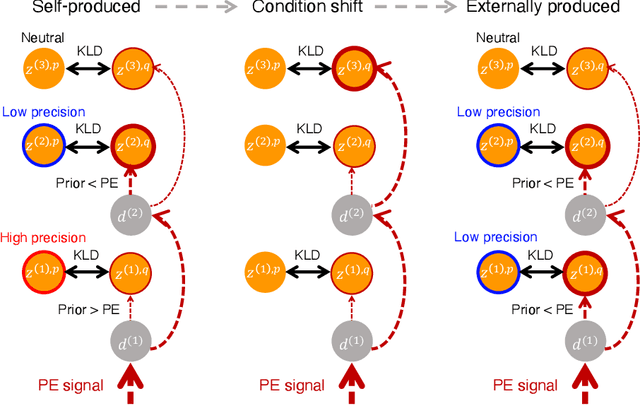

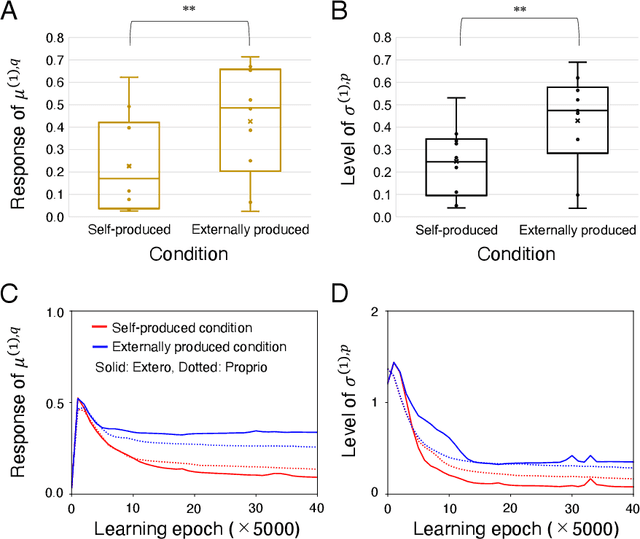

Abstract: The brain attenuates its responses to self-produced exteroceptions (e.g., we cannot tickle ourselves). Is this phenomenon, known as sensory attenuation, enabled innately, or is it acquired through learning? For decades, theoretical and biological studies have suggested related neural functions of sensory attenuation, such as an efference copy of the motor command and neuromodulation; however, the developmental aspect of sensory attenuation remains unexamined. Here, our simulation study using a recurrent neural network, operated according to a computational principle called free-energy minimization, shows that sensory attenuation can be developed as a free-energy state in the network through learning of two distinct types of sensorimotor patterns, characterized by self-produced or externally produced exteroceptive feedback. Simulation of the network, consisting of sensory (proprioceptive and exteroceptive), association, and executive areas, showed that shifts between these two types of sensorimotor patterns triggered transitions from one free-energy state to another in the network. Consequently, this induced shifts between attenuating and amplifying responses in the sensory areas. Furthermore, the executive area proactively adjusted the precision of the prediction in lower levels while being modulated by the bottom-up sensory prediction error signal in minimizing the free-energy, thereby serving as an information hub in generating the observed shifts. We also found that innate alterations in modulation of sensory-information flow induced some characteristics analogous to schizophrenia and autism spectrum disorder. This study provides a novel perspective on neural mechanisms underlying emergence of perceptual phenomena and psychiatric disorders.

Investigation of Multimodal and Agential Interactions in Human-Robot Imitation, based on frameworks of Predictive Coding and Active Inference

Feb 05, 2020

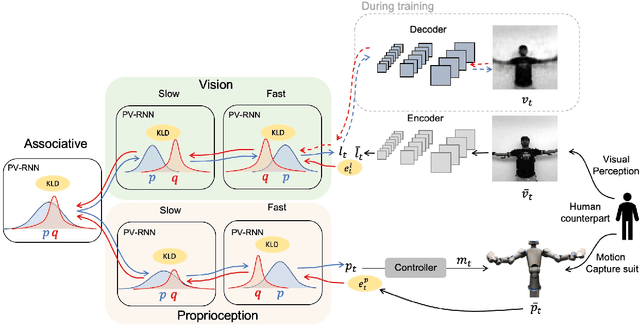

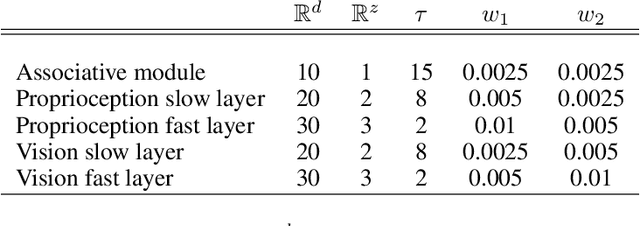

Abstract: This study proposes a model for multimodal, imitative interaction of agents, based on frameworks of predictive coding and active inference, using a variational Bayes recurrent neural network. The model dynamically predicts visual sensation and proprioception simultaneously through generative processes by associating both modalities. It also updates the internal state and generates actions by maximizing the lower bound. A key feature of the model is that the complexity of each modality, as well as of the entire network, can be regulated independently. We hypothesize that regulation of complexity offers a common perspective over two distinct properties of embodied agents: coordination of multimodalities and strength of agent intention or belief in social interactions. We evaluate the hypotheses by conducting experiments on imitative human-robot interactions in two different scenarios using the model. First, regulation of complexity was changed between the vision module and the proprioception module during learning. The results showed that complexity of the vision module should be more strongly regulated than that of proprioception because of its greater randomness. Second, the strength of complexity regulation of the whole network in the robot was varied during test imitation after learning. We found that this affects human-robot interactions significantly. With weaker regulation of complexity, the robot tends to move more egocentrically, without adapting to the human counterpart. On the other hand, with stronger regulation, the robot tends to follow its human counterpart by adapting its internal state. Our study concludes that the strength with which complexity is regulated significantly affects the nature of dynamic interactions between different modalities and between individual agents in a social setting.
