Fabien C. Y. Benureau

Morphological Wobbling Can Help Robots Learn

May 05, 2022

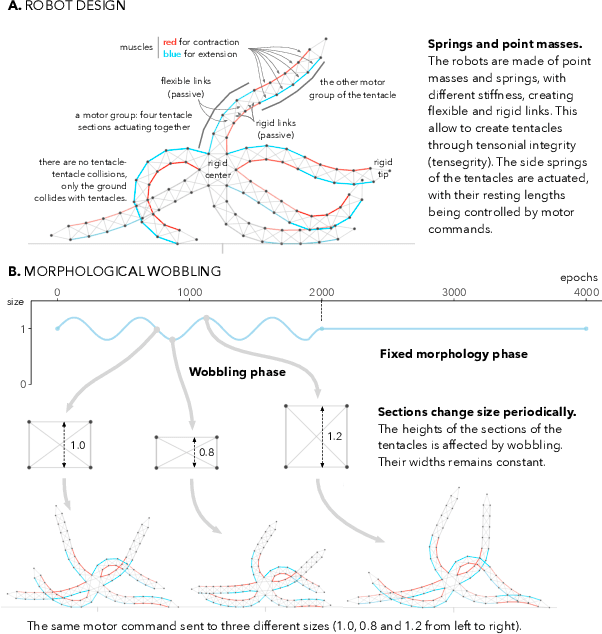

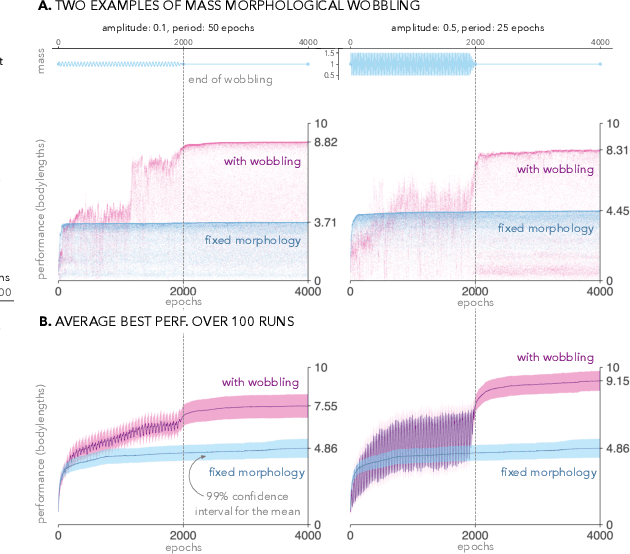

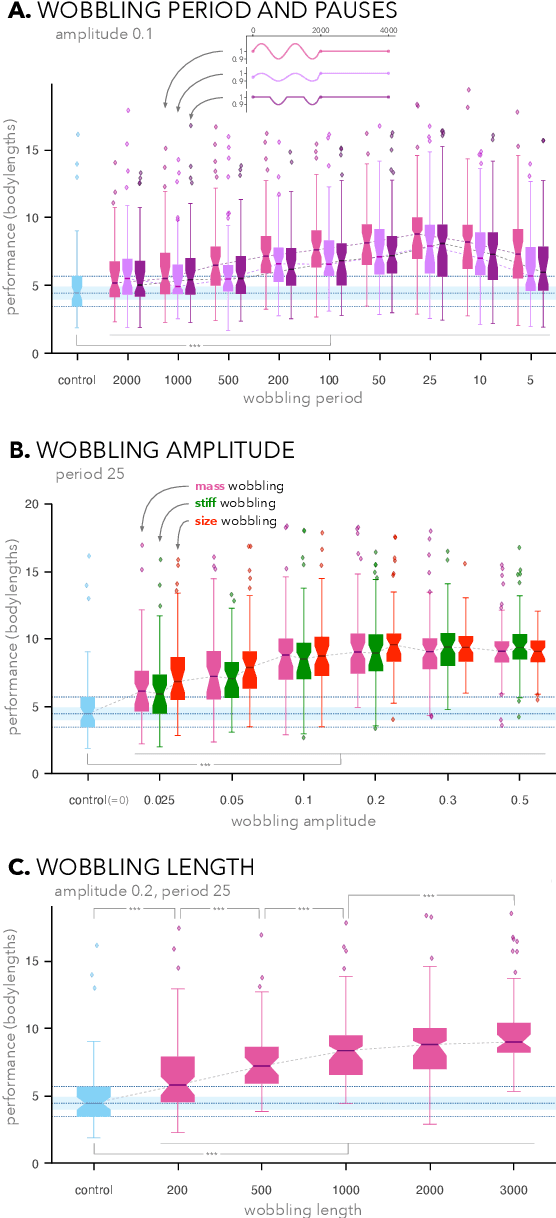

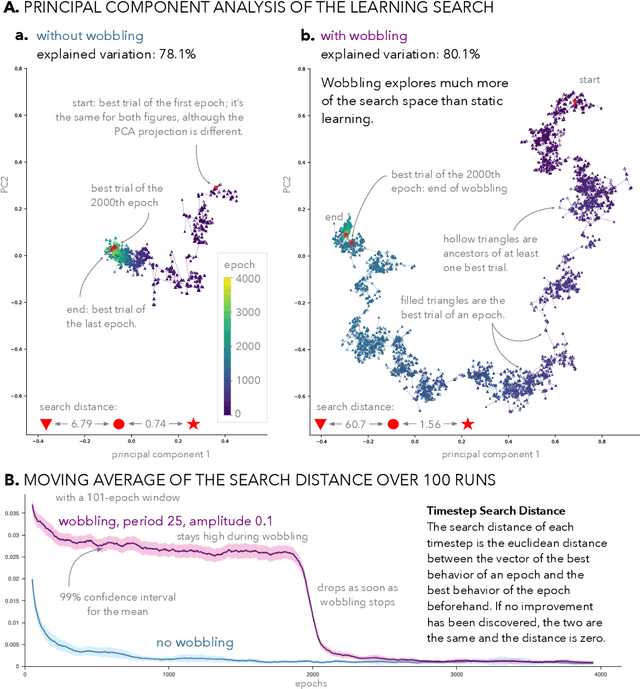

Abstract: We propose to make the physical characteristics of a robot oscillate while it learns to improve its behavioral performance. We consider quantities such as mass, actuator strength, and size that are usually fixed in a robot, and show that when those quantities oscillate at the beginning of the learning process on a simulated 2D soft robot, the performance on a locomotion task can be significantly improved. We investigate the dynamics of the phenomenon and conclude that in our case, surprisingly, a high-frequency oscillation with a large amplitude for a large portion of the learning duration leads to the highest performance benefits. Furthermore, we show that morphological wobbling significantly increases exploration of the search space.
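To make the idea of an oscillating physical quantity concrete, here is a minimal sketch of one possible wobbling schedule. It assumes a sinusoidal oscillation around the nominal value that stops after a fixed fraction of the learning steps; the function name `wobbled_value` and the parameters `amplitude`, `period`, and `wobble_fraction` are hypothetical and are not taken from the paper.

```python
import math


def wobbled_value(nominal, step, total_steps,
                  amplitude=0.5, period=10, wobble_fraction=0.5):
    """Value of a physical quantity (e.g. mass) at a given learning step.

    Assumption: the quantity oscillates sinusoidally around `nominal`
    during the first `wobble_fraction` of learning, then stays fixed.
    """
    if step >= wobble_fraction * total_steps:
        # Wobbling phase is over: the morphology is back to its nominal value.
        return nominal
    phase = 2.0 * math.pi * step / period
    return nominal * (1.0 + amplitude * math.sin(phase))


# Example: mass of a robot part over 100 learning steps.
masses = [wobbled_value(1.0, s, 100) for s in range(100)]
```

In this sketch, a shorter `period` gives a higher-frequency oscillation, and a larger `wobble_fraction` keeps the morphology wobbling for a larger portion of the learning duration.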

Goal-directed Planning and Goal Understanding by Active Inference: Evaluation Through Simulated and Physical Robot Experiments

Feb 21, 2022

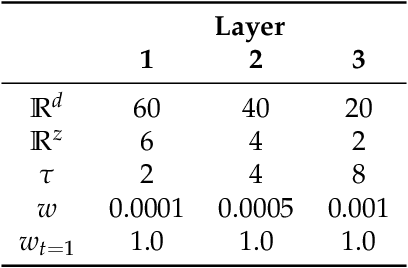

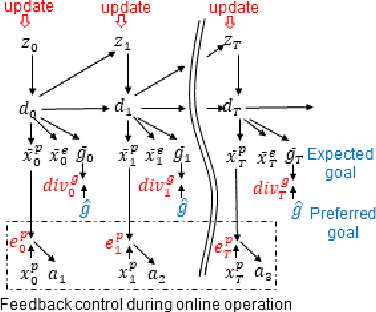

Abstract: We show that goal-directed action planning and generation in a teleological framework can be formulated using the free energy principle. The proposed model, which is built on a variational recurrent neural network model, is characterized by three essential features: (1) goals can be specified both for static sensory states, e.g., goal images to be reached, and for dynamic processes, e.g., moving around an object; (2) the model can not only generate goal-directed action plans, but can also understand goals by sensory observation; and (3) the model generates future action plans for given goals based on the best estimate of the current state, inferred using past sensory observations. The proposed model is evaluated by conducting experiments on a simulated mobile agent as well as on a real humanoid robot performing object manipulation.

Morphological Development at the Evolutionary Timescale: Robotic Developmental Evolution

Oct 28, 2020

Abstract: Evolution and development operate at different timescales: generations for the one, a lifetime for the other. These two processes, the basis of much of life on earth, interact in many non-trivial ways, but their temporal hierarchy, with evolution overarching development, is observed for all multicellular lifeforms. When designing robots, however, this tenet lifts: it becomes, however natural, a design choice. We propose to invert this temporal hierarchy and design a developmental process happening at the phylogenetic timescale. Over a classic evolutionary search aimed at finding good gaits for a tentacle robot, we add a developmental process over the robots' morphologies. In each generation, the morphology of the robots does not change. But from one generation to the next, the morphology develops. Much like we become bigger, stronger and heavier as we age, our robots are bigger, stronger and heavier with each passing generation. Our robots start with baby morphologies and, a few thousand generations later, end up with adult ones. We show that this produces better and qualitatively different gaits than an evolutionary search with only adult robots, and that it prevents premature convergence by fostering exploration. This method is conceptually simple, can be effective on small or large populations of robots, and is intrinsic to the robot and its morphology, and thus not specific to the task and the fitness function it is evaluated on. Furthermore, by recasting the evolutionary search as a learning process, these results can be viewed in the context of developmental learning robotics.

Diversity-Driven Selection of Exploration Strategies in Multi-Armed Bandits

Aug 23, 2018

Abstract: We consider a scenario where an agent has multiple available strategies to explore an unknown environment. For each new interaction with the environment, the agent must select which exploration strategy to use. We provide a new strategy-agnostic method that treats the situation as a Multi-Armed Bandits problem where the reward signal is the diversity of effects that each strategy produces. We test the method empirically on a simulated planar robotic arm, and establish both that the method is able to discriminate between strategies of dissimilar quality, even when the differences are tenuous, and that the resulting performance is competitive with the best fixed mixture of strategies.
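As an illustration of treating strategy selection as a bandit problem with a diversity reward, here is a minimal sketch. It is not the paper's algorithm: it assumes an epsilon-greedy bandit, 2D effects in the unit square, and a diversity reward of 1 when an effect lands in a grid cell not visited before. The names `cell_of`, `run_bandit`, `narrow`, and `wide` are hypothetical.

```python
import random


def cell_of(effect, bins=10):
    """Map a 2D effect in [0, 1]^2 to a grid cell (assumed diversity measure)."""
    return tuple(min(int(x * bins), bins - 1) for x in effect)


def run_bandit(strategies, steps=200, epsilon=0.1, window=50, seed=0):
    """Epsilon-greedy choice among exploration strategies.

    Each strategy is a function taking a random generator and returning
    a 2D effect. The reward is 1.0 if the effect falls in a new grid cell.
    """
    rng = random.Random(seed)
    visited = set()
    rewards = [[] for _ in strategies]
    counts = [0] * len(strategies)
    for _ in range(steps):
        # Mean reward over the recent window; untried arms start optimistic.
        means = [sum(r[-window:]) / len(r[-window:]) if r else 1.0
                 for r in rewards]
        if rng.random() < epsilon:
            arm = rng.randrange(len(strategies))
        else:
            arm = max(range(len(strategies)), key=lambda i: means[i])
        cell = cell_of(strategies[arm](rng))
        reward = 0.0 if cell in visited else 1.0
        visited.add(cell)
        rewards[arm].append(reward)
        counts[arm] += 1
    return counts, len(visited)


# Two toy strategies: one always produces nearly the same effect,
# the other spreads its effects over the whole unit square.
def narrow(rng):
    return (0.5 + 0.01 * rng.random(), 0.5 + 0.01 * rng.random())


def wide(rng):
    return (rng.random(), rng.random())


counts, covered = run_bandit([narrow, wide])
```

In this toy setup the bandit should end up selecting `wide` far more often than `narrow`, because only `wide` keeps producing new effects. The windowed mean lets the estimate track the fact that diversity rewards shrink as the effect space fills up.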
