Mohamed Baioumy

Monte Carlo Tree Search with Boltzmann Exploration

Apr 11, 2024

Abstract: Monte-Carlo Tree Search (MCTS) methods, such as Upper Confidence Bound applied to Trees (UCT), are instrumental to automated planning techniques. However, UCT can be slow to explore an optimal action when it initially appears inferior to other actions. Maximum ENtropy Tree-Search (MENTS) incorporates the maximum entropy principle into an MCTS approach, utilising Boltzmann policies to sample actions, naturally encouraging more exploration. In this paper, we highlight a major limitation of MENTS: optimal actions for the maximum entropy objective do not necessarily correspond to optimal actions for the original objective. We introduce two algorithms, Boltzmann Tree Search (BTS) and Decaying ENtropy Tree-Search (DENTS), that address this limitation and preserve the benefits of Boltzmann policies, such as allowing actions to be sampled faster by using the Alias method. Our empirical analysis shows that our algorithms perform consistently well across several benchmark domains, including the game of Go.
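The abstract mentions sampling actions from a Boltzmann policy with the Alias method. The following is a minimal sketch of that combination, not the paper's implementation: the function names, the temperature parameter, and the example action values are all assumptions made for illustration. It builds a softmax distribution over action-value estimates and then a Vose-style alias table, so that each subsequent sample costs constant time.

```python
import math
import random


def boltzmann_probs(q_values, temperature):
    """Softmax over action-value estimates (hypothetical inputs)."""
    m = max(q_values)  # subtract the max for numerical stability
    weights = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


def build_alias_table(probs):
    """Vose's alias method: O(n) setup, O(1) per sample afterwards."""
    n = len(probs)
    scaled = [p * n for p in probs]
    prob = [0.0] * n
    alias = [0] * n
    small = [i for i, s in enumerate(scaled) if s < 1.0]
    large = [i for i, s in enumerate(scaled) if s >= 1.0]
    while small and large:
        s = small.pop()
        l = large.pop()
        prob[s] = scaled[s]
        alias[s] = l
        # The large entry donates mass to fill the small entry's column.
        scaled[l] = scaled[l] + scaled[s] - 1.0
        (small if scaled[l] < 1.0 else large).append(l)
    # Leftover entries have scaled mass of 1 up to rounding error.
    for i in large + small:
        prob[i] = 1.0
        alias[i] = i
    return prob, alias


def sample_action(prob, alias, rng=random):
    """Draw one action index from the alias table."""
    i = rng.randrange(len(prob))
    return i if rng.random() < prob[i] else alias[i]


# Example with made-up action values: the second action is most likely.
probs = boltzmann_probs([1.0, 2.0, 0.5], temperature=0.5)
prob, alias = build_alias_table(probs)
action = sample_action(prob, alias)
```

The table has to be rebuilt whenever the value estimates change, so in a tree search the benefit depends on how often a node's distribution is reused between updates.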

* Camera-ready version of NeurIPS 2023 paper

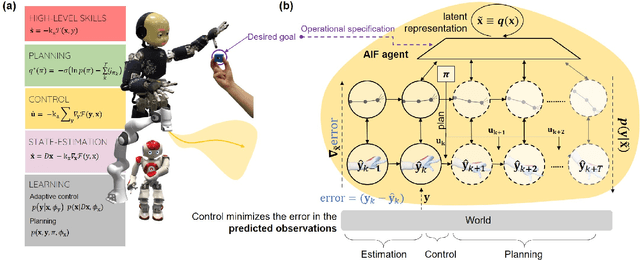

Unbiased Active Inference for Classical Control

Jul 27, 2022

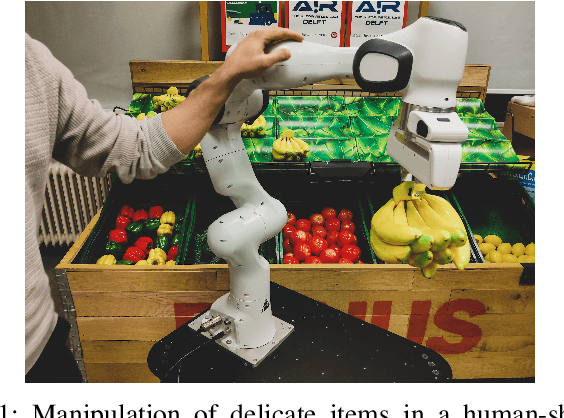

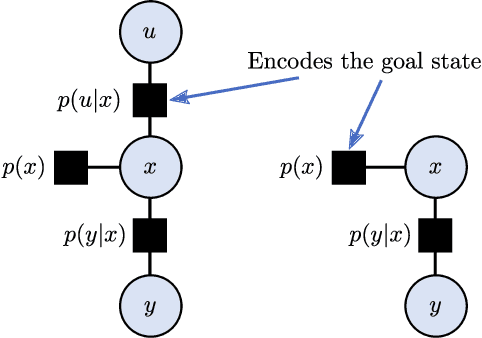

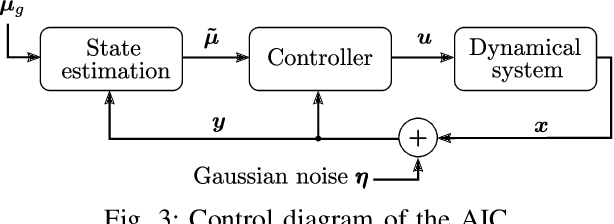

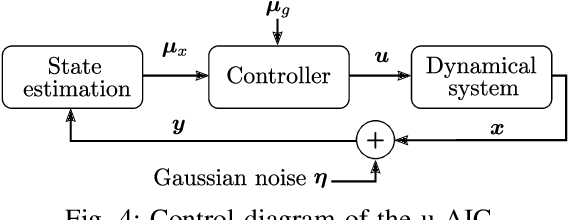

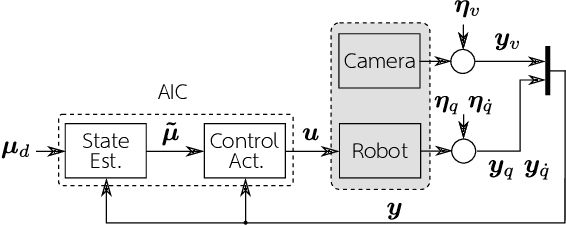

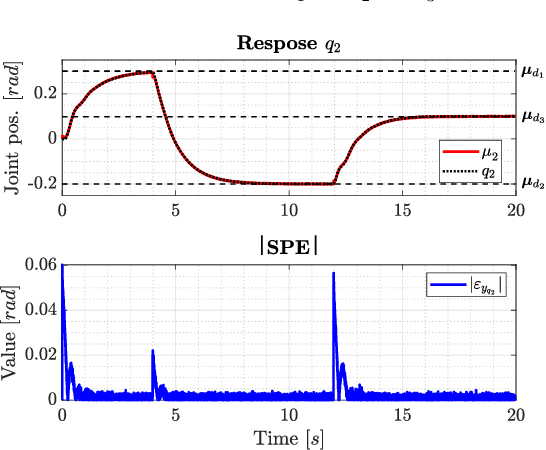

Abstract: Active inference is a mathematical framework that originated in computational neuroscience. Recently, it has been demonstrated as a promising approach for constructing goal-driven behavior in robotics. Specifically, the active inference controller (AIC) has been successful on several continuous control and state-estimation tasks. Despite its relative success, some established design choices lead to a number of practical limitations for robot control. These include having a biased estimate of the state, and only an implicit model of control actions. In this paper, we highlight these limitations and propose an extended version of the unbiased active inference controller (u-AIC). The u-AIC maintains all the compelling benefits of the AIC and removes its limitations. Simulation results on a 2-DOF arm and experiments on a real 7-DOF manipulator show the improved performance of the u-AIC with respect to the standard AIC. The code can be found at https://github.com/cpezzato/unbiased_aic.

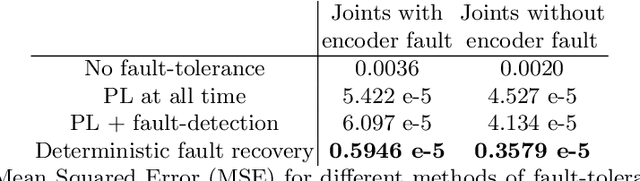

Beta Residuals: Improving Fault-Tolerant Control for Sensory Faults via Bayesian Inference and Precision Learning

Apr 17, 2022

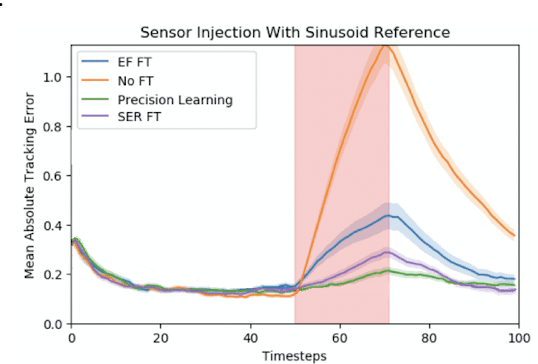

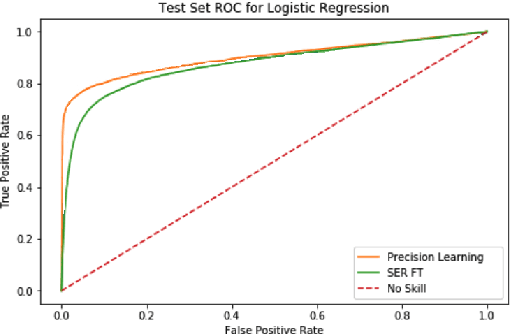

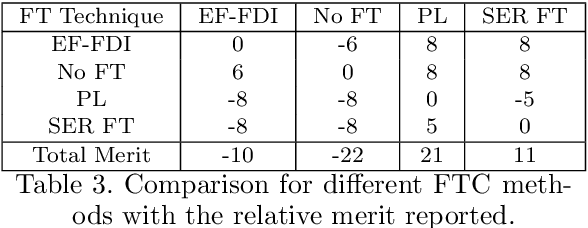

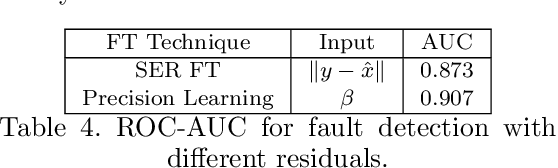

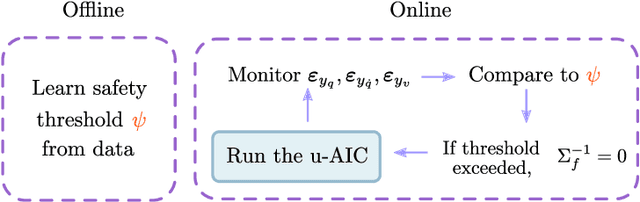

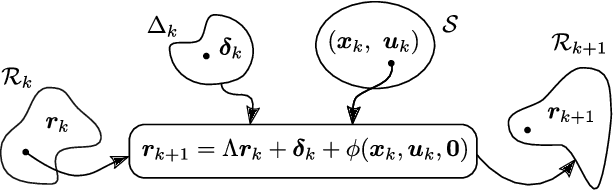

Abstract: Model-based fault-tolerant control (FTC) often consists of two distinct steps: fault detection & isolation (FDI), and fault accommodation. In this work we investigate posing fault-tolerant control as a single Bayesian inference problem. Previous work showed that precision learning allows for stochastic FTC without an explicit fault detection step. While this leads to implicit fault recovery, information on sensor faults is not provided, which may be essential for triggering other impact-mitigation actions. In this paper, we introduce a precision-learning based Bayesian FTC approach and a novel beta residual for fault detection. Simulation results are presented, supporting the use of the beta residual over competing approaches.

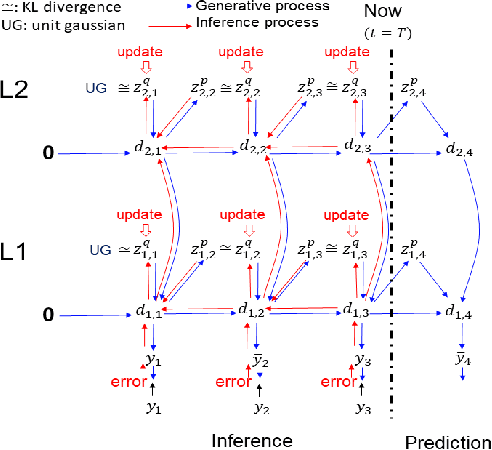

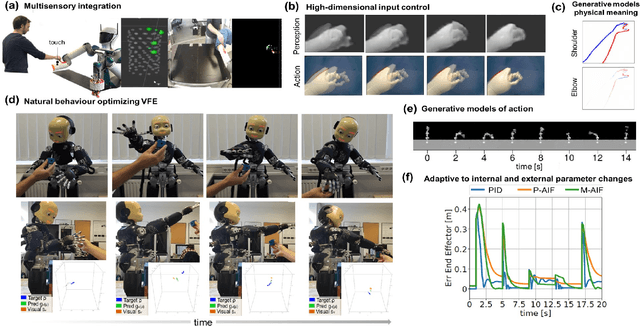

Active Inference in Robotics and Artificial Agents: Survey and Challenges

Dec 03, 2021

Abstract: Active inference is a mathematical framework which originated in computational neuroscience as a theory of how the brain implements action, perception and learning. Recently, it has been shown to be a promising approach to the problems of state-estimation and control under uncertainty, as well as a foundation for the construction of goal-driven behaviours in robotics and artificial agents in general. Here, we review the state-of-the-art theory and implementations of active inference for state-estimation, control, planning and learning, describing current achievements with a particular focus on robotics. We showcase relevant experiments that illustrate its potential in terms of adaptation, generalization and robustness. Furthermore, we connect this approach with other frameworks and discuss its expected benefits and challenges: a unified framework with functional biological plausibility using variational Bayesian inference.

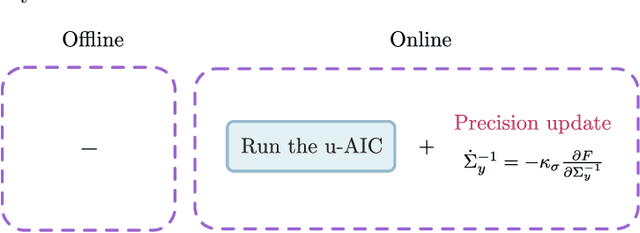

Towards Stochastic Fault-tolerant Control using Precision Learning and Active Inference

Sep 13, 2021

Abstract: This work presents a fault-tolerant control scheme for sensory faults in robotic manipulators based on active inference. In the majority of existing schemes, a binary decision of whether a sensor is healthy (functional) or faulty is made based on measured data. The decision boundary is called a threshold and it is usually deterministic. Once a sensor is judged faulty, fault recovery is obtained by excluding the malfunctioning sensor. We propose a stochastic fault-tolerant scheme based on active inference and precision learning which does not require a priori threshold definitions to trigger fault recovery. Instead, the sensor precision, which represents its health status, is learned online in a model-free way, allowing the system to exclude a failing unit gradually rather than abruptly. Experiments on a robotic manipulator show promising results and directions for future work are discussed.
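To illustrate how a precision can act as a continuous health weight instead of a hard threshold, here is a minimal sketch using standard precision-weighted fusion of Gaussian sensor readings. It is not the paper's controller or its precision-learning rule: the function name, the readings, and the hand-picked decay schedule (standing in for a learned precision) are assumptions for illustration only.

```python
def fuse_readings(readings, precisions):
    """Precision-weighted average of sensor readings (Gaussian fusion)."""
    total = sum(precisions)
    if total <= 0.0:
        raise ValueError("at least one sensor needs positive precision")
    return sum(p * y for p, y in zip(precisions, readings)) / total


# Hypothetical scenario: sensor 0 is healthy and reads 1.0, sensor 1 is
# faulty and reads 5.0. The shrinking precision of sensor 1 is a stand-in
# for a value that would be learned online.
readings = [1.0, 5.0]
fused_trace = []
for faulty_precision in [1.0, 0.5, 0.1, 0.01]:
    fused_trace.append(fuse_readings(readings, [1.0, faulty_precision]))
```

As the faulty sensor's precision shrinks, the fused estimate moves smoothly toward the healthy reading, which is the gradual exclusion the abstract contrasts with a binary healthy/faulty decision.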

On Solving a Stochastic Shortest-Path Markov Decision Process as Probabilistic Inference

Sep 13, 2021

Abstract: Previous work on planning as active inference addresses finite horizon problems and solutions valid for online planning. We propose solving the general Stochastic Shortest-Path Markov Decision Process (SSP MDP) as probabilistic inference. Furthermore, we discuss online and offline methods for planning under uncertainty. In an SSP MDP, the horizon is indefinite and unknown a priori. SSP MDPs generalize finite and infinite horizon MDPs and are widely used in the artificial intelligence community. Additionally, we highlight some of the differences between solving an MDP using dynamic programming approaches widely used in the artificial intelligence community and approaches used in the active inference community.
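For readers unfamiliar with the dynamic programming side of that comparison, the following is a minimal sketch of value iteration on a tiny SSP MDP. It shows the classical approach only, not the probabilistic-inference method the paper proposes. The three-state problem, its action names, costs, and transition probabilities are invented for illustration.

```python
def ssp_value_iteration(transitions, costs, goal, tol=1e-10, max_iters=10_000):
    """Minimise expected cost-to-goal; the goal state has value 0."""
    values = {s: 0.0 for s in transitions}
    values[goal] = 0.0

    def q_value(s, a):
        # Immediate cost plus expected cost-to-go of the successor state.
        return costs[s][a] + sum(
            p * values[s2] for s2, p in transitions[s][a].items()
        )

    for _ in range(max_iters):
        delta = 0.0
        for s in transitions:
            if s == goal:
                continue
            best = min(q_value(s, a) for a in transitions[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    policy = {
        s: min(transitions[s], key=lambda a: q_value(s, a))
        for s in transitions
        if s != goal
    }
    return values, policy


# Hypothetical problem: from s0, "risky" reaches the goal with
# probability 0.8 and otherwise stays put; "safe" detours via s1.
transitions = {
    "s0": {"safe": {"s1": 1.0}, "risky": {"goal": 0.8, "s0": 0.2}},
    "s1": {"go": {"goal": 1.0}},
}
costs = {"s0": {"safe": 1.0, "risky": 1.0}, "s1": {"go": 1.0}}
values, policy = ssp_value_iteration(transitions, costs, goal="goal")
```

There is no fixed horizon in this loop: the number of steps to reach the goal is random, and the values converge because every policy considered here reaches the goal with probability one.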

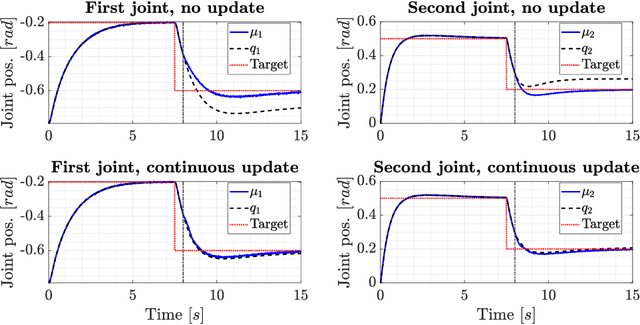

Fault-tolerant Control of Robot Manipulators with Sensory Faults using Unbiased Active Inference

Apr 05, 2021

Abstract: This work presents a novel fault-tolerant control scheme based on active inference. Specifically, it introduces a new formulation of active inference which, unlike previous solutions, provides unbiased state estimation and simplifies the definition of probabilistically robust thresholds for fault-tolerant control of robotic systems using the free-energy. The proposed solution makes use of the sensory prediction errors in the free-energy for the generation of residuals and thresholds for fault detection and isolation of sensory faults, and it does not require additional controllers for fault recovery. Results validating the benefits in a simulated 2-DOF manipulator are presented, and future directions to improve the current fault recovery approach are discussed.

Active Inference for Integrated State-Estimation, Control, and Learning

May 12, 2020

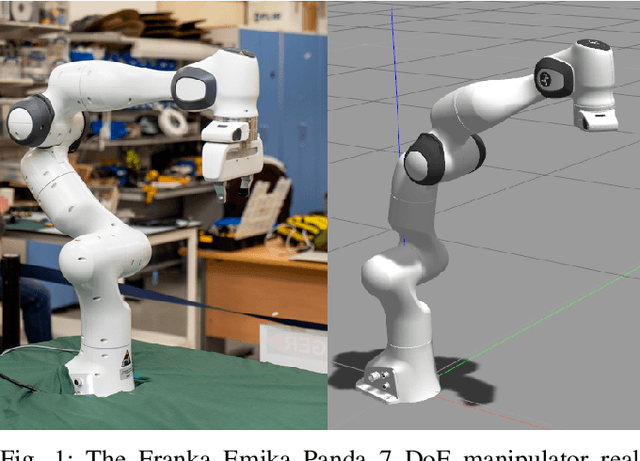

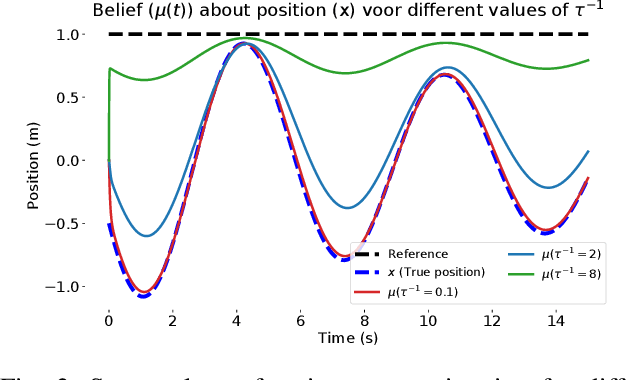

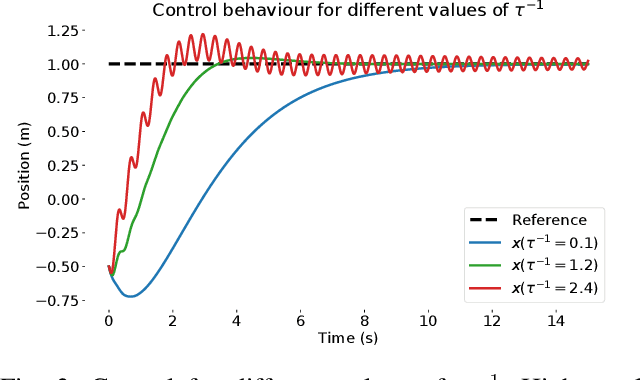

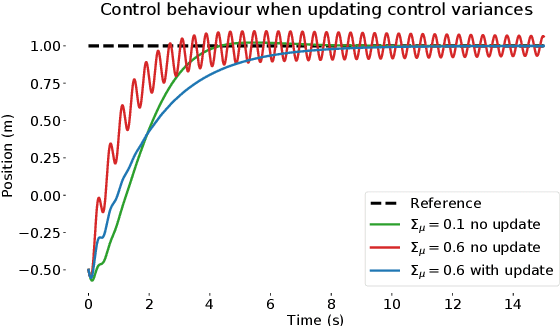

Abstract: This work presents an approach for control, state-estimation and learning model (hyper)parameters for robotic manipulators. It is based on the active inference framework, prominent in computational neuroscience as a theory of the brain, where behaviour arises from minimizing variational free-energy. The robotic manipulator shows adaptive and robust behaviour compared to state-of-the-art methods. Additionally, we show the exact relationship to classic methods such as PID control. Finally, we show that by learning a temporal parameter and model variances, our approach can deal with unmodelled dynamics, damp oscillations, and remain robust against disturbances and poor initial parameters. The approach is validated on the 'Franka Emika Panda' 7 DoF manipulator.
