Malte Jung

Large Language Model Use Impact Locus of Control

May 16, 2025

Abstract:As AI tools increasingly shape how we write, they may also quietly reshape how we perceive ourselves. This paper explores the psychological impact of co-writing with AI on people's locus of control. Through an empirical study with 462 participants, we found that employment status plays a critical role in shaping users' reliance on AI and their locus of control. Current results demonstrated that employed participants displayed higher reliance on AI and a shift toward internal control, while unemployed users tended to experience a reduction in personal agency. Through quantitative results and qualitative observations, this study opens a broader conversation about AI's role in shaping personal agency and identity.

ERR@HRI 2024 Challenge: Multimodal Detection of Errors and Failures in Human-Robot Interactions

Jul 08, 2024

Abstract:Despite the recent advancements in robotics and machine learning (ML), the deployment of autonomous robots in our everyday lives is still an open challenge. This is due to multiple reasons among which are their frequent mistakes, such as interrupting people or having delayed responses, as well as their limited ability to understand human speech, i.e., failure in tasks like transcribing speech to text. These mistakes may disrupt interactions and negatively influence human perception of these robots. To address this problem, robots need to have the ability to detect human-robot interaction (HRI) failures. The ERR@HRI 2024 challenge tackles this by offering a benchmark multimodal dataset of robot failures during human-robot interactions (HRI), encouraging researchers to develop and benchmark multimodal machine learning models to detect these failures. We created a dataset featuring multimodal non-verbal interaction data, including facial, speech, and pose features from video clips of interactions with a robotic coach, annotated with labels indicating the presence or absence of robot mistakes, user awkwardness, and interaction ruptures, allowing for the training and evaluation of predictive models. Challenge participants have been invited to submit their multimodal ML models for detection of robot errors and to be evaluated against various performance metrics such as accuracy, precision, recall, F1 score, with and without a margin of error reflecting the time-sensitivity of these metrics. The results of this challenge will help the research field in better understanding the robot failures in human-robot interactions and designing autonomous robots that can mitigate their own errors after successfully detecting them.
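The abstract mentions precision, recall, and F1 computed "with and without a margin of error" but does not spell out the matching protocol. The following is a minimal sketch of one plausible reading, assuming errors are represented as event timestamps in seconds and that a predicted event counts as correct when it falls within `margin` seconds of a not-yet-matched ground-truth event. The function name `f1_with_margin`, the greedy nearest-match rule, and the timestamp representation are all assumptions for illustration, not the challenge's official evaluation code.

```python
def f1_with_margin(true_times, pred_times, margin=0.0):
    """Return (precision, recall, f1) for event detection with a time tolerance.

    Hypothetical helper: each prediction may match at most one ground-truth
    event, and only if the two timestamps differ by at most `margin` seconds.
    """
    unmatched = sorted(true_times)  # ground-truth events still available
    true_positives = 0
    for p in sorted(pred_times):
        # Find the closest unmatched ground-truth event inside the margin.
        best = None
        for idx, t in enumerate(unmatched):
            if abs(t - p) <= margin:
                if best is None or abs(t - p) < abs(unmatched[best] - p):
                    best = idx
        if best is not None:
            unmatched.pop(best)
            true_positives += 1

    false_positives = len(pred_times) - true_positives
    false_negatives = len(true_times) - true_positives

    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    truth = [1.0, 5.0, 9.0]
    preds = [1.4, 5.0, 7.0]
    print(f1_with_margin(truth, preds, margin=0.0))
    print(f1_with_margin(truth, preds, margin=0.5))
```

With a zero margin only exact timestamp matches count, so a detection that is slightly late is scored as both a false positive and a false negative. Widening the margin forgives small timing offsets, which is one way to reflect the time-sensitivity the abstract refers to.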

Understanding Entrainment in Human Groups: Optimising Human-Robot Collaboration from Lessons Learned during Human-Human Collaboration

Feb 23, 2024

Abstract:Successful entrainment during collaboration positively affects trust, willingness to collaborate, and likeability towards collaborators. In this paper, we present a mixed-method study to investigate characteristics of successful entrainment leading to pair and group-based synchronisation. Drawing inspiration from industrial settings, we designed a fast-paced, short-cycle repetitive task. Using motion tracking, we investigated entrainment in both dyadic and triadic task completion. Furthermore, we utilise audio-video recordings and semi-structured interviews to contextualise participants' experiences. This paper contributes to the Human-Computer/Robot Interaction (HCI/HRI) literature using a human-centred approach to identify characteristics of entrainment during pair- and group-based collaboration. We present five characteristics related to successful entrainment. These are related to the occurrence of entrainment, leader-follower patterns, interpersonal communication, the importance of the point-of-assembly, and the value of acoustic feedback. Finally, we present three design considerations for future research and design on collaboration with robots.

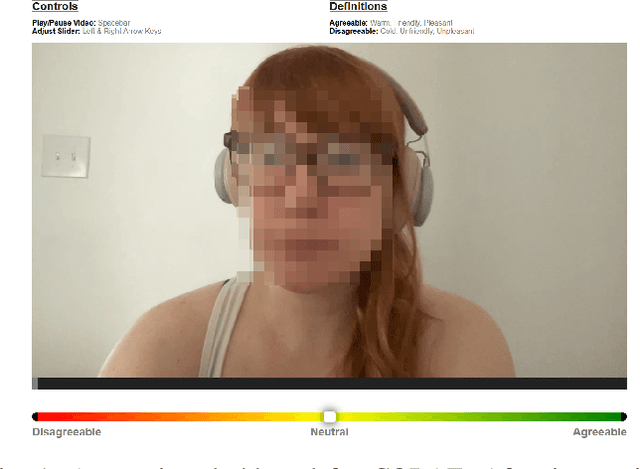

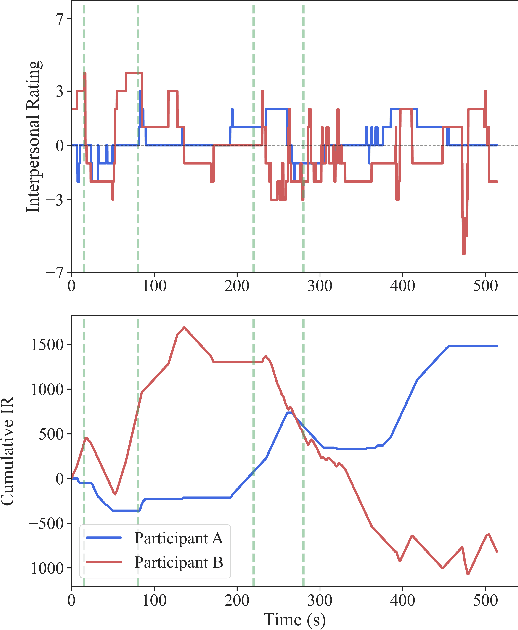

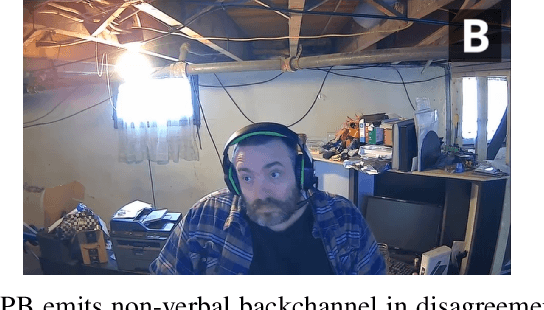

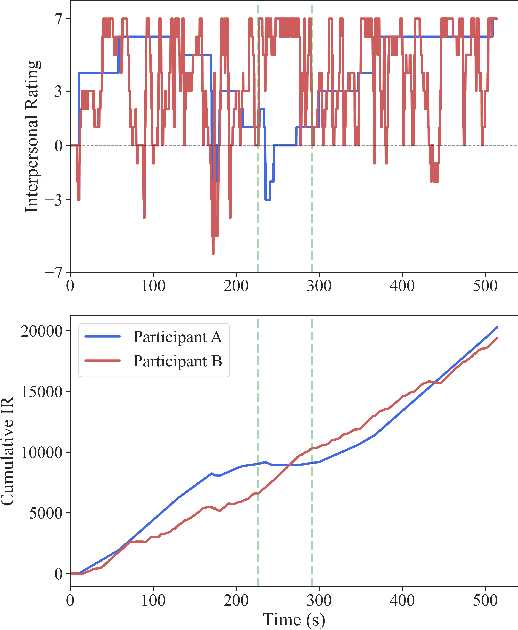

"How Did They Come Across?" Lessons Learned from Continuous Affective Ratings

Jul 07, 2023

Abstract:Social distance, or perception of the other, is recognized as a dynamic dimension of an interaction, but yet to be widely explored or understood. Through CORAE, a novel web-based open-source tool for COntinuous Retrospective Affect Evaluation, we collected retrospective ratings of interpersonal perceptions between 12 participant dyads. In this work, we explore how different aspects of these interactions reflect on the ratings collected, through a discourse analysis of individual and social behavior of the interactants. We found that different events observed in the ratings can be mapped to complex interaction phenomena, shedding light on relevant interaction features that may play a role in interpersonal understanding and grounding. This paves the way for better, more seamless human-robot interactions, where affect is interpreted as highly dynamic and contingent on interaction history.

* arXiv admin note: substantial text overlap with arXiv:2306.16629

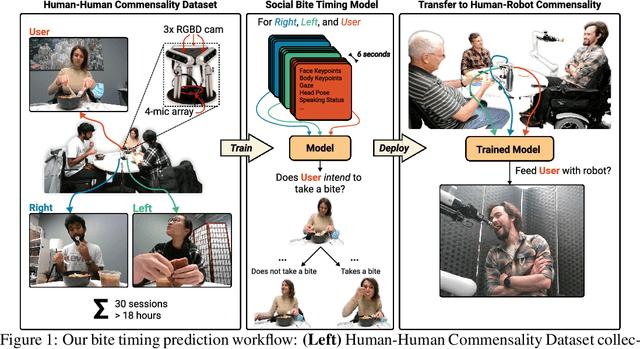

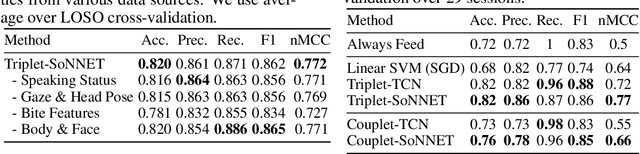

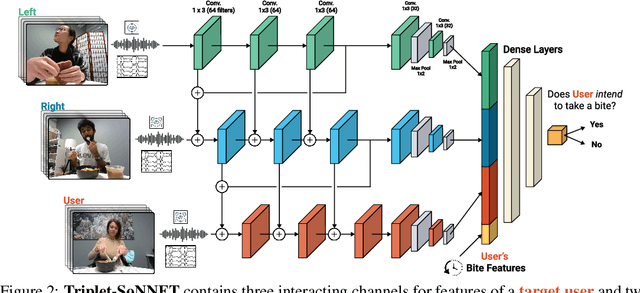

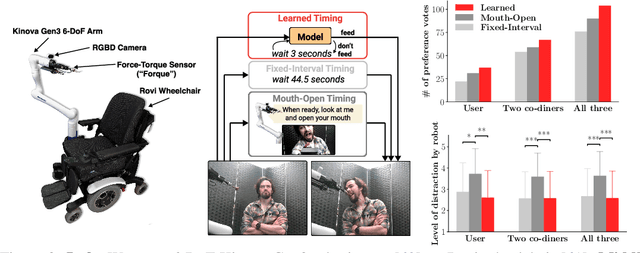

Human-Robot Commensality: Bite Timing Prediction for Robot-Assisted Feeding in Groups

Jul 07, 2022

Abstract:We develop data-driven models to predict when a robot should feed during social dining scenarios. Being able to eat independently with friends and family is considered one of the most memorable and important activities for people with mobility limitations. Robots can potentially help with this activity but robot-assisted feeding is a multi-faceted problem with challenges in bite acquisition, bite timing, and bite transfer. Bite timing in particular becomes uniquely challenging in social dining scenarios due to the possibility of interrupting a social human-robot group interaction during commensality. Our key insight is that bite timing strategies that take into account the delicate balance of social cues can lead to seamless interactions during robot-assisted feeding in a social dining scenario. We approach this problem by collecting a multimodal Human-Human Commensality Dataset (HHCD) containing 30 groups of three people eating together. We use this dataset to analyze human-human commensality behaviors and develop bite timing prediction models in social dining scenarios. We also transfer these models to human-robot commensality scenarios. Our user studies show that prediction improves when our algorithm uses multimodal social signaling cues between diners to model bite timing. The HHCD dataset, videos of user studies, and code will be publicly released after acceptance.

Artificial intelligence in communication impacts language and social relationships

Feb 10, 2021

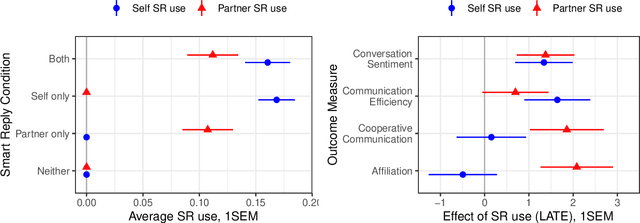

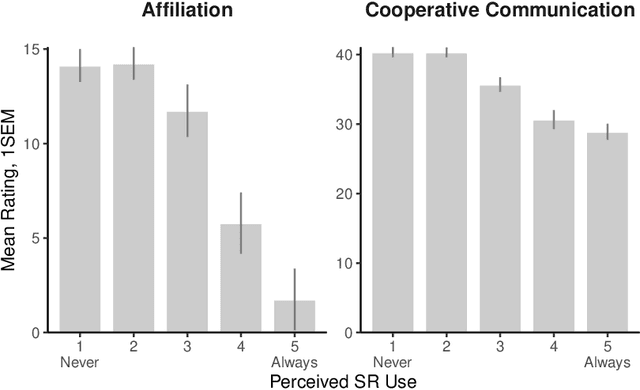

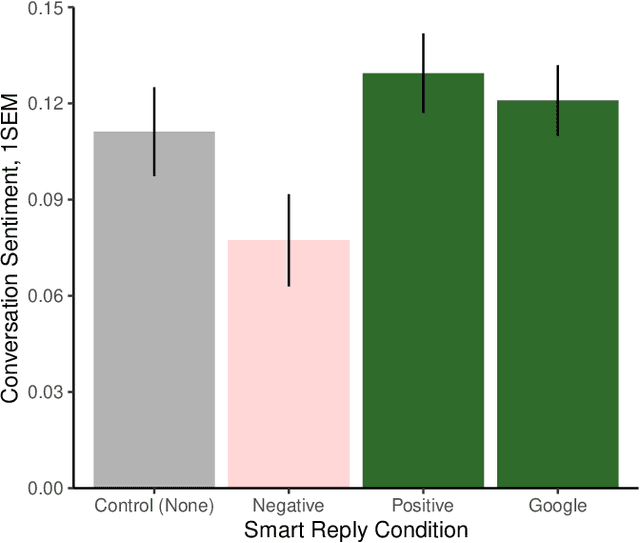

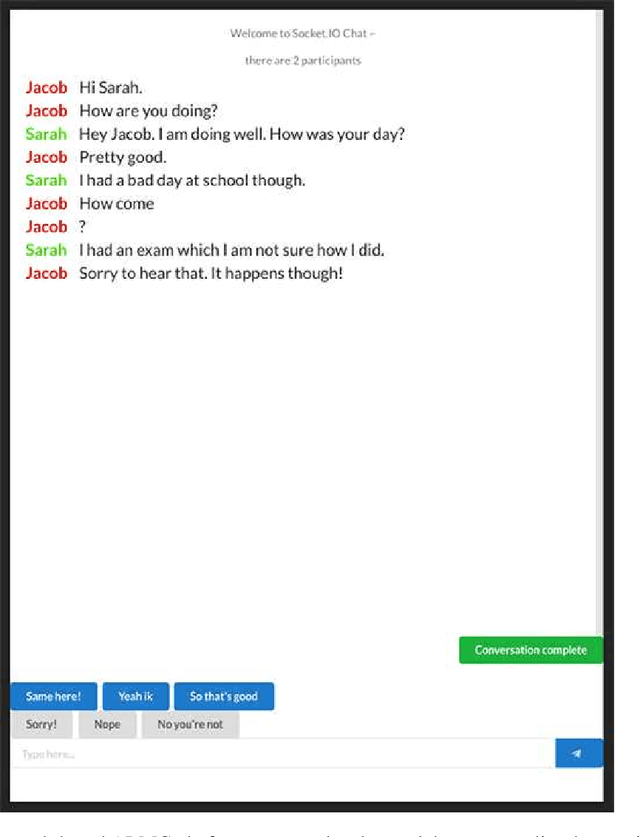

Abstract:Artificial intelligence (AI) is now widely used to facilitate social interaction, but its impact on social relationships and communication is not well understood. We study the social consequences of one of the most pervasive AI applications: algorithmic response suggestions ("smart replies"). Two randomized experiments (n = 1036) provide evidence that a commercially-deployed AI changes how people interact with and perceive one another in pro-social and anti-social ways. We find that using algorithmic responses increases communication efficiency, use of positive emotional language, and positive evaluations by communication partners. However, consistent with common assumptions about the negative implications of AI, people are evaluated more negatively if they are suspected to be using algorithmic responses. Thus, even though AI can increase communication efficiency and improve interpersonal perceptions, it risks changing users' language production and continues to be viewed negatively.

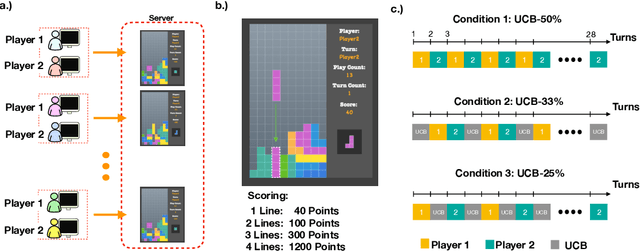

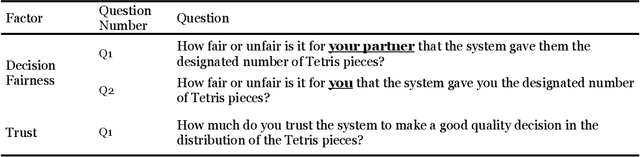

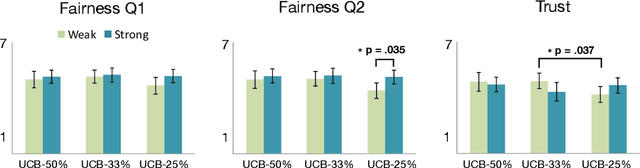

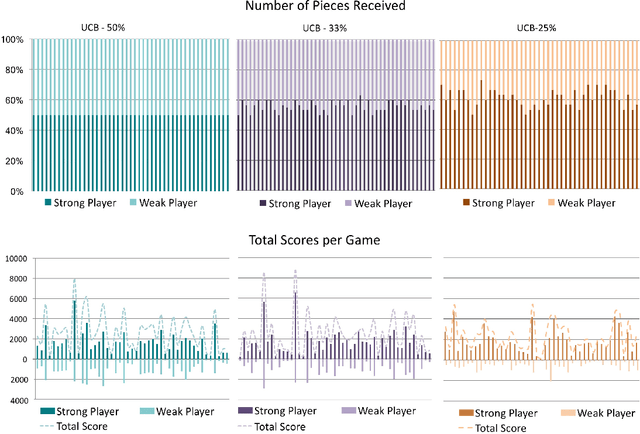

Reinforcement Learning with Fairness Constraints for Resource Distribution in Human-Robot Teams

Jul 08, 2019

Abstract:Much work in robotics and operations research has focused on optimal resource distribution, where an agent dynamically decides how to sequentially distribute resources among different candidates. However, most work ignores the notion of fairness in candidate selection. In the case where a robot distributes resources to human team members, disproportionately favoring the highest performing teammate can have negative effects in team dynamics and system acceptance. We introduce a multi-armed bandit algorithm with fairness constraints, where a robot distributes resources to human teammates of different skill levels. In this problem, the robot does not know the skill level of each human teammate, but learns it by observing their performance over time. We define fairness as a constraint on the minimum rate that each human teammate is selected throughout the task. We provide theoretical guarantees on performance and perform a large-scale user study, where we adjust the level of fairness in our algorithm. Results show that fairness in resource distribution has a significant effect on users' trust in the system.
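To make the idea of a minimum selection rate concrete, here is a minimal sketch of a bandit loop with such a constraint. It is not the paper's algorithm: it swaps in the standard UCB1 index for the unconstrained choice and uses a simple rule that serves whichever teammate is furthest below the required rate. The function name `fair_ucb`, the parameters `success_probs`, `min_rate`, and `horizon`, and the simulated Bernoulli rewards are all hypothetical and introduced only for illustration.

```python
import math
import random


def fair_ucb(success_probs, min_rate, horizon, seed=0):
    """Simulate UCB1 with a minimum selection rate per arm (illustrative only).

    success_probs: assumed Bernoulli success probability of each teammate,
                   unknown to the learner and used only to simulate rewards.
    min_rate:      required minimum fraction of rounds for every teammate.
    Returns the number of times each teammate was selected.
    """
    k = len(success_probs)
    if min_rate * k > 1:
        raise ValueError("min_rate * number of arms must not exceed 1")

    rng = random.Random(seed)
    counts = [0] * k   # selections per teammate
    sums = [0.0] * k   # total observed reward per teammate

    def ucb_index(i, t):
        # Unplayed arms get an infinite index so they are tried first.
        if counts[i] == 0:
            return math.inf
        return sums[i] / counts[i] + math.sqrt(2 * math.log(t) / counts[i])

    for t in range(1, horizon + 1):
        # How far each teammate is below the required selection rate.
        deficits = [min_rate * t - counts[i] for i in range(k)]
        neediest = max(range(k), key=lambda i: deficits[i])
        if deficits[neediest] > 0:
            arm = neediest  # fairness constraint takes priority
        else:
            arm = max(range(k), key=lambda i: ucb_index(i, t))

        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward

    return counts


if __name__ == "__main__":
    print(fair_ucb([0.9, 0.5, 0.2], min_rate=0.2, horizon=1000))
```

Setting `min_rate` to 0 reduces the sketch to plain UCB1, while raising it toward `1 / len(success_probs)` pushes the allocation toward round-robin, which mirrors the abstract's notion of adjusting the level of fairness.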
