Minja Axelsson

Critical Insights about Robots for Mental Wellbeing

Jun 16, 2025

Abstract:Social robots are increasingly being explored as tools to support emotional wellbeing, particularly in non-clinical settings. Drawing on a range of empirical studies and practical deployments, this paper outlines six key insights that highlight both the opportunities and challenges in using robots to promote mental wellbeing. These include (1) the lack of a single, objective measure of wellbeing, (2) the fact that robots don't need to act as companions to be effective, (3) the growing potential of virtual interactions, (4) the importance of involving clinicians in the design process, (5) the difference between one-off and long-term interactions, and (6) the idea that adaptation and personalization are not always necessary for positive outcomes. Rather than positioning robots as replacements for human therapists, we argue that they are best understood as supportive tools that must be designed with care, grounded in evidence, and shaped by ethical and psychological considerations. Our aim is to inform future research and guide responsible, effective use of robots in mental health and wellbeing contexts.

ERR@HRI 2024 Challenge: Multimodal Detection of Errors and Failures in Human-Robot Interactions

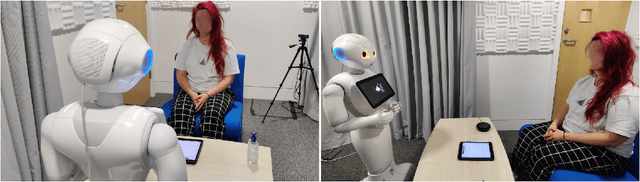

Jul 08, 2024

Abstract:Despite recent advancements in robotics and machine learning (ML), the deployment of autonomous robots in our everyday lives is still an open challenge. This is due to multiple reasons, among them robots' frequent mistakes, such as interrupting people or responding with delay, and their limited ability to understand human speech, i.e., failures in tasks like transcribing speech to text. These mistakes may disrupt interactions and negatively influence human perception of these robots. To address this problem, robots need to have the ability to detect human-robot interaction (HRI) failures. The ERR@HRI 2024 challenge tackles this by offering a benchmark multimodal dataset of robot failures during HRI, encouraging researchers to develop and benchmark multimodal machine learning models to detect these failures. We created a dataset featuring multimodal non-verbal interaction data, including facial, speech, and pose features from video clips of interactions with a robotic coach, annotated with labels indicating the presence or absence of robot mistakes, user awkwardness, and interaction ruptures, allowing for the training and evaluation of predictive models. Challenge participants have been invited to submit their multimodal ML models for detection of robot errors and to be evaluated against various performance metrics such as accuracy, precision, recall, and F1 score, with and without a margin of error reflecting the time-sensitivity of these metrics. The results of this challenge will help the research field better understand robot failures in human-robot interactions and design autonomous robots that can mitigate their own errors after successfully detecting them.

Past, Present, and Future: A Survey of The Evolution of Affective Robotics For Well-being

Jul 03, 2024

Abstract:Recent research in affective robots has recognized their potential in supporting human well-being. Due to rapidly developing affective and artificial intelligence technologies, this field of research has undergone explosive expansion and advancement in recent years. In order to develop a deeper understanding of recent advancements, we present a systematic review of the past 10 years of research in affective robotics for well-being. In this review, we identify the domains of well-being that have been studied, the methods used to investigate affective robots for well-being, and how these have evolved over time. We also examine the evolution of the multifaceted research topic through three lenses: technical, design, and ethical. Finally, we discuss future opportunities for research based on the gaps we have identified in our review, proposing pathways to take affective robotics from the past and present to the future. The results of our review are of interest to human-robot interaction and affective computing researchers, as well as clinicians and well-being professionals who may wish to examine and incorporate affective robotics in their practices.

Appropriateness of LLM-equipped Robotic Well-being Coach Language in the Workplace: A Qualitative Evaluation

Jan 26, 2024

Abstract:Robotic coaches have recently been investigated to promote mental well-being in various contexts such as workplaces and homes. With the widespread use of Large Language Models (LLMs), HRI researchers are called to consider language appropriateness when using such generated language for robotic mental well-being coaches in the real world. Therefore, this paper presents the first work that investigated the language appropriateness of a robotic mental well-being coach in the workplace. To this end, we conducted an empirical study that involved 17 employees who interacted over 4 weeks with a robotic mental well-being coach equipped with LLM-based capabilities. After the study, we interviewed them individually and conducted a 1.5-hour focus group with 11 of them. The focus group consisted of: i) an ice-breaking activity, ii) evaluation of robotic coach language appropriateness in various scenarios, and iii) listing shoulds and shouldn'ts for designing appropriate robotic coach language for mental well-being. From our qualitative evaluation, we found that a language-appropriate robotic coach should (1) ask deep questions which explore feelings of the coachees, rather than superficial questions, (2) express and show emotional and empathic understanding of the context, and (3) not make any assumptions without clarifying with follow-up questions to avoid bias and stereotyping. These results can inform the design of language-appropriate robotic coaches to promote mental well-being in real-world contexts.

"Oh, Sorry, I Think I Interrupted You": Designing Repair Strategies for Robotic Longitudinal Well-being Coaching

Jan 08, 2024

Abstract:Robotic well-being coaches have been shown to successfully promote people's mental well-being. To provide successful coaching, a robotic coach should have the capability to repair the mistakes it makes. Past investigations of robot mistakes are limited to game or task-based, one-off and in-lab studies. This paper presents a 4-phase design process to design repair strategies for robotic longitudinal well-being coaching with the involvement of real-world stakeholders: 1) designing repair strategies with a professional well-being coach; 2) a longitudinal study with the involvement of experienced users (i.e., who had already interacted with a robotic coach) to investigate the repair strategies defined in (1); 3) a design workshop with users from the study in (2) to gather their perspectives on the robotic coach's repair strategies; 4) discussing the results obtained in (2) and (3) with the mental well-being professional to reflect on how to design repair strategies for robotic coaching. Our results show that users have different expectations for a robotic coach than for a human coach, which influences how repair strategies should be designed. We show that different repair strategies (e.g., apologizing, explaining, or repairing empathically) are appropriate in different scenarios, and that preferences for repair strategies change during longitudinal interactions with the robotic coach.

VITA: A Multi-modal LLM-based System for Longitudinal, Autonomous, and Adaptive Robotic Mental Well-being Coaching

Dec 15, 2023

Abstract:Recently, several works have explored if and how robotic coaches can promote and maintain mental well-being in different settings. However, findings from these studies revealed that these robotic coaches are not ready to be used and deployed in real-world settings due to several limitations that span from technological challenges to coaching success. To overcome these challenges, this paper presents VITA, a novel multi-modal LLM-based system that allows robotic coaches to autonomously adapt to the coachee's multi-modal behaviours (facial valence and speech duration) and deliver coaching exercises in order to promote mental well-being in adults. We identified five objectives that correspond to the challenges in the recent literature, and we show how the VITA system addresses these via experimental validations that include one in-lab pilot study (N=4) that enabled us to test different robotic coach configurations (pre-scripted, generic, and adaptive models) and inform its design for using it in the real world, and one real-world study (N=17) conducted in a workplace over 4 weeks. Our results show that: (i) coachees perceived the VITA adaptive and generic configurations more positively than the pre-scripted one, and they felt understood and heard by the adaptive robotic coach, (ii) the VITA adaptive robotic coach kept learning successfully by personalising to each coachee over time and did not detect any interaction ruptures during the coaching, (iii) coachees had significant mental well-being improvements via the VITA-based robotic coach practice. The code for the VITA system is openly available via: https://github.com/Cambridge-AFAR/VITA-system.

Participant Perceptions of a Robotic Coach Conducting Positive Psychology Exercises: A Systematic Analysis

Sep 08, 2022

Abstract:This paper provides a detailed overview of a case study of applying Continual Learning (CL) to a single Human-Robot Interaction (HRI) session (avg. 31 ± 10 minutes), where a robotic mental well-being coach conducted Positive Psychology (PP) exercises with n = 20 participants. We present the results of a Thematic Analysis (TA) of data recorded from brief semi-structured interviews that were conducted with participants after the interaction sessions, as well as an analysis of statistical results demonstrating how participants' personalities may affect how they perceive the robot and its interactions.

Robots as Mental Well-being Coaches: Design and Ethical Recommendations

Aug 31, 2022

Abstract:The last decade has shown a growing interest in robots as well-being coaches. However, cohesive and comprehensive guidelines for the design of robots as coaches to promote mental well-being have not yet been proposed. This paper details design and ethical recommendations based on a qualitative meta-analysis drawing on a grounded theory approach, which was conducted with three distinct user-centered design studies involving robotic well-being coaches, namely: (1) a participatory design study conducted with 11 participants consisting of both prospective users who had participated in a Brief Solution-Focused Practice study with a human coach, as well as coaches of different disciplines, (2) semi-structured individual interview data gathered from 20 participants attending a Positive Psychology intervention study with the robotic well-being coach Pepper, and (3) a participatory design study conducted with 3 participants of the Positive Psychology study as well as 2 relevant well-being coaches. After conducting a thematic analysis and a qualitative meta-analysis, we collated the data gathered into convergent and divergent themes, and we distilled from those results a set of design guidelines and ethical considerations. Our findings can inform researchers and roboticists on the key aspects to take into account when designing robotic mental well-being coaches.

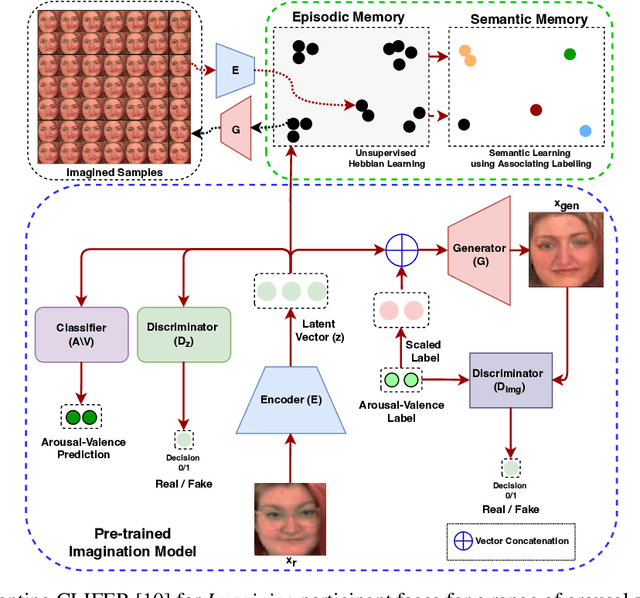

Continual Learning for Affective Robotics: A Proof of Concept for Wellbeing

Jun 22, 2022

Abstract:Sustaining real-world human-robot interactions requires robots to be sensitive to human behavioural idiosyncrasies and adapt their perception and behaviour models to cater to these individual preferences. For affective robots, this entails learning to adapt to individual affective behaviour to offer a personalised interaction experience to each individual. Continual Learning (CL) has been shown to enable real-time adaptation in agents, allowing them to learn with incrementally acquired data while preserving past knowledge. In this work, we present a novel framework for real-world application of CL for modelling personalised human-robot interactions using a CL-based affect perception mechanism. To evaluate the proposed framework, we undertake a proof-of-concept user study with 20 participants interacting with the Pepper robot using three variants of interaction behaviour: static and scripted, using affect-based adaptation without personalisation, and using affect-based adaptation with continual personalisation. Our results demonstrate a clear preference among the participants for CL-based continual personalisation, with significant improvements observed in the robot's anthropomorphism, animacy, and likeability ratings. The interactions were also rated significantly higher for warmth and comfort, as the robot was rated as significantly better at understanding how the participants feel.
