Wendy Ju

Towards Considerate Embodied AI: Co-Designing Situated Multi-Site Healthcare Robots from Abstract Concepts to High-Fidelity Prototypes

Feb 03, 2026

Abstract: Co-design is essential for grounding embodied artificial intelligence (AI) systems in real-world contexts, especially high-stakes domains such as healthcare. While prior work has explored multidisciplinary collaboration, iterative prototyping, and support for non-technical participants, few have interwoven these into a sustained co-design process. Such efforts often target one context and low-fidelity stages, limiting the generalizability of findings and obscuring how participants' ideas evolve. To address these limitations, we conducted a 14-week workshop with a multidisciplinary team of 22 participants, centered around how embodied AI can reduce non-value-added task burdens in three healthcare settings: emergency departments, long-term rehabilitation facilities, and sleep disorder clinics. We found that the iterative progression from abstract brainstorming to high-fidelity prototypes, supported by educational scaffolds, enabled participants to understand real-world trade-offs and generate more deployable solutions. We propose eight guidelines for co-designing more considerate embodied AI: attuned to context, responsive to social dynamics, mindful of expectations, and grounded in deployment. Project Page: https://byc-sophie.github.io/Towards-Considerate-Embodied-AI/

Calling for Backup: How Children Navigate Successive Robot Communication Failures

Jan 02, 2026

Abstract: How do children respond to repeated robot errors? While prior research has examined adult reactions to successive robot errors, children's responses remain largely unexplored. In this study, we explore children's reactions to robot social errors and performance errors. For the latter, this study reproduces the successive robot failure paradigm of Liu et al. with child participants (N=59, ages 8-10) to examine how young users respond to repeated robot conversational errors. Participants interacted with a robot that failed to understand their prompts three times in succession, with their behavioral responses video-recorded and analyzed. We found both similarities and differences compared to adult responses from the original study. Like adults, children adjusted their prompts, modified their verbal tone, and exhibited increasingly emotional non-verbal responses throughout successive errors. However, children demonstrated more disengagement behaviors, including temporarily ignoring the robot or actively seeking an adult. Errors did not affect participants' perception of the robot, suggesting more flexible conversational expectations in children. These findings inform the design of more effective and developmentally appropriate human-robot interaction systems for young users.

Why We Need a New Framework for Emotional Intelligence in AI

Dec 29, 2025

Abstract: In this paper, we develop the position that current frameworks for evaluating emotional intelligence (EI) in artificial intelligence (AI) systems need refinement because they do not adequately or comprehensively measure the various aspects of EI relevant in AI. Human EI often involves a phenomenological component and a sense of understanding that artificially intelligent systems lack; therefore, some aspects of EI are irrelevant in evaluating AI systems. However, EI also includes an ability to sense an emotional state, explain it, respond appropriately, and adapt to new contexts (e.g., multicultural), and artificially intelligent systems can do such things to greater or lesser degrees. Several benchmark frameworks specialize in evaluating the capacity of different AI models to perform some tasks related to EI, but these often lack a solid foundation regarding the nature of emotion and what it is to be emotionally intelligent. In this project, we begin by reviewing different theories about emotion and general EI, evaluating the extent to which each is applicable to artificial systems. We then critically evaluate the available benchmark frameworks, identifying where each falls short in light of the account of EI developed in the first section. Lastly, we outline some options for improving evaluation strategies to avoid these shortcomings in EI evaluation in AI systems.

Characterizing Human Feedback-Based Control in Naturalistic Driving Interactions via Gaussian Process Regression with Linear Feedback

Dec 09, 2025

Abstract: Understanding driver interactions is critical to designing autonomous vehicles to interoperate safely with human-driven cars. We consider the impact of these interactions on the policies drivers employ when navigating unsigned intersections in a driving simulator. The simulator allows the collection of naturalistic decision-making and behavior data in a controlled environment. Using these data, we model the human driver responses as state-based feedback controllers learned via Gaussian Process regression methods. We compute the feedback gain of the controller using a weighted combination of linear and nonlinear priors. We then analyze how the individual gains are reflected in driver behavior. We also assess differences in these controllers across populations of drivers. Our work in data-driven analyses of how drivers determine their policies can facilitate future work in the design of socially responsive autonomy for vehicles.

ERR@HRI 2.0 Challenge: Multimodal Detection of Errors and Failures in Human-Robot Conversations

Jul 17, 2025

Abstract: The integration of large language models (LLMs) into conversational robots has made human-robot conversations more dynamic. Yet, LLM-powered conversational robots remain prone to errors, e.g., misunderstanding user intent, prematurely interrupting users, or failing to respond altogether. Detecting and addressing these failures is critical for preventing conversational breakdowns, avoiding task disruptions, and sustaining user trust. To tackle this problem, the ERR@HRI 2.0 Challenge provides a multimodal dataset of LLM-powered conversational robot failures during human-robot conversations and encourages researchers to benchmark machine learning models designed to detect robot failures. The dataset includes 16 hours of dyadic human-robot interactions, incorporating facial, speech, and head movement features. Each interaction is annotated with the presence or absence of robot errors from the system perspective, and perceived user intention to correct for a mismatch between robot behavior and user expectation. Participants are invited to form teams and develop machine learning models that detect these failures using multimodal data. Submissions will be evaluated using various performance metrics, including detection accuracy and false positive rate. This challenge represents another key step toward improving failure detection in human-robot interaction through social signal analysis.
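
The abstract above names detection accuracy and false positive rate among the evaluation metrics. The following is a minimal sketch of how those two quantities are conventionally computed for binary labels. It is not the challenge's official scoring code; the function name `detection_metrics` and the encoding (1 = robot error present, 0 = no error) are assumptions made for illustration.

```python
from typing import Dict, Sequence


def detection_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy and false positive rate for binary error detection.

    Assumed encoding (hypothetical, not taken from the challenge):
    1 = robot error present, 0 = no error.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # Count the four confusion-matrix cells.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    total = tp + tn + fp + fn
    negatives = fp + tn

    # Accuracy: fraction of all samples labelled correctly.
    accuracy = (tp + tn) / total if total else 0.0
    # False positive rate: fraction of true non-errors flagged as errors.
    false_positive_rate = fp / negatives if negatives else 0.0

    return {"accuracy": accuracy, "false_positive_rate": false_positive_rate}


if __name__ == "__main__":
    # Toy labels, invented purely for demonstration.
    truth = [1, 0, 0, 1, 0, 0]
    preds = [1, 1, 0, 0, 0, 0]
    print(detection_metrics(truth, preds))
```

Reporting the false positive rate alongside accuracy matters when errors are rare: a detector that never flags an error can still score high accuracy, whereas the two metrics together show how often correct robot behavior is wrongly flagged.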

A Constructed Response: Designing and Choreographing Robot Arm Movements in Collaborative Dance Improvisation

May 29, 2025

Abstract: Dancers often prototype movements themselves or with each other during improvisation and choreography. How are these interactions altered when physically manipulable technologies are introduced into the creative process? To understand how dancers design and improvise movements while working with instruments capable of non-humanoid movements, we engaged dancers in workshops to co-create movements with a robot arm in one-human-to-one-robot and three-human-to-one-robot settings. We found that dancers produced more fluid movements in one-to-one scenarios, experiencing a stronger sense of connection and presence with the robot as a co-dancer. In three-to-one scenarios, the dancers divided their attention between the human dancers and the robot, which resulted in increased perceived use of space and more stop-and-go movements, and they perceived the robot as part of the stage background. This work highlights how technologies can drive creativity in movement artists adapting to new ways of working with physical instruments, contributing design insights supporting artistic collaborations with non-humanoid agents.

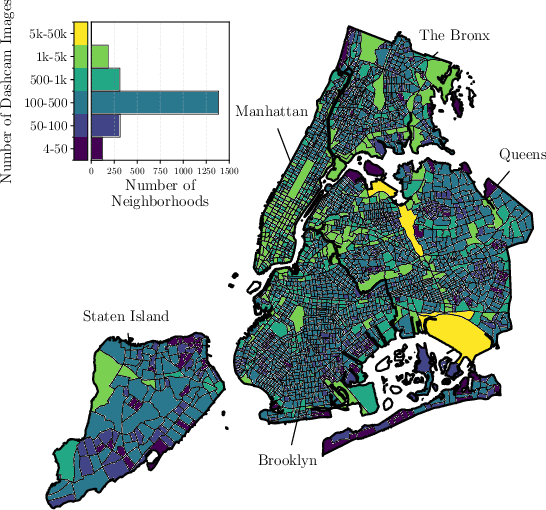

Privacy of Groups in Dense Street Imagery

May 11, 2025

Abstract: Spatially and temporally dense street imagery (DSI) datasets have grown unbounded. In 2024, individual companies possessed around 3 trillion unique images of public streets. DSI data streams are only set to grow as companies like Lyft and Waymo use DSI to train autonomous vehicle algorithms and analyze collisions. Academic researchers leverage DSI to explore novel approaches to urban analysis. Despite good-faith efforts by DSI providers to protect individual privacy through blurring faces and license plates, these measures fail to address broader privacy concerns. In this work, we find that increased data density and advancements in artificial intelligence enable harmful group membership inferences from supposedly anonymized data. We perform a penetration test to demonstrate how easily sensitive group affiliations can be inferred from obfuscated pedestrians in 25,232,608 dashcam images taken in New York City. We develop a typology of identifiable groups within DSI and analyze privacy implications through the lens of contextual integrity. Finally, we discuss actionable recommendations for researchers working with data from DSI providers.

The Robotability Score: Enabling Harmonious Robot Navigation on Urban Streets

Apr 15, 2025

Abstract: This paper introduces the Robotability Score ($R$), a novel metric that quantifies the suitability of urban environments for autonomous robot navigation. Through expert interviews and surveys, we identify and weigh key features contributing to $R$ for wheeled robots on urban streets. Our findings reveal that pedestrian density, crowd dynamics and pedestrian flow are the most critical factors, collectively accounting for 28% of the total score. Computing robotability across New York City yields significant variation; the area of highest $R$ is 3.0 times more "robotable" than the area of lowest $R$. Deployments of a physical robot on high and low robotability areas show the adequacy of the score in anticipating the ease of robot navigation. This new framework for evaluating urban landscapes aims to reduce uncertainty in robot deployment while respecting established mobility patterns and urban planning principles, contributing to the discourse on harmonious human-robot environments.

Making Sense of Robots in Public Spaces: A Study of Trash Barrel Robots

Apr 01, 2025

Abstract: In this work, we analyze video data and interviews from a public deployment of two trash barrel robots in a large public space to better understand the sensemaking activities people perform when they encounter robots in public spaces. Based on an analysis of 274 human-robot interactions and interviews with N=65 individuals or groups, we discovered that people were responding not only to the robots or their behavior, but also to the general idea of deploying robots as trashcans, and the larger social implications of that idea. They wanted to understand details about the deployment because having that knowledge would change how they interact with the robot. Based on our data and analysis, we have provided implications for design that may be topics for future human-robot design researchers who are exploring robots for public space deployment. Furthermore, our work offers a practical example of analyzing field data to make sense of robots in public spaces.

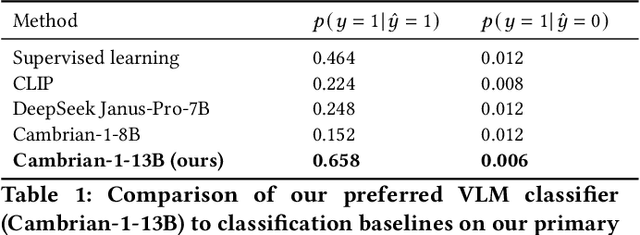

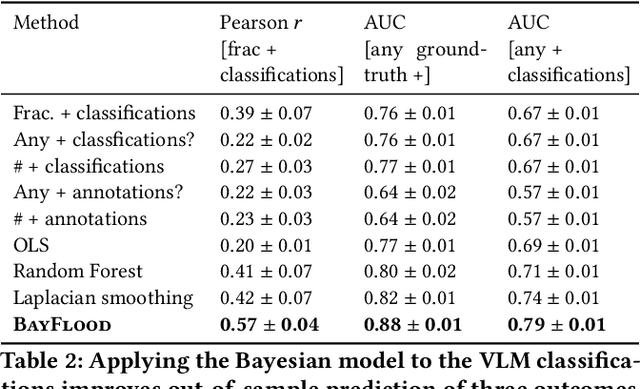

Bayesian Modeling of Zero-Shot Classifications for Urban Flood Detection

Mar 18, 2025

Abstract: Street scene datasets, collected from Street View or dashboard cameras, offer a promising means of detecting urban objects and incidents like street flooding. However, a major challenge in using these datasets is their lack of reliable labels: there are myriad types of incidents, many types occur rarely, and ground-truth measures of where incidents occur are lacking. Here, we propose BayFlood, a two-stage approach which circumvents this difficulty. First, we perform zero-shot classification of where incidents occur using a pretrained vision-language model (VLM). Second, we fit a spatial Bayesian model on the VLM classifications. The zero-shot approach avoids the need to annotate large training sets, and the Bayesian model provides frequent desiderata in urban settings - principled measures of uncertainty, smoothing across locations, and incorporation of external data like stormwater accumulation zones. We comprehensively validate this two-stage approach, showing that VLMs provide strong zero-shot signal for floods across multiple cities and time periods, the Bayesian model improves out-of-sample prediction relative to baseline methods, and our inferred flood risk correlates with known external predictors of risk. Having validated our approach, we show it can be used to improve urban flood detection: our analysis reveals 113,738 people who are at high risk of flooding overlooked by current methods, identifies demographic biases in existing methods, and suggests locations for new flood sensors. More broadly, our results showcase how Bayesian modeling of zero-shot LM annotations represents a promising paradigm because it avoids the need to collect large labeled datasets and leverages the power of foundation models while providing the expressiveness and uncertainty quantification of Bayesian models.
