Alexandra Bremers

Field Notes on Deploying Research Robots in Public Spaces

Apr 29, 2024

Abstract:Human-robot interaction requires to be studied in the wild. In the summers of 2022 and 2023, we deployed two trash barrel service robots through the wizard-of-oz protocol in public spaces to study human-robot interactions in urban settings. We deployed the robots at two different public plazas in downtown Manhattan and Brooklyn for a collective of 20 hours of field time. To date, relatively few long-term human-robot interaction studies have been conducted in shared public spaces. To support researchers aiming to fill this gap, we would like to share some of our insights and learned lessons that would benefit both researchers and practitioners on how to deploy robots in public spaces. We share best practices and lessons learned with the HRI research community to encourage more in-the-wild research of robots in public spaces and call for the community to share their lessons learned to a GitHub repository.

A utility belt for an agricultural robot: reflection-in-action for applied design research

Dec 22, 2023

Abstract:Clothing for robots can help expand a robot's functionality and also clarify the robot's purpose to bystanders. In studying how to design clothing for robots, we can shed light on the functional role of aesthetics in interactive system design. We present a case study of designing a utility belt for an agricultural robot. We use reflection-in-action to consider the ways that observation, in situ making, and documentation serve to illuminate how pragmatic, aesthetic, and intellectual inquiry are layered in this applied design research project. Themes explored in this pictorial include 1) contextual discovery of materials, tools, and practices, 2) design space exploration of materials in context, 3) improvising spaces for making, and 4) social processes in design. These themes emerged from the qualitative coding of 25 reflection-in-action videos from the researcher. We conclude with feedback on the utility belt prototypes for an agriculture robot and our learnings about context, materials, and people needed to design successful novel clothing forms for robots.

Implicit collaboration with a drawing machine through dance movements

Sep 30, 2023

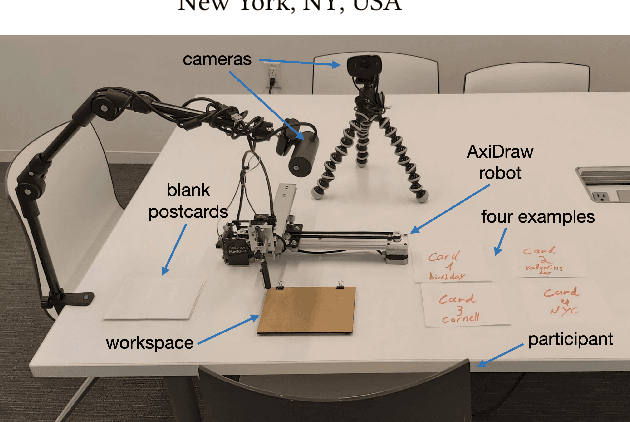

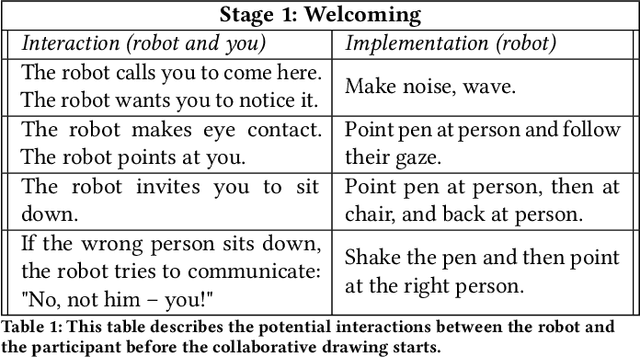

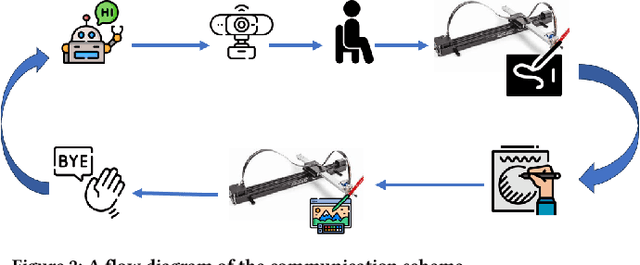

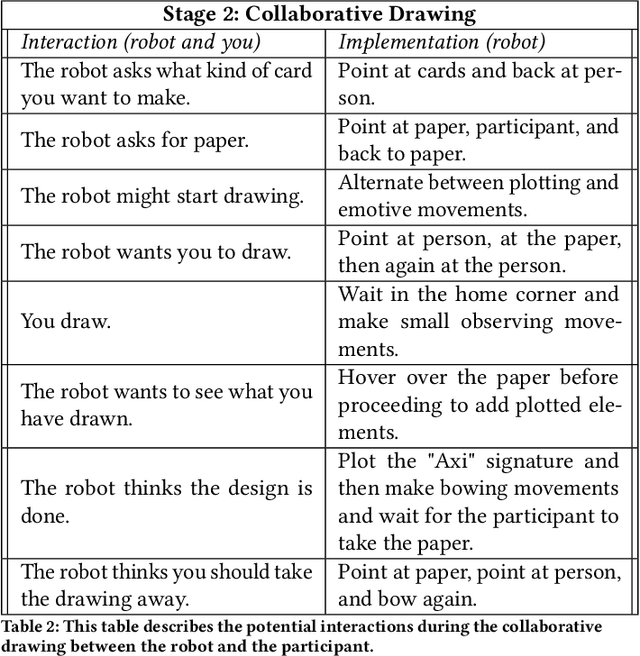

Abstract:In this demonstration, we exhibit the initial results of an ongoing body of exploratory work, investigating the potential for creative machines to communicate and collaborate with people through movement as a form of implicit interaction. The paper describes a Wizard-of-Oz demo, where a hidden wizard controls an AxiDraw drawing robot while a participant collaborates with it to draw a custom postcard. This demonstration aims to gather perspectives from the computational fabrication community regarding how practitioners of fabrication with machines experience interacting with a mixed-initiative collaborative machine.

Driver Profiling and Bayesian Workload Estimation Using Naturalistic Peripheral Detection Study Data

Mar 26, 2023

Abstract:Monitoring drivers' mental workload facilitates initiating and maintaining safe interactions with in-vehicle information systems, and thus delivers adaptive human machine interaction with reduced impact on the primary task of driving. In this paper, we tackle the problem of workload estimation from driving performance data. First, we present a novel on-road study for collecting subjective workload data via a modified peripheral detection task in naturalistic settings. Key environmental factors that induce a high mental workload are identified via video analysis, e.g. junctions and behaviour of vehicle in front. Second, a supervised learning framework using state-of-the-art time series classifiers (e.g. convolutional neural network and transform techniques) is introduced to profile drivers based on the average workload they experience during a journey. A Bayesian filtering approach is then proposed for sequentially estimating, in (near) real-time, the driver's instantaneous workload. This computationally efficient and flexible method can be easily personalised to a driver (e.g. incorporate their inferred average workload profile), adapted to driving/environmental contexts (e.g. road type) and extended with data streams from new sources. The efficacy of the presented profiling and instantaneous workload estimation approaches are demonstrated using the on-road study data, showing $F_{1}$ scores of up to 92% and 81%, respectively.

The Bystander Affect Detection (BAD) Dataset for Failure Detection in HRI

Mar 08, 2023

Abstract:For a robot to repair its own error, it must first know it has made a mistake. One way that people detect errors is from the implicit reactions from bystanders -- their confusion, smirks, or giggles clue us in that something unexpected occurred. To enable robots to detect and act on bystander responses to task failures, we developed a novel method to elicit bystander responses to human and robot errors. Using 46 different stimulus videos featuring a variety of human and machine task failures, we collected a total of 2452 webcam videos of human reactions from 54 participants. To test the viability of the collected data, we used the bystander reaction dataset as input to a deep-learning model, BADNet, to predict failure occurrence. We tested different data labeling methods and learned how they affect model performance, achieving precisions above 90%. We discuss strategies to model bystander reactions and predict failure and how this approach can be used in real-world robotic deployments to detect errors and improve robot performance. As part of this work, we also contribute with the "Bystander Affect Detection" (BAD) dataset of bystander reactions, supporting the development of better prediction models.

Using Social Cues to Recognize Task Failures for HRI: A Review of Current Research and Future Directions

Jan 27, 2023

Abstract:Robots that carry out tasks and interact in complex environments will inevitably commit errors. Error detection is thus an important ability for robots to master, to work in an efficient and productive way. People leverage social cues from others around them to recognize and repair their own mistakes. With advances in computing and AI, it is increasingly possible for robots to achieve a similar error detection capability. In this work, we review current literature around the topic of how social cues can be used to recognize task failures for human-robot interaction (HRI). This literature review unites insights from behavioral science, human-robot interaction, and machine learning, to focus on three areas: 1) social cues for error detection (from behavioral science), 2) recognizing task failures in robots (from HRI), and 3) approaches for autonomous detection of HRI task failures based on social cues (from machine learning). We propose a taxonomy of error detection based on self-awareness and social feedback. Finally, we leave recommendations for HRI researchers and practitioners interested in developing robots that detect (physical) task errors using social cues from bystanders.
