Tapomayukh Bhattacharjee

A Human-in-the-Loop Confidence-Aware Failure Recovery Framework for Modular Robot Policies

Feb 10, 2026

Abstract:Robots operating in unstructured human environments inevitably encounter failures, especially in robot caregiving scenarios. While humans can often help robots recover, excessive or poorly targeted queries impose unnecessary cognitive and physical workload on the human partner. We present a human-in-the-loop failure-recovery framework for modular robotic policies, where a policy is composed of distinct modules such as perception, planning, and control, any of which may fail and often require different forms of human feedback. Our framework integrates calibrated estimates of module-level uncertainty with models of human intervention cost to decide which module to query and when to query the human. It separates these two decisions: a module selector identifies the module most likely responsible for failure, and a querying algorithm determines whether to solicit human input or act autonomously. We evaluate several module-selection strategies and querying algorithms in controlled synthetic experiments, revealing trade-offs between recovery efficiency, robustness to system and user variables, and user workload. Finally, we deploy the framework on a robot-assisted bite acquisition system and demonstrate, in studies involving individuals with both emulated and real mobility limitations, that it improves recovery success while reducing the workload imposed on users. Our results highlight how explicitly reasoning about both robot uncertainty and human effort can enable more efficient and user-centered failure recovery in collaborative robots. Supplementary materials and videos can be found at: http://emprise.cs.cornell.edu/modularhil

OpenRoboCare: A Multimodal Multi-Task Expert Demonstration Dataset for Robot Caregiving

Nov 17, 2025

Abstract:We present OpenRoboCare, a multimodal dataset for robot caregiving, capturing expert occupational therapist demonstrations of Activities of Daily Living (ADLs). Caregiving tasks involve complex physical human-robot interactions, requiring precise perception under occlusions, safe physical contact, and long-horizon planning. While recent advances in robot learning from demonstrations have shown promise, there is a lack of a large-scale, diverse, and expert-driven dataset that captures real-world caregiving routines. To address this gap, we collect data from 21 occupational therapists performing 15 ADL tasks on two manikins. The dataset spans five modalities: RGB-D video, pose tracking, eye-gaze tracking, task and action annotations, and tactile sensing, providing rich multimodal insights into caregiver movement, attention, force application, and task execution strategies. We further analyze expert caregiving principles and strategies, offering insights to improve robot efficiency and task feasibility. Additionally, our evaluations demonstrate that OpenRoboCare presents challenges for state-of-the-art robot perception and human activity recognition methods, both critical for developing safe and adaptive assistive robots, highlighting the value of our contribution. See our website for additional visualizations: https://emprise.cs.cornell.edu/robo-care/.

FEAST: A Flexible Mealtime-Assistance System Towards In-the-Wild Personalization

Jun 17, 2025

Abstract:Physical caregiving robots hold promise for improving the quality of life of millions worldwide who require assistance with feeding. However, in-home meal assistance remains challenging due to the diversity of activities (e.g., eating, drinking, mouth wiping), contexts (e.g., socializing, watching TV), food items, and user preferences that arise during deployment. In this work, we propose FEAST, a flexible mealtime-assistance system that can be personalized in-the-wild to meet the unique needs of individual care recipients. Developed in collaboration with two community researchers and informed by a formative study with a diverse group of care recipients, our system is guided by three key tenets for in-the-wild personalization: adaptability, transparency, and safety. FEAST embodies these principles through: (i) modular hardware that enables switching between assisted feeding, drinking, and mouth-wiping, (ii) diverse interaction methods, including a web interface, head gestures, and physical buttons, to accommodate diverse functional abilities and preferences, and (iii) parameterized behavior trees that can be safely and transparently adapted using a large language model. We evaluate our system based on the personalization requirements identified in our formative study, demonstrating that FEAST offers a wide range of transparent and safe adaptations and outperforms a state-of-the-art baseline limited to fixed customizations. To demonstrate real-world applicability, we conduct an in-home user study with two care recipients (who are community researchers), feeding them three meals each across three diverse scenarios. We further assess FEAST's ecological validity by evaluating with an Occupational Therapist previously unfamiliar with the system. In all cases, users successfully personalize FEAST to meet their individual needs and preferences. Website: https://emprise.cs.cornell.edu/feast

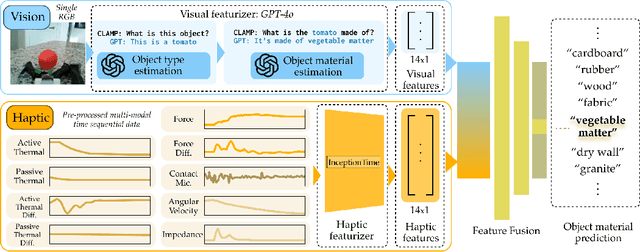

CLAMP: Crowdsourcing a LArge-scale in-the-wild haptic dataset with an open-source device for Multimodal robot Perception

May 27, 2025

Abstract:Robust robot manipulation in unstructured environments often requires understanding object properties that extend beyond geometry, such as material or compliance, properties that can be challenging to infer using vision alone. Multimodal haptic sensing provides a promising avenue for inferring such properties, yet progress has been constrained by the lack of large, diverse, and realistic haptic datasets. In this work, we introduce the CLAMP device, a low-cost (<$200) sensorized reacher-grabber designed to collect large-scale, in-the-wild multimodal haptic data from non-expert users in everyday settings. We deployed 16 CLAMP devices to 41 participants, resulting in the CLAMP dataset, the largest open-source multimodal haptic dataset to date, comprising 12.3 million datapoints across 5357 household objects. Using this dataset, we train a haptic encoder that can infer material and compliance object properties from multimodal haptic data. We leverage this encoder to create the CLAMP model, a visuo-haptic perception model for material recognition that generalizes to novel objects and three robot embodiments with minimal finetuning. We also demonstrate the effectiveness of our model in three real-world robot manipulation tasks: sorting recyclable and non-recyclable waste, retrieving objects from a cluttered bag, and distinguishing overripe from ripe bananas. Our results show that large-scale, in-the-wild haptic data collection can unlock new capabilities for generalizable robot manipulation. Website: https://emprise.cs.cornell.edu/clamp/

Coloring Between the Lines: Personalization in the Null Space of Planning Constraints

May 21, 2025

Abstract:Generalist robots must personalize in-the-wild to meet the diverse needs and preferences of long-term users. How can we enable flexible personalization without sacrificing safety or competency? This paper proposes Coloring Between the Lines (CBTL), a method for personalization that exploits the null space of constraint satisfaction problems (CSPs) used in robot planning. CBTL begins with a CSP generator that ensures safe and competent behavior, then incrementally personalizes behavior by learning parameterized constraints from online interaction. By quantifying uncertainty and leveraging the compositionality of planning constraints, CBTL achieves sample-efficient adaptation without environment resets. We evaluate CBTL in (1) three diverse simulation environments; (2) a web-based user study; and (3) a real-robot assisted feeding system, finding that CBTL consistently achieves more effective personalization with fewer interactions than baselines. Our results demonstrate that CBTL provides a unified and practical approach for continual, flexible, active, and safe robot personalization. Website: https://emprise.cs.cornell.edu/cbtl/

GRACE: Generalizing Robot-Assisted Caregiving with User Functionality Embeddings

Jan 29, 2025

Abstract:Robot caregiving should be personalized to meet the diverse needs of care recipients -- assisting with tasks as needed, while taking user agency in action into account. In physical tasks such as handover, bathing, dressing, and rehabilitation, a key aspect of this diversity is the functional range of motion (fROM), which can vary significantly between individuals. In this work, we learn to predict personalized fROM as a way to generalize robot decision-making in a wide range of caregiving tasks. We propose a novel data-driven method for predicting personalized fROM using functional assessment scores from occupational therapy. We develop a neural model that learns to embed functional assessment scores into a latent representation of the user's physical function. The model is trained using motion capture data collected from users with emulated mobility limitations. After training, the model predicts personalized fROM for new users without motion capture. Through simulated experiments and a real-robot user study, we show that the personalized fROM predictions from our model enable the robot to provide personalized and effective assistance while improving the user's agency in action. See our website for more visualizations: https://emprise.cs.cornell.edu/grace/.

CART-MPC: Coordinating Assistive Devices for Robot-Assisted Transferring with Multi-Agent Model Predictive Control

Jan 19, 2025

Abstract:Bed-to-wheelchair transferring is a ubiquitous activity of daily living (ADL), but especially challenging for caregiving robots with limited payloads. We develop a novel algorithm that leverages the presence of other assistive devices: a Hoyer sling and a wheelchair for coarse manipulation of heavy loads, alongside a robot arm for fine-grained manipulation of deformable objects (Hoyer sling straps). We instrument the Hoyer sling and wheelchair with actuators and sensors so that they can become intelligent agents in the algorithm. We then focus on one subtask of the transferring ADL -- tying Hoyer sling straps to the sling bar -- that exemplifies the challenges of transfer: multi-agent planning, deformable object manipulation, and generalization to varying hook shapes, sling materials, and care recipient bodies. To address these challenges, we propose CART-MPC, a novel algorithm based on turn-taking multi-agent model predictive control that uses a learned neural dynamics model for a keypoint-based representation of the deformable Hoyer sling strap, and a novel cost function that leverages linking numbers from knot theory and neural amortization to accelerate inference. We validate it in both RCareWorld simulation and real-world environments. In simulation, CART-MPC successfully generalizes across diverse hook designs, sling materials, and care recipient body shapes. In the real world, we show zero-shot sim-to-real generalization capabilities to tie deformable Hoyer sling straps on a sling bar towards transferring a manikin from a hospital bed to a wheelchair. See our website for supplementary materials: https://emprise.cs.cornell.edu/cart-mpc/.

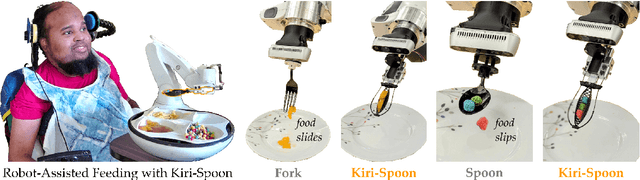

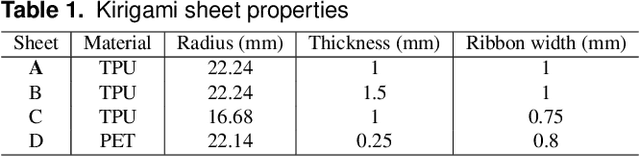

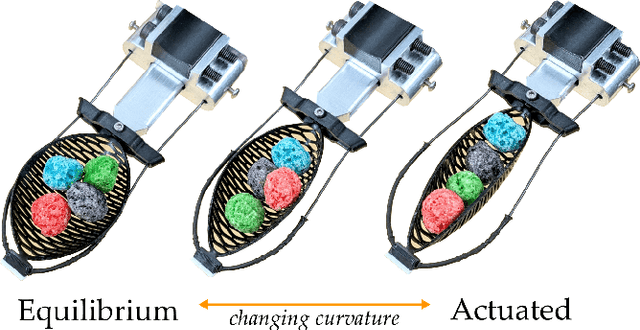

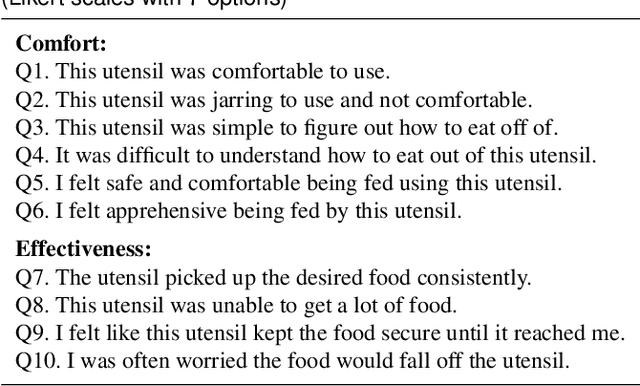

Kiri-Spoon: A Kirigami Utensil for Robot-Assisted Feeding

Jan 02, 2025

Abstract:For millions of adults with mobility limitations, eating meals is a daily challenge. A variety of robotic systems have been developed to address this societal need. Unfortunately, end-user adoption of robot-assisted feeding is limited, in part because existing devices are unable to seamlessly grasp, manipulate, and feed diverse foods. Recent works seek to address this issue by creating new algorithms for food acquisition and bite transfer. In parallel to these algorithmic developments, however, we hypothesize that mechanical intelligence will make it fundamentally easier for robot arms to feed humans. We therefore propose Kiri-Spoon, a soft utensil specifically designed for robot-assisted feeding. Kiri-Spoon consists of a spoon-shaped kirigami structure: when actuated, the kirigami sheet deforms into a bowl of increasing curvature. Robot arms equipped with Kiri-Spoon can leverage the kirigami structure to wrap-around morsels during acquisition, contain those items as the robot moves, and then compliantly release the food into the user's mouth. Overall, Kiri-Spoon combines the familiar and comfortable shape of a standard spoon with the increased capabilities of soft robotic grippers. In what follows, we first apply a stakeholder-driven design process to ensure that Kiri-Spoon meets the needs of caregivers and users with physical disabilities. We next characterize the dynamics of Kiri-Spoon, and derive a mechanics model to relate actuation force to the spoon's shape. The paper concludes with three separate experiments that evaluate (a) the mechanical advantage provided by Kiri-Spoon, (b) the ways users with disabilities perceive our system, and (c) how the mechanical intelligence of Kiri-Spoon complements state-of-the-art algorithms. Our results suggest that Kiri-Spoon advances robot-assisted feeding across diverse foods, multiple robotic platforms, and different manipulation algorithms.

REPeat: A Real2Sim2Real Approach for Pre-acquisition of Soft Food Items in Robot-assisted Feeding

Oct 13, 2024

Abstract:The paper presents REPeat, a Real2Sim2Real framework designed to enhance bite acquisition in robot-assisted feeding for soft foods. It uses 'pre-acquisition actions' such as pushing, cutting, and flipping to improve the success rate of bite acquisition actions such as skewering, scooping, and twirling. If the data-driven model predicts low success for direct bite acquisition, the system initiates a Real2Sim phase, reconstructing the food's geometry in a simulation. The robot explores various pre-acquisition actions in the simulation, then a Sim2Real step renders a photorealistic image to reassess success rates. If the success improves, the robot applies the action in reality. We evaluate the system on 15 diverse plates with 10 types of food items for a soft food diet, showing improvement in bite acquisition success rates by 27% on average across all plates. See our project website at https://emprise.cs.cornell.edu/repeat.
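
The decision flow described in the REPeat abstract can be sketched roughly as follows. This is an illustrative sketch only, not the authors' implementation: the function name `choose_pre_acquisition`, the callables `predict_success` and `simulate`, and the `threshold` value are all hypothetical stand-ins for the learned success model, the Real2Sim/Sim2Real steps, and the "low success" cutoff, none of which the abstract specifies.

```python
from typing import Callable, Iterable, Optional, TypeVar

Plate = TypeVar("Plate")    # hypothetical: any representation of the food state
Action = TypeVar("Action")  # hypothetical: e.g. "push", "cut", "flip"


def choose_pre_acquisition(
    plate: Plate,
    candidates: Iterable[Action],
    predict_success: Callable[[Plate], float],
    simulate: Callable[[Plate, Action], Plate],
    threshold: float = 0.5,  # assumed cutoff; not given in the abstract
) -> Optional[Action]:
    """Return a pre-acquisition action to apply first, or None to acquire directly."""
    baseline = predict_success(plate)
    if baseline >= threshold:
        # Predicted success is already high enough: skip simulation entirely.
        return None

    best_action, best_score = None, baseline
    for action in candidates:
        # Try the action in simulation, then re-score the simulated outcome.
        score = predict_success(simulate(plate, action))
        if score > best_score:
            best_action, best_score = action, score

    # None here means no simulated action improved on the baseline.
    return best_action
```

In this sketch the robot applies a pre-acquisition action in reality only when the simulated outcome scores strictly higher than the original prediction, mirroring the "if the success improves" condition in the abstract.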

FLAIR: Feeding via Long-horizon AcquIsition of Realistic dishes

Jul 10, 2024

Abstract:Robot-assisted feeding has the potential to improve the quality of life for individuals with mobility limitations who are unable to feed themselves independently. However, there exists a large gap between the homogeneous, curated plates existing feeding systems can handle, and truly in-the-wild meals. Feeding realistic plates is immensely challenging due to the sheer range of food items that a robot may encounter, each requiring specialized manipulation strategies which must be sequenced over a long horizon to feed an entire meal. An assistive feeding system should not only be able to sequence different strategies efficiently in order to feed an entire meal, but also be mindful of user preferences given the personalized nature of the task. We address this with FLAIR, a system for long-horizon feeding which leverages the commonsense and few-shot reasoning capabilities of foundation models, along with a library of parameterized skills, to plan and execute user-preferred and efficient bite sequences. In real-world evaluations across 6 realistic plates, we find that FLAIR can effectively tap into a varied library of skills for efficient food pickup, while adhering to the diverse preferences of 42 participants without mobility limitations as evaluated in a user study. We demonstrate the seamless integration of FLAIR with existing bite transfer methods [19, 28], and deploy it across 2 institutions and 3 robots, illustrating its adaptability. Finally, we illustrate the real-world efficacy of our system by successfully feeding a care recipient with severe mobility limitations. Supplementary materials and videos can be found at: https://emprise.cs.cornell.edu/flair.
