Sidharth Vasudev

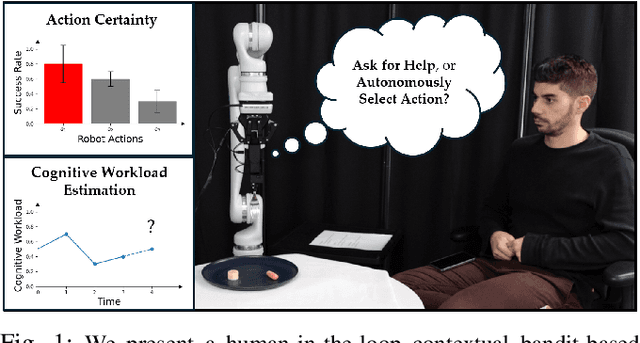

To Ask or Not To Ask: Human-in-the-loop Contextual Bandits with Applications in Robot-Assisted Feeding

May 11, 2024

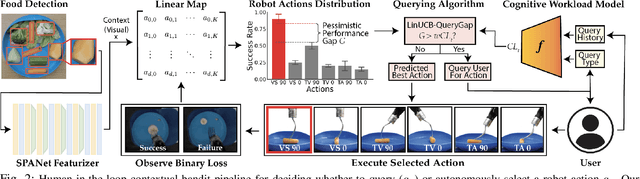

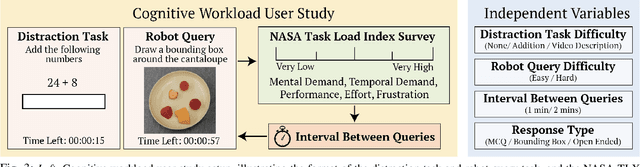

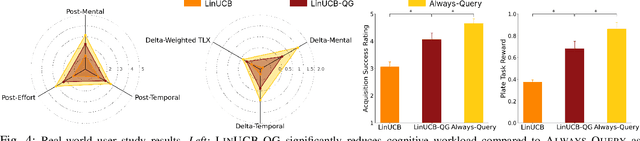

Abstract: Robot-assisted bite acquisition involves picking up food items that vary in their shape, compliance, size, and texture. A fully autonomous strategy for bite acquisition is unlikely to efficiently generalize to this wide variety of food items. We propose to leverage the presence of the care recipient to provide feedback when the system encounters novel food items. However, repeatedly asking for help imposes cognitive workload on the user. In this work, we formulate human-in-the-loop bite acquisition within a contextual bandit framework and propose a novel method, LinUCB-QG, that selectively asks for help. This method leverages a predictive model of cognitive workload in response to different types and timings of queries, learned using data from 89 participants collected in an online user study. We demonstrate that this method enhances the balance between task performance and cognitive workload compared to autonomous and querying baselines, through experiments in a food dataset-based simulator and a user study with 18 participants without mobility limitations.
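To make the idea of selective querying in a contextual bandit concrete, the sketch below pairs a standard disjoint LinUCB learner with a simple ask-or-act gate. It is an illustration only and not the paper's LinUCB-QG: the abstract does not specify the query rule, so `should_query`, `gap_threshold`, `workload_budget`, and the scalar `predicted_workload` input are all hypothetical names and assumptions introduced here.

```python
import numpy as np


class LinUCB:
    """Standard disjoint LinUCB: one ridge-regression model per arm."""

    def __init__(self, n_arms, dim, alpha=1.0):
        self.alpha = alpha  # exploration weight
        self.A = [np.eye(dim) for _ in range(n_arms)]    # per-arm design matrices
        self.b = [np.zeros(dim) for _ in range(n_arms)]  # per-arm reward vectors

    def ucb_scores(self, x):
        """Return the upper confidence bound of each arm for context x."""
        scores = []
        for A, b in zip(self.A, self.b):
            A_inv = np.linalg.inv(A)
            theta = A_inv @ b                              # ridge estimate
            bonus = self.alpha * np.sqrt(x @ A_inv @ x)    # uncertainty bonus
            scores.append(theta @ x + bonus)
        return np.array(scores)

    def update(self, arm, x, reward):
        """Incorporate the observed reward for the chosen arm."""
        self.A[arm] += np.outer(x, x)
        self.b[arm] += reward * x


def should_query(scores, predicted_workload, gap_threshold=0.2, workload_budget=0.5):
    """Hypothetical gate (an assumption, not the paper's rule): ask the user
    only when the two best arms are nearly tied AND the predicted cognitive
    workload of asking stays under a budget."""
    top_two = np.sort(scores)[-2:]
    gap = top_two[1] - top_two[0]
    return bool(gap < gap_threshold and predicted_workload < workload_budget)


# Usage: two acquisition actions (arms), a 2-dimensional food context.
bandit = LinUCB(n_arms=2, dim=2)
x = np.array([1.0, 0.0])
scores = bandit.ucb_scores(x)
if should_query(scores, predicted_workload=0.1):
    arm = 0  # stand-in for the action the user would suggest
else:
    arm = int(np.argmax(scores))
bandit.update(arm, x, reward=1.0)
```

In this sketch the workload prediction is passed in as a single number; in the setting described above it would come from a learned model that accounts for query type and timing.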
