Reinforcement Learning with Fairness Constraints for Resource Distribution in Human-Robot Teams


Jul 08, 2019

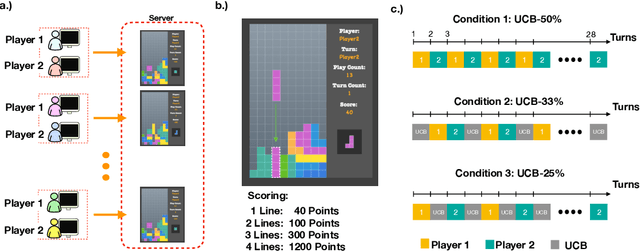

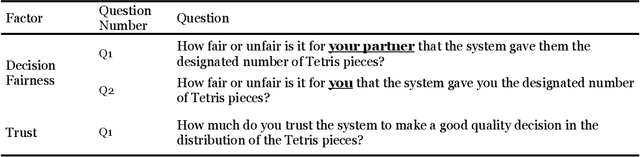

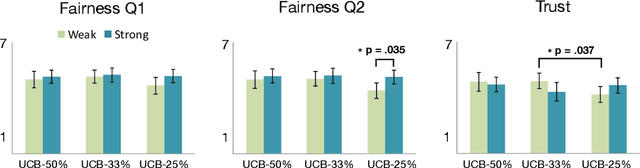

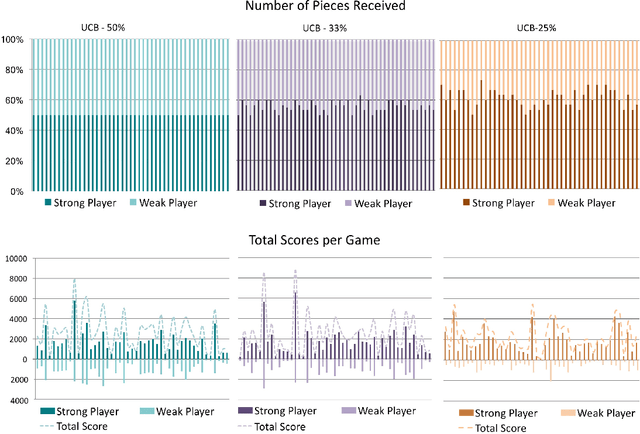

Much work in robotics and operations research has focused on optimal resource distribution, where an agent dynamically decides how to sequentially distribute resources among different candidates. However, most work ignores the notion of fairness in candidate selection. In the case where a robot distributes resources to human team members, disproportionately favoring the highest-performing teammate can have negative effects on team dynamics and system acceptance. We introduce a multi-armed bandit algorithm with fairness constraints, where a robot distributes resources to human teammates of different skill levels. In this problem, the robot does not know the skill level of each human teammate, but learns it by observing their performance over time. We define fairness as a constraint on the minimum rate at which each human teammate is selected throughout the task. We provide theoretical guarantees on performance and perform a large-scale user study, where we adjust the level of fairness in our algorithm. Results show that fairness in resource distribution has a significant effect on users' trust in the system.
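To make the idea of a minimum selection rate concrete, the following is a minimal sketch and not the paper's algorithm: it combines a standard UCB1 index with a simple fairness floor. The function name `fair_ucb`, the parameters `success_probs`, `min_rate`, and `horizon`, and the Bernoulli model of teammate performance are all assumptions made for illustration. In each round, any teammate whose selection count has fallen below `min_rate * t` is served first; otherwise the teammate with the highest upper confidence bound is chosen.

```python
import math
import random


def fair_ucb(success_probs, min_rate, horizon, seed=0):
    """Simulate UCB1 with a minimum-selection-rate floor (illustrative only).

    success_probs: hypothetical per-teammate success probabilities,
                   unknown to the learner and used only to draw rewards.
    min_rate:      required minimum fraction of rounds for each teammate.
    horizon:       number of rounds to simulate.
    Returns the number of times each teammate was selected.
    """
    k = len(success_probs)
    if min_rate * k > 1:
        raise ValueError("min_rate is infeasible: min_rate * k must be <= 1")

    rng = random.Random(seed)
    counts = [0] * k   # times each teammate has been selected
    sums = [0.0] * k   # total observed reward per teammate

    for t in range(1, horizon + 1):
        floor_t = math.floor(min_rate * t)
        behind = [i for i in range(k) if counts[i] < floor_t]
        if behind:
            # Fairness step: serve the teammate furthest below the floor.
            arm = min(behind, key=lambda i: counts[i])
        elif 0 in counts:
            # Select every teammate once before using the UCB index.
            arm = counts.index(0)
        else:
            # Standard UCB1 index: empirical mean plus exploration bonus.
            arm = max(
                range(k),
                key=lambda i: sums[i] / counts[i]
                + math.sqrt(2 * math.log(t) / counts[i]),
            )
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward

    return counts
```

Calling `fair_ucb([0.9, 0.5, 0.2], min_rate=0.2, horizon=1000)` returns the per-teammate selection counts. In this sketch each count stays within a small lag (at most the number of teammates) of `min_rate * horizon`, while the remaining rounds go to whichever teammate the UCB index currently favors. Setting `min_rate` to 0 recovers plain UCB1, which is one way to vary the level of fairness.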
