Khanh Nguyen

Retrieving Objects from 3D Scenes with Box-Guided Open-Vocabulary Instance Segmentation

Dec 22, 2025

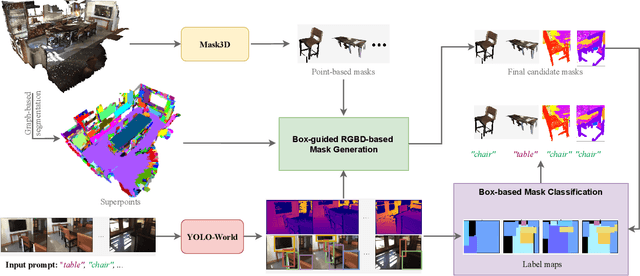

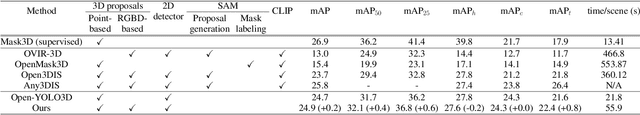

Abstract: Locating and retrieving objects from scene-level point clouds is a challenging problem with broad applications in robotics and augmented reality. This task is commonly formulated as open-vocabulary 3D instance segmentation. Although recent methods demonstrate strong performance, they depend heavily on SAM and CLIP to generate and classify 3D instance masks from images accompanying the point cloud, leading to substantial computational overhead and slow processing that limit their deployment in real-world settings. Open-YOLO 3D alleviates this issue by using a real-time 2D detector to classify class-agnostic masks produced directly from the point cloud by a pretrained 3D segmenter, eliminating the need for SAM and CLIP and significantly reducing inference time. However, Open-YOLO 3D often fails to generalize to object categories that appear infrequently in the 3D training data. In this paper, we propose a method that generates 3D instance masks for novel objects from RGB images guided by a 2D open-vocabulary detector. Our approach inherits the 2D detector's ability to recognize novel objects while maintaining efficient classification, enabling fast and accurate retrieval of rare instances from open-ended text queries. Our code will be made available at https://github.com/ndkhanh360/BoxOVIS.

NVIDIA Nemotron Nano V2 VL

Nov 07, 2025

Abstract: We introduce Nemotron Nano V2 VL, the latest model of the Nemotron vision-language series designed for strong real-world document understanding, long video comprehension, and reasoning tasks. Nemotron Nano V2 VL delivers significant improvements over our previous model, Llama-3.1-Nemotron-Nano-VL-8B, across all vision and text domains through major enhancements in model architecture, datasets, and training recipes. Nemotron Nano V2 VL builds on Nemotron Nano V2, a hybrid Mamba-Transformer LLM, and innovative token reduction techniques to achieve higher inference throughput in long document and video scenarios. We are releasing model checkpoints in BF16, FP8, and FP4 formats and sharing large parts of our datasets, recipes and training code.

DocMIA: Document-Level Membership Inference Attacks against DocVQA Models

Feb 06, 2025

Abstract: Document Visual Question Answering (DocVQA) has introduced a new paradigm for end-to-end document understanding and has quickly become one of the standard benchmarks for multimodal LLMs. Automating document processing workflows, driven by DocVQA models, presents significant potential for many business sectors. However, documents tend to contain highly sensitive information, raising concerns about privacy risks associated with training such DocVQA models. One significant privacy vulnerability, exploited by the membership inference attack, is the possibility for an adversary to determine whether a particular record was part of the model's training data. In this paper, we introduce two novel membership inference attacks tailored specifically to DocVQA models. These attacks are designed for two different adversarial scenarios: a white-box setting, where the attacker has full access to the model architecture and parameters, and a black-box setting, where only the model's outputs are available. Notably, our attacks assume the adversary lacks access to auxiliary datasets, which is more realistic in practice but also more challenging. Our unsupervised methods outperform existing state-of-the-art membership inference attacks across a variety of DocVQA models and datasets, demonstrating their effectiveness and highlighting the privacy risks in this domain.

NeurIPS 2023 Competition: Privacy Preserving Federated Learning Document VQA

Nov 06, 2024

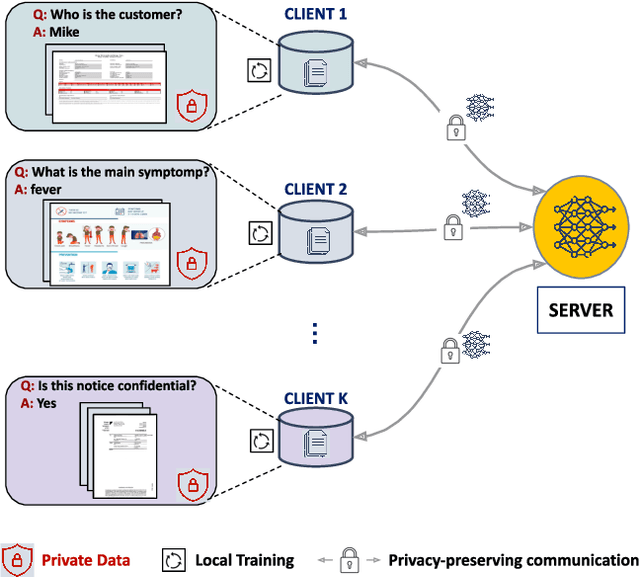

Abstract: The Privacy Preserving Federated Learning Document VQA (PFL-DocVQA) competition challenged the community to develop provably private and communication-efficient solutions in a federated setting for a real-life use case: invoice processing. The competition introduced a dataset of real invoice documents, along with associated questions and answers requiring information extraction and reasoning over the document images. Thereby, it brings together researchers and expertise from the document analysis, privacy, and federated learning communities. Participants fine-tuned a pre-trained, state-of-the-art Document Visual Question Answering model provided by the organizers for this new domain, mimicking a typical federated invoice processing setup. The base model is a multi-modal generative language model, and sensitive information could be exposed through either the visual or textual input modality. Participants proposed elegant solutions to reduce communication costs while maintaining a minimum utility threshold in track 1 and to protect all information from each document provider using differential privacy in track 2. The competition served as a new testbed for developing and testing private federated learning methods, simultaneously raising awareness about privacy within the document image analysis and recognition community. Ultimately, the competition analysis provides best practices and recommendations for successfully running privacy-focused federated learning challenges in the future.

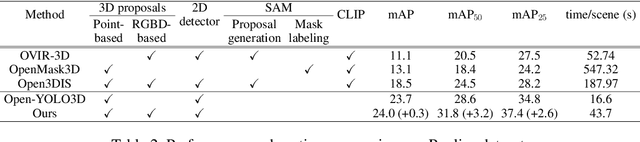

Improving Electrolyte Performance for Target Cathode Loading Using Interpretable Data-Driven Approach

Sep 03, 2024

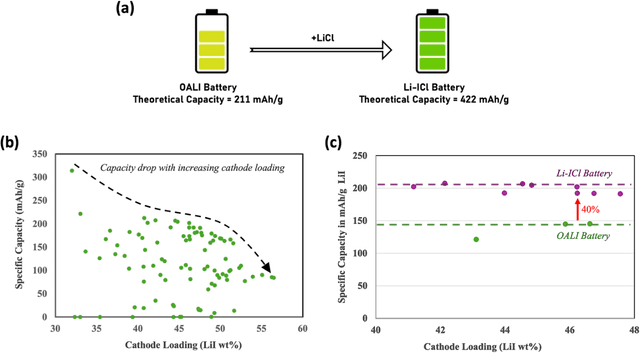

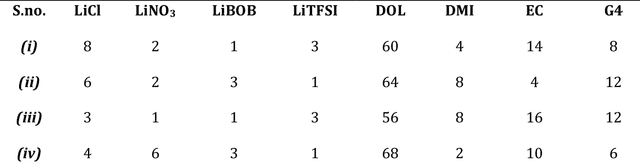

Abstract: Higher loading of active electrode materials is desired in batteries, especially those based on conversion reactions, for enhanced energy density and cost efficiency. However, increasing active material loading in electrodes can cause significant performance degradation due to internal resistance, shuttling, and parasitic side reactions, which can be alleviated to a certain extent by a compatible electrolyte design. In this work, a data-driven approach is leveraged to find a high-performing electrolyte formulation for a novel interhalogen battery, customized to the target cathode loading. An electrolyte design consisting of 4 solvents and 4 salts is experimentally devised for a novel interhalogen battery based on a multi-electron redox reaction. The experimental dataset, with variable electrolyte compositions and active cathode loading, is used to train a graph-based deep learning model that maps the changing variables in the battery's material design to its specific capacity. The trained model is then used to further optimize the electrolyte formulation to enhance the battery capacity at a target cathode loading by a two-fold approach: large-scale screening and interpreting electrolyte design principles for different cathode loadings. The data-driven approach is shown to yield an additional 20% increase in the specific capacity of the battery over the capacities obtained from experimental optimization.

Active Sensing of Knee Osteoarthritis Progression with Reinforcement Learning

Aug 05, 2024

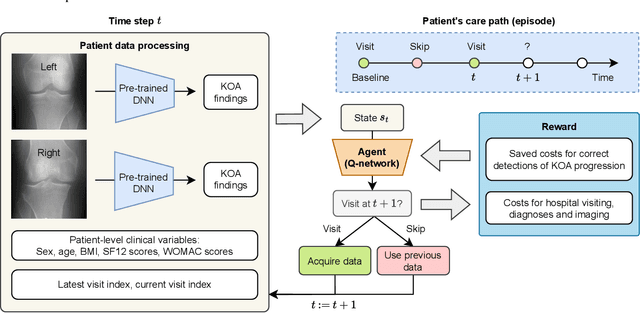

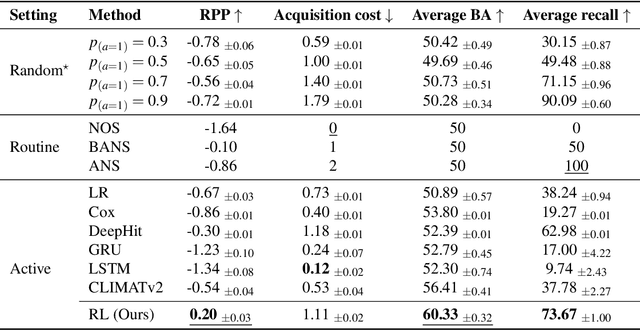

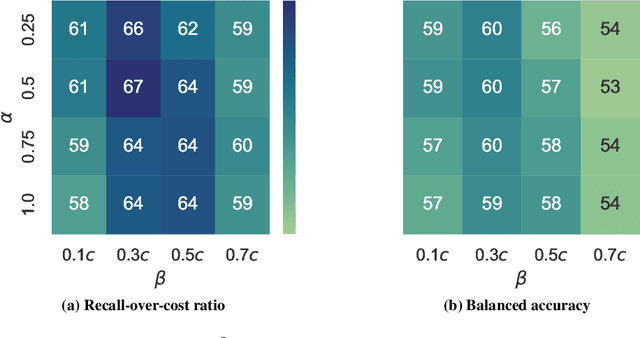

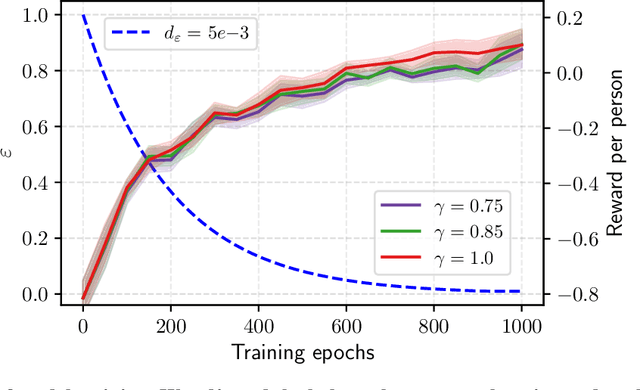

Abstract: Osteoarthritis (OA) is the most common musculoskeletal disease, and it has no cure. Knee OA (KOA) is one of the leading causes of disability worldwide, and it costs the global community billions of United States dollars. Prediction of KOA progression has been of high interest to the community for years, as it can advance treatment development through more efficient clinical trials and improve patient outcomes through more efficient healthcare utilization. Existing approaches for predicting KOA, however, are predominantly static, i.e., they consider data from a single time point to predict progression many years into the future, and knee-level, i.e., they consider progression in a single joint only. For these and related reasons, these methods fail to deliver the level of predictive performance needed to produce cost savings and better patient outcomes. Collecting extensive data from all patients on a regular basis could address the issue, but it is limited by the high cost at a population level. In this work, we propose to go beyond static prediction models in OA and introduce a novel Active Sensing (AS) approach, designed to dynamically follow up patients with the objective of maximizing the number of informative data acquisitions while minimizing their total cost over a period of time. Our approach is based on Reinforcement Learning (RL), and it leverages a novel reward function designed specifically for AS of disease progression in more than one part of the human body. Our method is end-to-end, relies on multi-modal Deep Learning, and requires no human input at inference time. Through an exhaustive experimental evaluation, we show that using RL can provide a higher monetary benefit when compared to state-of-the-art baselines.

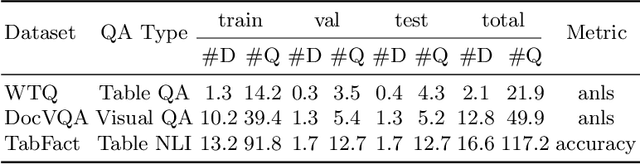

Federated Document Visual Question Answering: A Pilot Study

May 10, 2024

Abstract: An important handicap of document analysis research is that documents tend to be copyrighted or contain private information, which prohibits their open publication and the creation of centralised, large-scale document datasets. Instead, documents are scattered in private data silos, making extensive training over heterogeneous data a tedious task. In this work, we explore the use of a federated learning (FL) scheme as a way to train a shared model on decentralised private document data. We focus on the problem of Document VQA, a task particularly suited to this approach, as the type of reasoning capabilities required from the model can be quite different in diverse domains. Enabling training over heterogeneous document datasets can thus substantially enrich DocVQA models. We assemble existing DocVQA datasets from diverse domains to reflect the data heterogeneity in real-world applications. We explore the self-pretraining technique in this multi-modal setting, where the same data is used for both pretraining and finetuning, making it relevant for privacy preservation. We further propose combining self-pretraining with a Federated DocVQA training method using centralized adaptive optimization that outperforms the FedAvg baseline. With extensive experiments, we also present a multi-faceted analysis of training DocVQA models with FL, which provides insights for future research on this task. We show that our pretraining strategies can effectively learn and scale up under federated training with diverse DocVQA datasets, and that tuning hyperparameters is essential for practical document tasks under federation.
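
For readers unfamiliar with the FedAvg baseline named in this abstract, the following is a minimal sketch of its server-side aggregation step only: each client's parameters are averaged, weighted by that client's number of training examples. It is not the paper's training code, and it does not show the centralized adaptive optimization the authors propose. The function name `fedavg` and the flat-list representation of model parameters are assumptions made for illustration.

```python
def fedavg(client_weights, client_sizes):
    """Weighted average of client parameter vectors (FedAvg aggregation).

    client_weights: list of equal-length lists of floats, one per client
                    (hypothetical flat representation of model parameters).
    client_sizes:   number of local training examples held by each client.
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("one size is required per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    # Each parameter is averaged across clients, weighted by local data size.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two clients: the second holds three times as much data as the first.
global_weights = fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_weights)
```

In a full round, the server would send `global_weights` back to the clients, each client would train locally on its private documents, and the aggregation would repeat.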

Successfully Guiding Humans with Imperfect Instructions by Highlighting Potential Errors and Suggesting Corrections

Feb 26, 2024

Abstract: This paper addresses the challenge of leveraging imperfect language models to guide human decision-making in the context of a grounded navigation task. We show that an imperfect instruction generation model can be complemented with an effective communication mechanism to become more successful at guiding humans. The communication mechanism we build comprises models that can detect potential hallucinations in instructions and suggest practical alternatives, and an intuitive interface to present that information to users. We show that this approach reduces the human navigation error by up to 29% with no additional cognitive burden. This result underscores the potential of integrating diverse communication channels into AI systems to compensate for their imperfections and enhance their utility for humans.

Softmax Probabilities (Mostly) Predict Large Language Model Correctness on Multiple-Choice Q&A

Feb 20, 2024

Abstract: Although large language models (LLMs) perform impressively on many tasks, overconfidence remains a problem. We hypothesized that on multiple-choice Q&A tasks, wrong answers would be associated with smaller maximum softmax probabilities (MSPs) compared to correct answers. We comprehensively evaluate this hypothesis on ten open-source LLMs and five datasets, and find strong evidence for our hypothesis among models which perform well on the original Q&A task. For the six LLMs with the best Q&A performance, the AUROC derived from the MSP was better than random chance with p < 10^{-4} in 59/60 instances. Among those six LLMs, the average AUROC ranged from 60% to 69%. Leveraging these findings, we propose a multiple-choice Q&A task with an option to abstain and show that performance can be improved by selectively abstaining based on the MSP of the initial model response. We also run the same experiments with pre-softmax logits instead of softmax probabilities and find similar (but not identical) results.
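
The quantities in this abstract can be illustrated with a short sketch, assuming the model exposes one logit per answer option. It computes the maximum softmax probability (MSP), abstains when the MSP falls below a threshold, and estimates AUROC as the probability that a correct answer receives a higher MSP than a wrong one. The function names, the example logits, and the threshold value are hypothetical and are not taken from the paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def answer_or_abstain(logits, threshold):
    """Return (choice index or None, MSP); None means the model abstains."""
    probs = softmax(logits)
    msp = max(probs)
    if msp < threshold:
        return None, msp
    return probs.index(msp), msp


def auroc(msp_correct, msp_wrong):
    """Fraction of (correct, wrong) pairs ranked correctly by MSP; ties count half."""
    wins = 0.0
    for c in msp_correct:
        for w in msp_wrong:
            if c > w:
                wins += 1.0
            elif c == w:
                wins += 0.5
    return wins / (len(msp_correct) * len(msp_wrong))


# A confident prediction is answered; a near-uniform one is abstained on.
confident = answer_or_abstain([2.0, 0.0, 0.0, 0.0], threshold=0.5)
uncertain = answer_or_abstain([0.1, 0.0, 0.0, 0.0], threshold=0.5)
print(confident[0], uncertain[0])
```

An AUROC of 0.5 from `auroc` corresponds to random chance, so the 60% to 69% range reported in the abstract indicates a modest but real separation between correct and wrong answers.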

Language-Guided World Models: A Model-Based Approach to AI Control

Jan 24, 2024

Abstract: Installing probabilistic world models into artificial agents opens an efficient channel for humans to communicate with and control these agents. In addition to updating agent policies, humans can modify their internal world models in order to influence their decisions. The challenge, however, is that existing world models are difficult for humans to adapt because they lack a natural communication interface. Aimed at addressing this shortcoming, we develop Language-Guided World Models (LWMs), which can capture environment dynamics by reading language descriptions. These models enhance agent communication efficiency, allowing humans to simultaneously alter their behavior on multiple tasks with concise language feedback. They also enable agents to self-learn from texts originally written to instruct humans. To facilitate the development of LWMs, we design a challenging benchmark based on the game of MESSENGER (Hanjie et al., 2021), requiring compositional generalization to new language descriptions and environment dynamics. Our experiments reveal that the current state-of-the-art Transformer architecture performs poorly on this benchmark, motivating us to design a more robust architecture. To showcase the practicality of our proposed LWMs, we simulate a scenario where these models augment the interpretability and safety of an agent by enabling it to generate and discuss plans with a human before execution. By effectively incorporating language feedback on the plan, the models boost the agent performance in the real environment by up to three times without collecting any interactive experiences in this environment.
