Ke Jin

DVD: A Robust Method for Detecting Variant Contamination in Large Language Model Evaluation

Jan 08, 2026

Abstract:Evaluating large language models (LLMs) is increasingly confounded by variant contamination: the training corpus contains semantically equivalent yet lexically or syntactically altered versions of test items. Unlike verbatim leakage, these paraphrased or structurally transformed variants evade existing detectors based on sampling consistency or perplexity, thereby inflating benchmark scores via memorization rather than genuine reasoning. We formalize this problem and introduce DVD (Detection via Variance of generation Distribution), a single-sample detector that models the local output distribution induced by temperature sampling. Our key insight is that contaminated items trigger alternation between a memory-adherence state and a perturbation-drift state, yielding abnormally high variance in the synthetic difficulty of low-probability tokens; uncontaminated items remain in drift with comparatively smooth variance. We construct the first benchmark for variant contamination across two domains, Omni-MATH and SuperGPQA, by generating and filtering semantically equivalent variants, and simulate contamination via fine-tuning models of different scales and architectures (Qwen2.5 and Llama3.1). Across datasets and models, DVD consistently outperforms perplexity-based, Min-k%++, edit-distance (CDD), and embedding-similarity baselines, while exhibiting strong robustness to hyperparameters. Our results establish variance of the generation distribution as a principled and practical fingerprint for detecting variant contamination in LLM evaluation.

Alternating Training-based Label Smoothing Enhances Prompt Generalization

Aug 25, 2025

Abstract:Recent advances in pre-trained vision-language models have demonstrated remarkable zero-shot generalization capabilities. To further enhance these models' adaptability to various downstream tasks, prompt tuning has emerged as a parameter-efficient fine-tuning method. However, despite its efficiency, the generalization ability of the learned prompts remains limited. In contrast, label smoothing (LS) has been widely recognized as an effective regularization technique that prevents models from becoming over-confident and improves their generalization. This inspires us to explore the integration of LS with prompt tuning. However, we observe that vanilla LS actually weakens the generalization ability of prompt tuning. To address this issue, we propose the Alternating Training-based Label Smoothing (ATLaS) method, which alternately trains with standard one-hot labels and soft labels generated by LS to supervise the prompt tuning. Moreover, we introduce two types of efficient offline soft labels, Class-wise Soft Labels (CSL) and Instance-wise Soft Labels (ISL), to provide inter-class or instance-class relationships for prompt tuning. The theoretical properties of the proposed ATLaS method are analyzed. Extensive experiments demonstrate that the proposed ATLaS method, combined with CSL and ISL, consistently enhances the generalization performance of prompt tuning. Moreover, the proposed ATLaS method exhibits high compatibility with prevalent prompt tuning methods, enabling seamless integration into existing methods.
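The alternation between one-hot and smoothed targets described in this abstract can be illustrated with a minimal sketch. It uses the standard uniform label-smoothing formula; the per-epoch schedule, the function names, and the default `eps` are assumptions made for illustration and are not taken from the paper, which also uses CSL and ISL soft labels that are not modeled here.

```python
def one_hot(label, num_classes):
    """Return a one-hot target vector for the given class index."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]


def smooth(label, num_classes, eps=0.1):
    """Standard uniform label smoothing: (1 - eps) * one_hot + eps / K."""
    return [(1.0 - eps) * v + eps / num_classes
            for v in one_hot(label, num_classes)]


def target_for_epoch(epoch, label, num_classes, eps=0.1):
    """Hypothetical alternation schedule (an assumption, not the paper's):
    even epochs use one-hot targets, odd epochs use smoothed targets."""
    if epoch % 2 == 0:
        return one_hot(label, num_classes)
    return smooth(label, num_classes, eps)


if __name__ == "__main__":
    for epoch in range(4):
        print(epoch, target_for_epoch(epoch, label=1, num_classes=4))
```

In a training loop, the returned vector would serve as the cross-entropy target for that epoch; the granularity of alternation (epoch, step, or otherwise) is left open here.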

SimpleVQA: Multimodal Factuality Evaluation for Multimodal Large Language Models

Feb 18, 2025

Abstract:The increasing application of multi-modal large language models (MLLMs) across various sectors has spotlighted the importance of their output reliability and accuracy, particularly their ability to produce content grounded in factual information (e.g., common and domain-specific knowledge). In this work, we introduce SimpleVQA, the first comprehensive multi-modal benchmark to evaluate the factuality of MLLMs when answering short natural-language questions. SimpleVQA is characterized by six key features: it covers multiple tasks and multiple scenarios, ensures high-quality and challenging queries, maintains static and timeless reference answers, and is straightforward to evaluate. Our approach involves categorizing visual question-answering items into 9 different tasks around objective events or common knowledge and situating these within 9 topics. Rigorous quality control processes are implemented to guarantee high-quality, concise, and clear answers, facilitating evaluation with minimal variance via an LLM-as-a-judge scoring system. Using SimpleVQA, we perform a comprehensive assessment of 18 leading MLLMs and 8 text-only LLMs, delving into their image comprehension and text generation abilities by identifying and analyzing error cases.

Bayesian Optimization by Kernel Regression and Density-based Exploration

Feb 10, 2025

Abstract:Bayesian optimization is highly effective for optimizing expensive-to-evaluate black-box functions, but it faces significant computational challenges due to the high computational complexity of Gaussian processes, which results in a total time complexity that is quartic with respect to the number of iterations. To address this limitation, we propose the Bayesian Optimization by Kernel regression and density-based Exploration (BOKE) algorithm. BOKE uses kernel regression for efficient function approximation, kernel density for exploration, and the improved kernel regression upper confidence bound criteria to guide the optimization process, thus reducing computational costs to quadratic. Our theoretical analysis rigorously establishes the global convergence of BOKE and ensures its robustness. Through extensive numerical experiments on both synthetic and real-world optimization tasks, we demonstrate that BOKE not only performs competitively compared to Gaussian process-based methods but also exhibits superior computational efficiency. These results highlight BOKE's effectiveness in resource-constrained environments, providing a practical approach for optimization problems in engineering applications.
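To make the two ingredients named in this abstract concrete, the following is a rough one-dimensional sketch of Nadaraya-Watson kernel regression combined with a kernel density estimate used as an exploration bonus. The specific acquisition form (`mean + beta / sqrt(density)`), the Gaussian kernel, and all names and defaults are assumptions chosen for illustration; the abstract does not give BOKE's actual criterion, so this should not be read as the authors' algorithm.

```python
import math


def gaussian_kernel(x, xi, h):
    """Gaussian kernel weight between query x and sample xi, bandwidth h."""
    return math.exp(-0.5 * ((x - xi) / h) ** 2)


def kernel_regression(x, xs, ys, h):
    """Nadaraya-Watson estimate: kernel-weighted average of observed values."""
    weights = [gaussian_kernel(x, xi, h) for xi in xs]
    total = sum(weights)
    if total == 0.0:
        # All weights underflowed; fall back to the plain mean.
        return sum(ys) / len(ys)
    return sum(w * y for w, y in zip(weights, ys)) / total


def kernel_density(x, xs, h):
    """Gaussian kernel density estimate of how densely x has been sampled."""
    norm = len(xs) * h * math.sqrt(2.0 * math.pi)
    return sum(gaussian_kernel(x, xi, h) for xi in xs) / norm


def acquisition(x, xs, ys, h, beta=1.0, eps=1e-12):
    """Illustrative UCB-style score (assumed form): predicted value plus a
    bonus that grows where the sample density is low."""
    bonus = beta / math.sqrt(kernel_density(x, xs, h) + eps)
    return kernel_regression(x, xs, ys, h) + bonus


if __name__ == "__main__":
    xs, ys = [0.0, 1.0, 2.0], [0.1, 0.9, 0.3]
    candidates = [i / 10 for i in range(-10, 41)]
    best = max(candidates, key=lambda c: acquisition(c, xs, ys, h=0.5))
    print("next query point:", best)
```

Each score costs time linear in the number of observations, which is the property that lets a kernel-regression surrogate avoid the cubic per-iteration cost of refitting a Gaussian process.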

ExecRepoBench: Multi-level Executable Code Completion Evaluation

Dec 16, 2024

Abstract:Code completion has become an essential tool for daily software development. Existing evaluation benchmarks often employ static methods that do not fully capture the dynamic nature of real-world coding environments and face significant challenges, including limited context length, reliance on superficial evaluation metrics, and potential overfitting to training datasets. In this work, we introduce a novel framework for enhancing code completion in software development through the creation of a repository-level benchmark, ExecRepoBench, and the instruction corpus Repo-Instruct, aimed at improving the functionality of open-source large language models (LLMs) in real-world coding scenarios that involve complex interdependencies across multiple files. ExecRepoBench includes 1.2K samples from active Python repositories. In addition, we present a multi-level grammar-based completion methodology conditioned on the abstract syntax tree to mask code fragments at various logical units (e.g., statements, expressions, and functions). We then fine-tune an open-source LLM with 7B parameters on Repo-Instruct to produce a strong code completion baseline model, Qwen2.5-Coder-Instruct-C. Qwen2.5-Coder-Instruct-C is rigorously evaluated on existing benchmarks, including MultiPL-E and ExecRepoBench, and consistently outperforms prior baselines across all programming languages. The resulting model can be deployed as a high-performance, local service for programming development (https://execrepobench.github.io/).
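The idea of masking code at different syntactic levels can be sketched with Python's standard `ast` module. The helper below, `mask_first`, is a hypothetical name introduced here; it masks the first node of a requested type and returns both the masked source and the hidden span. It assumes ASCII source (the `ast` column offsets are UTF-8 byte offsets) and is only an illustration of AST-conditioned masking, not the benchmark's actual pipeline.

```python
import ast


def mask_first(source, node_type, placeholder="<MASK>"):
    """Mask the first AST node of `node_type` found in `source`.

    Returns (masked_source, hidden_text), or (source, None) if no node
    of that type exists. Assumes ASCII source so that column offsets
    match character positions.
    """
    tree = ast.parse(source)
    lines = source.splitlines(keepends=True)

    def offset(lineno, col):
        # Convert a 1-based line number and column to an absolute index.
        return sum(len(line) for line in lines[:lineno - 1]) + col

    for node in ast.walk(tree):
        if isinstance(node, node_type):
            start = offset(node.lineno, node.col_offset)
            end = offset(node.end_lineno, node.end_col_offset)
            return source[:start] + placeholder + source[end:], source[start:end]
    return source, None


if __name__ == "__main__":
    code = "def add(a, b):\n    return a + b\n"
    # Statement-level mask, then expression-level mask.
    print(mask_first(code, ast.Return))
    print(mask_first(code, ast.BinOp))
```

Passing `ast.FunctionDef` instead would hide a whole function, giving the third granularity mentioned in the abstract; a completion model would then be asked to regenerate the hidden span from the surrounding context.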

Evaluating and Aligning CodeLLMs on Human Preference

Dec 06, 2024

Abstract:Code large language models (codeLLMs) have made significant strides in code generation. Most previous code-related benchmarks, which consist of various programming exercises along with the corresponding test cases, are used as a common measure to evaluate the performance and capabilities of code LLMs. However, current code LLMs focus on synthesizing the correct code snippet and ignore alignment with human preferences, where queries should be sampled from practical application scenarios and model-generated responses should satisfy human preference. To bridge the gap between model-generated responses and human preference, we present a rigorous human-curated benchmark, CodeArena, to emulate the complexity and diversity of real-world coding tasks. It comprises 397 high-quality samples spanning 40 categories and 44 programming languages, carefully curated from user queries. Further, we propose a diverse synthetic instruction corpus, SynCode-Instruct (nearly 20B tokens), built by scaling instructions from the website, to verify the effectiveness of large-scale synthetic instruction fine-tuning: Qwen2.5-SynCoder, trained entirely on synthetic instruction data, achieves top-tier performance among open-source code LLMs. The results reveal performance differences between execution-based benchmarks and CodeArena. Our systematic experiments with CodeArena on 40+ LLMs reveal a notable performance gap between open SOTA code LLMs (e.g., Qwen2.5-Coder) and proprietary LLMs (e.g., OpenAI o1), underscoring the importance of human preference alignment (https://codearenaeval.github.io/).

MdEval: Massively Multilingual Code Debugging

Nov 04, 2024

Abstract:Code large language models (LLMs) have made significant progress in code debugging by directly generating the correct code based on the buggy code snippet. Programming benchmarks, typically consisting of buggy code snippets and their associated test cases, are used to assess the debugging capabilities of LLMs. However, many existing benchmarks primarily focus on Python and are often limited in terms of language diversity (e.g., DebugBench and DebugEval). To advance the field of multilingual debugging with LLMs, we propose the first massively multilingual debugging benchmark, which includes 3.6K test samples of 18 programming languages and covers the automated program repair (APR) task, the code review (CR) task, and the bug identification (BI) task. Further, we introduce the debugging instruction corpus MDEVAL-INSTRUCT by injecting bugs into the correct multilingual queries and solutions (xDebugGen). We also train a multilingual debugger, xDebugCoder, on MDEVAL-INSTRUCT as a strong baseline specifically designed to handle the bugs of a wide range of programming languages (e.g., "Missing Mut" in Rust and "Misused Macro Definition" in C). Our extensive experiments on MDEVAL reveal a notable performance gap between open-source models and closed-source LLMs (e.g., GPT and Claude series), highlighting huge room for improvement in multilingual code debugging scenarios.

M2rc-Eval: Massively Multilingual Repository-level Code Completion Evaluation

Oct 28, 2024

Abstract:Repository-level code completion has drawn great attention in software engineering, and several benchmark datasets have been introduced. However, existing repository-level code completion benchmarks usually focus on a limited number of languages (<5), which cannot evaluate the general code intelligence abilities of existing code Large Language Models (LLMs) across different languages. Besides, the existing benchmarks usually report overall average scores across languages, ignoring the fine-grained abilities in different completion scenarios. Therefore, to facilitate the research of code LLMs in multilingual scenarios, we propose a massively multilingual repository-level code completion benchmark covering 18 programming languages (called M2RC-EVAL). It provides two types of fine-grained annotations (i.e., bucket-level and semantic-level) on different completion scenarios, which we obtain from the parsed abstract syntax tree. Moreover, we also curate a massively multilingual instruction corpus, the M2RC-INSTRUCT dataset, to improve the repository-level code completion abilities of existing code LLMs. Comprehensive experimental results demonstrate the effectiveness of our M2RC-EVAL and M2RC-INSTRUCT.

MTFinEval: A Multi-domain Chinese Financial Benchmark with Eurypalynous questions

Aug 20, 2024

Abstract:With the emergence of more and more economy-specific LLMs, how to measure whether they can be safely put into production becomes a problem. Previous research has primarily focused on evaluating the performance of LLMs within specific application scenarios. However, these benchmarks cannot reflect theoretical level and generalization ability, and outdated datasets are increasingly unsuitable for problems in real scenarios. In this paper, we compile a new benchmark, MTFinEval, focusing on LLMs' basic knowledge of economics, which can always be used as a basis for judgment. To examine only theoretical knowledge as far as possible, MTFinEval is built from foundational questions in university textbooks and exam papers for economics and management majors. Because the overall performance of LLMs does not depend solely on one subdiscipline of economics, MTFinEval comprises 360 questions refined from six major disciplines of economics and reflects capabilities more comprehensively. Experimental results show that all LLMs perform poorly on MTFinEval, which shows that our benchmark built on basic knowledge is very successful. Our research not only offers guidance for selecting the appropriate LLM for specific use cases, but also calls for improving the rigor and reliability of LLMs starting from the basics.

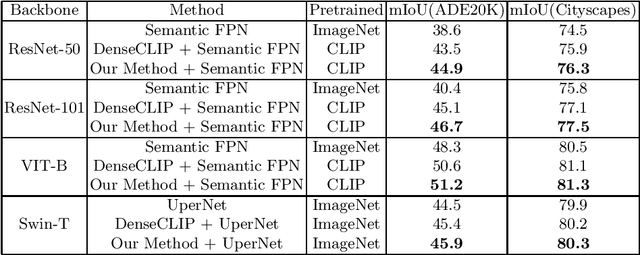

CLIP for Lightweight Semantic Segmentation

Oct 11, 2023

Abstract:The large-scale pretrained model CLIP, trained on 400 million image-text pairs, offers a promising paradigm for tackling vision tasks, albeit at the image level. Later works, such as DenseCLIP and LSeg, extend this paradigm to dense prediction, including semantic segmentation, and have achieved excellent results. However, these methods either rely on CLIP-pretrained visual backbones or use non-pretrained but heavy backbones such as Swin, and they become ineffective when applied to lightweight backbones. The reason is that lightweight networks, whose feature extraction ability is relatively limited, have difficulty producing image features that align well with the text embeddings. In this work, we present a new feature fusion module which tackles this problem and enables the language-guided paradigm to be applied to lightweight networks. Specifically, the module is a parallel design of a CNN and a transformer with a two-way bridge in between, where the CNN extracts spatial information and visual context of the feature map from the image encoder, and the transformer propagates text embeddings from the text encoder forward. The core of the module is the bidirectional fusion of visual and text features across the bridge, which promotes their proximity and alignment in embedding space. The module is model-agnostic: it not only makes language-guided lightweight semantic segmentation practical, but also fully exploits the pretrained knowledge of language priors and achieves better performance than previous SOTA work, such as DenseCLIP, whatever the vision backbone is. Extensive experiments have been conducted to demonstrate the superiority of our method.
