CLIP for Lightweight Semantic Segmentation


Oct 11, 2023

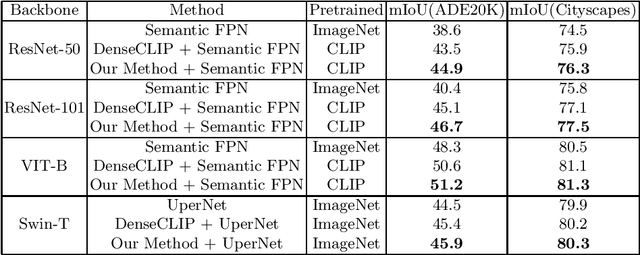

The large-scale pretrained model CLIP, trained on 400 million image-text pairs, offers a promising paradigm for tackling vision tasks, albeit at the image level. Later works, such as DenseCLIP and LSeg, extend this paradigm to dense prediction, including semantic segmentation, and have achieved excellent results. However, these methods either rely on CLIP-pretrained visual backbones or use backbones that are not CLIP-pretrained but are heavy, such as Swin, and they become ineffective when applied to lightweight backbones. The reason is that lightweight networks, whose feature extraction ability is relatively limited, have difficulty producing image features that are well aligned with the text embeddings. In this work, we present a new feature fusion module that tackles this problem and enables the language-guided paradigm to be applied to lightweight networks. Specifically, the module is a parallel design of a CNN and a transformer with a two-way bridge in between: the CNN extracts spatial information and visual context from the feature map produced by the image encoder, while the transformer propagates the text embeddings from the text encoder forward. The core of the module is the bidirectional fusion of visual and text features across the bridge, which promotes their proximity and alignment in the embedding space. The module is model-agnostic: it not only makes language-guided lightweight semantic segmentation practical, but also fully exploits the pretrained knowledge of language priors and outperforms previous SOTA work, such as DenseCLIP, regardless of the vision backbone. Extensive experiments demonstrate the superiority of our method.
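The abstract does not specify the layers inside the bridge, so the following is only a minimal sketch of the general idea under stated assumptions, not the authors' module. It assumes the two-way bridge can be approximated by single-head cross-attention in each direction (pixel features attend to class-name text embeddings and vice versa) with residual connections and no learned projections, and it then scores pixels against classes with cosine similarity, in the spirit of the LSeg/DenseCLIP pixel-text matching the abstract builds on. The CNN branch and transformer layers are omitted, and all function names (`bidirectional_fusion`, `score_map`, `segment`) are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, shifted by the row max for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attend(queries: Matrix, context: Matrix) -> Matrix:
    """Single-head scaled dot-product attention; `context` is both keys and values.

    Assumption: no learned query/key/value projections, to keep the sketch small.
    """
    scale = 1.0 / math.sqrt(len(queries[0]))
    scores = [[s * scale for s in row] for row in matmul(queries, transpose(context))]
    return matmul(softmax_rows(scores), context)


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def bidirectional_fusion(visual: Matrix, text: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical single bridge step: each modality attends to the other.

    visual: N pixel features (N x D); text: K class embeddings (K x D).
    Both updates read the unmodified inputs, so the two directions run in parallel.
    """
    visual_out = add(visual, cross_attend(visual, text))  # pixels gather text context
    text_out = add(text, cross_attend(text, visual))  # text gathers visual context
    return visual_out, text_out


def l2_normalize(m: Matrix) -> Matrix:
    out = []
    for row in m:
        norm = math.sqrt(sum(x * x for x in row)) or 1.0  # guard against zero vectors
        out.append([x / norm for x in row])
    return out


def score_map(visual: Matrix, text: Matrix) -> Matrix:
    """Cosine similarity between every pixel and every class embedding (N x K)."""
    return matmul(l2_normalize(visual), transpose(l2_normalize(text)))


def segment(visual: Matrix, text: Matrix) -> List[int]:
    """Label each pixel with the index of its most similar class embedding."""
    return [max(range(len(row)), key=row.__getitem__) for row in score_map(visual, text)]


if __name__ == "__main__":
    pixels = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]  # toy 3-pixel feature map, D = 2
    classes = [[1.0, 0.0], [0.0, 1.0]]  # toy embeddings for two class names
    fused_pixels, fused_classes = bidirectional_fusion(pixels, classes)
    print(segment(fused_pixels, fused_classes))
```

Updating both modalities, rather than only projecting pixels onto fixed text embeddings, is what the abstract means by bidirectional fusion: the text side is also pulled toward the visual features, which is one way weaker lightweight features and the text embeddings could end up closer in the shared space.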
