Jun Huan

MURPHY: Multi-Turn GRPO for Self Correcting Code Generation

Nov 11, 2025

Abstract:Reinforcement Learning with Verifiable Rewards (RLVR) has emerged as a powerful framework for enhancing the reasoning capabilities of large language models (LLMs). However, existing approaches such as Group Relative Policy Optimization (GRPO) and its variants, while effective on reasoning benchmarks, struggle with agentic tasks that require iterative decision-making. We introduce Murphy, a multi-turn reflective optimization framework that extends GRPO by incorporating iterative self-correction during training. By leveraging both quantitative and qualitative execution feedback, Murphy enables models to progressively refine their reasoning across multiple turns. Evaluations on code generation benchmarks with model families such as Qwen and OLMo show that Murphy consistently improves performance, achieving up to an 8% relative gain in pass@1 over GRPO on similar compute budgets.

Inference Optimization of Foundation Models on AI Accelerators

Jul 12, 2024

Abstract:Powerful foundation models, including large language models (LLMs), with Transformer architectures have ushered in a new era of Generative AI across various industries. Industry and the research community have witnessed a large number of new applications based on those foundation models. Such applications include question answering, customer service, image and video generation, and code completion, among others. However, as the number of model parameters reaches hundreds of billions, their deployment incurs prohibitive inference costs and high latency in real-world scenarios. As a result, the demand for cost-effective and fast inference using AI accelerators is higher than ever. To this end, our tutorial offers a comprehensive discussion of complementary inference optimization techniques using AI accelerators. Beginning with an overview of basic Transformer architectures and deep learning system frameworks, we dive deep into system optimization techniques for fast and memory-efficient attention computations and discuss how they can be implemented efficiently on AI accelerators. Next, we describe architectural elements that are key for fast transformer inference. Finally, we examine various model compression and fast decoding strategies in the same context.

HLAT: High-quality Large Language Model Pre-trained on AWS Trainium

Apr 16, 2024

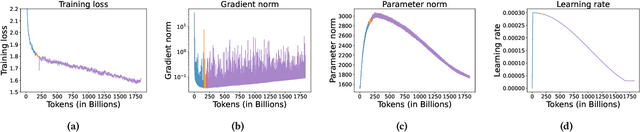

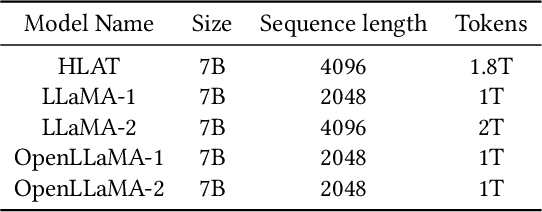

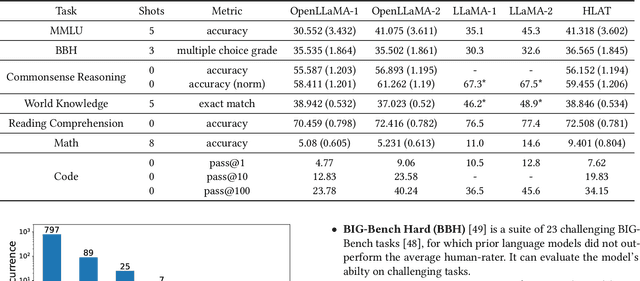

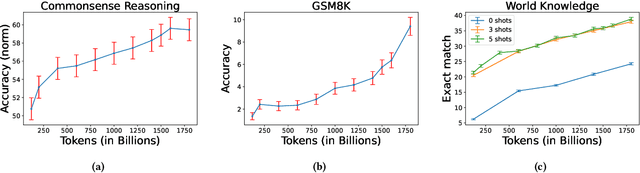

Abstract:Getting large language models (LLMs) to perform well on downstream tasks requires pre-training over trillions of tokens. This typically demands a large number of powerful computational devices in addition to a stable distributed training framework to accelerate the training. The growing number of applications leveraging AI/ML has led to a scarcity of expensive conventional accelerators (such as GPUs), which creates a need for alternative specialized accelerators that are scalable and cost-efficient. AWS Trainium is the second-generation machine learning accelerator that has been purpose-built for training large deep learning models. Its corresponding instance, Amazon EC2 trn1, is an alternative to GPU instances for LLM training. However, training LLMs with billions of parameters on trn1 is challenging due to its relatively nascent software ecosystem. In this paper, we showcase HLAT: a 7 billion parameter decoder-only LLM pre-trained using trn1 instances over 1.8 trillion tokens. The performance of HLAT is benchmarked against popular open source baseline models including LLaMA and OpenLLaMA, which have been trained on NVIDIA GPUs and Google TPUs, respectively. On various evaluation tasks, we show that HLAT achieves model quality on par with the baselines. We also share best practices for using the Neuron Distributed Training Library (NDTL), a customized distributed training library for AWS Trainium, to achieve efficient training. Our work demonstrates that AWS Trainium powered by the NDTL is able to successfully pre-train state-of-the-art LLMs with high performance and cost-effectiveness.

Random Walk on Multiple Networks

Jul 04, 2023

Abstract:Random Walk is a basic algorithm to explore the structure of networks, which can be used in many tasks, such as local community detection and network embedding. Existing random walk methods are based on single networks that contain limited information. In contrast, real data often contain entities of different types and/or from different sources, which are comprehensive and can be better modeled by multiple networks. To take advantage of the rich information in multiple networks and make better inferences on entities, in this study, we propose random walk on multiple networks, RWM. RWM is flexible and supports both multiplex networks and general multiple networks, which may form many-to-many node mappings between networks. RWM sends a random walker on each network to obtain the local proximity (i.e., node visiting probabilities) w.r.t. the starting nodes. Walkers with similar visiting probabilities reinforce each other. We theoretically analyze the convergence properties of RWM. Two approximation methods with theoretical performance guarantees are proposed for efficient computation. We apply RWM in link prediction, network embedding, and local community detection. Comprehensive experiments conducted on both synthetic and real-world datasets demonstrate the effectiveness and efficiency of RWM.

Temporal Output Discrepancy for Loss Estimation-based Active Learning

Dec 20, 2022

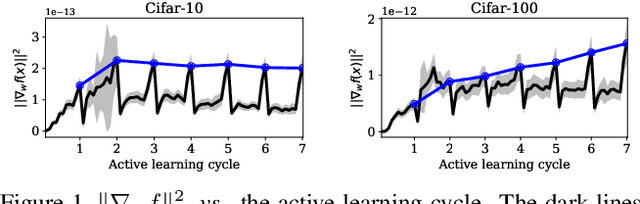

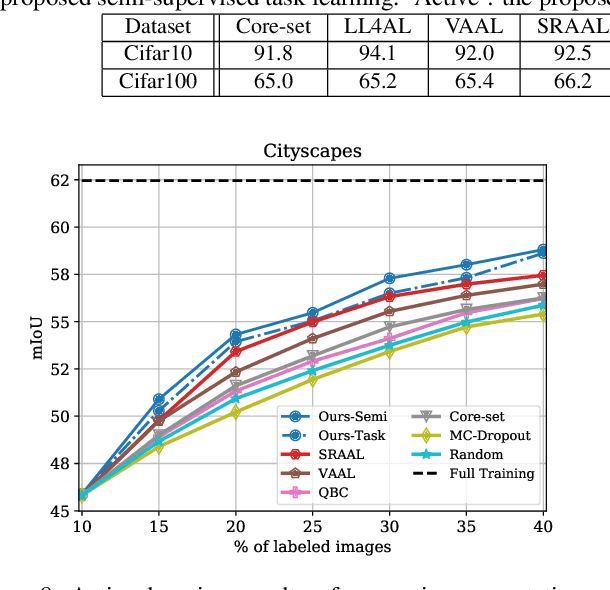

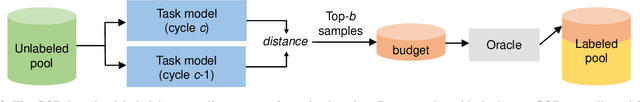

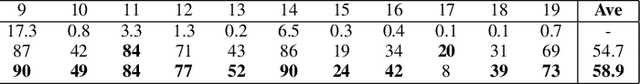

Abstract:While deep learning succeeds in a wide range of tasks, it depends heavily on the massive collection of annotated data, which is expensive and time-consuming. To lower the cost of data annotation, active learning has been proposed to interactively query an oracle to annotate a small proportion of informative samples in an unlabeled dataset. Inspired by the fact that samples with higher loss are usually more informative to the model than samples with lower loss, in this paper we present a novel deep active learning approach that queries the oracle for data annotation when the unlabeled sample is believed to incur high loss. The core of our approach is a measurement, Temporal Output Discrepancy (TOD), that estimates the sample loss by evaluating the discrepancy of outputs given by models at different optimization steps. Our theoretical investigation shows that TOD lower-bounds the accumulated sample loss, so it can be used to select informative unlabeled samples. On the basis of TOD, we further develop an effective unlabeled data sampling strategy as well as an unsupervised learning criterion for active learning. Due to the simplicity of TOD, our methods are efficient, flexible, and task-agnostic. Extensive experimental results demonstrate that our approach outperforms the state-of-the-art active learning methods on image classification and semantic segmentation tasks. In addition, we show that TOD can be utilized to select, from a pool of candidate models, the model with potentially the highest testing accuracy.

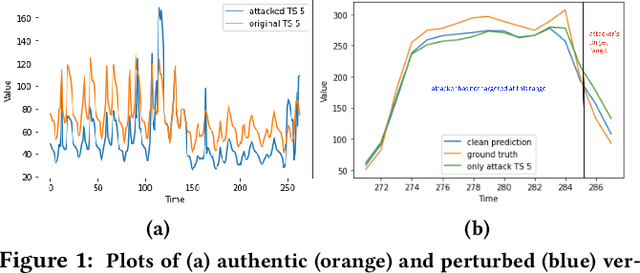

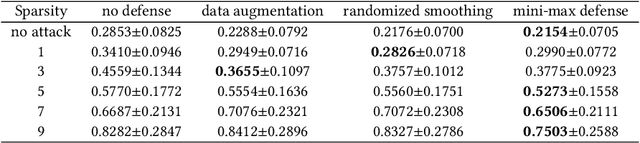

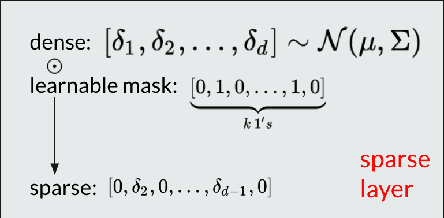

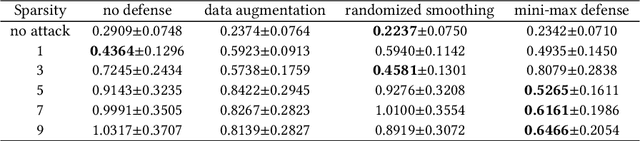

Towards Robust Multivariate Time-Series Forecasting: Adversarial Attacks and Defense Mechanisms

Jul 19, 2022

Abstract:As deep learning models have gradually become the main workhorse of time series forecasting, the potential vulnerability of forecasting and decision systems to adversarial attacks has emerged as a major issue in recent years. Although such attacks and defense mechanisms have begun to be investigated for univariate time series forecasting, there are still few studies on multivariate forecasting, which is often preferred due to its capacity to encode correlations between different time series. In this work, we study and design adversarial attacks on multivariate probabilistic forecasting models, taking into consideration attack budget constraints and the correlation architecture between multiple time series. Specifically, we investigate a sparse indirect attack that hurts the prediction of an item (time series) by attacking only the history of a small number of other items to save attacking cost. To combat these attacks, we also develop two defense strategies. First, we adapt randomized smoothing to the multivariate time series scenario and verify its effectiveness via empirical experiments. Second, we leverage a sparse attacker to enable end-to-end adversarial training that delivers robust probabilistic forecasters. Extensive experiments on real datasets confirm that our attack schemes are powerful and that our defense algorithms are more effective than other baseline defense mechanisms.

Semi-Supervised Active Learning with Temporal Output Discrepancy

Jul 29, 2021

Abstract:While deep learning succeeds in a wide range of tasks, it depends heavily on the massive collection of annotated data, which is expensive and time-consuming. To lower the cost of data annotation, active learning has been proposed to interactively query an oracle to annotate a small proportion of informative samples in an unlabeled dataset. Inspired by the fact that samples with higher loss are usually more informative to the model than samples with lower loss, in this paper we present a novel deep active learning approach that queries the oracle for data annotation when the unlabeled sample is believed to incur high loss. The core of our approach is a measurement, Temporal Output Discrepancy (TOD), that estimates the sample loss by evaluating the discrepancy of outputs given by models at different optimization steps. Our theoretical investigation shows that TOD lower-bounds the accumulated sample loss, so it can be used to select informative unlabeled samples. On the basis of TOD, we further develop an effective unlabeled data sampling strategy as well as an unsupervised learning criterion that enhances model performance by incorporating the unlabeled data. Due to the simplicity of TOD, our active learning approach is efficient, flexible, and task-agnostic. Extensive experimental results demonstrate that our approach outperforms the state-of-the-art active learning methods on image classification and semantic segmentation tasks.

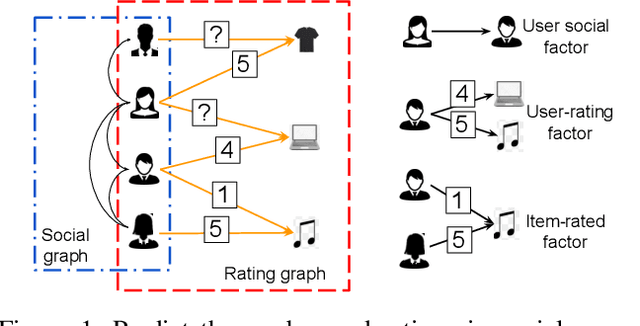

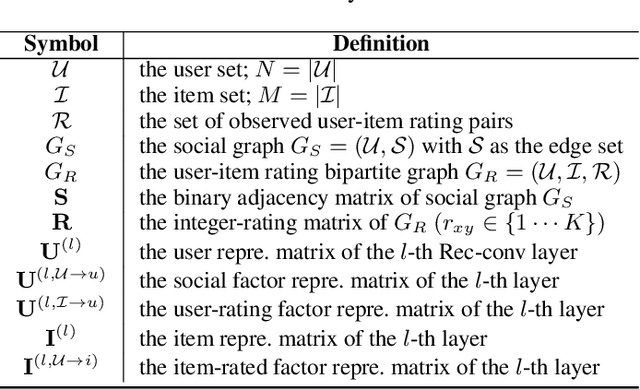

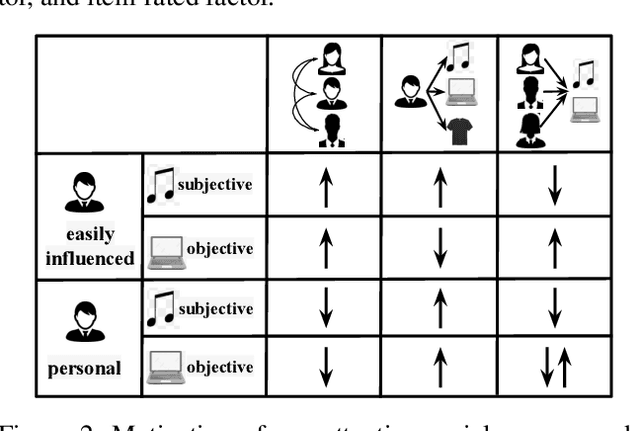

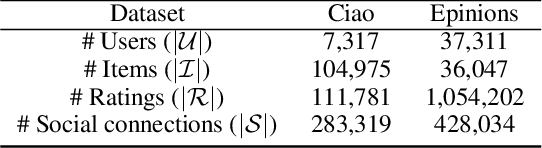

Attentive Social Recommendation: Towards User And Item Diversities

Nov 15, 2020

Abstract:Social recommendation systems predict unobserved user-item rating values by taking advantage of user-user social relations and user-item ratings. However, user/item diversities in social recommendations are not well utilized in the literature. In particular, inter-factor (social and rating factors) relations and distinct rating values deserve more consideration. In this paper, we propose an attentive social recommendation system (ASR) to address this issue from two aspects. First, in ASR, Rec-conv graph network layers are proposed to extract the social factor, user-rating and item-rated factors and then automatically assign contribution weights to aggregate these factors into the user/item embedding vectors. Second, a disentangling strategy is applied for diverse rating values. Extensive experiments on benchmarks demonstrate the effectiveness and advantages of our ASR.

Generating Person Images with Appearance-aware Pose Stylizer

Jul 17, 2020

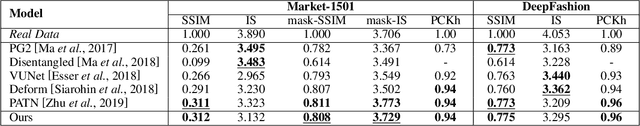

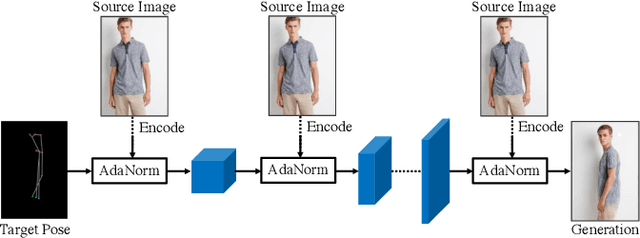

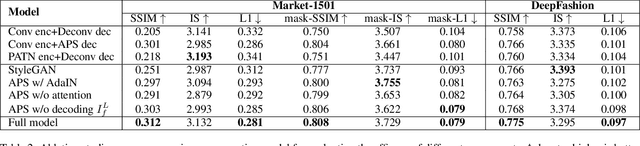

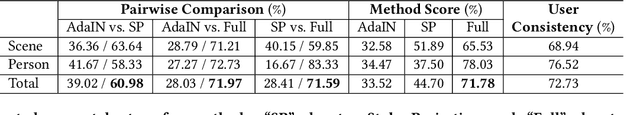

Abstract:Generation of high-quality person images is challenging, due to the sophisticated entanglements among image factors, e.g., appearance, pose, foreground, background, local details, and global structures. In this paper, we present a novel end-to-end framework to generate realistic person images based on given person poses and appearances. The core of our framework is a novel generator called Appearance-aware Pose Stylizer (APS), which generates human images by progressively coupling the target pose with the conditioned person appearance. The framework is highly flexible and controllable by effectively decoupling various complex person image factors in the encoding phase, followed by re-coupling them in the decoding phase. In addition, we present a new normalization method named adaptive patch normalization, which enables region-specific normalization and performs well when adopted in person image generation models. Experiments on two benchmark datasets show that our method is capable of generating visually appealing and realistic-looking results using arbitrary image and pose inputs.

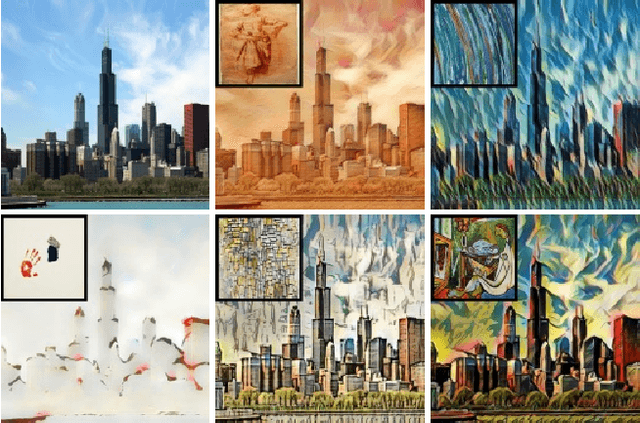

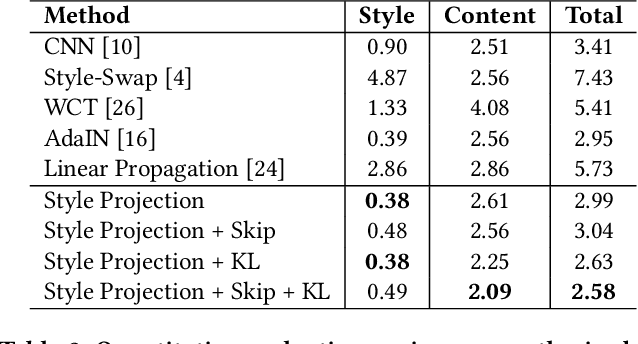

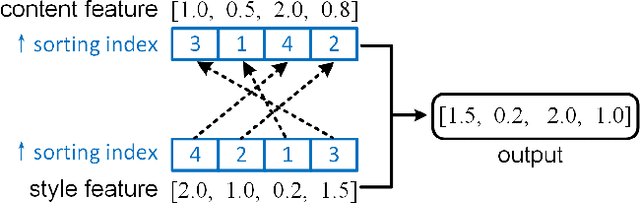

Parameter-Free Style Projection for Arbitrary Style Transfer

Mar 17, 2020

Abstract:Arbitrary image style transfer is a challenging task which aims to stylize a content image conditioned on an arbitrary style image. In this task the content-style feature transformation is a critical component for a proper fusion of features. Existing feature transformation algorithms often suffer from unstable learning, loss of content and style details, and non-natural stroke patterns. To mitigate these issues, this paper proposes a parameter-free algorithm, Style Projection, for fast yet effective content-style transformation. To leverage the proposed Style Projection component, this paper further presents a real-time feed-forward model for arbitrary style transfer, including a regularization for matching the content semantics between inputs and outputs. Extensive experiments have demonstrated the effectiveness and efficiency of the proposed method in terms of qualitative analysis, quantitative evaluation, and user study.
