Juan Zhang

Differentiable Adaptive Kalman Filtering via Optimal Transport

Aug 09, 2025

Abstract:Learning-based filtering has demonstrated strong performance in non-linear dynamical systems, particularly when the statistics of noise are unknown. However, in real-world deployments, environmental factors, such as changing wind conditions or electromagnetic interference, can induce unobserved noise-statistics drift, leading to substantial degradation of learning-based methods. To address this challenge, we propose OTAKNet, the first online solution to noise-statistics drift within learning-based adaptive Kalman filtering. Unlike existing learning-based methods that perform offline fine-tuning using batch pointwise matching over entire trajectories, OTAKNet establishes a connection between the state estimate and the drift via one-step predictive measurement likelihood, and addresses it using optimal transport (OT). This leverages OT's geometry-aware cost and stable gradients to enable fully online adaptation without ground truth labels or retraining. We compare OTAKNet against classical model-based adaptive Kalman filtering and offline learning-based filtering. The performance is demonstrated on both synthetic and real-world NCLT datasets, particularly under limited training data.
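As a rough illustration of what a geometry-aware cost means, consider the one-dimensional case: for two equal-sized samples, the Wasserstein-1 distance reduces to the mean absolute difference between the sorted values, so it grows smoothly with how far one distribution has drifted from the other. The sketch below is a toy example only and is not OTAKNet's loss; the function name `wasserstein_1d` and the sample values are hypothetical.

```python
def wasserstein_1d(xs, ys):
    """Wasserstein-1 distance between two equal-sized 1-D empirical samples.

    In one dimension the optimal transport plan matches sorted values,
    so the distance is the mean absolute difference after sorting.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("samples must be non-empty and equal in size")
    pairs = zip(sorted(xs), sorted(ys))
    return sum(abs(a - b) for a, b in pairs) / len(xs)


# Hypothetical predicted measurements and two drifted observation sets.
predicted = [0.0, 1.0, 2.0]
observed_small_shift = [0.5, 1.5, 2.5]
observed_large_shift = [3.0, 4.0, 5.0]

small = wasserstein_1d(predicted, observed_small_shift)
large = wasserstein_1d(predicted, observed_large_shift)
```

Here the distance scales with the size of the shift, which is the property that makes such a cost informative even when the two samples do not overlap.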

Squeeze10-LLM: Squeezing LLMs' Weights by 10 Times via a Staged Mixed-Precision Quantization Method

Jul 24, 2025

Abstract:Deploying large language models (LLMs) is challenging due to their massive parameters and high computational costs. Ultra low-bit quantization can significantly reduce storage and accelerate inference, but extreme compression (i.e., mean bit-width <= 2) often leads to severe performance degradation. To address this, we propose Squeeze10-LLM, effectively "squeezing" 16-bit LLMs' weights by 10 times. Specifically, Squeeze10-LLM is a staged mixed-precision post-training quantization (PTQ) framework and achieves an average of 1.6 bits per weight by quantizing 80% of the weights to 1 bit and 20% to 4 bits. We introduce Squeeze10-LLM with two key innovations: Post-Binarization Activation Robustness (PBAR) and Full Information Activation Supervision (FIAS). PBAR is a refined weight significance metric that accounts for the impact of quantization on activations, improving accuracy in low-bit settings. FIAS is a strategy that preserves full activation information during quantization to mitigate cumulative error propagation across layers. Experiments on LLaMA and LLaMA2 show that Squeeze10-LLM achieves state-of-the-art performance for sub-2-bit weight-only quantization, improving average accuracy from 43% to 56% on six zero-shot classification tasks, a significant boost over existing PTQ methods. Our code will be released upon publication.
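The 1.6-bit average and the 10x figure follow directly from the stated split. A minimal arithmetic check, with a hypothetical helper name `mean_bit_width` and ignoring any storage overhead (scales, masks, indices) that the abstract does not describe:

```python
def mean_bit_width(split):
    """Weighted average bits per weight for (fraction, bits) pairs."""
    total_fraction = sum(fraction for fraction, _ in split)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return sum(fraction * bits for fraction, bits in split)


# Split stated in the abstract: 80% of weights at 1 bit, 20% at 4 bits.
avg_bits = mean_bit_width([(0.8, 1), (0.2, 4)])

# Compression relative to 16-bit weights.
compression_ratio = 16 / avg_bits
```

The weighted average is 0.8 x 1 + 0.2 x 4 = 1.6 bits, and 16 / 1.6 gives the 10x reduction in the title.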

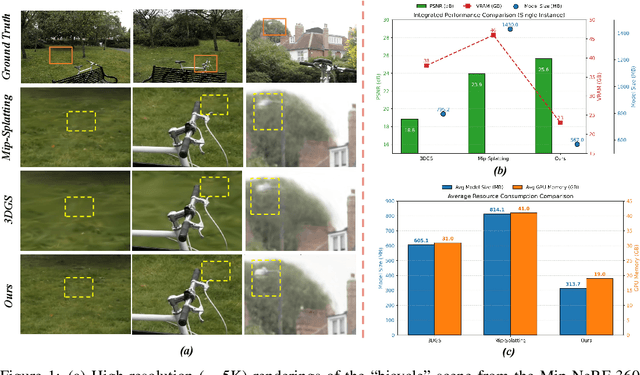

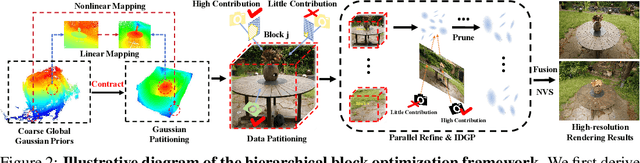

HRGS: Hierarchical Gaussian Splatting for Memory-Efficient High-Resolution 3D Reconstruction

Jun 17, 2025

Abstract:3D Gaussian Splatting (3DGS) has made significant strides in real-time 3D scene reconstruction, but faces memory scalability issues in high-resolution scenarios. To address this, we propose Hierarchical Gaussian Splatting (HRGS), a memory-efficient framework with hierarchical block-level optimization. First, we generate a global, coarse Gaussian representation from low-resolution data. Then, we partition the scene into multiple blocks, refining each block with high-resolution data. The partitioning involves two steps: Gaussian partitioning, where irregular scenes are normalized into a bounded cubic space with a uniform grid for task distribution, and training data partitioning, where only relevant observations are retained for each block. By guiding block refinement with the coarse Gaussian prior, we ensure seamless Gaussian fusion across adjacent blocks. To reduce computational demands, we introduce Importance-Driven Gaussian Pruning (IDGP), which computes importance scores for each Gaussian and removes those with minimal contribution, speeding up convergence and reducing memory usage. Additionally, we incorporate normal priors from a pretrained model to enhance surface reconstruction quality. Our method enables high-quality, high-resolution 3D scene reconstruction even under memory constraints. Extensive experiments on three benchmarks show that HRGS achieves state-of-the-art performance in high-resolution novel view synthesis (NVS) and surface reconstruction tasks.

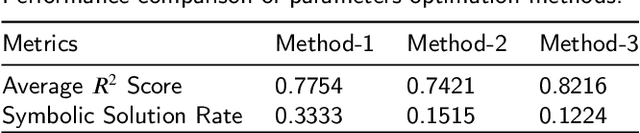

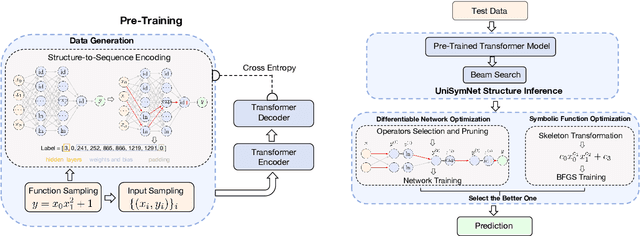

UniSymNet: A Unified Symbolic Network Guided by Transformer

May 09, 2025

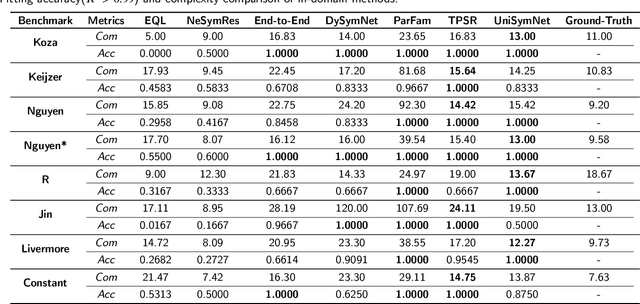

Abstract:Symbolic Regression (SR) is a powerful technique for automatically discovering mathematical expressions from input data. Mainstream SR algorithms search for the optimal symbolic tree in a vast function space, but the increasing complexity of the tree structure limits their performance. Inspired by neural networks, symbolic networks have emerged as a promising new paradigm. However, most existing symbolic networks still face certain challenges: binary nonlinear operators $\{\times, \div\}$ cannot be naturally extended to multivariate operators, and training with a fixed architecture often leads to higher complexity and overfitting. In this work, we propose a Unified Symbolic Network (UniSymNet) that unifies nonlinear binary operators into nested unary operators, and we define the conditions under which UniSymNet can reduce complexity. Moreover, we pre-train a Transformer model with a novel label encoding method to guide structural selection, and adopt objective-specific optimization strategies to learn the parameters of the symbolic network. UniSymNet shows high fitting accuracy, excellent symbolic solution rate, and relatively low expression complexity, achieving competitive performance on low-dimensional Standard Benchmarks and high-dimensional SRBench.
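One well-known way to express multiplication and division through unary operators is the identity x * y = exp(ln x + ln y), which extends to any number of factors. The abstract does not state which unary operators UniSymNet uses, so the sketch below is only an illustration of the general idea, restricted to positive inputs; the function names are hypothetical.

```python
import math


def product_via_unary(*xs):
    """Multiply positive numbers using only ln, addition, and exp."""
    if any(x <= 0 for x in xs):
        raise ValueError("this sketch assumes strictly positive inputs")
    return math.exp(sum(math.log(x) for x in xs))


def quotient_via_unary(x, y):
    """Divide positive numbers using only ln, subtraction, and exp."""
    if x <= 0 or y <= 0:
        raise ValueError("this sketch assumes strictly positive inputs")
    return math.exp(math.log(x) - math.log(y))


# A three-factor product needs no extra binary node, only one more summand.
p = product_via_unary(2.0, 3.0, 4.0)
q = quotient_via_unary(6.0, 3.0)
```

Because the binary operator becomes a sum inside a unary function, adding more variables only adds terms to the sum rather than more operator nodes.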

Multimodal-Aware Fusion Network for Referring Remote Sensing Image Segmentation

Mar 14, 2025

Abstract:Referring remote sensing image segmentation (RRSIS) is a novel visual task in remote sensing image segmentation that aims to segment objects based on a given text description and has great significance in practical applications. Previous studies fuse the visual and linguistic modalities by explicit feature interaction, which fails to effectively extract useful multimodal information from the dual-branch encoder. In this letter, we design a multimodal-aware fusion network (MAFN) to achieve fine-grained alignment and fusion between the two modalities. We propose a correlation fusion module (CFM) that enhances multi-scale visual features by adaptively introducing noise in the transformer and integrates cross-modal aware features. In addition, MAFN employs multi-scale refinement convolution (MSRC) to adapt to the various orientations of objects at different scales, boosting their representation ability and enhancing segmentation accuracy. Extensive experiments show that MAFN is significantly more effective than the state of the art on the RRSIS-D dataset. The source code is available at https://github.com/Roaxy/MAFN.

TrackDiffuser: Nearly Model-Free Bayesian Filtering with Diffusion Model

Feb 08, 2025

Abstract:State estimation remains a fundamental challenge across numerous domains, from autonomous driving and aircraft tracking to quantum system control. Although Bayesian filtering has been the cornerstone solution, its classical model-based paradigm faces two major limitations: it struggles with an inaccurate state-space model (SSM) and requires extensive prior knowledge of noise characteristics. We present TrackDiffuser, a generative framework addressing both challenges by reformulating Bayesian filtering as a conditional diffusion model. Our approach implicitly learns system dynamics from data to mitigate the effects of an inaccurate SSM, while simultaneously circumventing the need for explicit measurement models and noise priors by establishing a direct relationship between measurements and states. Through an implicit predict-and-update mechanism, TrackDiffuser preserves the interpretability advantage of traditional model-based filtering methods. Extensive experiments demonstrate that our framework substantially outperforms both classical and contemporary hybrid methods, especially in challenging non-linear scenarios involving non-Gaussian noise. Notably, TrackDiffuser exhibits remarkable robustness to SSM inaccuracies, offering a practical solution for real-world state estimation problems where perfect models and prior knowledge are unavailable.

ViSymRe: Vision-guided Multimodal Symbolic Regression

Dec 15, 2024

Abstract:Symbolic regression automatically searches for mathematical equations to reveal underlying mechanisms within datasets, offering enhanced interpretability compared to black-box models. Traditionally, symbolic regression has been considered to be purely numeric-driven, with insufficient attention given to the potential contributions of visual information in augmenting this process. When dealing with high-dimensional and complex datasets, existing symbolic regression models are often inefficient and tend to generate overly complex equations, making subsequent mechanism analysis complicated. In this paper, we propose the vision-guided multimodal symbolic regression model, called ViSymRe, that systematically explores how visual information can improve various metrics of symbolic regression. Compared to traditional models, our proposed model has the following innovations: (1) It integrates three modalities: vision, symbol and numeric to enhance symbolic regression, enabling the model to benefit from the strengths of each modality; (2) It establishes a meta-learning framework that can learn from historical experiences to efficiently solve new symbolic regression problems; (3) It emphasizes the simplicity and structural rationality of the equations rather than merely numerical fitting. Extensive experiments show that our proposed model exhibits strong generalization capability and noise resistance. The equations it generates outperform state-of-the-art numeric-only baselines in terms of fitting effect, simplicity and structural accuracy, thus being able to facilitate accurate mechanism analysis and the development of theoretical models.

Bridge to Real Environment with Hardware-in-the-loop for Wireless Artificial Intelligence Paradigms

Sep 25, 2024

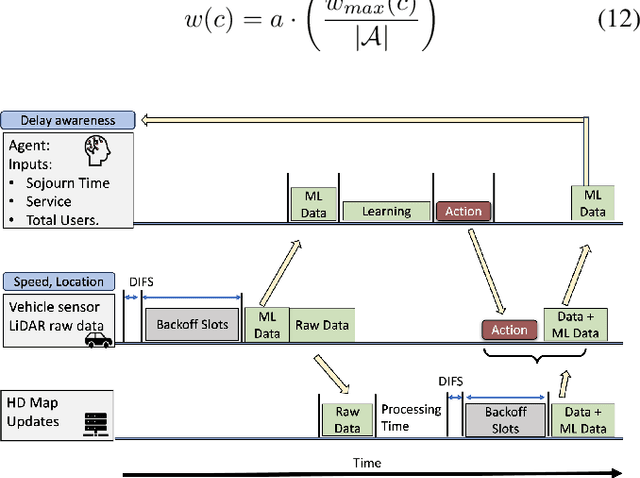

Abstract:Nowadays, many machine learning (ML) solutions for improving the IEEE 802.11p wireless standard for Vehicular Ad Hoc Networks (VANETs) are commonly evaluated in simulation. Although this approach can be cost-effective compared to real-world testing, given the high cost of vehicles, there is a risk of unexpected outcomes when these solutions are implemented in the real world, potentially leading to wasted resources. To mitigate this risk, hardware-in-the-loop testing is the way forward, as it makes it possible to test in the real and simulated worlds together. Therefore, we have developed what we believe is the pioneering hardware-in-the-loop setup for testing artificial intelligence, multiple services, and HD map data (LiDAR) in both simulated and real-world settings.

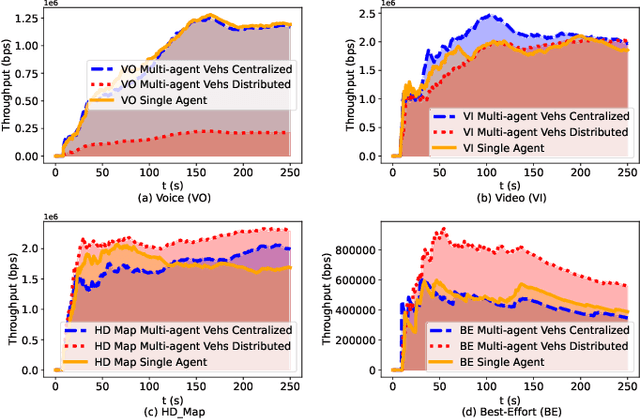

Multi-agent Assessment with QoS Enhancement for HD Map Updates in a Vehicular Network

Jul 31, 2024

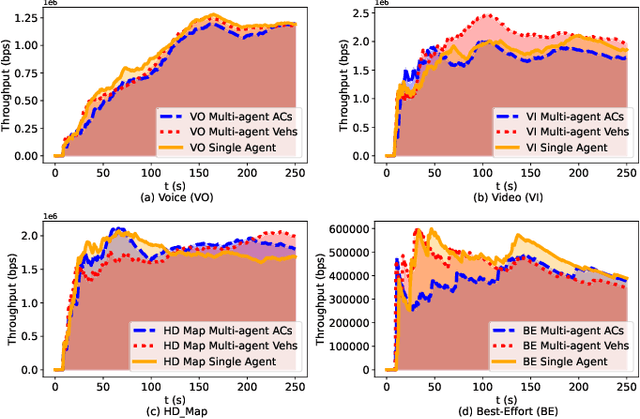

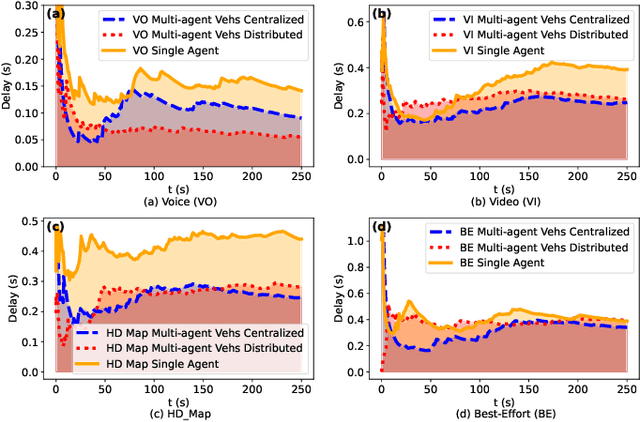

Abstract:Reinforcement Learning (RL) algorithms have been used to address the challenging problems in the offloading process of vehicular ad hoc networks (VANETs). More recently, they have been utilized to improve the dissemination of high-definition (HD) Maps. Nevertheless, implementing solutions such as deep Q-learning (DQN) and actor-critic on the autonomous vehicle (AV) may increase the computational load, placing a heavy burden on the computing devices and raising costs. Moreover, their implementation might raise compatibility issues between technologies due to the required modifications to the standards. Therefore, in this paper, we assess the scalability of an application utilizing a Q-learning single-agent solution in a distributed multi-agent environment. This application improves network performance by taking advantage of a smaller state and action space while using a multi-agent approach. The proposed solution is extensively evaluated with different test cases involving reward functions that consider individual or overall network performance, the number of agents, and a comparison of centralized and distributed learning. The experimental results demonstrate that our proposed solution improves latency in the voice, video, HD Map, and best-effort cases by 40.4%, 36%, 43%, and 12%, respectively, compared to the single-agent approach.

Coverage-aware and Reinforcement Learning Using Multi-agent Approach for HD Map QoS in a Realistic Environment

Jul 19, 2024

Abstract:One effective way to optimize the offloading process is by minimizing the transmission time. This is particularly true in a Vehicular Ad Hoc Network (VANET), where vehicles frequently download and upload high-definition (HD) map data that requires constant updates. This implies that latency and throughput requirements must be guaranteed by the wireless system. To achieve this, adjustable contention window (CW) allocation strategies in the IEEE 802.11p standard have been explored by numerous researchers. Nevertheless, their implementation demands alterations to the existing standard, which is not always desirable. To address this issue, we propose a Q-learning algorithm that operates at the application layer. Moreover, it can be deployed in any wireless network, thereby mitigating the compatibility issues. The solution demonstrates better network performance with relatively fewer optimization requirements than the Deep Q Network (DQN) and Actor-Critic algorithms. The same is observed when evaluating the model in a multi-agent setup, which shows higher performance than the single-agent setup.
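For readers unfamiliar with the technique, a generic tabular Q-learning agent can be sketched as follows. This is the textbook update rule with epsilon-greedy action selection, not the paper's implementation: the abstract does not describe the state, action, or reward design, so the state names, action names, and hyperparameters below are all hypothetical.

```python
import random
from collections import defaultdict


class QLearningAgent:
    """Textbook tabular Q-learning with epsilon-greedy exploration."""

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def choose(self, state):
        """Explore with probability epsilon, otherwise pick the best action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """Move Q(s, a) toward reward + gamma * max_a' Q(s', a')."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


# Hypothetical example: two sending rates and two channel conditions.
agent = QLearningAgent(actions=["low_rate", "high_rate"], alpha=0.5)
agent.update("congested", "low_rate", reward=1.0, next_state="clear")
```

A table of this kind stays small when the state and action spaces are small, which is consistent with the abstract's point about lower optimization requirements than DQN or actor-critic methods.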
