Jiyao Liu

Liver Fibrosis Quantification and Analysis: The LiQA Dataset and Baseline Method

Dec 22, 2025

Abstract:Liver fibrosis represents a significant global health burden, necessitating accurate staging for effective clinical management. This report introduces the LiQA (Liver Fibrosis Quantification and Analysis) dataset, established as part of the CARE 2024 challenge. Comprising 440 patients with multi-phase, multi-center MRI scans, the dataset is curated to benchmark algorithms for Liver Segmentation (LiSeg) and Liver Fibrosis Staging (LiFS) under complex real-world conditions, including domain shifts, missing modalities, and spatial misalignment. We further describe the challenge's top-performing methodology, which integrates a semi-supervised learning framework with external data for robust segmentation, and utilizes a multi-view consensus approach with Class Activation Map (CAM)-based regularization for staging. Evaluation of this baseline demonstrates that leveraging multi-source data and anatomical constraints significantly enhances model robustness in clinical settings.

Probing Scientific General Intelligence of LLMs with Scientist-Aligned Workflows

Dec 18, 2025

Abstract:Despite advances in scientific AI, a coherent framework for Scientific General Intelligence (SGI) -- the ability to autonomously conceive, investigate, and reason across scientific domains -- remains lacking. We present an operational SGI definition grounded in the Practical Inquiry Model (PIM: Deliberation, Conception, Action, Perception) and operationalize it via four scientist-aligned tasks: deep research, idea generation, dry/wet experiments, and experimental reasoning. SGI-Bench comprises over 1,000 expert-curated, cross-disciplinary samples inspired by Science's 125 Big Questions, enabling systematic evaluation of state-of-the-art LLMs. Results reveal gaps: low exact match (10-20%) in deep research despite step-level alignment; ideas lacking feasibility and detail; high code executability but low execution result accuracy in dry experiments; low sequence fidelity in wet protocols; and persistent multimodal comparative-reasoning challenges. We further introduce Test-Time Reinforcement Learning (TTRL), which optimizes retrieval-augmented novelty rewards at inference, enhancing hypothesis novelty without reference answers. Together, our PIM-grounded definition, workflow-centric benchmark, and empirical insights establish a foundation for AI systems that genuinely participate in scientific discovery.

SafeRBench: A Comprehensive Benchmark for Safety Assessment in Large Reasoning Models

Nov 19, 2025

Abstract:Large Reasoning Models (LRMs) improve answer quality through explicit chain-of-thought, yet this very capability introduces new safety risks: harmful content can be subtly injected, surface gradually, or be justified by misleading rationales within the reasoning trace. Existing safety evaluations, however, primarily focus on output-level judgments and rarely capture these dynamic risks along the reasoning process. In this paper, we present SafeRBench, the first benchmark that assesses LRM safety end-to-end -- from inputs and intermediate reasoning to final outputs. (1) Input Characterization: We pioneer the incorporation of risk categories and levels into input design, explicitly accounting for affected groups and severity, and thereby establish a balanced prompt suite reflecting diverse harm gradients. (2) Fine-Grained Output Analysis: We introduce a micro-thought chunking mechanism to segment long reasoning traces into semantically coherent units, enabling fine-grained evaluation across ten safety dimensions. (3) Human Safety Alignment: We validate LLM-based evaluations against human annotations specifically designed to capture safety judgments. Evaluations on 19 LRMs demonstrate that SafeRBench enables detailed, multidimensional safety assessment, offering insights into risks and protective mechanisms from multiple perspectives.

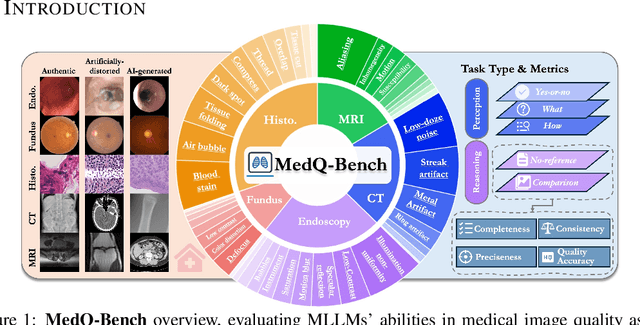

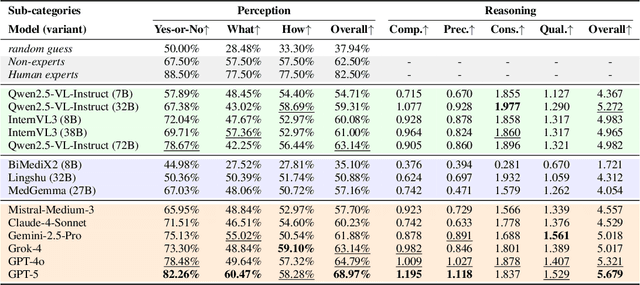

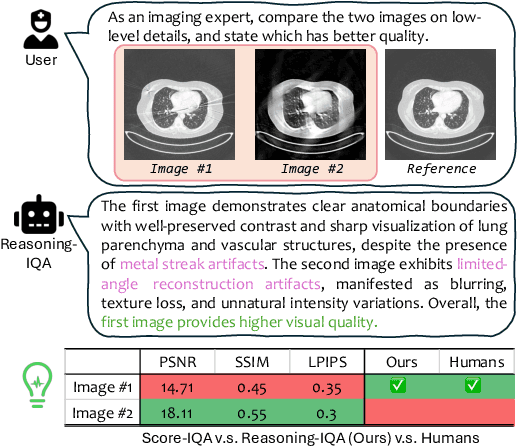

MedQ-Bench: Evaluating and Exploring Medical Image Quality Assessment Abilities in MLLMs

Oct 02, 2025

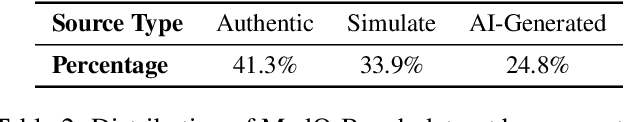

Abstract:Medical Image Quality Assessment (IQA) serves as the first-mile safety gate for clinical AI, yet existing approaches remain constrained by scalar, score-based metrics and fail to reflect the descriptive, human-like reasoning process central to expert evaluation. To address this gap, we introduce MedQ-Bench, a comprehensive benchmark that establishes a perception-reasoning paradigm for language-based evaluation of medical image quality with Multi-modal Large Language Models (MLLMs). MedQ-Bench defines two complementary tasks: (1) MedQ-Perception, which probes low-level perceptual capability via human-curated questions on fundamental visual attributes; and (2) MedQ-Reasoning, encompassing both no-reference and comparison reasoning tasks, aligning model evaluation with human-like reasoning on image quality. The benchmark spans five imaging modalities and over forty quality attributes, totaling 2,600 perceptual queries and 708 reasoning assessments, covering diverse image sources including authentic clinical acquisitions, images with simulated degradations via physics-based reconstructions, and AI-generated images. To evaluate reasoning ability, we propose a multi-dimensional judging protocol that assesses model outputs along four complementary axes. We further conduct rigorous human-AI alignment validation by comparing LLM-based judgement with radiologists. Our evaluation of 14 state-of-the-art MLLMs demonstrates that models exhibit preliminary but unstable perceptual and reasoning skills, with insufficient accuracy for reliable clinical use. These findings highlight the need for targeted optimization of MLLMs in medical IQA. We hope that MedQ-Bench will catalyze further exploration and unlock the untapped potential of MLLMs for medical image quality evaluation.

A Survey of Scientific Large Language Models: From Data Foundations to Agent Frontiers

Aug 28, 2025

Abstract:Scientific Large Language Models (Sci-LLMs) are transforming how knowledge is represented, integrated, and applied in scientific research, yet their progress is shaped by the complex nature of scientific data. This survey presents a comprehensive, data-centric synthesis that reframes the development of Sci-LLMs as a co-evolution between models and their underlying data substrate. We formulate a unified taxonomy of scientific data and a hierarchical model of scientific knowledge, emphasizing the multimodal, cross-scale, and domain-specific challenges that differentiate scientific corpora from general natural language processing datasets. We systematically review recent Sci-LLMs, from general-purpose foundations to specialized models across diverse scientific disciplines, alongside an extensive analysis of over 270 pre-/post-training datasets, showing why Sci-LLMs pose distinct demands -- heterogeneous, multi-scale, uncertainty-laden corpora that require representations preserving domain invariance and enabling cross-modal reasoning. On evaluation, we examine over 190 benchmark datasets and trace a shift from static exams toward process- and discovery-oriented assessments with advanced evaluation protocols. These data-centric analyses highlight persistent issues in scientific data development and discuss emerging solutions involving semi-automated annotation pipelines and expert validation. Finally, we outline a paradigm shift toward closed-loop systems where autonomous agents based on Sci-LLMs actively experiment, validate, and contribute to a living, evolving knowledge base. Collectively, this work provides a roadmap for building trustworthy, continually evolving artificial intelligence (AI) systems that function as a true partner in accelerating scientific discovery.

RetinaLogos: Fine-Grained Synthesis of High-Resolution Retinal Images Through Captions

May 19, 2025

Abstract:The scarcity of high-quality, labelled retinal imaging data presents a significant challenge in the development of machine learning models for ophthalmology and hinders progress in the field. Existing methods for synthesising Colour Fundus Photographs (CFPs) rely primarily on predefined disease labels and therefore fail to generate images for broader categories with diverse and fine-grained anatomical structures. To overcome these challenges, we first introduce an innovative pipeline that creates a large-scale, synthetic Caption-CFP dataset comprising 1.4 million entries, called RetinaLogos-1400k. Specifically, RetinaLogos-1400k uses large language models (LLMs) to describe retinal conditions and key structures, such as optic disc configuration, vascular distribution, nerve fibre layers, and pathological features. Furthermore, based on this dataset, we employ a novel three-step training framework, called RetinaLogos, which enables fine-grained semantic control over retinal images and accurately captures different stages of disease progression, subtle anatomical variations, and specific lesion types. Extensive experiments demonstrate state-of-the-art performance across multiple datasets, with 62.07% of text-driven synthetic images indistinguishable from real ones by ophthalmologists. Moreover, the synthetic data improves accuracy by 10%-25% in diabetic retinopathy grading and glaucoma detection, thereby providing a scalable solution to augment ophthalmic datasets.

GMAI-VL-R1: Harnessing Reinforcement Learning for Multimodal Medical Reasoning

Apr 02, 2025

Abstract:Recent advances in general medical AI have made significant strides, but existing models often lack the reasoning capabilities needed for complex medical decision-making. This paper presents GMAI-VL-R1, a multimodal medical reasoning model enhanced by reinforcement learning (RL) to improve its reasoning abilities. Through iterative training, GMAI-VL-R1 optimizes decision-making, significantly boosting diagnostic accuracy and clinical support. We also develop a reasoning data synthesis method, generating step-by-step reasoning data via rejection sampling, which further enhances the model's generalization. Experimental results show that after RL training, GMAI-VL-R1 excels in tasks such as medical image diagnosis and visual question answering. While the model demonstrates basic memorization with supervised fine-tuning, RL is crucial for true generalization. Our work establishes new evaluation benchmarks and paves the way for future advancements in medical reasoning models. Code, data, and model will be released at https://github.com/uni-medical/GMAI-VL-R1.
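The rejection-sampling idea mentioned in the abstract can be illustrated with a minimal sketch. It assumes the simplest acceptance rule (keep a sampled reasoning trace only if its final answer matches a known reference answer); the abstract does not state the actual criterion, and `rejection_sample` and `make_toy_generator` are hypothetical names standing in for a real model call.

```python
import itertools
from typing import Callable, List, Tuple

# A candidate is (reasoning_trace, final_answer).
Candidate = Tuple[str, str]


def rejection_sample(
    question: str,
    reference_answer: str,
    generate: Callable[[str], Candidate],
    num_candidates: int = 8,
) -> List[Candidate]:
    """Sample several candidates and keep those whose answer matches the reference.

    Assumption: acceptance is a case-insensitive, whitespace-trimmed string match.
    """
    target = reference_answer.strip().lower()
    kept: List[Candidate] = []
    for _ in range(num_candidates):
        reasoning, answer = generate(question)
        if answer.strip().lower() == target:
            kept.append((reasoning, answer))
    return kept


def make_toy_generator() -> Callable[[str], Candidate]:
    """Hypothetical stand-in for a model: cycles through fixed answers."""
    answers = itertools.cycle(["pneumonia", "normal", "Pneumonia "])

    def generate(question: str) -> Candidate:
        answer = next(answers)
        return (f"Reasoning for '{question}' ending in {answer.strip()}", answer)

    return generate


kept = rejection_sample(
    "What does the chest X-ray show?",
    "pneumonia",
    make_toy_generator(),
    num_candidates=6,
)
print(len(kept))
```

The accepted traces would then serve as step-by-step training data; a real pipeline would likely need a more tolerant answer check than exact string matching.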

Adaptive Data Augmentation with NaturalSpeech3 for Far-field Speaker Verification

Jan 15, 2025

Abstract:The scarcity of speaker-annotated far-field speech presents a significant challenge in developing high-performance far-field speaker verification (SV) systems. While data augmentation using large-scale near-field speech has been a common strategy to address this limitation, the mismatch in acoustic environments between near-field and far-field speech significantly hinders the improvement of far-field SV effectiveness. In this paper, we propose an adaptive speech augmentation approach leveraging NaturalSpeech3, a pre-trained foundation text-to-speech (TTS) model, to convert near-field speech into far-field speech by incorporating far-field acoustic ambient noise for data augmentation. Specifically, we utilize FACodec from NaturalSpeech3 to decompose the speech waveform into distinct embedding subspaces -- content, prosody, speaker, and residual (acoustic details) embeddings -- and reconstruct the speech waveform from these disentangled representations. In our method, the prosody, content, and residual embeddings of far-field speech are combined with speaker embeddings from near-field speech to generate augmented pseudo far-field speech that maintains the speaker identity from the out-domain near-field speech while preserving the acoustic environment of the in-domain far-field speech. This approach not only serves as an effective strategy for augmenting training data for far-field speaker verification but also extends to cross-data augmentation for enrollment and test speech in evaluation trials. Experimental results on FFSVC demonstrate that the adaptive data augmentation method significantly outperforms traditional approaches, such as random noise addition and reverberation, as well as other competitive data augmentation strategies.
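The recombination step described in the abstract can be sketched as a simple swap of one embedding. This is only an illustration: `FactorizedSpeech` and `make_pseudo_far_field` are hypothetical names, the embeddings are toy lists of floats, and the encoding and decoding steps (done by FACodec in the paper) are not shown because their API is not given here.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class FactorizedSpeech:
    """Hypothetical container for the four embedding subspaces named in the abstract."""
    content: List[float]
    prosody: List[float]
    speaker: List[float]
    residual: List[float]  # acoustic details, e.g. room and noise characteristics


def make_pseudo_far_field(
    near: FactorizedSpeech, far: FactorizedSpeech
) -> FactorizedSpeech:
    """Keep far-field content, prosody, and residual; take the speaker from near-field."""
    return replace(far, speaker=near.speaker)


near = FactorizedSpeech(content=[0.1], prosody=[0.2], speaker=[0.9], residual=[0.0])
far = FactorizedSpeech(content=[0.5], prosody=[0.6], speaker=[0.3], residual=[0.7])
mixed = make_pseudo_far_field(near, far)
print(mixed)
```

In the described method, the recombined embeddings would be decoded back into a waveform that carries the near-field speaker identity in the far-field acoustic environment.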

Subject Disentanglement Neural Network for Speech Envelope Reconstruction from EEG

Jan 15, 2025

Abstract:Reconstructing speech envelopes from EEG signals is essential for exploring neural mechanisms underlying speech perception. Yet, EEG variability across subjects and physiological artifacts complicate accurate reconstruction. To address this problem, we introduce Subject Disentangling Neural Network (SDN-Net), which disentangles subject identity information from reconstructed speech envelopes to enhance cross-subject reconstruction accuracy. SDN-Net integrates three key components: MLA-Codec, MPN-MI, and CTA-MTDNN. The MLA-Codec, a fully convolutional neural network, decodes EEG signals into speech envelopes. The CTA-MTDNN module, a multi-scale time-delay neural network with channel and temporal attention, extracts subject identity features from EEG signals. Lastly, the MPN-MI module, a mutual information estimator with a multi-layer perceptron, supervises the removal of subject identity information from the reconstructed speech envelope. Experiments on the Auditory EEG Decoding Dataset demonstrate that SDN-Net achieves superior performance in inner- and cross-subject speech envelope reconstruction compared to recent state-of-the-art methods.

Trustworthy Contrast-enhanced Brain MRI Synthesis

Jul 10, 2024

Abstract:Contrast-enhanced brain MRI (CE-MRI) is a valuable diagnostic technique but may pose health risks and incur high costs. To create safer alternatives, multi-modality medical image translation aims to synthesize CE-MRI images from other available modalities. Although existing methods can generate promising predictions, they still face two challenges, i.e., exhibiting over-confidence and lacking interpretability on predictions. To address the above challenges, this paper introduces TrustI2I, a novel trustworthy method that reformulates the multi-to-one medical image translation problem as a multimodal regression problem, aiming to build an uncertainty-aware and reliable system. Specifically, our method leverages deep evidential regression to estimate prediction uncertainties and employs an explicit intermediate and late fusion strategy based on the Mixture of Normal Inverse Gamma (MoNIG) distribution, enhancing both synthesis quality and interpretability. Additionally, we incorporate uncertainty calibration to improve the reliability of the uncertainty estimates. Validation on the BraTS2018 dataset demonstrates that our approach surpasses current methods, producing higher-quality images with rational uncertainty estimation.
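To make the evidential-regression and fusion ideas concrete, the sketch below uses the commonly stated Normal Inverse Gamma (NIG) formulas: aleatoric uncertainty β/(α−1), epistemic uncertainty β/(ν(α−1)), and the pairwise NIG summation used in MoNIG-style fusion. This is an assumption-laden illustration for scalar predictions, not TrustI2I's implementation; the `NIG` class and `fuse` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NIG:
    """Normal Inverse Gamma parameters predicted for one output value."""
    gamma: float  # predicted mean
    nu: float     # evidence for the mean (> 0)
    alpha: float  # shape (> 1 for finite uncertainties)
    beta: float   # scale (> 0)

    def aleatoric(self) -> float:
        """Expected data noise: E[sigma^2] = beta / (alpha - 1)."""
        return self.beta / (self.alpha - 1.0)

    def epistemic(self) -> float:
        """Uncertainty of the mean: Var[mu] = beta / (nu * (alpha - 1))."""
        return self.beta / (self.nu * (self.alpha - 1.0))


def fuse(a: NIG, b: NIG) -> NIG:
    """Combine two NIG predictions with the NIG summation operator.

    The mean is an evidence-weighted average, and beta grows when the two
    inputs disagree, so conflicting modalities raise the fused uncertainty.
    """
    nu = a.nu + b.nu
    gamma = (a.nu * a.gamma + b.nu * b.gamma) / nu
    alpha = a.alpha + b.alpha + 0.5
    beta = (
        a.beta
        + b.beta
        + 0.5 * a.nu * (a.gamma - gamma) ** 2
        + 0.5 * b.nu * (b.gamma - gamma) ** 2
    )
    return NIG(gamma=gamma, nu=nu, alpha=alpha, beta=beta)


modality_a = NIG(gamma=1.0, nu=1.0, alpha=2.0, beta=1.0)
modality_b = NIG(gamma=3.0, nu=3.0, alpha=2.0, beta=1.0)
fused = fuse(modality_a, modality_b)
print(fused, fused.aleatoric(), fused.epistemic())
```

Because the fused mean is weighted by ν, the modality with more evidence (here `modality_b`) pulls the prediction toward its own estimate.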
