Sibo Ju

Enhancing LLMs' Reasoning-Intensive Multimedia Search Capabilities through Fine-Tuning and Reinforcement Learning

May 24, 2025Abstract:Existing large language models (LLMs) driven search agents typically rely on prompt engineering to decouple the user queries into search plans, limiting their effectiveness in complex scenarios requiring reasoning. Furthermore, they suffer from excessive token consumption due to Python-based search plan representations and inadequate integration of multimedia elements for both input processing and response generation. To address these challenges, we introduce SearchExpert, a training method for LLMs to improve their multimedia search capabilities in response to complex search queries. Firstly, we reformulate the search plan in an efficient natural language representation to reduce token consumption. Then, we propose the supervised fine-tuning for searching (SFTS) to fine-tune LLM to adapt to these representations, together with an automated dataset construction pipeline. Secondly, to improve reasoning-intensive search capabilities, we propose the reinforcement learning from search feedback (RLSF) that takes the search results planned by LLM as the reward signals. Thirdly, we propose a multimedia understanding and generation agent that enables the fine-tuned LLM to process visual input and produce visual output during inference. Finally, we establish an automated benchmark construction pipeline and a human evaluation framework. Our resulting benchmark, SearchExpertBench-25, comprises 200 multiple-choice questions spanning financial and international news scenarios that require reasoning in searching. Experiments demonstrate that SearchExpert outperforms the commercial LLM search method (Perplexity Pro) by 36.60% on the existing FinSearchBench-24 benchmark and 54.54% on our proposed SearchExpertBench-25. Human evaluations further confirm the superior readability.

GMAI-VL-R1: Harnessing Reinforcement Learning for Multimodal Medical Reasoning

Apr 02, 2025Abstract:Recent advances in general medical AI have made significant strides, but existing models often lack the reasoning capabilities needed for complex medical decision-making. This paper presents GMAI-VL-R1, a multimodal medical reasoning model enhanced by reinforcement learning (RL) to improve its reasoning abilities. Through iterative training, GMAI-VL-R1 optimizes decision-making, significantly boosting diagnostic accuracy and clinical support. We also develop a reasoning data synthesis method, generating step-by-step reasoning data via rejection sampling, which further enhances the model's generalization. Experimental results show that after RL training, GMAI-VL-R1 excels in tasks such as medical image diagnosis and visual question answering. While the model demonstrates basic memorization with supervised fine-tuning, RL is crucial for true generalization. Our work establishes new evaluation benchmarks and paves the way for future advancements in medical reasoning models. Code, data, and model will be released at \href{https://github.com/uni-medical/GMAI-VL-R1}{this link}.

Exploiting Emotion-Semantic Correlations for Empathetic Response Generation

Feb 27, 2024

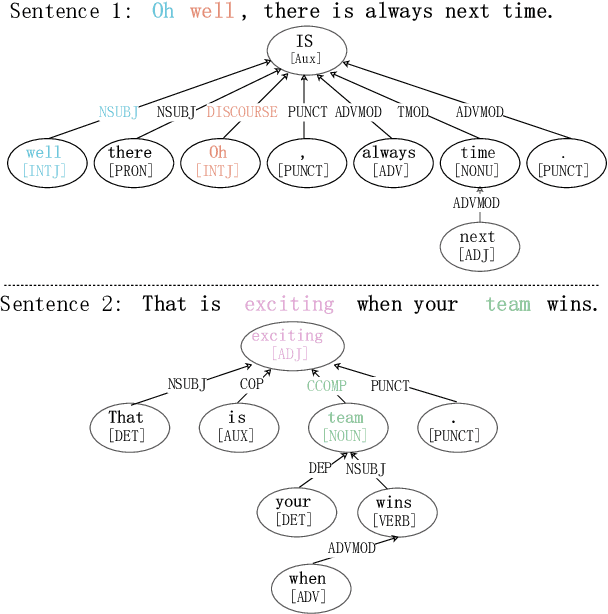

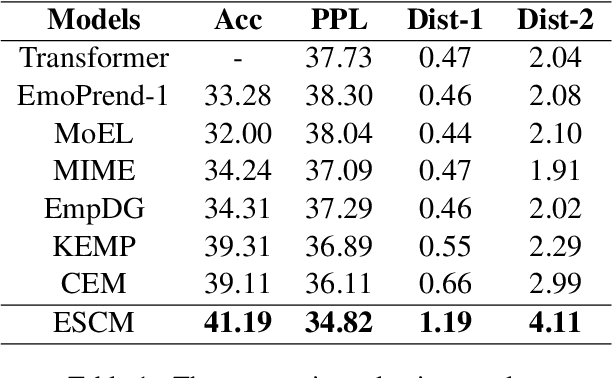

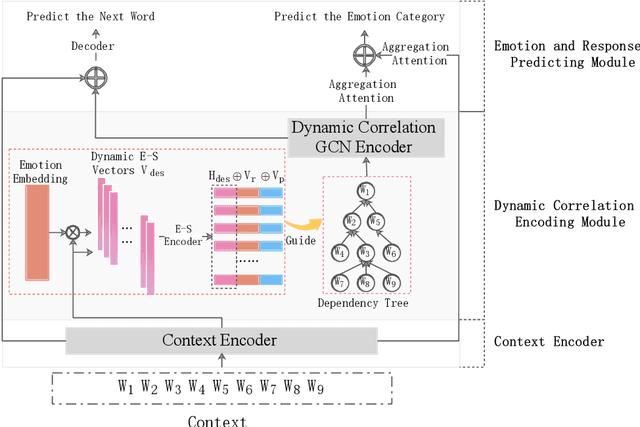

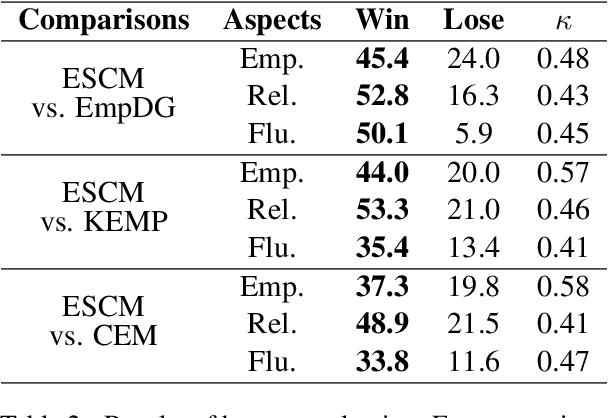

Abstract:Empathetic response generation aims to generate empathetic responses by understanding the speaker's emotional feelings from the language of dialogue. Recent methods capture emotional words in the language of communicators and construct them as static vectors to perceive nuanced emotions. However, linguistic research has shown that emotional words in language are dynamic and have correlations with other grammar semantic roles, i.e., words with semantic meanings, in grammar. Previous methods overlook these two characteristics, which easily lead to misunderstandings of emotions and neglect of key semantics. To address this issue, we propose a dynamical Emotion-Semantic Correlation Model (ESCM) for empathetic dialogue generation tasks. ESCM constructs dynamic emotion-semantic vectors through the interaction of context and emotions. We introduce dependency trees to reflect the correlations between emotions and semantics. Based on dynamic emotion-semantic vectors and dependency trees, we propose a dynamic correlation graph convolutional network to guide the model in learning context meanings in dialogue and generating empathetic responses. Experimental results on the EMPATHETIC-DIALOGUES dataset show that ESCM understands semantics and emotions more accurately and expresses fluent and informative empathetic responses. Our analysis results also indicate that the correlations between emotions and semantics are frequently used in dialogues, which is of great significance for empathetic perception and expression.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge