Jing Cai

DeepMoLM: Leveraging Visual and Geometric Structural Information for Molecule-Text Modeling

Jan 21, 2026Abstract:AI models for drug discovery and chemical literature mining must interpret molecular images and generate outputs consistent with 3D geometry and stereochemistry. Most molecular language models rely on strings or graphs, while vision-language models often miss stereochemical details and struggle to map continuous 3D structures into discrete tokens. We propose DeepMoLM: Deep Molecular Language M odeling, a dual-view framework that grounds high-resolution molecular images in geometric invariants derived from molecular conformations. DeepMoLM preserves high-frequency evidence from 1024 $\times$ 1024 inputs, encodes conformer neighborhoods as discrete Extended 3-Dimensional Fingerprints, and fuses visual and geometric streams with cross-attention, enabling physically grounded generation without atom coordinates. DeepMoLM improves PubChem captioning with a 12.3% relative METEOR gain over the strongest generalist baseline while staying competitive with specialist methods. It produces valid numeric outputs for all property queries and attains MAE 13.64 g/mol on Molecular Weight and 37.89 on Complexity in the specialist setting. On ChEBI-20 description generation from images, it exceeds generalist baselines and matches state-of- the-art vision-language models. Code is available at https://github.com/1anj/DeepMoLM.

Structure-Aware Contrastive Learning with Fine-Grained Binding Representations for Drug Discovery

Sep 18, 2025

Abstract:Accurate identification of drug-target interactions (DTI) remains a central challenge in computational pharmacology, where sequence-based methods offer scalability. This work introduces a sequence-based drug-target interaction framework that integrates structural priors into protein representations while maintaining high-throughput screening capability. Evaluated across multiple benchmarks, the model achieves state-of-the-art performance on Human and BioSNAP datasets and remains competitive on BindingDB. In virtual screening tasks, it surpasses prior methods on LIT-PCBA, yielding substantial gains in AUROC and BEDROC. Ablation studies confirm the critical role of learned aggregation, bilinear attention, and contrastive alignment in enhancing predictive robustness. Embedding visualizations reveal improved spatial correspondence with known binding pockets and highlight interpretable attention patterns over ligand-residue contacts. These results validate the framework's utility for scalable and structure-aware DTI prediction.

IC-Custom: Diverse Image Customization via In-Context Learning

Jul 02, 2025Abstract:Image customization, a crucial technique for industrial media production, aims to generate content that is consistent with reference images. However, current approaches conventionally separate image customization into position-aware and position-free customization paradigms and lack a universal framework for diverse customization, limiting their applications across various scenarios. To overcome these limitations, we propose IC-Custom, a unified framework that seamlessly integrates position-aware and position-free image customization through in-context learning. IC-Custom concatenates reference images with target images to a polyptych, leveraging DiT's multi-modal attention mechanism for fine-grained token-level interactions. We introduce the In-context Multi-Modal Attention (ICMA) mechanism with learnable task-oriented register tokens and boundary-aware positional embeddings to enable the model to correctly handle different task types and distinguish various inputs in polyptych configurations. To bridge the data gap, we carefully curated a high-quality dataset of 12k identity-consistent samples with 8k from real-world sources and 4k from high-quality synthetic data, avoiding the overly glossy and over-saturated synthetic appearance. IC-Custom supports various industrial applications, including try-on, accessory placement, furniture arrangement, and creative IP customization. Extensive evaluations on our proposed ProductBench and the publicly available DreamBench demonstrate that IC-Custom significantly outperforms community workflows, closed-source models, and state-of-the-art open-source approaches. IC-Custom achieves approximately 73% higher human preference across identity consistency, harmonicity, and text alignment metrics, while training only 0.4% of the original model parameters. Project page: https://liyaowei-stu.github.io/project/IC_Custom

Adapting Vision-Language Foundation Model for Next Generation Medical Ultrasound Image Analysis

Jun 11, 2025Abstract:Medical ultrasonography is an essential imaging technique for examining superficial organs and tissues, including lymph nodes, breast, and thyroid. It employs high-frequency ultrasound waves to generate detailed images of the internal structures of the human body. However, manually contouring regions of interest in these images is a labor-intensive task that demands expertise and often results in inconsistent interpretations among individuals. Vision-language foundation models, which have excelled in various computer vision applications, present new opportunities for enhancing ultrasound image analysis. Yet, their performance is hindered by the significant differences between natural and medical imaging domains. This research seeks to overcome these challenges by developing domain adaptation methods for vision-language foundation models. In this study, we explore the fine-tuning pipeline for vision-language foundation models by utilizing large language model as text refiner with special-designed adaptation strategies and task-driven heads. Our approach has been extensively evaluated on six ultrasound datasets and two tasks: segmentation and classification. The experimental results show that our method can effectively improve the performance of vision-language foundation models for ultrasound image analysis, and outperform the existing state-of-the-art vision-language and pure foundation models. The source code of this study is available at https://github.com/jinggqu/NextGen-UIA.

The Application of Deep Learning for Lymph Node Segmentation: A Systematic Review

May 09, 2025Abstract:Automatic lymph node segmentation is the cornerstone for advances in computer vision tasks for early detection and staging of cancer. Traditional segmentation methods are constrained by manual delineation and variability in operator proficiency, limiting their ability to achieve high accuracy. The introduction of deep learning technologies offers new possibilities for improving the accuracy of lymph node image analysis. This study evaluates the application of deep learning in lymph node segmentation and discusses the methodologies of various deep learning architectures such as convolutional neural networks, encoder-decoder networks, and transformers in analyzing medical imaging data across different modalities. Despite the advancements, it still confronts challenges like the shape diversity of lymph nodes, the scarcity of accurately labeled datasets, and the inadequate development of methods that are robust and generalizable across different imaging modalities. To the best of our knowledge, this is the first study that provides a comprehensive overview of the application of deep learning techniques in lymph node segmentation task. Furthermore, this study also explores potential future research directions, including multimodal fusion techniques, transfer learning, and the use of large-scale pre-trained models to overcome current limitations while enhancing cancer diagnosis and treatment planning strategies.

MLLA-UNet: Mamba-like Linear Attention in an Efficient U-Shape Model for Medical Image Segmentation

Oct 31, 2024

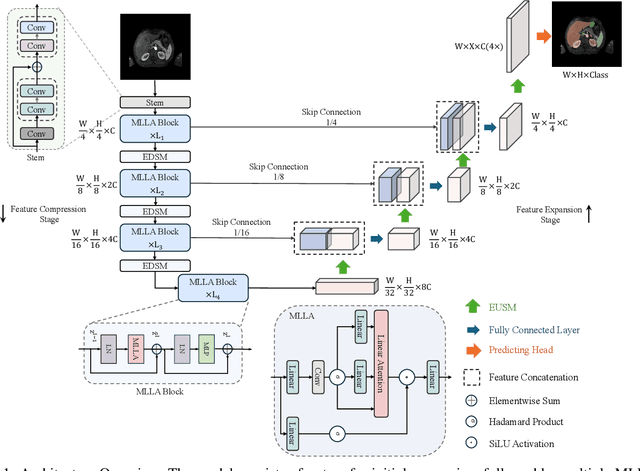

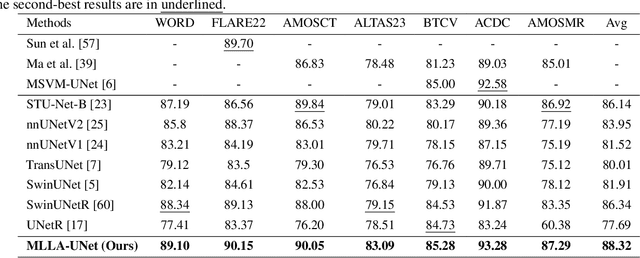

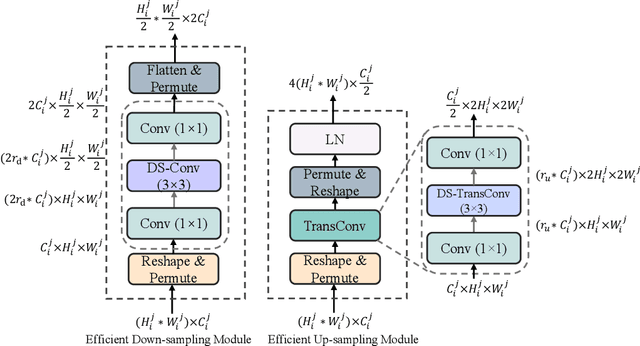

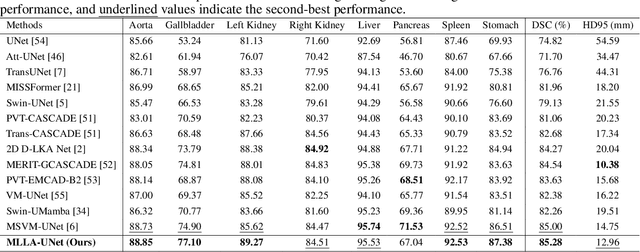

Abstract:Recent advancements in medical imaging have resulted in more complex and diverse images, with challenges such as high anatomical variability, blurred tissue boundaries, low organ contrast, and noise. Traditional segmentation methods struggle to address these challenges, making deep learning approaches, particularly U-shaped architectures, increasingly prominent. However, the quadratic complexity of standard self-attention makes Transformers computationally prohibitive for high-resolution images. To address these challenges, we propose MLLA-UNet (Mamba-Like Linear Attention UNet), a novel architecture that achieves linear computational complexity while maintaining high segmentation accuracy through its innovative combination of linear attention and Mamba-inspired adaptive mechanisms, complemented by an efficient symmetric sampling structure for enhanced feature processing. Our architecture effectively preserves essential spatial features while capturing long-range dependencies at reduced computational complexity. Additionally, we introduce a novel sampling strategy for multi-scale feature fusion. Experiments demonstrate that MLLA-UNet achieves state-of-the-art performance on six challenging datasets with 24 different segmentation tasks, including but not limited to FLARE22, AMOS CT, and ACDC, with an average DSC of 88.32%. These results underscore the superiority of MLLA-UNet over existing methods. Our contributions include the novel 2D segmentation architecture and its empirical validation. The code is available via https://github.com/csyfjiang/MLLA-UNet.

A Deep Learning-Based Method for Metal Artifact-Resistant Syn-MP-RAGE Contrast Synthesis

Oct 22, 2024

Abstract:In certain brain volumetric studies, synthetic T1-weighted magnetization-prepared rapid gradient-echo (MP-RAGE) contrast, derived from quantitative T1 MRI (T1-qMRI), proves highly valuable due to its clear white/gray matter boundaries for brain segmentation. However, generating synthetic MP-RAGE (syn-MP-RAGE) typically requires pairs of high-quality, artifact-free, multi-modality inputs, which can be challenging in retrospective studies, where missing or corrupted data is common. To overcome this limitation, our research explores the feasibility of employing a deep learning-based approach to synthesize syn-MP-RAGE contrast directly from a single channel turbo spin-echo (TSE) input, renowned for its resistance to metal artifacts. We evaluated this deep learning-based synthetic MP-RAGE (DL-Syn-MPR) on 31 non-artifact and 11 metal-artifact subjects. The segmentation results, measured by the Dice Similarity Coefficient (DSC), consistently achieved high agreement (DSC values above 0.83), indicating a strong correlation with reference segmentations, with lower input requirements. Also, no significant difference in segmentation performance was observed between the artifact and non-artifact groups.

AI-based Automatic Segmentation of Prostate on Multi-modality Images: A Review

Jul 09, 2024

Abstract:Prostate cancer represents a major threat to health. Early detection is vital in reducing the mortality rate among prostate cancer patients. One approach involves using multi-modality (CT, MRI, US, etc.) computer-aided diagnosis (CAD) systems for the prostate region. However, prostate segmentation is challenging due to imperfections in the images and the prostate's complex tissue structure. The advent of precision medicine and a significant increase in clinical capacity have spurred the need for various data-driven tasks in the field of medical imaging. Recently, numerous machine learning and data mining tools have been integrated into various medical areas, including image segmentation. This article proposes a new classification method that differentiates supervision types, either in number or kind, during the training phase. Subsequently, we conducted a survey on artificial intelligence (AI)-based automatic prostate segmentation methods, examining the advantages and limitations of each. Additionally, we introduce variants of evaluation metrics for the verification and performance assessment of the segmentation method and summarize the current challenges. Finally, future research directions and development trends are discussed, reflecting the outcomes of our literature survey, suggesting high-precision detection and treatment of prostate cancer as a promising avenue.

Feature-oriented Deep Learning Framework for Pulmonary Cone-beam CT (CBCT) Enhancement with Multi-task Customized Perceptual Loss

Nov 01, 2023

Abstract:Cone-beam computed tomography (CBCT) is routinely collected during image-guided radiation therapy (IGRT) to provide updated patient anatomy information for cancer treatments. However, CBCT images often suffer from streaking artifacts and noise caused by under-rate sampling projections and low-dose exposure, resulting in low clarity and information loss. While recent deep learning-based CBCT enhancement methods have shown promising results in suppressing artifacts, they have limited performance on preserving anatomical details since conventional pixel-to-pixel loss functions are incapable of describing detailed anatomy. To address this issue, we propose a novel feature-oriented deep learning framework that translates low-quality CBCT images into high-quality CT-like imaging via a multi-task customized feature-to-feature perceptual loss function. The framework comprises two main components: a multi-task learning feature-selection network(MTFS-Net) for customizing the perceptual loss function; and a CBCT-to-CT translation network guided by feature-to-feature perceptual loss, which uses advanced generative models such as U-Net, GAN and CycleGAN. Our experiments showed that the proposed framework can generate synthesized CT (sCT) images for the lung that achieved a high similarity to CT images, with an average SSIM index of 0.9869 and an average PSNR index of 39.9621. The sCT images also achieved visually pleasing performance with effective artifacts suppression, noise reduction, and distinctive anatomical details preservation. Our experiment results indicate that the proposed framework outperforms the state-of-the-art models for pulmonary CBCT enhancement. This framework holds great promise for generating high-quality anatomical imaging from CBCT that is suitable for various clinical applications.

Unveiling Theory of Mind in Large Language Models: A Parallel to Single Neurons in the Human Brain

Sep 04, 2023Abstract:With their recent development, large language models (LLMs) have been found to exhibit a certain level of Theory of Mind (ToM), a complex cognitive capacity that is related to our conscious mind and that allows us to infer another's beliefs and perspective. While human ToM capabilities are believed to derive from the neural activity of a broadly interconnected brain network, including that of dorsal medial prefrontal cortex (dmPFC) neurons, the precise processes underlying LLM's capacity for ToM or their similarities with that of humans remains largely unknown. In this study, we drew inspiration from the dmPFC neurons subserving human ToM and employed a similar methodology to examine whether LLMs exhibit comparable characteristics. Surprisingly, our analysis revealed a striking resemblance between the two, as hidden embeddings (artificial neurons) within LLMs started to exhibit significant responsiveness to either true- or false-belief trials, suggesting their ability to represent another's perspective. These artificial embedding responses were closely correlated with the LLMs' performance during the ToM tasks, a property that was dependent on the size of the models. Further, the other's beliefs could be accurately decoded using the entire embeddings, indicating the presence of the embeddings' ToM capability at the population level. Together, our findings revealed an emergent property of LLMs' embeddings that modified their activities in response to ToM features, offering initial evidence of a parallel between the artificial model and neurons in the human brain.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge