Haoran Xie

Imagine a City: CityGenAgent for Procedural 3D City Generation

Feb 05, 2026

Abstract:The automated generation of interactive 3D cities is a critical challenge with broad applications in autonomous driving, virtual reality, and embodied intelligence. While recent advances in generative models and procedural techniques have improved the realism of city generation, existing methods often struggle with high-fidelity asset creation, controllability, and manipulation. In this work, we introduce CityGenAgent, a natural language-driven framework for hierarchical procedural generation of high-quality 3D cities. Our approach decomposes city generation into two interpretable components: a Block Program and a Building Program. To ensure structural correctness and semantic alignment, we adopt a two-stage learning strategy: (1) Supervised Fine-Tuning (SFT). We train BlockGen and BuildingGen to generate valid programs that adhere to schema constraints, including non-self-intersecting polygons and complete fields; (2) Reinforcement Learning (RL). We design a Spatial Alignment Reward to enhance spatial reasoning ability and a Visual Consistency Reward to bridge the gap between textual descriptions and the visual modality. Benefiting from the programs and the models' generalization, CityGenAgent supports natural language editing and manipulation. Comprehensive evaluations demonstrate superior semantic alignment, visual quality, and controllability compared to existing methods, establishing a robust foundation for scalable 3D city generation.
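
One of the schema constraints named in the abstract, non-self-intersecting polygons, can be illustrated with a small validity check. The sketch below is not taken from CityGenAgent; the function names and the representation of a footprint as a list of `(x, y)` tuples are assumptions made for illustration. It only detects proper crossings between edges and does not flag edges that merely touch or overlap collinearly.

```python
def _orient(p, q, r):
    """Sign of the cross product (q - p) x (r - p): 1, -1, or 0 if collinear."""
    v = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return (v > 0) - (v < 0)


def _properly_cross(a, b, c, d):
    """True if segments ab and cd cross at a single interior point."""
    return (_orient(a, b, c) * _orient(a, b, d) < 0
            and _orient(c, d, a) * _orient(c, d, b) < 0)


def is_simple_polygon(vertices):
    """Check that no two edges of the closed polygon properly cross.

    Hypothetical helper: `vertices` is a list of (x, y) tuples in order.
    Runs in O(n^2), which is adequate for small building footprints.
    """
    n = len(vertices)
    if n < 3:
        return False
    for i in range(n):
        a, b = vertices[i], vertices[(i + 1) % n]
        for j in range(i + 1, n):
            c, d = vertices[j], vertices[(j + 1) % n]
            # Adjacent edges share a vertex, so an orientation is 0
            # and they are never reported as a proper crossing.
            if _properly_cross(a, b, c, d):
                return False
    return True
```

For example, `is_simple_polygon([(0, 0), (1, 0), (1, 1), (0, 1)])` accepts a unit square, while the "bowtie" ordering `[(0, 0), (1, 1), (1, 0), (0, 1)]` is rejected because its two diagonals cross.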

LoD Sketch Extraction from Architectural Models Using Generative AI: Dataset Construction for Multi-Level Architectural Design Generation

Jan 23, 2026

Abstract:For architectural design, representation across multiple Levels of Detail (LoD) is essential for achieving a smooth transition from conceptual massing to detailed modeling. However, traditional LoD modeling processes rely on manual operations that are time-consuming, labor-intensive, and prone to geometric inconsistencies. While the rapid advancement of generative artificial intelligence (AI) has opened new possibilities for generating multi-level architectural models from sketch inputs, its application remains limited by the lack of high-quality paired LoD training data. To address this issue, we propose an automatic LoD sketch extraction framework using generative AI models, which progressively simplifies high-detail architectural models to automatically generate geometrically consistent and hierarchically coherent multi-LoD representations. The proposed framework integrates computer vision techniques with generative AI methods to establish a progressive extraction pipeline that transitions from detailed representations to volumetric abstractions. Experimental results demonstrate that the method maintains strong geometric consistency across LoD levels, achieving SSIM values of 0.7319 and 0.7532 for the transitions from LoD3 to LoD2 and from LoD2 to LoD1, respectively, with corresponding normalized Hausdorff distances of 25.1% and 61.0% of the image diagonal, reflecting controlled geometric deviation during abstraction. These results verify that the proposed framework effectively preserves global structure while achieving progressive semantic simplification across different LoD levels, providing reliable data and technical support for AI-driven multi-level architectural generation and hierarchical modeling.
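
The abstract reports Hausdorff distances as a percentage of the image diagonal. A minimal sketch of one way such a normalized value could be computed is given below. It is an assumption, not the authors' evaluation code: it treats each sketch as a 2D binary mask of stroke pixels, uses the symmetric Hausdorff distance, and takes the diagonal from the mask height and width in pixels.

```python
import numpy as np


def normalized_hausdorff(mask_a, mask_b):
    """Symmetric Hausdorff distance between the foreground pixels of two
    2D binary masks, expressed as a fraction of the image diagonal.

    Assumption: both masks have the same shape; the name is hypothetical.
    """
    a = np.argwhere(mask_a)  # (N, 2) row/column coordinates
    b = np.argwhere(mask_b)  # (M, 2)
    if a.size == 0 or b.size == 0:
        raise ValueError("both masks need at least one foreground pixel")
    # Pairwise Euclidean distances, shape (N, M). This brute-force form
    # is only practical for modest numbers of foreground pixels.
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Directed distances in both directions, then the larger of the two.
    hausdorff = max(d.min(axis=1).max(), d.min(axis=0).max())
    diagonal = np.hypot(*mask_a.shape)
    return hausdorff / diagonal
```

Multiplying the returned fraction by 100 gives a percentage comparable in form to the figures quoted above. Identical masks yield 0.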

Sketch-Based Facade Renovation With Generative AI: A Streamlined Framework for Bypassing As-Built Modelling in Industrial Adaptive Reuse

Jan 13, 2026

Abstract:Facade renovation offers a more sustainable alternative to full demolition, yet producing design proposals that preserve existing structures while expressing new intent remains challenging. Current workflows typically require detailed as-built modelling before design, which is time-consuming, labour-intensive, and often involves repeated revisions. To solve this issue, we propose a three-stage framework combining generative artificial intelligence (AI) and vision-language models (VLM) that directly processes a rough structural sketch and textual descriptions to produce consistent renovation proposals. First, the input sketch is used by a fine-tuned VLM to predict bounding boxes specifying where modifications are needed and which components should be added. Next, a Stable Diffusion model generates detailed sketches of new elements, which are merged with the original outline through a generative inpainting pipeline. Finally, ControlNet is employed to refine the result into a photorealistic image. Experiments on datasets and real industrial buildings indicate that the proposed framework can generate renovation proposals that preserve the original structure while improving facade detail quality. This approach effectively bypasses the need for detailed as-built modelling, enabling architects to rapidly explore design alternatives, iterate on early-stage concepts, and communicate renovation intentions with greater clarity.

Benchmarking Real-World Medical Image Classification with Noisy Labels: Challenges, Practice, and Outlook

Dec 10, 2025

Abstract:Learning from noisy labels remains a major challenge in medical image analysis, where annotation demands expert knowledge and substantial inter-observer variability often leads to inconsistent or erroneous labels. Despite extensive research on learning with noisy labels (LNL), the robustness of existing methods in medical imaging has not been systematically assessed. To address this gap, we introduce LNMBench, a comprehensive benchmark for Label Noise in Medical imaging. LNMBench encompasses 10 representative methods evaluated across 7 datasets, 6 imaging modalities, and 3 noise patterns, establishing a unified and reproducible framework for robustness evaluation under realistic conditions. Comprehensive experiments reveal that the performance of existing LNL methods degrades substantially under high and real-world noise, highlighting the persistent challenges of class imbalance and domain variability in medical data. Motivated by these findings, we further propose a simple yet effective improvement to enhance model robustness under such conditions. The LNMBench codebase is publicly released to facilitate standardized evaluation, promote reproducible research, and provide practical insights for developing noise-resilient algorithms in both research and real-world medical applications. The codebase is available at https://github.com/myyy777/LNMBench.

Wavelet-guided Misalignment-aware Network for Visible-Infrared Object Detection

Jul 27, 2025

Abstract:Visible-infrared object detection aims to enhance the detection robustness by exploiting the complementary information of visible and infrared image pairs. However, its performance is often limited by frequent misalignments caused by resolution disparities, spatial displacements, and modality inconsistencies. To address this issue, we propose the Wavelet-guided Misalignment-aware Network (WMNet), a unified framework designed to adaptively address different cross-modal misalignment patterns. WMNet incorporates wavelet-based multi-frequency analysis and modality-aware fusion mechanisms to improve the alignment and integration of cross-modal features. By jointly exploiting low and high-frequency information and introducing adaptive guidance across modalities, WMNet alleviates the adverse effects of noise, illumination variation, and spatial misalignment. Furthermore, it enhances the representation of salient target features while suppressing spurious or misleading information, thereby promoting more accurate and robust detection. Extensive evaluations on the DVTOD, DroneVehicle, and M3FD datasets demonstrate that WMNet achieves state-of-the-art performance on misaligned cross-modal object detection tasks, confirming its effectiveness and practical applicability.
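
To make the idea of splitting a feature map into low- and high-frequency parts concrete, the following sketch applies a single-level 2D Haar transform with NumPy. The abstract does not state which wavelet WMNet uses, so the Haar choice, the function name, and the sub-band names are assumptions for illustration only.

```python
import numpy as np


def haar_dwt2(img):
    """Single-level orthonormal 2D Haar transform.

    Returns four half-resolution sub-bands:
      approx   - low-frequency average of each 2x2 block
      row_diff - top rows minus bottom rows (responds to horizontal edges)
      col_diff - left columns minus right columns (responds to vertical edges)
      diag     - diagonal detail
    Assumption: `img` is a 2D array with even height and width.
    """
    x = np.asarray(img, dtype=float)
    if x.ndim != 2 or x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("expected a 2D array with even height and width")
    # The four pixels of every non-overlapping 2x2 block.
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2
    row_diff = (a + b - c - d) / 2
    col_diff = (a - b + c - d) / 2
    diag = (a - b - c + d) / 2
    return approx, row_diff, col_diff, diag
```

With the 1/2 scaling the transform is orthonormal, so the total squared energy of the four sub-bands equals that of the input. A constant image puts all of its energy in `approx` and leaves the three detail bands at zero.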

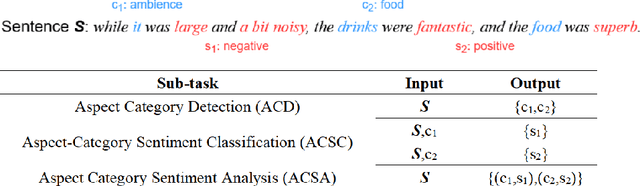

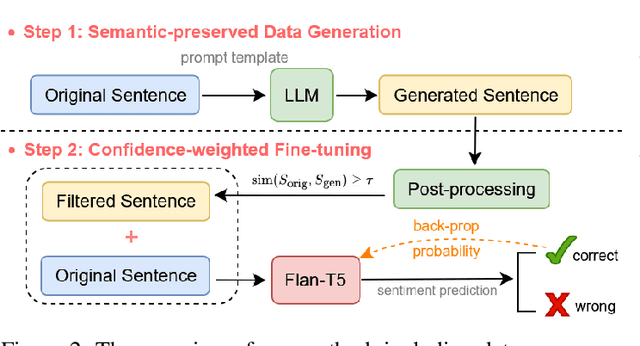

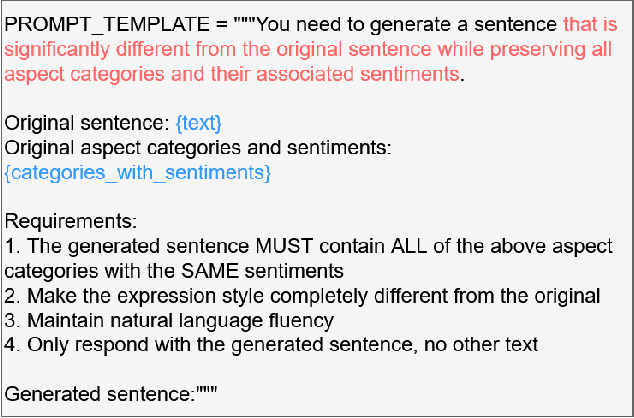

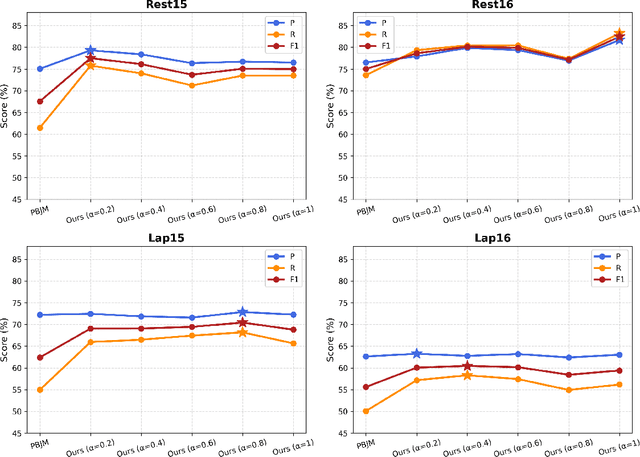

Semantic-preserved Augmentation with Confidence-weighted Fine-tuning for Aspect Category Sentiment Analysis

Jun 08, 2025

Abstract:Large language models (LLMs) are an effective approach to addressing data scarcity in low-resource scenarios. Recent research designs hand-crafted prompts to guide LLMs for data augmentation. We introduce a data augmentation strategy for the aspect category sentiment analysis (ACSA) task that preserves the original sentence semantics while adding linguistic diversity, specifically by providing a structured prompt template for an LLM to generate predefined content. In addition, we employ a post-processing technique to further ensure semantic consistency between the generated sentence and the original sentence. The augmented data increases the semantic coverage of the training distribution, enabling the model to better understand the relationship between aspect categories and sentiment polarities and enhancing its inference capabilities. Furthermore, we propose a confidence-weighted fine-tuning strategy to encourage the model to generate more confident and accurate sentiment polarity predictions. Compared with strong recent baselines, our method consistently achieves the best performance on four benchmark datasets.

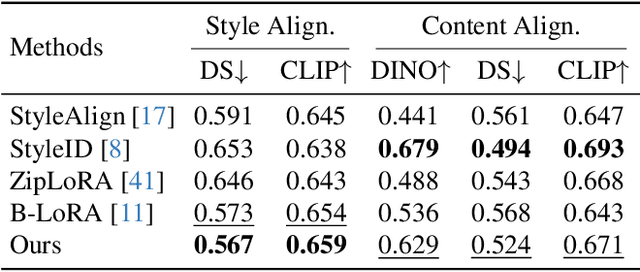

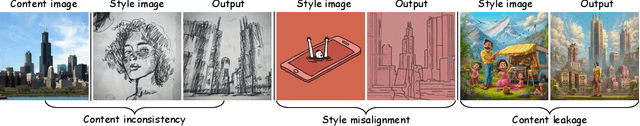

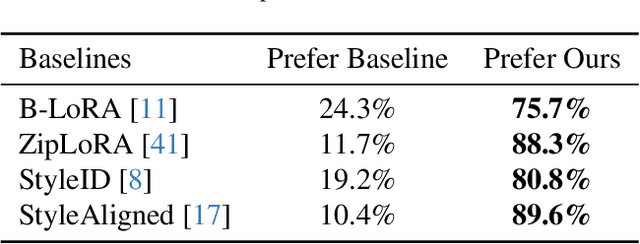

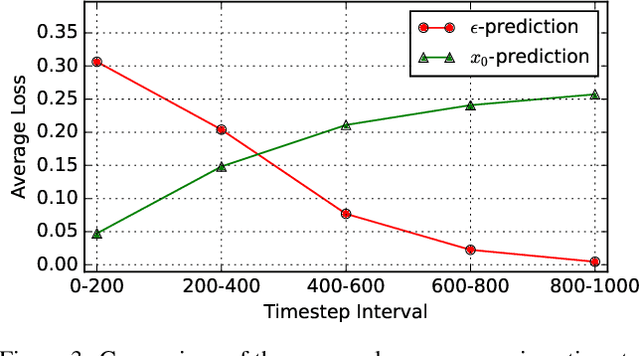

ConsisLoRA: Enhancing Content and Style Consistency for LoRA-based Style Transfer

Mar 13, 2025

Abstract:Style transfer involves transferring the style from a reference image to the content of a target image. Recent advancements in LoRA-based (Low-Rank Adaptation) methods have shown promise in effectively capturing the style of a single image. However, these approaches still face significant challenges such as content inconsistency, style misalignment, and content leakage. In this paper, we comprehensively analyze the limitations of the standard diffusion parameterization, which learns to predict noise, in the context of style transfer. To address these issues, we introduce ConsisLoRA, a LoRA-based method that enhances both content and style consistency by optimizing the LoRA weights to predict the original image rather than noise. We also propose a two-step training strategy that decouples the learning of content and style from the reference image. To effectively capture both the global structure and local details of the content image, we introduce a stepwise loss transition strategy. Additionally, we present an inference guidance method that enables continuous control over content and style strengths during inference. Through both qualitative and quantitative evaluations, our method demonstrates significant improvements in content and style consistency while effectively reducing content leakage.
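
The difference between predicting noise and predicting the original image can be written out in standard DDPM notation. The symbols below ($x_0$, $x_t$, $\bar\alpha_t$, $\epsilon_\theta$) are the usual textbook ones and are an assumption here; the abstract itself does not fix a notation, and the actual method may operate in a latent space.

```latex
% Forward process: a noisy sample x_t built from the clean image x_0
x_t = \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

% Standard (noise-prediction) objective
\mathcal{L}_{\epsilon} = \bigl\| \epsilon - \epsilon_\theta(x_t, t) \bigr\|^2

% Estimate of the original image implied by the predicted noise
\hat{x}_0 = \frac{x_t - \sqrt{1 - \bar\alpha_t}\, \epsilon_\theta(x_t, t)}{\sqrt{\bar\alpha_t}}

% Original-image (x_0-prediction) objective and its relation to the noise loss
\mathcal{L}_{x_0} = \bigl\| x_0 - \hat{x}_0 \bigr\|^2
  = \frac{1 - \bar\alpha_t}{\bar\alpha_t}\, \mathcal{L}_{\epsilon}
```

Under these assumptions the two objectives differ only by the timestep-dependent factor $(1 - \bar\alpha_t)/\bar\alpha_t$, which is large at noisy timesteps (small $\bar\alpha_t$) and small at nearly clean ones, so the original-image objective places relatively more weight on high-noise steps.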

Multi-View Depth Consistent Image Generation Using Generative AI Models: Application on Architectural Design of University Buildings

Mar 05, 2025

Abstract:In the early stages of architectural design, shoebox models are typically used as a simplified representation of building structures but require extensive operations to transform them into detailed designs. Generative artificial intelligence (AI) provides a promising solution to automate this transformation, but ensuring multi-view consistency remains a significant challenge. To solve this issue, we propose a novel three-stage consistent image generation framework using generative AI models to generate architectural designs from shoebox model representations. The proposed method enhances state-of-the-art image generation diffusion models to generate multi-view consistent architectural images. We employ ControlNet as the backbone and optimize it to accommodate multi-view inputs of architectural shoebox models captured from predefined perspectives. To ensure stylistic and structural consistency across multi-view images, we propose an image space loss module that incorporates style loss, structural loss, and angle alignment loss. We then use a depth estimation method to extract depth maps from the generated multi-view images. Finally, we use the paired data of the architectural images and depth maps as inputs to improve the multi-view consistency via the depth-aware 3D attention module. Experimental results demonstrate that the proposed framework can generate multi-view architectural images with consistent style and structural coherence from shoebox model inputs.

Exploring Incremental Unlearning: Techniques, Challenges, and Future Directions

Feb 23, 2025

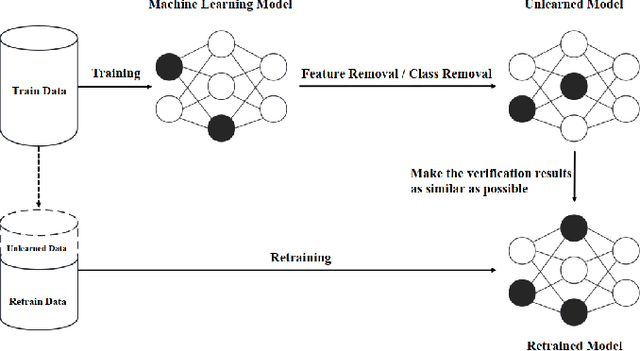

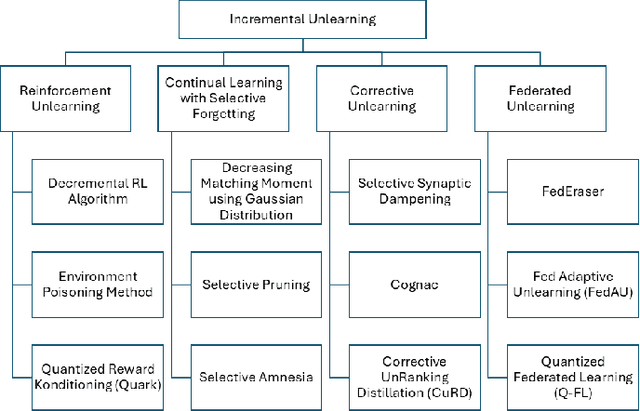

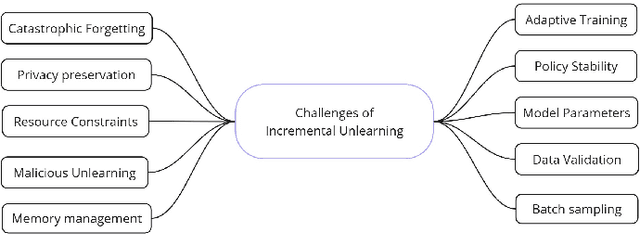

Abstract:The growing demand for data privacy in Machine Learning (ML) applications has seen Machine Unlearning (MU) emerge as a critical area of research. As the 'right to be forgotten' becomes regulated globally, it is increasingly important to develop mechanisms that delete user data from AI systems while maintaining performance and scalability of these systems. Incremental Unlearning (IU) is a promising MU solution to address the challenges of efficiently removing specific data from ML models without the need for expensive and time-consuming full retraining. This paper presents the various techniques and approaches to IU. It explores the challenges faced in designing and implementing IU mechanisms. Datasets and metrics for evaluating the performance of unlearning techniques are discussed as well. Finally, potential solutions to the IU challenges alongside future research directions are offered. This survey provides valuable insights for researchers and practitioners seeking to understand the current landscape of IU and its potential for enhancing privacy-preserving intelligent systems.

CondAmbigQA: A Benchmark and Dataset for Conditional Ambiguous Question Answering

Feb 03, 2025

Abstract:Large language models (LLMs) are prone to hallucinations in question-answering (QA) tasks when faced with ambiguous questions. Users often assume that LLMs share their cognitive alignment, a mutual understanding of context, intent, and implicit details, leading them to omit critical information in the queries. However, LLMs generate responses based on assumptions that can misalign with user intent, and such responses may be perceived as hallucinations. Therefore, identifying those implicit assumptions is crucial to resolve ambiguities in QA. Prior work, such as AmbigQA, reduces ambiguity in queries via human-annotated clarifications, which is not feasible in real applications. Meanwhile, ASQA compiles AmbigQA's short answers into long-form responses but inherits human biases and fails to capture the explicit logical distinctions that differentiate the answers. We introduce Conditional Ambiguous Question-Answering (CondAmbigQA), a benchmark with 200 ambiguous queries and condition-aware evaluation metrics. Our study pioneers the concept of "conditions" in ambiguous QA tasks, where conditions stand for contextual constraints or assumptions that resolve ambiguities. The retrieval-based annotation strategy uses retrieved Wikipedia fragments to identify possible interpretations for a given query as its conditions and annotate the answers through those conditions. Such a strategy minimizes human bias introduced by different knowledge levels among annotators. By fixing retrieval results, CondAmbigQA evaluates how RAG systems leverage conditions to resolve ambiguities. Experiments show that models considering conditions before answering improve performance by 20%, with an additional 5% gain when conditions are explicitly provided. These results underscore the value of conditional reasoning in QA, offering researchers tools to rigorously evaluate ambiguity resolution.
