Xiaohua Jia

Towards Long-Horizon Interpretability: Efficient and Faithful Multi-Token Attribution for Reasoning LLMs

Feb 02, 2026

Abstract:Token attribution methods provide intuitive explanations for language model outputs by identifying causally important input tokens. However, as modern LLMs increasingly rely on extended reasoning chains, existing schemes face two critical challenges: (1) an efficiency bottleneck, where attributing a target span of M tokens within a context of length N requires O(M*N) operations, making long-context attribution prohibitively slow; and (2) a faithfulness drop, where intermediate reasoning tokens absorb attribution mass, preventing importance from propagating back to the original input. To address these, we introduce FlashTrace, an efficient multi-token attribution method that employs span-wise aggregation to compute attribution over multi-token targets in a single pass, while maintaining faithfulness. Moreover, we design a recursive attribution mechanism that traces importance through intermediate reasoning chains back to source inputs. Extensive experiments on long-context retrieval (RULER) and multi-step reasoning (MATH, MoreHopQA) tasks demonstrate that FlashTrace achieves over 130x speedup over existing baselines while maintaining superior faithfulness. We further analyze the dynamics of recursive attribution, showing that even a single recursive hop improves faithfulness by tracing importance through the reasoning chain.

MulVul: Retrieval-augmented Multi-Agent Code Vulnerability Detection via Cross-Model Prompt Evolution

Jan 26, 2026

Abstract:Large Language Models (LLMs) struggle to automate real-world vulnerability detection due to two key limitations: the heterogeneity of vulnerability patterns undermines the effectiveness of a single unified model, and manual prompt engineering for massive weakness categories is unscalable. To address these challenges, we propose MulVul, a retrieval-augmented multi-agent framework designed for precise and broad-coverage vulnerability detection. MulVul adopts a coarse-to-fine strategy: a Router agent first predicts the top-$k$ coarse categories and then forwards the input to specialized Detector agents, which identify the exact vulnerability types. Both agents are equipped with retrieval tools to actively source evidence from vulnerability knowledge bases to mitigate hallucinations. Crucially, to automate the generation of specialized prompts, we design Cross-Model Prompt Evolution, a prompt optimization mechanism where a generator LLM iteratively refines candidate prompts while a distinct executor LLM validates their effectiveness. This decoupling mitigates the self-correction bias inherent in single-model optimization. Evaluated on 130 CWE types, MulVul achieves 34.79% Macro-F1, outperforming the best baseline by 41.5%. Ablation studies validate cross-model prompt evolution, which boosts performance by 51.6% over manual prompts by effectively handling diverse vulnerability patterns.
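The coarse-to-fine routing described in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the names `route_top_k` and `detect`, the category names, and the keyword rules that stand in for the Router and Detector agents are hypothetical. In the paper both agents are LLM-based and retrieval-augmented, and neither retrieval nor prompt evolution is modeled here.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical stand-ins: a scorer rates how likely a coarse category applies,
# and a detector returns a fine-grained label or None.
Scorer = Callable[[str], float]
Detector = Callable[[str], Optional[str]]


def route_top_k(code: str, scorers: Dict[str, Scorer], k: int) -> List[str]:
    """Router step: return the k coarse categories with the highest scores."""
    ranked = sorted(scorers, key=lambda cat: scorers[cat](code), reverse=True)
    return ranked[:k]


def detect(code: str, scorers: Dict[str, Scorer],
           detectors: Dict[str, Detector], k: int = 2) -> List[str]:
    """Forward the input only to the detectors of the routed categories."""
    findings = []
    for category in route_top_k(code, scorers, k):
        label = detectors[category](code)
        if label is not None:
            findings.append(label)
    return findings


# Toy keyword rules (assumptions, not the paper's agents).
scorers = {
    "memory": lambda c: float(c.count("strcpy")),
    "injection": lambda c: float(c.count("execute(")),
    "crypto": lambda c: float(c.count("md5")),
}
detectors = {
    "memory": lambda c: "CWE-120" if "strcpy" in c else None,
    "injection": lambda c: "CWE-89" if "execute(" in c else None,
    "crypto": lambda c: "CWE-327" if "md5" in c else None,
}

findings = detect("strcpy(buf, user_input);", scorers, detectors, k=2)
```

With `k=2` only two of the three detectors run, which is the point of the coarse routing step: specialized detectors are consulted only for the categories the router considers plausible.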

Reconstructing Training Data from Adapter-based Federated Large Language Models

Jan 24, 2026

Abstract:Adapter-based Federated Large Language Models (FedLLMs) are widely adopted to reduce the computational, storage, and communication overhead of full-parameter fine-tuning for web-scale applications while preserving user privacy. By freezing the backbone and training only compact low-rank adapters, these methods appear to limit gradient leakage and thwart existing Gradient Inversion Attacks (GIAs). Contrary to this assumption, we show that low-rank adapters create new, exploitable leakage channels. We propose the Unordered-word-bag-based Text Reconstruction (UTR) attack, a novel GIA tailored to the unique structure of adapter-based FedLLMs. UTR overcomes three core challenges (low-dimensional gradients, frozen backbones, and combinatorially large reconstruction spaces) by (i) inferring token presence from attention patterns in frozen layers, (ii) performing sentence-level inversion within the low-rank subspace of adapter gradients, and (iii) enforcing semantic coherence through constrained greedy decoding guided by language priors. Extensive experiments across diverse models (GPT2-Large, BERT, Qwen2.5-7B) and datasets (CoLA, SST-2, Rotten Tomatoes) demonstrate that UTR achieves near-perfect reconstruction accuracy (ROUGE-1/2 > 99), even in large-batch settings where prior GIAs fail completely. Our results reveal a fundamental tension between parameter efficiency and privacy in FedLLMs, challenging the prevailing belief that lightweight adaptation inherently enhances security. Our code and data are available at https://github.com/shwksnshwowk-wq/GIA.

Can LLMs Refuse Questions They Do Not Know? Measuring Knowledge-Aware Refusal in Factual Tasks

Oct 02, 2025

Abstract:Large Language Models (LLMs) should refuse to answer questions beyond their knowledge. This capability, which we term knowledge-aware refusal, is crucial for factual reliability. However, existing metrics fail to faithfully measure this ability. On the one hand, simple refusal-based metrics are biased by refusal rates and yield inconsistent scores when models exhibit different refusal tendencies. On the other hand, existing calibration metrics are proxy-based, capturing the performance of auxiliary calibration processes rather than the model's actual refusal behavior. In this work, we propose the Refusal Index (RI), a principled metric that measures how accurately LLMs refuse questions they do not know. We define RI as Spearman's rank correlation between refusal probability and error probability. To make RI practically measurable, we design a lightweight two-pass evaluation method that efficiently estimates RI from observed refusal rates across two standard evaluation runs. Extensive experiments across 16 models and 5 datasets demonstrate that RI accurately quantifies a model's intrinsic knowledge-aware refusal capability in factual tasks. Notably, RI remains stable across different refusal rates and provides consistent model rankings independent of a model's overall accuracy and refusal rates. More importantly, RI provides insight into an important but previously overlooked aspect of LLM factuality: while LLMs achieve high accuracy on factual tasks, their refusal behavior can be unreliable and fragile. This finding highlights the need to complement traditional accuracy metrics with the Refusal Index for comprehensive factuality evaluation.
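The definition of RI as a Spearman rank correlation can be made concrete with a short sketch. It assumes, purely for illustration, that per-question refusal and error probabilities are directly available; the abstract's two-pass estimator, which works from observed refusal rates, is not specified here and is not reproduced. The function names and the sample values are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the two rank vectors.

    Raises ZeroDivisionError if either input is constant.
    """
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical per-question probabilities for four questions.
refusal_prob = [0.9, 0.1, 0.6, 0.3]
error_prob = [0.8, 0.2, 0.7, 0.4]
ri = spearman(refusal_prob, error_prob)
```

In this toy input the model refuses most where it would err most, so the two rankings agree and the correlation is at its maximum; a model that refuses exactly the questions it would answer correctly would score at the minimum.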

Exploring Incremental Unlearning: Techniques, Challenges, and Future Directions

Feb 23, 2025

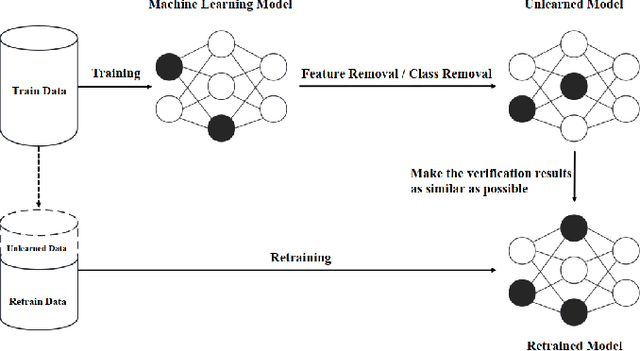

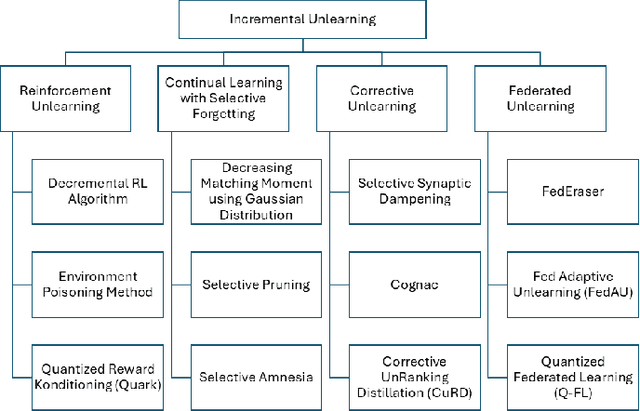

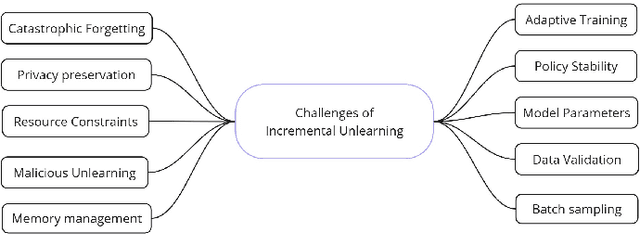

Abstract:The growing demand for data privacy in Machine Learning (ML) applications has seen Machine Unlearning (MU) emerge as a critical area of research. As the 'right to be forgotten' becomes regulated globally, it is increasingly important to develop mechanisms that delete user data from AI systems while maintaining performance and scalability of these systems. Incremental Unlearning (IU) is a promising MU solution to address the challenges of efficiently removing specific data from ML models without the need for expensive and time-consuming full retraining. This paper presents the various techniques and approaches to IU. It explores the challenges faced in designing and implementing IU mechanisms. Datasets and metrics for evaluating the performance of unlearning techniques are discussed as well. Finally, potential solutions to the IU challenges alongside future research directions are offered. This survey provides valuable insights for researchers and practitioners seeking to understand the current landscape of IU and its potential for enhancing privacy-preserving intelligent systems.

CAT: Contrastive Adversarial Training for Evaluating the Robustness of Protective Perturbations in Latent Diffusion Models

Feb 11, 2025

Abstract:Latent diffusion models have recently demonstrated superior capabilities in many downstream image synthesis tasks. However, customization of latent diffusion models using unauthorized data can severely compromise the privacy and intellectual property rights of data owners. Adversarial examples as protective perturbations have been developed to defend against unauthorized data usage by introducing imperceptible noise to customization samples, preventing diffusion models from effectively learning them. In this paper, we first reveal that the primary reason adversarial examples are effective as protective perturbations in latent diffusion models is the distortion of their latent representations, as demonstrated through qualitative and quantitative experiments. We then propose Contrastive Adversarial Training (CAT), which uses adapters, as an adaptive attack against these protection methods, highlighting their lack of robustness. Extensive experiments demonstrate that our CAT method significantly reduces the effectiveness of protective perturbations in customization configurations, urging the community to reconsider and enhance the robustness of existing protective perturbation methods. Code is available at https://github.com/senp98/CAT.

Protective Perturbations against Unauthorized Data Usage in Diffusion-based Image Generation

Dec 25, 2024

Abstract:Diffusion-based text-to-image models have shown immense potential for various image-related tasks. However, despite their prominence and popularity, customizing these models using unauthorized data also brings serious privacy and intellectual property issues. Existing methods introduce protective perturbations based on adversarial attacks, which are applied to the customization samples. In this systematization of knowledge, we present a comprehensive survey of protective perturbation methods designed to prevent unauthorized data usage in diffusion-based image generation. We establish the threat model and categorize the downstream tasks relevant to these methods, providing a detailed analysis of their designs. We also propose a complete evaluation framework for these perturbation techniques, aiming to advance research in this field.

Embedding Watermarks in Diffusion Process for Model Intellectual Property Protection

Oct 29, 2024

Abstract:In practical applications, the widespread deployment of diffusion models often necessitates substantial investment in training. As diffusion models find increasingly diverse applications, concerns about potential misuse highlight the imperative for robust intellectual property protection. Current protection strategies either employ backdoor-based methods, integrating a watermark task as a simpler training objective with the main model task, or embed watermarks directly into the final output samples. However, the former approach is fragile against existing backdoor defense techniques, while the latter fundamentally alters the expected output. In this work, we introduce a novel watermarking framework that embeds the watermark into the whole diffusion process, and theoretically ensure that our final output samples contain no additional information. Furthermore, we utilize statistical algorithms to verify the watermark from internally generated model samples without necessitating triggers as conditions. Detailed theoretical analysis and experimental validation demonstrate the effectiveness of our proposed method.

GReDP: A More Robust Approach for Differential Privacy Training with Gradient-Preserving Noise Reduction

Sep 18, 2024

Abstract:Deep learning models have been extensively adopted in various fields due to their ability to represent hierarchical features, which relies heavily on the training set and procedures. Thus, protecting the training process and deep learning algorithms is paramount in privacy preservation. Although Differential Privacy (DP) as a powerful cryptographic primitive has achieved satisfying results in deep learning training, the existing schemes still fall short in preserving model utility, i.e., they either invoke a high noise scale or inevitably harm the original gradients. To address the above issues, in this paper, we present a more robust approach for DP training called GReDP. Specifically, we compute the model gradients in the frequency domain and adopt a new approach to reduce the noise level. Unlike previous work, our GReDP requires only half of the noise scale compared to DPSGD [1] while keeping all the gradient information intact. We present a detailed analysis of our method both theoretically and empirically. The experimental results show that our GReDP works consistently better than the baselines on all models and training settings.

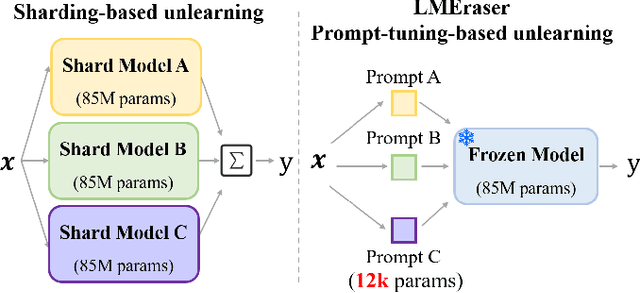

LMEraser: Large Model Unlearning through Adaptive Prompt Tuning

Apr 17, 2024

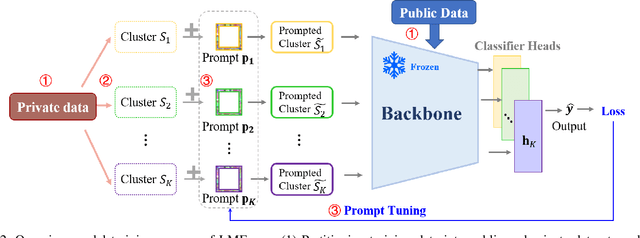

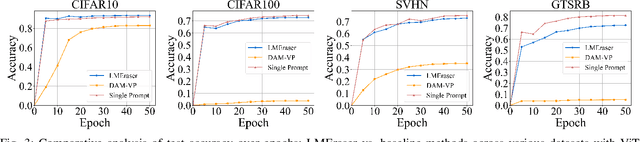

Abstract:To address the growing demand for privacy protection in machine learning, we propose a novel and efficient machine unlearning approach for Large Models, called LMEraser. Existing unlearning research suffers from entangled training data and complex model architectures, incurring extremely high computational costs for large models. LMEraser takes a divide-and-conquer strategy with a prompt tuning architecture to isolate data influence. The training dataset is partitioned into public and private datasets. Public data are used to train the backbone of the model. Private data are adaptively clustered based on their diversity, and each cluster is used to optimize a prompt separately. This adaptive prompt tuning mechanism reduces unlearning costs and maintains model performance. Experiments demonstrate that LMEraser achieves a 100-fold reduction in unlearning costs without compromising accuracy compared to prior work. Our code is available at: https://github.com/lmeraser/lmeraser.
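The divide-and-conquer idea in this abstract, where each private cluster owns its own prompt so that a deletion touches only one cluster, can be sketched with a toy model. This is an illustration under stated assumptions and not the LMEraser implementation: the class `ClusteredPrompts`, the fixed threshold used for clustering, and the use of a cluster mean as a stand-in for a tuned prompt are all hypothetical, and the paper's adaptive, diversity-based clustering is not modeled.

```python
class ClusteredPrompts:
    """Toy model: one 'prompt' per private-data cluster (all names hypothetical)."""

    def __init__(self, private_data, cluster_of):
        self.cluster_of = cluster_of  # maps a data point to its cluster id
        self.clusters = {}
        for x in private_data:
            self.clusters.setdefault(cluster_of(x), []).append(x)
        # Each cluster is "tuned" separately, so its influence stays isolated.
        self.prompts = {c: self._tune(pts) for c, pts in self.clusters.items()}
        self.retuned = []  # cluster ids re-tuned by unlearning requests

    @staticmethod
    def _tune(points):
        # Stand-in for prompt tuning: summarize the cluster by its mean.
        return sum(points) / len(points)

    def unlearn(self, x):
        """Remove x and re-tune only the prompt of the cluster that held it.

        Raises KeyError or ValueError if x was never part of the private data.
        """
        c = self.cluster_of(x)
        self.clusters[c].remove(x)
        if self.clusters[c]:
            self.prompts[c] = self._tune(self.clusters[c])
        else:
            # The cluster is empty, so its prompt is dropped entirely.
            del self.clusters[c]
            del self.prompts[c]
        self.retuned.append(c)


# Hypothetical private data split into two clusters by a fixed threshold.
model = ClusteredPrompts([1.0, 2.0, 3.0, 10.0, 12.0],
                         cluster_of=lambda x: "low" if x < 5 else "high")
model.unlearn(12.0)
```

After the deletion only the `"high"` prompt has changed and the `"low"` prompt is untouched, which is the source of the cost reduction: the amount of re-tuning scales with the affected cluster and not with the whole private dataset.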
