Thanveer Shaik

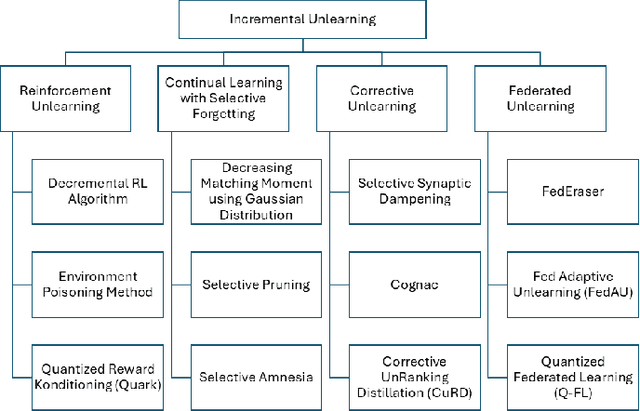

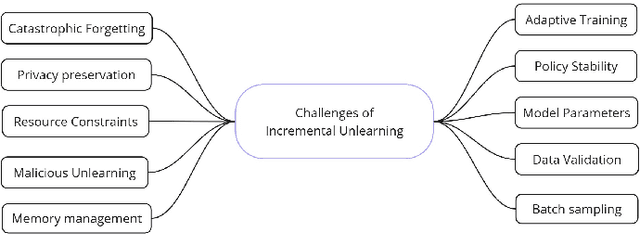

Exploring Incremental Unlearning: Techniques, Challenges, and Future Directions

Feb 23, 2025

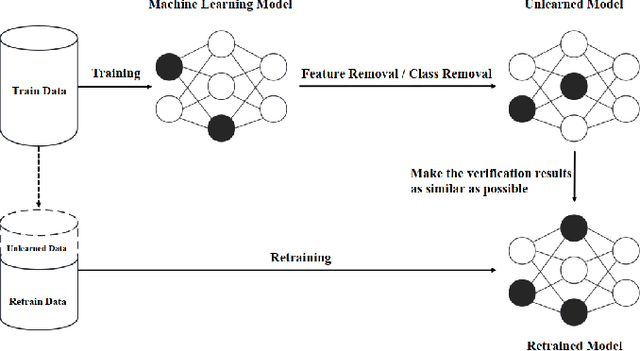

Abstract: The growing demand for data privacy in Machine Learning (ML) applications has seen Machine Unlearning (MU) emerge as a critical area of research. As the 'right to be forgotten' becomes regulated globally, it is increasingly important to develop mechanisms that delete user data from AI systems while maintaining performance and scalability of these systems. Incremental Unlearning (IU) is a promising MU solution to address the challenges of efficiently removing specific data from ML models without the need for expensive and time-consuming full retraining. This paper presents the various techniques and approaches to IU. It explores the challenges faced in designing and implementing IU mechanisms. Datasets and metrics for evaluating the performance of unlearning techniques are discussed as well. Finally, potential solutions to the IU challenges alongside future research directions are offered. This survey provides valuable insights for researchers and practitioners seeking to understand the current landscape of IU and its potential for enhancing privacy-preserving intelligent systems.

FRAMU: Attention-based Machine Unlearning using Federated Reinforcement Learning

Sep 24, 2023

Abstract: Machine Unlearning is an emerging field that addresses data privacy issues by enabling the removal of private or irrelevant data from the Machine Learning process. Challenges related to privacy and model efficiency arise from the use of outdated, private, and irrelevant data. These issues compromise both the accuracy and the computational efficiency of models in both Machine Learning and Unlearning. To mitigate these challenges, we introduce a novel framework, Attention-based Machine Unlearning using Federated Reinforcement Learning (FRAMU). This framework incorporates adaptive learning mechanisms, privacy preservation techniques, and optimization strategies, making it a well-rounded solution for handling various data sources, either single-modality or multi-modality, while maintaining accuracy and privacy. FRAMU's strength lies in its adaptability to fluctuating data landscapes, its ability to unlearn outdated, private, or irrelevant data, and its support for continual model evolution without compromising privacy. Our experiments, conducted on both single-modality and multi-modality datasets, revealed that FRAMU significantly outperformed baseline models. Additional assessments of convergence behavior and optimization strategies further validate the framework's utility in federated learning applications. Overall, FRAMU advances Machine Unlearning by offering a robust, privacy-preserving solution that optimizes model performance while also addressing key challenges in dynamic data environments.

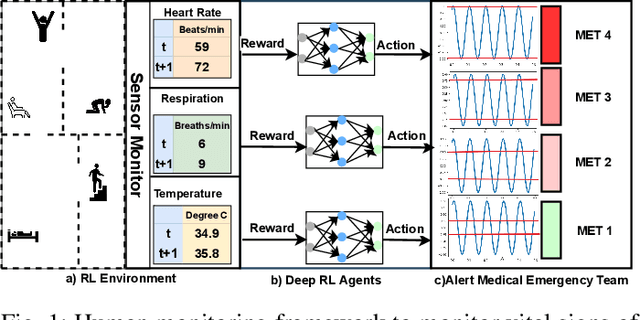

AI-Driven Patient Monitoring with Multi-Agent Deep Reinforcement Learning

Sep 24, 2023

Abstract: Effective patient monitoring is vital for timely interventions and improved healthcare outcomes. Traditional monitoring systems often struggle to handle complex, dynamic environments with fluctuating vital signs, leading to delays in identifying critical conditions. To address this challenge, we propose a novel AI-driven patient monitoring framework using multi-agent deep reinforcement learning (DRL). Our approach deploys multiple learning agents, each dedicated to monitoring a specific physiological feature, such as heart rate, respiration, and temperature. These agents interact with a generic healthcare monitoring environment, learn the patients' behavior patterns, and make informed decisions to alert the corresponding Medical Emergency Teams (METs) based on the estimated level of emergency. In this study, we evaluate the performance of the proposed multi-agent DRL framework using real-world physiological and motion data from two datasets: PPG-DaLiA and WESAD. We compare the results with several baseline models, including Q-Learning, PPO, Actor-Critic, Double DQN, and DDPG, as well as monitoring frameworks like WISEML and CA-MAQL. Our experiments demonstrate that the proposed DRL approach outperforms all baseline models, achieving more accurate monitoring of patients' vital signs. Furthermore, we conduct hyperparameter optimization to fine-tune the learning process of each agent. By optimizing hyperparameters such as the learning rate and discount factor, we improve the agents' overall performance in monitoring patient health status. Our AI-driven patient monitoring system offers several advantages over traditional methods, including the ability to handle complex and uncertain environments, adapt to varying patient conditions, and make real-time decisions without external supervision.

QXAI: Explainable AI Framework for Quantitative Analysis in Patient Monitoring Systems

Sep 20, 2023

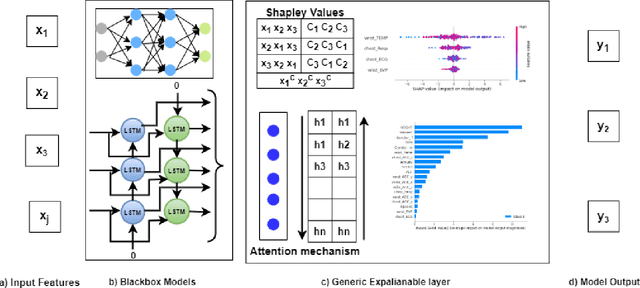

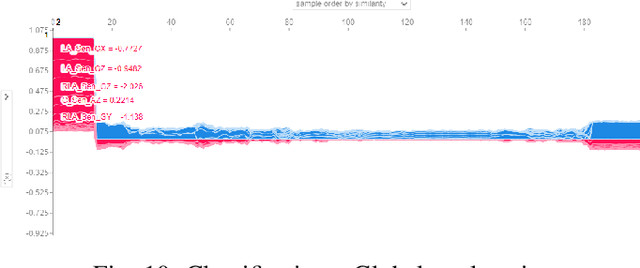

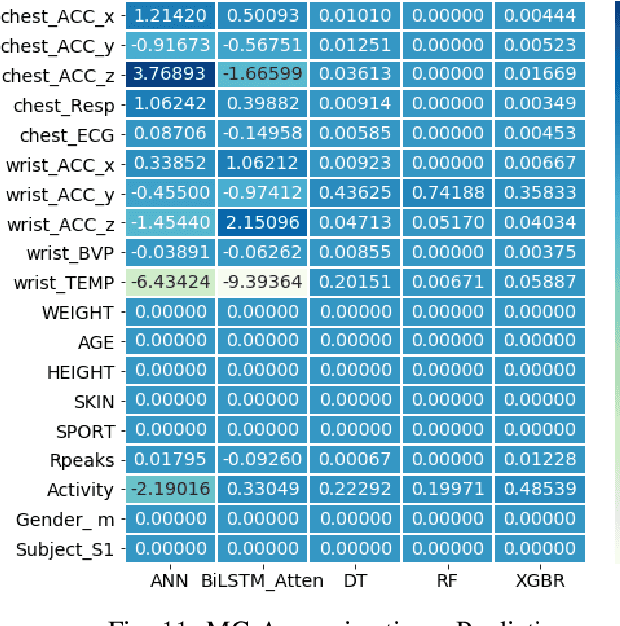

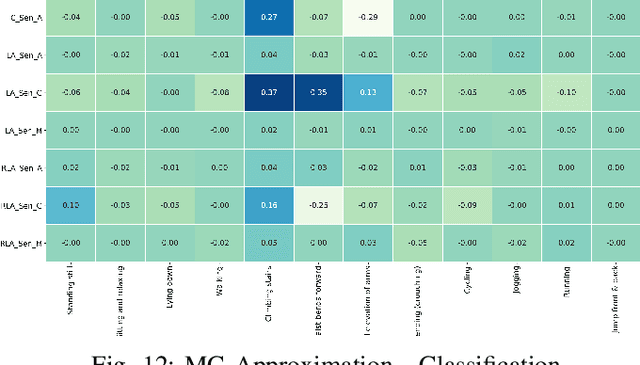

Abstract: Artificial Intelligence techniques can be used to classify a patient's physical activities and predict vital signs for remote patient monitoring. Regression analysis based on non-linear models like deep learning models has limited explainability due to its black-box nature. This can require decision-makers to make blind leaps of faith based on non-linear model results, especially in healthcare applications. In non-invasive monitoring, patient data from tracking sensors and their predisposing clinical attributes act as input features for predicting future vital signs. Explaining the contributions of various features to the overall output of the monitoring application is critical for a clinician's decision-making. In this study, an Explainable AI for Quantitative analysis (QXAI) framework is proposed with post-hoc model explainability and intrinsic explainability for regression and classification tasks in a supervised learning approach. This was achieved by utilizing the Shapley values concept and incorporating attention mechanisms in deep learning models. We adopted artificial neural network (ANN) and attention-based Bidirectional LSTM (BiLSTM) models for the prediction of heart rate and classification of physical activities based on sensor data. The deep learning models achieved state-of-the-art results in both prediction and classification tasks. Global and local explanations were conducted on input data to understand the feature contribution of various patient data. The proposed QXAI framework was evaluated using PPG-DaLiA data to predict heart rate and mobile health (MHEALTH) data to classify physical activities based on sensor data. Monte Carlo approximation was applied to the framework to overcome the time complexity and high computational cost of Shapley value calculations.
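The Monte Carlo approximation of Shapley values mentioned in the abstract above can be illustrated with a minimal permutation-sampling sketch. This is not the QXAI implementation: the function `monte_carlo_shapley`, the `toy_model`, the all-zero baseline, and the sample count are hypothetical choices made only to show the general idea of averaging marginal contributions over random feature orderings instead of enumerating all of them.

```python
import random


def monte_carlo_shapley(model, x, baseline, n_samples=2000, seed=0):
    """Estimate Shapley values by sampling random feature orderings.

    model    : callable taking a list of feature values, returning a float
    x        : the instance to explain
    baseline : reference values that stand in for "absent" features (assumption)
    """
    rng = random.Random(seed)
    n_features = len(x)
    totals = [0.0] * n_features
    for _ in range(n_samples):
        order = list(range(n_features))
        rng.shuffle(order)
        current = list(baseline)      # start with every feature "absent"
        previous_output = model(current)
        for i in order:
            current[i] = x[i]         # switch feature i to its real value
            new_output = model(current)
            totals[i] += new_output - previous_output  # marginal contribution
            previous_output = new_output
    return [total / n_samples for total in totals]


def toy_model(features):
    # Hypothetical model with an interaction between features 0 and 1.
    return features[0] * features[1] + features[2]


phi = monte_carlo_shapley(toy_model, x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print(phi)
```

Each sampled ordering costs one model call per feature, so the total cost grows with the number of samples rather than with the factorial number of orderings. Within every ordering the contributions telescope, so the estimates always sum to the difference between the model output at `x` and at the baseline.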

Clustered FedStack: Intermediate Global Models with Bayesian Information Criterion

Sep 20, 2023

Abstract: Federated Learning (FL) is currently one of the most popular technologies in the field of Artificial Intelligence (AI) due to its collaborative learning and ability to preserve client privacy. However, it faces challenges such as non-independent and identically distributed (non-IID) data and imbalanced labels among local clients. To address these limitations, the research community has explored various approaches such as using local model parameters, federated generative adversarial learning, and federated representation learning. In our study, we propose a novel Clustered FedStack framework based on the previously published Stacked Federated Learning (FedStack) framework. The local clients send their model predictions and output layer weights to a server, which then builds a robust global model. This global model clusters the local clients based on their output layer weights using a clustering mechanism. We adopt three clustering mechanisms, namely K-Means, Agglomerative, and Gaussian Mixture Models, into the framework and evaluate their performance. We use the Bayesian Information Criterion (BIC) with the maximum likelihood function to determine the number of clusters. The Clustered FedStack models outperform baseline models with clustering mechanisms. To estimate the convergence of our proposed framework, we use cyclical learning rates.
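As a rough illustration of choosing the number of clusters with BIC, the sketch below scores candidate cluster counts with BIC = p ln(n) - 2 ln(L) and keeps the lowest score. It is not the Clustered FedStack code: the 1-D toy data stands in for client output-layer weights, and the helper names, the quantile-based k-means initialization, and the shared-variance Gaussian likelihood are all assumptions made to keep the example dependency-free.

```python
import math


def kmeans_1d(values, k, iters=50):
    """Lloyd's algorithm on 1-D data with quantile-based initial centers."""
    ordered = sorted(values)
    n = len(ordered)
    centers = [ordered[(2 * j + 1) * n // (2 * k)] for j in range(k)]

    def assign():
        return [min(range(k), key=lambda j: (v - centers[j]) ** 2) for v in values]

    for _ in range(iters):
        labels = assign()
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:  # keep the previous center if a cluster is empty
                centers[j] = sum(members) / len(members)
    return centers, assign()


def bic_for_k(values, k):
    """BIC under a hard-assignment Gaussian model with one shared variance."""
    centers, labels = kmeans_1d(values, k)
    n = len(values)
    sse = sum((v - centers[lab]) ** 2 for v, lab in zip(values, labels))
    variance = max(sse / n, 1e-12)            # maximum-likelihood variance
    counts = [labels.count(j) for j in range(k)]
    log_lik = sum(c * math.log(c / n) for c in counts if c > 0)
    log_lik -= 0.5 * n * (math.log(2 * math.pi * variance) + 1.0)
    n_params = 2 * k                          # k means, k-1 weights, 1 variance
    return n_params * math.log(n) - 2.0 * log_lik


# Hypothetical 1-D stand-in for client weights: three groups near 0, 10 and 20.
offsets = [-1.5, -1.0, -0.6, -0.3, -0.1, 0.1, 0.3, 0.6, 1.0, 1.5]
data = [center + o for center in (0.0, 10.0, 20.0) for o in offsets]

scores = {k: bic_for_k(data, k) for k in range(1, 7)}
best_k = min(scores, key=scores.get)
print(best_k, scores)
```

The `n_params * math.log(n)` term penalizes every extra cluster, which is what stops the likelihood gain from always favoring more clusters.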

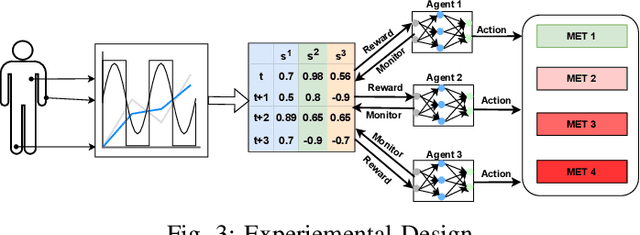

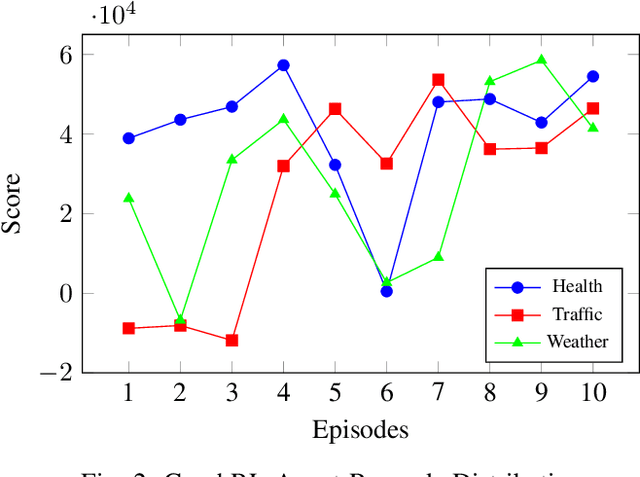

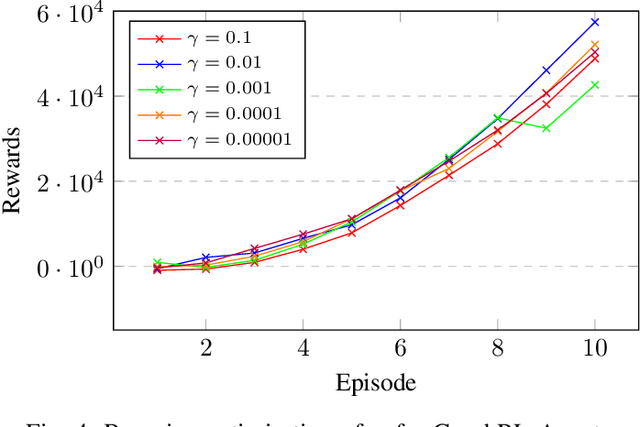

PDRL: Multi-Agent based Reinforcement Learning for Predictive Monitoring

Sep 20, 2023

Abstract: Reinforcement learning has been increasingly applied in monitoring applications because of its ability to learn from previous experiences and make adaptive decisions. However, existing machine learning-based health monitoring applications are mostly supervised learning algorithms trained on labels, and they cannot make adaptive decisions in an uncertain, complex environment. This study proposes a novel and generic system, predictive deep reinforcement learning (PDRL), with multiple RL agents in a time series forecasting environment. The proposed generic framework accommodates virtual Deep Q Network (DQN) agents to monitor predicted future states of a complex environment with a well-defined reward policy so that the agents learn existing knowledge while maximizing their rewards. In the evaluation process of the proposed framework, three DRL agents were deployed to monitor a subject's future heart rate, respiration, and temperature predicted using a BiLSTM model. With each iteration, the three agents were able to learn the associated patterns and their cumulative rewards gradually increased. The framework outperformed the baseline models for all three monitoring agents. The proposed PDRL framework is able to achieve state-of-the-art performance in the time series forecasting process. The proposed DRL agents and deep learning model in the PDRL framework are customized to apply transfer learning to other forecasting applications, such as traffic and weather, and to monitor their states. The PDRL framework is able to learn the future states in traffic and weather forecasting, and the cumulative rewards gradually increase with each episode.

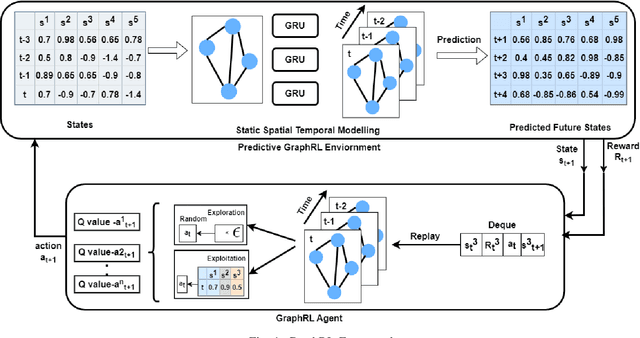

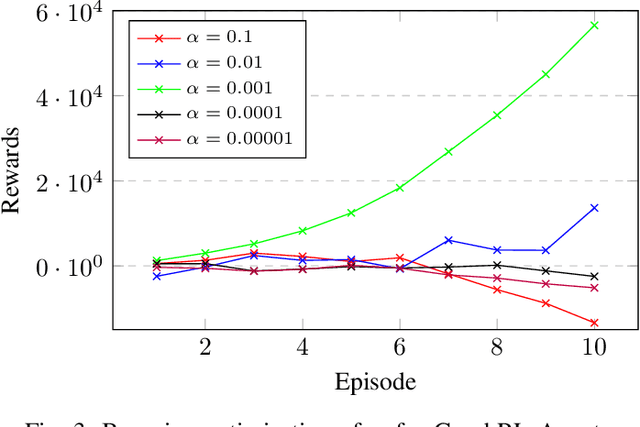

Graph-enabled Reinforcement Learning for Time Series Forecasting with Adaptive Intelligence

Sep 18, 2023

Abstract: Reinforcement learning is well known for its ability to model sequential tasks and learn latent data patterns adaptively. Deep learning models have been widely explored and adopted in regression and classification tasks. However, deep learning has limitations in time-series prediction, such as the assumption of equally spaced and ordered data and the inability to incorporate graph structure. Graph neural networks (GNNs) can overcome these challenges and capture the temporal dependencies in time-series data. In this study, we propose a novel approach for predicting time-series data using GNNs and monitoring with Reinforcement Learning (RL). GNNs are able to explicitly incorporate the graph structure of the data into the model, allowing them to capture temporal dependencies in a more natural way. This approach allows for more accurate predictions in complex temporal structures, such as those found in healthcare, traffic, and weather forecasting. We also fine-tune our GraphRL model using a Bayesian optimisation technique to further improve performance. The proposed framework outperforms the baseline models in time-series forecasting and monitoring. The contributions of this study include the introduction of a novel GraphRL framework for time-series prediction and the demonstration of the effectiveness of GNNs in comparison to traditional deep learning models such as RNNs and LSTMs. Overall, this study demonstrates the potential of GraphRL in providing accurate and efficient predictions in dynamic RL environments.

Multimodality Fusion for Smart Healthcare: a Journey from Data, Information, Knowledge to Wisdom

Jun 25, 2023

Abstract: Multimodal medical data fusion has emerged as a transformative approach in smart healthcare, enabling a comprehensive understanding of patient health and personalized treatment plans. In this paper, a journey from data, information, and knowledge to wisdom (DIKW) is explored through multimodal fusion for smart healthcare. A comprehensive review of multimodal medical data fusion, focusing on the integration of various data modalities, is presented. It explores different approaches such as Feature selection, Rule-based systems, Machine learning, Deep learning, and Natural Language Processing for fusing and analyzing multimodal data. The paper also highlights the challenges associated with multimodal fusion in healthcare. By synthesizing the reviewed frameworks and insights, a generic framework for multimodal medical data fusion is proposed that aligns with the DIKW mechanism. Moreover, it discusses future directions aligned with the four pillars of healthcare: Predictive, Preventive, Personalized, and Participatory approaches based on the DIKW and the generic framework. The components from this comprehensive survey form the foundation for the successful implementation of multimodal fusion in smart healthcare. The findings of this survey can guide researchers and practitioners in leveraging the power of multimodal fusion to revolutionize healthcare and improve patient outcomes.

Exploring the Landscape of Machine Unlearning: A Comprehensive Survey and Taxonomy

May 16, 2023

Abstract: Machine unlearning (MU) is gaining increasing attention due to the need to remove or modify predictions made by machine learning (ML) models. While model training has become more efficient and accurate, unlearning previously learned information has become increasingly significant in fields such as privacy, security, and fairness. This paper presents a comprehensive survey of MU, covering current state-of-the-art techniques and approaches, including data deletion, perturbation, and model updates. In addition, commonly used metrics and datasets are presented. The paper also highlights the challenges that need to be addressed, including attack sophistication, standardization, transferability, interpretability, training data, and resource constraints. The contributions of this paper include discussions about the potential benefits of MU and its future directions. Additionally, the paper emphasizes the need for researchers and practitioners to continue exploring and refining unlearning techniques to ensure that ML models can adapt to changing circumstances while maintaining user trust. The importance of unlearning is further highlighted in making Artificial Intelligence (AI) more trustworthy and transparent, especially with the increasing importance of AI in various domains that involve large amounts of personal user data.

Sentiment analysis and opinion mining on educational data: A survey

Feb 08, 2023

Abstract: Sentiment analysis, also known as opinion mining, is one of the most widely used NLP applications to identify human intentions from their reviews. In the education sector, opinion mining is used to listen to student opinions and enhance their learning-teaching practices pedagogically. With advancements in sentiment annotation techniques and AI methodologies, student comments can be labelled with their sentiment orientation without much human intervention. In this review article, (1) we consider the role of emotional analysis in education from four levels: document level, sentence level, entity level, and aspect level, (2) sentiment annotation techniques including lexicon-based and corpus-based approaches for unsupervised annotations are explored, (3) the role of AI in sentiment analysis with methodologies like machine learning, deep learning, and transformers is discussed, and (4) the impact of sentiment analysis on educational procedures to enhance pedagogy, decision-making, and evaluation is presented. Educational institutions have invested widely in building sentiment analysis tools that process student feedback to draw out opinions and insights. Applications built on sentiment analysis of student feedback are reviewed in this study. Challenges in sentiment analysis like multi-polarity, polysemy, negation words, and opinion spam detection are explored and their trends in the research space are discussed. The future directions of sentiment analysis in education are discussed.
