Xusheng Du

LoD Sketch Extraction from Architectural Models Using Generative AI: Dataset Construction for Multi-Level Architectural Design Generation

Jan 23, 2026

Abstract:For architectural design, representation across multiple Levels of Detail (LoD) is essential for achieving a smooth transition from conceptual massing to detailed modeling. However, traditional LoD modeling processes rely on manual operations that are time-consuming, labor-intensive, and prone to geometric inconsistencies. While the rapid advancement of generative artificial intelligence (AI) has opened new possibilities for generating multi-level architectural models from sketch inputs, its application remains limited by the lack of high-quality paired LoD training data. To address this issue, we propose an automatic LoD sketch extraction framework using generative AI models, which progressively simplifies high-detail architectural models to automatically generate geometrically consistent and hierarchically coherent multi-LoD representations. The proposed framework integrates computer vision techniques with generative AI methods to establish a progressive extraction pipeline that transitions from detailed representations to volumetric abstractions. Experimental results demonstrate that the method maintains strong geometric consistency across LoD levels, achieving SSIM values of 0.7319 and 0.7532 for the transitions from LoD3 to LoD2 and from LoD2 to LoD1, respectively, with corresponding normalized Hausdorff distances of 25.1% and 61.0% of the image diagonal, reflecting controlled geometric deviation during abstraction. These results verify that the proposed framework effectively preserves global structure while achieving progressive semantic simplification across different LoD levels, providing reliable data and technical support for AI-driven multi-level architectural generation and hierarchical modeling.
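
The Hausdorff figures quoted in this abstract are expressed as a fraction of the image diagonal. The following is a minimal sketch of how such a normalized distance can be computed between two sets of edge pixels. The point sets, the image size, and the function names are hypothetical illustrations, not taken from the paper, and the authors' exact evaluation procedure may differ.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def normalized_hausdorff(a, b, width, height):
    """Symmetric Hausdorff distance divided by the image diagonal."""
    d = max(directed_hausdorff(a, b), directed_hausdorff(b, a))
    return d / math.hypot(width, height)

# Hypothetical edge pixels (x, y) from two sketches of a 30 x 40 image.
detailed = [(0, 0), (10, 0), (10, 10), (0, 10)]
simplified = [(0, 0), (10, 0), (10, 25), (0, 10)]

ratio = normalized_hausdorff(detailed, simplified, width=30, height=40)
print(f"{ratio:.1%}")  # share of the image diagonal
```

Dividing by the diagonal makes the value comparable across images of different resolution, which is presumably why the abstract reports percentages rather than pixel counts.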

Sketch-Based Facade Renovation With Generative AI: A Streamlined Framework for Bypassing As-Built Modelling in Industrial Adaptive Reuse

Jan 13, 2026

Abstract:Facade renovation offers a more sustainable alternative to full demolition, yet producing design proposals that preserve existing structures while expressing new intent remains challenging. Current workflows typically require detailed as-built modelling before design, which is time-consuming, labour-intensive, and often involves repeated revisions. To solve this issue, we propose a three-stage framework combining generative artificial intelligence (AI) and vision-language models (VLMs) that directly processes a rough structural sketch and textual descriptions to produce consistent renovation proposals. First, the input sketch is used by a fine-tuned VLM to predict bounding boxes specifying where modifications are needed and which components should be added. Next, a Stable Diffusion model generates detailed sketches of new elements, which are merged with the original outline through a generative inpainting pipeline. Finally, ControlNet is employed to refine the result into a photorealistic image. Experiments on datasets and real industrial buildings indicate that the proposed framework can generate renovation proposals that preserve the original structure while improving facade detail quality. This approach effectively bypasses the need for detailed as-built modelling, enabling architects to rapidly explore design alternatives, iterate on early-stage concepts, and communicate renovation intentions with greater clarity.

Multi-View Depth Consistent Image Generation Using Generative AI Models: Application on Architectural Design of University Buildings

Mar 05, 2025

Abstract:In the early stages of architectural design, shoebox models are typically used as a simplified representation of building structures but require extensive operations to transform them into detailed designs. Generative artificial intelligence (AI) provides a promising solution to automate this transformation, but ensuring multi-view consistency remains a significant challenge. To solve this issue, we propose a novel three-stage consistent image generation framework using generative AI models to generate architectural designs from shoebox model representations. The proposed method enhances state-of-the-art image generation diffusion models to generate multi-view consistent architectural images. We employ ControlNet as the backbone and optimize it to accommodate multi-view inputs of architectural shoebox models captured from predefined perspectives. To ensure stylistic and structural consistency across multi-view images, we propose an image space loss module that incorporates style loss, structural loss, and angle alignment loss. We then use a depth estimation method to extract depth maps from the generated multi-view images. Finally, we use the paired data of the architectural images and depth maps as inputs to improve the multi-view consistency via the depth-aware 3D attention module. Experimental results demonstrate that the proposed framework can generate multi-view architectural images with consistent style and structural coherence from shoebox model inputs.

Sketch-Guided Motion Diffusion for Stylized Cinemagraph Synthesis

Dec 01, 2024

Abstract:Designing stylized cinemagraphs is challenging due to the difficulty in customizing complex and expressive flow motions. To achieve intuitive and detailed control of the generated cinemagraphs, freehand sketches can convey personalized design requirements better than text inputs alone. In this paper, we propose Sketch2Cinemagraph, a sketch-guided framework that enables the conditional generation of stylized cinemagraphs from freehand sketches. Sketch2Cinemagraph adopts text prompts for initial content generation and provides hand-drawn sketch controls for both spatial and motion cues. The latent diffusion model is adopted to generate target stylized landscape images along with realistic versions. Then, a pre-trained object detection model is utilized to segment and obtain masks for the flow regions. We propose a novel latent motion diffusion model to estimate the motion field in the fluid regions of the generated landscape images. The input motion sketches serve as the conditions to control the generated vector fields in the masked fluid regions with the prompt. To synthesize the cinemagraph frames, the pixels within fluid regions are subsequently warped to the target locations for each timestep using a frame generator. The results verify that Sketch2Cinemagraph can generate high-fidelity and aesthetically appealing stylized cinemagraphs with continuous temporal flow from intuitive sketch inputs. We showcase the advantages of Sketch2Cinemagraph through quantitative comparisons against state-of-the-art generation approaches.

Bearing Fault Diagnosis using Graph Sampling and Aggregation Network

Aug 12, 2024

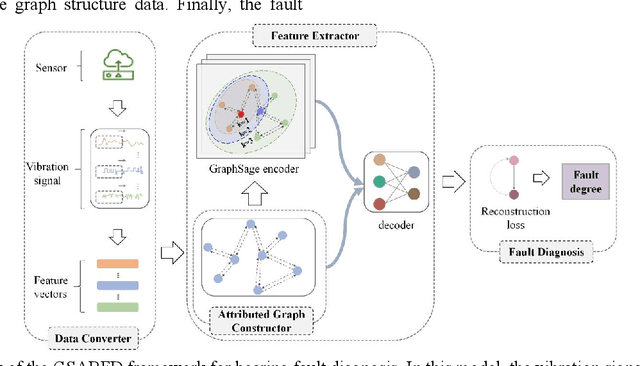

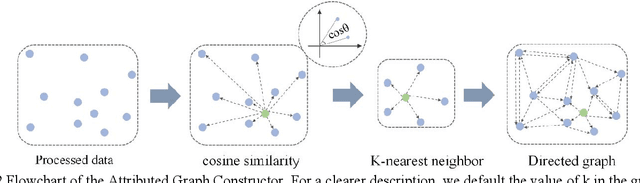

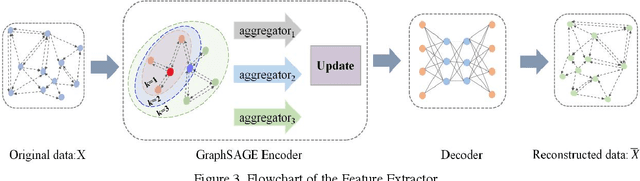

Abstract:Bearing fault diagnosis technology has a wide range of practical applications in industrial production, energy, and other fields. Timely and accurate detection of bearing faults plays an important role in preventing catastrophic accidents and ensuring product quality. Traditional signal analysis techniques and deep learning-based fault detection algorithms do not take into account the intricate correlations between signals, making it difficult to further improve detection accuracy. To address this problem, we introduce the Graph Sampling and Aggregation (GraphSAGE) network and propose the GraphSAGE-based Bearing fault Diagnosis (GSABFD) algorithm. The original vibration signal is first sliced with a fixed-size, non-overlapping sliding window, and the sliced data are feature-transformed using signal analysis methods; correlations are then constructed for the transformed vibration signals, which are further transformed into vertices in a graph; the GraphSAGE network is then trained on this graph; finally, the fault level of each object is calculated in the output layer of the network. The proposed algorithm is compared with five advanced algorithms on a real-world public dataset, and the results show that GSABFD improves the AUC value by 5% compared with the next-best algorithm.
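
The first preprocessing step described in this abstract, slicing with a fixed-size non-overlapping window followed by a per-slice feature transform, can be sketched as below. The window length, the sample values, and the use of RMS as the feature are assumptions made for illustration; the abstract does not say which signal analysis methods are applied or how a trailing remainder is handled.

```python
import math

def slice_signal(signal, window):
    """Split a 1-D signal into consecutive non-overlapping windows.

    Assumption: a trailing remainder shorter than `window` is dropped.
    """
    count = len(signal) // window
    return [signal[i * window:(i + 1) * window] for i in range(count)]

def rms(segment):
    """Root mean square, used here as a stand-in feature transform."""
    return math.sqrt(sum(x * x for x in segment) / len(segment))

# Hypothetical vibration samples; the ninth sample does not fill a window.
signal = [0.0, 3.0, 4.0, 0.0, 1.0, 1.0, 1.0, 1.0, 9.0]
segments = slice_signal(signal, window=4)
features = [rms(s) for s in segments]
print(features)
```

Each feature value would then become one vertex attribute in the graph that the abstract describes.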

Sketch-Guided Scene Image Generation

Jul 09, 2024

Abstract:Text-to-image models have shown an impressive ability to create high-quality and diverse images. Nevertheless, the transition from freehand sketches to complex scene images remains challenging using diffusion models. In this study, we propose a novel sketch-guided scene image generation framework, decomposing the task of scene image generation from sketch inputs into object-level cross-domain generation and scene-level image construction. We employ pre-trained diffusion models to convert each single object drawing into an image of the object, inferring additional details while maintaining the sparse sketch structure. In order to maintain the conceptual fidelity of the foreground during scene generation, we invert the visual features of object images into identity embeddings for scene generation. In scene-level image construction, we generate the latent representation of the scene image using the separated background prompts, and then blend the generated foreground objects according to the layout of the sketch input. To ensure the foreground objects' details remain unchanged while naturally composing the scene image, we infer the scene image on the blended latent representation using a global prompt that includes the trained identity tokens. Through qualitative and quantitative experiments, we demonstrate that the proposed approach surpasses state-of-the-art approaches in generating scene images from hand-drawn sketches.

Generative AI for Architectural Design: A Literature Review

Mar 30, 2024

Abstract:Generative Artificial Intelligence (AI) has pioneered new methodological paradigms in architectural design, significantly expanding the innovative potential and efficiency of the design process. This paper explores the extensive applications of generative AI technologies in architectural design, a trend that has benefited from the rapid development of deep generative models. This article provides a comprehensive review of the basic principles of generative AI and large-scale models and highlights the applications in the generation of 2D images, videos, and 3D models. In addition, by reviewing the latest literature from 2020, this paper scrutinizes the impact of generative AI technologies at different stages of architectural design, from generating initial architectural 3D forms to producing final architectural imagery. The marked trend of research growth indicates an increasing inclination within the architectural design community towards embracing generative AI, thereby catalyzing a shared enthusiasm for research. These research cases and methodologies have not only proven to enhance efficiency and innovation significantly but have also posed challenges to the conventional boundaries of architectural creativity. Finally, we point out new directions for design innovation and articulate fresh trajectories for applying generative AI in the architectural domain. This article provides the first comprehensive literature review about generative AI for architectural design, and we believe this work can facilitate more research work on this significant topic in architecture.

SGDraw: Scene Graph Drawing Interface Using Object-Oriented Representation

Nov 30, 2022

Abstract:Scene understanding is an essential and challenging task in computer vision. To provide the visually fundamental graphical structure of an image, the scene graph has received increased attention due to its powerful semantic representation. However, it is difficult to draw a proper scene graph for image retrieval, image generation, and multi-modal applications. The conventional scene graph annotation interface is not easy to use in image annotations, and the automatic scene graph generation approaches using deep neural networks are prone to generate redundant content while disregarding details. In this work, we propose SGDraw, a scene graph drawing interface using object-oriented scene graph representation to help users draw and edit scene graphs interactively. For the proposed object-oriented representation, we consider the objects, attributes, and relationships of objects as a structural unit. SGDraw provides a web-based scene graph annotation and generation tool for scene understanding applications. To verify the effectiveness of the proposed interface, we conducted a comparison study with the conventional tool and the user experience study. The results show that SGDraw can help generate scene graphs with richer details and describe the images more accurately than traditional bounding box annotations. We believe the proposed SGDraw can be useful in various vision tasks, such as image retrieval and generation.

Graph Neural Network-based Early Bearing Fault Detection

Apr 24, 2022

Abstract:Early detection of faults is important for avoiding catastrophic accidents and ensuring the safe operation of machinery. A novel graph neural network (GNN)-based fault detection method is proposed to build a bridge between AI and real-world running mechanical systems. First, the vibration signals, which are Euclidean-structured data, are converted into a graph (non-Euclidean-structured data), so that the vibration signals, which are originally independent of each other, become correlated with each other. Second, the dataset is input together with its corresponding graph into the GNN for training; the network contains graphs in each hidden layer, enabling it to learn the feature values of each object and its neighbors, and the obtained early features have stronger discriminability. Finally, the top-n objects that are hardest to reconstruct in the output layer of the GNN are determined to be fault objects. Publicly available bearing data are used to verify the effectiveness of the proposed method. We find that the proposed method can successfully detect faulty objects that are mixed in the normal object region.

Fluctuation-based Outlier Detection

Apr 21, 2022

Abstract:Outlier detection is an important topic in machine learning and has been used in a wide range of applications. Outliers are objects that are few in number and deviate from the majority of objects. As a result of these two properties, we show that outliers are susceptible to a mechanism called fluctuation. This article proposes a method called fluctuation-based outlier detection (FBOD) that achieves a low linear time complexity and detects outliers purely based on the concept of fluctuation without employing any distance, density, or isolation measure, which makes it fundamentally different from all existing methods. FBOD first converts Euclidean-structured datasets into graphs by using random links, then propagates the feature values according to the connections of the graph. Finally, by comparing the fluctuation of an object with that of its neighbors, FBOD determines the object with a larger difference to be an outlier. The results of experiments comparing FBOD with seven state-of-the-art algorithms on eight real-world tabular datasets and three video datasets show that FBOD outperforms its competitors in the majority of cases and that FBOD takes only 5% of the execution time of the fastest of those algorithms. The experiment codes are available at: https://github.com/FluctuationOD/Fluctuation-based-Outlier-Detection.
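
The three steps named in this abstract (random links, feature propagation, and comparing an object's fluctuation with that of its neighbors) can be illustrated with a toy one-dimensional sketch. This is not the authors' implementation, which is available in the linked repository. The propagation rule (averaging with neighbors), the definition of fluctuation (absolute change under propagation), and the names `fluctuation_scores`, `k`, and `seed` are all assumptions chosen to keep the example small.

```python
import random

def fluctuation_scores(values, k=3, seed=0):
    """Toy fluctuation-style outlier scores for 1-D data (illustrative only)."""
    rng = random.Random(seed)
    n = len(values)
    # Random links: each object picks k other objects as neighbors.
    neighbors = [
        rng.sample([j for j in range(n) if j != i], k) for i in range(n)
    ]
    # Propagation (assumed rule): average each value with its neighbors.
    propagated = [
        (values[i] + sum(values[j] for j in neighbors[i])) / (k + 1)
        for i in range(n)
    ]
    # Fluctuation (assumed definition): change caused by propagation.
    fluct = [abs(values[i] - propagated[i]) for i in range(n)]
    # Score: gap between an object's fluctuation and its neighbors' mean.
    return [
        abs(fluct[i] - sum(fluct[j] for j in neighbors[i]) / k)
        for i in range(n)
    ]

# Nineteen hypothetical inliers in [0, 1] and one obvious outlier at index 19.
values = [i / 18 for i in range(19)] + [100.0]
scores = fluctuation_scores(values)
suspect = max(range(len(values)), key=scores.__getitem__)
print(suspect)
```

Because each object touches only a fixed number of random neighbors, the cost grows linearly with the number of objects, which is consistent with the linear time complexity the abstract claims.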
