Hao Jin

Sketch-Guided Motion Diffusion for Stylized Cinemagraph Synthesis

Dec 01, 2024

Abstract: Designing stylized cinemagraphs is challenging because complex, expressive flow motions are difficult to customize. Freehand sketches convey personalized design requirements better than text alone and thus allow intuitive, detailed control over the generated cinemagraphs. In this paper, we propose Sketch2Cinemagraph, a sketch-guided framework that enables the conditional generation of stylized cinemagraphs from freehand sketches. Sketch2Cinemagraph adopts text prompts for initial content generation and provides hand-drawn sketch controls for both spatial and motion cues. A latent diffusion model generates the target stylized landscape images along with their realistic versions. A pre-trained object detection model is then used to segment the flow regions and obtain their masks. We propose a novel latent motion diffusion model to estimate the motion field in the fluid regions of the generated landscape images. Together with the prompt, the input motion sketches serve as conditions that control the generated vector fields in the masked fluid regions. To synthesize the cinemagraph frames, a frame generator warps the pixels within the fluid regions to their target locations at each timestep. The results show that Sketch2Cinemagraph can generate high-fidelity, aesthetically appealing stylized cinemagraphs with continuous temporal flow from intuitive sketch inputs. We showcase the advantages of Sketch2Cinemagraph through quantitative comparisons against state-of-the-art generation approaches.
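The warping step can be pictured with a small sketch. The code below is not the Sketch2Cinemagraph frame generator; it only illustrates, under the assumption of a static per-pixel motion field and nearest-neighbour lookup, how a pixel position could be advanced frame by frame with Euler integration. The function name `trace_pixel` and the toy field are hypothetical.

```python
def trace_pixel(motion, start, n_frames):
    """Follow one pixel through a static motion field with Euler steps.

    motion[y][x] is an assumed (dx, dy) displacement per frame.
    Returns the list of (x, y) positions, one per frame, starting at `start`.
    """
    height, width = len(motion), len(motion[0])
    x, y = float(start[0]), float(start[1])
    path = [(x, y)]
    for _ in range(n_frames):
        # Nearest-neighbour lookup, clamped to the image bounds.
        xi = min(max(int(round(x)), 0), width - 1)
        yi = min(max(int(round(y)), 0), height - 1)
        dx, dy = motion[yi][xi]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


# Toy example: a 1x5 "river" flowing one pixel to the right per frame.
uniform_field = [[(1.0, 0.0)] * 5]
path = trace_pixel(uniform_field, (0, 0), 3)
```

In a real pipeline the lookup would typically be bilinear and applied only inside the fluid mask; both are omitted here for brevity.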

RS-MoE: Mixture of Experts for Remote Sensing Image Captioning and Visual Question Answering

Nov 03, 2024

Abstract: Remote Sensing Image Captioning (RSIC) presents unique challenges and plays a critical role in applications. Traditional RSIC methods often struggle to produce rich and diverse descriptions. Recently, with advancements in Vision-Language Models (VLMs), efforts have emerged to integrate these models into the remote sensing domain and to introduce descriptive datasets specifically designed to enhance VLM training. This paper proposes RS-MoE, the first Mixture-of-Experts (MoE) based VLM specifically customized for the remote sensing domain. Unlike traditional MoE models, the core of RS-MoE is the MoE Block, which incorporates a novel Instruction Router and multiple lightweight Large Language Models (LLMs) as expert models. The Instruction Router generates a specific prompt tailored to each LLM, guiding it to focus on a distinct aspect of the RSIC task. This design allows each expert LLM to concentrate on a specific subset of the task, which enhances the specificity and accuracy of the generated captions, and it improves the scalability of the model by facilitating parallel processing of sub-tasks. Additionally, we present a two-stage training strategy for tuning RS-MoE that prevents performance degradation due to sparsity. We fine-tuned our model on the RSICap dataset using the proposed training strategy. Experimental results on the RSICap dataset, along with evaluations on other traditional datasets where no additional fine-tuning was applied, demonstrate that our model achieves state-of-the-art performance in generating precise and contextually relevant captions. Notably, our RS-MoE-1B variant achieves performance comparable to 13B VLMs, demonstrating the efficiency of our model design. Moreover, our model demonstrates promising generalization capabilities by consistently achieving state-of-the-art performance on the Remote Sensing Visual Question Answering (RSVQA) task.

Federated Control in Markov Decision Processes

May 07, 2024

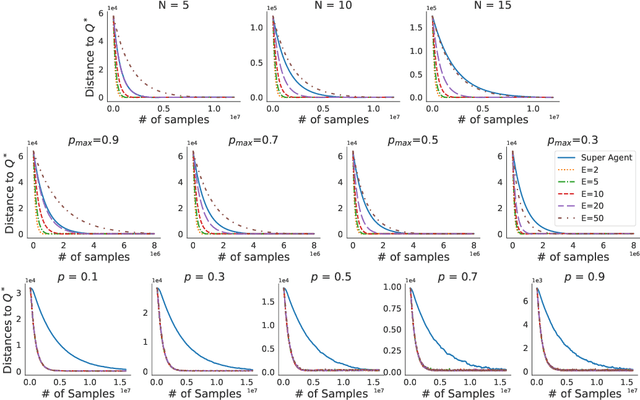

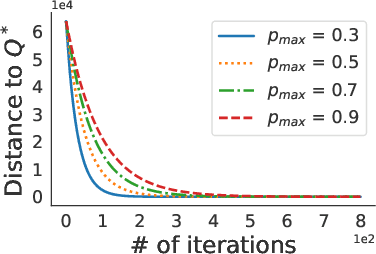

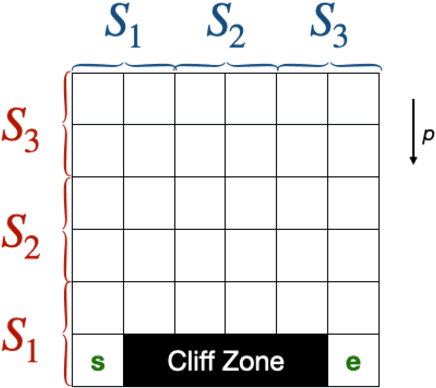

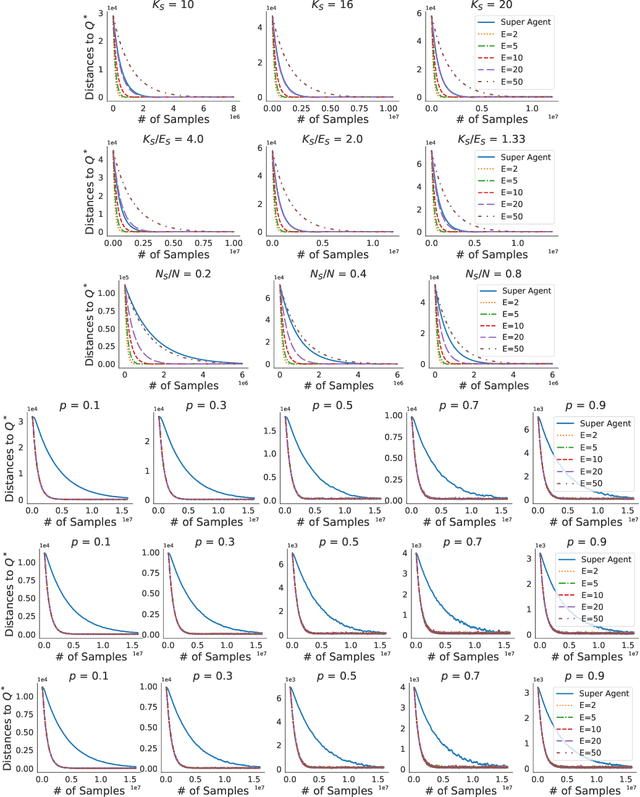

Abstract: We study problems of federated control in Markov Decision Processes (MDPs). To solve an MDP with a large state space, multiple learning agents are introduced to collaboratively learn its optimal policy without communicating their locally collected experience. In our setting, these agents have limited capabilities: each is restricted to a different region of the overall state space during training. To handle the differences among restricted regions, we first introduce the concept of leakage probabilities to understand how such heterogeneity affects the learning process. We then propose a novel communication protocol, the Federated-Q protocol (FedQ), which periodically aggregates the agents' knowledge of their restricted regions and modifies their learning problems accordingly for further training. On the theoretical side, we justify the correctness of FedQ as a communication protocol, give a general result on the sample complexity of the derived algorithms FedQ-X with the RL oracle, and finally conduct a thorough study of the sample complexity of FedQ-SynQ. Specifically, FedQ-X is shown to enjoy a linear speedup in sample complexity when the workload is uniformly distributed among agents. Moreover, we carry out experiments in various environments to demonstrate the efficiency of our methods.

A Semi-automatic Oriental Ink Painting Framework for Robotic Drawing from 3D Models

Aug 26, 2023

Abstract: Creating visually pleasing stylized ink paintings from 3D models is a challenge in robotic manipulation. We propose a semi-automatic framework that extracts expressive strokes from 3D models and draws them in oriental ink painting styles using a robotic arm. The framework consists of a simulation stage and a robotic drawing stage. In the simulation stage, geometrical contours are automatically extracted from a given viewpoint and a neural network is employed to create simplified contours. Expressive digital strokes are then generated through interactive editing according to the user's aesthetic understanding. In the robotic drawing stage, an optimization method is presented for drawing smooth and physically consistent strokes that match the digital strokes, and two oriental ink painting styles, termed Noutan (shade) and Kasure (scratchiness), are applied to the strokes through robotic control of the brush's translation, dipping, and scraping. Unlike existing methods that concentrate on generating paintings from 2D images, our framework renders stylized ink paintings from 3D models using a consumer-grade robotic arm. We evaluate the proposed framework on 3 standard models and a user-defined model. The results show that our framework is able to draw visually pleasing oriental ink paintings with expressive strokes.

Federated Reinforcement Learning with Environment Heterogeneity

Apr 06, 2022

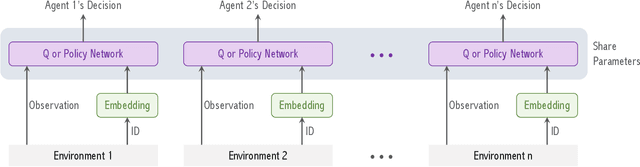

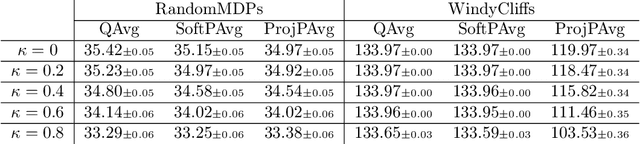

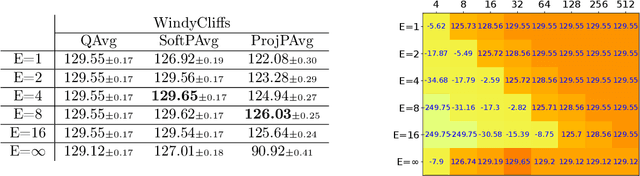

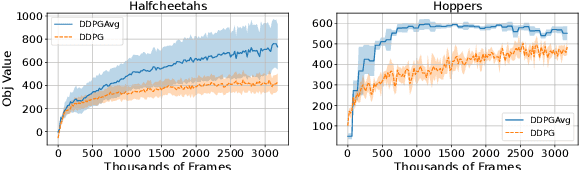

Abstract: We study a Federated Reinforcement Learning (FedRL) problem in which $n$ agents collaboratively learn a single policy without sharing the trajectories they collected during agent-environment interaction. We stress the constraint of environment heterogeneity, which means that the $n$ environments corresponding to these $n$ agents have different state transitions. To obtain a value function or a policy function that optimizes the overall performance across all environments, we propose two federated RL algorithms, \texttt{QAvg} and \texttt{PAvg}. We theoretically prove that these algorithms converge to suboptimal solutions, where the degree of suboptimality depends on how heterogeneous the $n$ environments are. Moreover, we propose a heuristic that achieves personalization by embedding the $n$ environments into $n$ vectors. The personalization heuristic not only improves training but also allows for better generalization to new environments.
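To make the idea of learning one value function across heterogeneous environments concrete, here is a minimal tabular sketch. It is an assumption about what Q-table averaging could look like, not the authors' \texttt{QAvg} algorithm: each agent applies Bellman backups using its own transition model, and a server averages the resulting tables. All names (`federated_q_averaging`, `env_a`, `env_b`) and the toy MDP are hypothetical.

```python
def local_bellman_update(q, transitions, rewards, gamma):
    """One synchronous Bellman optimality backup on a tabular Q function.

    transitions[s][a][s2] is the probability of moving from s to s2 under a.
    """
    n_states, n_actions = len(q), len(q[0])
    new_q = [[0.0] * n_actions for _ in range(n_states)]
    for s in range(n_states):
        for a in range(n_actions):
            expected_next = sum(
                transitions[s][a][s2] * max(q[s2]) for s2 in range(n_states)
            )
            new_q[s][a] = rewards[s][a] + gamma * expected_next
    return new_q


def average_tables(tables):
    """Element-wise mean of several Q tables of identical shape."""
    n = len(tables)
    return [
        [sum(t[s][a] for t in tables) / n for a in range(len(tables[0][0]))]
        for s in range(len(tables[0]))
    ]


def federated_q_averaging(env_transitions, rewards, gamma=0.9, rounds=200,
                          local_steps=1):
    """Alternate local backups (one agent per environment) with averaging."""
    shared_q = [[0.0] * len(rewards[0]) for _ in rewards]
    for _ in range(rounds):
        local_tables = []
        for transitions in env_transitions:
            q = shared_q
            for _ in range(local_steps):
                q = local_bellman_update(q, transitions, rewards, gamma)
            local_tables.append(q)
        shared_q = average_tables(local_tables)  # only tables are shared
    return shared_q


# Toy setup: 2 states, 2 actions; action 0 stays, action 1 tries to switch.
# The two environments differ only in how reliably "switch" succeeds.
env_a = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
env_b = [[[1.0, 0.0], [0.5, 0.5]], [[0.0, 1.0], [0.5, 0.5]]]
rewards = [[0.0, 0.0], [1.0, 1.0]]  # reward 1 whenever the agent is in state 1
q_shared = federated_q_averaging([env_a, env_b], rewards)
```

With one local step per round, the averaged table behaves like value iteration on the averaged transition model, which gives some intuition for why the result is a compromise across environments rather than optimal for any single one.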

Meta-Regularization: An Approach to Adaptive Choice of the Learning Rate in Gradient Descent

Apr 12, 2021

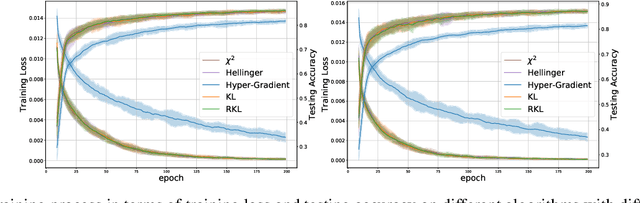

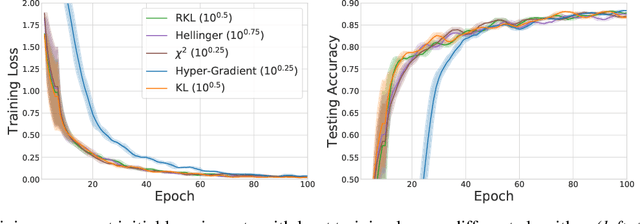

Abstract: We propose \textit{Meta-Regularization}, a novel approach for the adaptive choice of the learning rate in first-order gradient descent methods. Our approach modifies the objective function by adding a regularization term on the learning rate, and casts the joint updating process of parameters and learning rates into a max-min problem. Given any regularization term, our approach facilitates the generation of practical algorithms. When \textit{Meta-Regularization} takes the $\varphi$-divergence as a regularizer, the resulting algorithms exhibit theoretical convergence performance comparable to that of other first-order gradient-based algorithms. Furthermore, we theoretically prove that some well-designed regularizers can improve the convergence performance under the strong-convexity condition of the objective function. Numerical experiments on benchmark problems demonstrate the effectiveness of algorithms derived from some common $\varphi$-divergences in full-batch as well as online learning settings.

Natural Language Adversarial Attacks and Defenses in Word Level

Sep 24, 2019

Abstract: Over the past two years, inspired by the large body of research on adversarial examples in computer vision, there has been growing interest in adversarial attacks for Natural Language Processing (NLP). Only a few works on adversarial defense for NLP have followed. In particular, no defense method exists against the successful synonym-substitution-based attacks, which aim to satisfy all lexical, grammatical, and semantic constraints and are thus hard for humans to perceive. To fill this gap, we postulate that the generalization of the model leads to the existence of adversarial examples, and we propose an adversarial defense method called Synonyms Encoding Method (SEM), which inserts an encoder before the input layer of the model and then trains the model to eliminate adversarial perturbations. Extensive experiments demonstrate that SEM can efficiently defend against the current best synonym-substitution-based adversarial attacks with almost no loss of accuracy on benign examples. To better evaluate SEM, we also propose a strong attack method called Improved Genetic Algorithm (IGA), which applies the genetic metaheuristic to synonym-substitution-based attacks. Compared with the existing genetic-based adversarial attack, the proposed IGA achieves a higher attack success rate while maintaining the transferability of adversarial examples.
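The idea of placing an encoder before the input layer can be illustrated with a toy example. The sketch below is an assumption, not the SEM procedure itself: the abstract does not say how synonym clusters are built, so a hand-written synonym table stands in for that step, and the names `build_synonym_encoder` and `encode_tokens` are hypothetical. It shows how mapping every word in a synonym group to one shared code makes a synonym substitution invisible to the downstream model.

```python
def build_synonym_encoder(synonym_groups):
    """Map every word in a group to that group's first word (its shared code)."""
    encoder = {}
    for group in synonym_groups:
        code = group[0]
        for word in group:
            # Keep the first assignment if a word appears in several groups.
            encoder.setdefault(word, code)
    return encoder


def encode_tokens(tokens, encoder):
    """Replace each token by its code; unknown tokens pass through unchanged."""
    return [encoder.get(token, token) for token in tokens]


# Hypothetical synonym table, written by hand for illustration only.
encoder = build_synonym_encoder([
    ["good", "great", "fine"],
    ["movie", "film", "picture"],
])

benign = ["a", "good", "movie"]
attacked = ["a", "fine", "picture"]  # synonym-substitution perturbation
encoded_benign = encode_tokens(benign, encoder)
encoded_attacked = encode_tokens(attacked, encoder)
```

Because both inputs reach the model as the same token sequence, the model's prediction cannot differ between them; the cost is that distinctions between words in the same group are lost.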

Towards Better Generalization: BP-SVRG in Training Deep Neural Networks

Aug 18, 2019

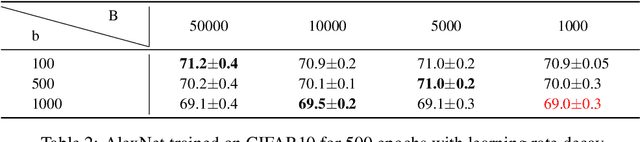

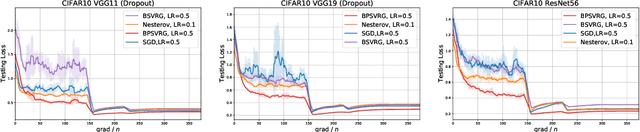

Abstract: Stochastic variance-reduced gradient (SVRG) is a classical optimization method. Although it is theoretically proven to have better convergence performance than stochastic gradient descent (SGD), the generalization performance of SVRG remains an open question. In this paper, we investigate the effects of two training techniques, mini-batching and learning rate decay, on the generalization performance of SVRG, and verify the generalization performance of Batch-SVRG (B-SVRG). Regarding the relationship between optimization and generalization, we believe that the average norm of the gradients on each training sample, as well as the norm of the average gradient, indicate how flat the landscape is and how well the model generalizes. Based on empirical observations of these metrics, we perform a sign switch on B-SVRG and derive a practical algorithm, BatchPlus-SVRG (BP-SVRG), which is numerically shown to enjoy better generalization performance than B-SVRG, and even than SGD in some deep neural network scenarios.
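For readers unfamiliar with the baseline, the following is a minimal sketch of classical SVRG on a one-parameter least-squares problem. It is not B-SVRG or BP-SVRG: mini-batching, learning rate decay, and the sign switch mentioned above are not reproduced, since the abstract does not specify them. The function name, step size, and toy data are assumptions chosen for illustration.

```python
import random


def svrg_least_squares(xs, ys, step=0.1, epochs=30, inner_steps=None, seed=0):
    """Fit y ~ w * x by minimising the mean of 0.5 * (w * x_i - y_i)^2 with SVRG."""
    rng = random.Random(seed)
    n = len(xs)
    inner_steps = inner_steps or 2 * n

    def grad_i(w, i):
        # Gradient of the i-th sample's loss with respect to w.
        return (w * xs[i] - ys[i]) * xs[i]

    w = 0.0
    for _ in range(epochs):
        # Snapshot the iterate and compute the full gradient once per epoch.
        w_snap = w
        full_grad = sum(grad_i(w_snap, i) for i in range(n)) / n
        for _ in range(inner_steps):
            i = rng.randrange(n)
            # Variance-reduced estimate: unbiased, and its variance shrinks
            # as w and w_snap approach the minimiser.
            w -= step * (grad_i(w, i) - grad_i(w_snap, i) + full_grad)
    return w


# Toy data generated from y = 3 * x, so the minimiser is w = 3.
xs = [0.5, 1.0, 1.5, 2.0]
ys = [3.0 * x for x in xs]
w_hat = svrg_least_squares(xs, ys)
```

The key difference from SGD is the correction term `- grad_i(w_snap, i) + full_grad`, which is what allows a constant step size without the gradient noise stalling convergence.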
