Jiajun He

Assessing generative modeling approaches for free energy estimates in condensed matter

Dec 30, 2025

Abstract:The accurate estimation of free energy differences between two states is a long-standing challenge in molecular simulations. Traditional approaches generally rely on sampling multiple intermediate states to ensure sufficient overlap in phase space and are, consequently, computationally expensive. Several generative-model-based methods have recently addressed this challenge by learning a direct bridge between distributions, bypassing the need for intermediate states. However, it remains unclear which approaches provide the best trade-off between efficiency, accuracy, and scalability. In this work, we systematically review these methods and benchmark selected approaches with a focus on condensed-matter systems. In particular, we investigate the performance of discrete and continuous normalizing flows in the context of targeted free energy perturbation as well as FEAT (Free energy Estimators with Adaptive Transport) together with the escorted Jarzynski equality, using coarse-grained monatomic ice and Lennard-Jones solids as benchmark systems. We evaluate accuracy, data efficiency, computational cost, and scalability with system size. Our results provide a quantitative framework for selecting effective free energy estimation strategies in condensed-phase systems.
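As a rough illustration of the targeted free energy perturbation idea mentioned in this abstract, the sketch below compares plain FEP with a targeted variant on a toy problem. It is not the paper's benchmark or code: the two 1D harmonic wells, the hand-picked linear map `M(x) = scale * x` (standing in for a learned normalizing flow), and all function names are assumptions made for illustration.

```python
import math
import random

def harmonic(k):
    """Return the potential U(x) = 0.5 * k * x**2 for spring constant k."""
    return lambda x: 0.5 * k * x * x

def log_mean_exp(values):
    """Numerically stable log of the mean of exp(values)."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))

def fep(samples_a, u_a, u_b, beta=1.0):
    """Plain FEP: dF = -(1/beta) * log E_A[exp(-beta * (U_B - U_A))]."""
    log_w = [-beta * (u_b(x) - u_a(x)) for x in samples_a]
    return -log_mean_exp(log_w) / beta

def targeted_fep(samples_a, u_a, u_b, scale, beta=1.0):
    """Targeted FEP with the linear map M(x) = scale * x.

    The map is a hypothetical stand-in for a learned flow; its
    log-Jacobian determinant is log|scale|.
    """
    log_jac = math.log(abs(scale))
    log_w = [-beta * (u_b(scale * x) - u_a(x)) + log_jac for x in samples_a]
    return -log_mean_exp(log_w) / beta

beta, k_a, k_b = 1.0, 1.0, 4.0
rng = random.Random(0)
# Exact equilibrium samples from state A (a Gaussian for a harmonic well).
samples = [rng.gauss(0.0, 1.0 / math.sqrt(beta * k_a)) for _ in range(50_000)]
u_a, u_b = harmonic(k_a), harmonic(k_b)

exact = 0.5 / beta * math.log(k_b / k_a)  # analytic result for this toy system
df_fep = fep(samples, u_a, u_b, beta)
df_tfep = targeted_fep(samples, u_a, u_b, math.sqrt(k_a / k_b), beta)
print(exact, df_fep, df_tfep)
```

In this toy case the map transports state A exactly onto state B, so every sample carries the same weight and the targeted estimate has no sampling noise. Plain FEP on the same samples still fluctuates around the analytic value.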

Pinching-Antenna System-Assisted Localization: A Stochastic Geometry Perspective

Nov 19, 2025

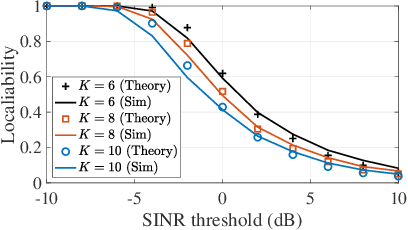

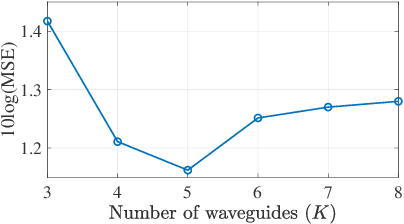

Abstract:This paper proposes a novel localization framework underpinned by a pinching-antenna (PA) system, in which the target location is estimated using received signal strength (RSS) measurements obtained from downlink signals transmitted by the PAs. To develop a comprehensive analytical framework, we employ stochastic geometry to model the spatial distribution of the PAs, enabling tractable and insightful network-level performance analysis. Closed-form expressions for target localizability and the Cramér-Rao lower bound (CRLB) distribution are analytically derived, enabling the evaluation of the fundamental limits of PA-assisted localization systems without extensive simulations. Furthermore, the proposed framework provides practical guidance for selecting the optimal waveguide number to maximize localization performance. Numerical results also highlight the superiority of the PA-assisted approach over conventional fixed-antenna systems in terms of the CRLB.

Adversarial Learning-Based Radio Map Reconstruction for Fingerprinting Localization

Nov 18, 2025

Abstract:This letter presents a feature-guided adversarial framework, namely ComGAN, which is designed to reconstruct an incomplete fingerprint database by inferring missing received signal strength (RSS) values at unmeasured reference points (RPs). An auxiliary subnetwork is integrated into a conditional generative adversarial network (cGAN) to enable spatial feature learning. An optimization method is then developed to refine the RSS predictions by aggregating multiple prediction sets, achieving improved localization performance. Experimental results demonstrate that the proposed scheme achieves a root mean squared error (RMSE) comparable to the ground-truth measurements while outperforming state-of-the-art reconstruction methods. When the reconstructed fingerprint is combined with measured data for training, the fingerprinting localization achieves accuracy comparable to models trained on fully measured datasets.

PARCO: Phoneme-Augmented Robust Contextual ASR via Contrastive Entity Disambiguation

Sep 04, 2025

Abstract:Automatic speech recognition (ASR) systems struggle with domain-specific named entities, especially homophones. Contextual ASR improves recognition but often fails to capture fine-grained phoneme variations due to limited entity diversity. Moreover, prior methods treat entities as independent tokens, leading to incomplete multi-token biasing. To address these issues, we propose Phoneme-Augmented Robust Contextual ASR via COntrastive entity disambiguation (PARCO), which integrates phoneme-aware encoding, contrastive entity disambiguation, entity-level supervision, and hierarchical entity filtering. These components enhance phonetic discrimination, ensure complete entity retrieval, and reduce false positives under uncertainty. Experiments show that PARCO achieves a CER of 4.22% on Chinese AISHELL-1 and a WER of 11.14% on English DATA2 under 1,000 distractors, significantly outperforming baselines. PARCO also demonstrates robust gains on out-of-domain datasets such as THCHS-30 and LibriSpeech.

RNE: a plug-and-play framework for diffusion density estimation and inference-time control

Jun 06, 2025

Abstract:In this paper, we introduce the Radon-Nikodym Estimator (RNE), a flexible, plug-and-play framework for diffusion inference-time density estimation and control, based on the concept of the density ratio between path distributions. RNE connects and unifies a variety of existing density estimation and inference-time control methods under a single, intuitive perspective that stems from basic variational inference and probabilistic principles, offering both theoretical clarity and practical versatility. Experiments demonstrate that RNE achieves promising performance in diffusion density estimation and inference-time control tasks, including annealing, composition of diffusion models, and reward-tilting.

Progressive Tempering Sampler with Diffusion

Jun 05, 2025

Abstract:Recent research has focused on designing neural samplers that amortize the process of sampling from unnormalized densities. However, despite significant advancements, they still fall short of the state-of-the-art MCMC approach, Parallel Tempering (PT), when it comes to the efficiency of target evaluations. On the other hand, unlike a well-trained neural sampler, PT yields only dependent samples and needs to be rerun -- at considerable computational cost -- whenever new samples are required. To address these weaknesses, we propose the Progressive Tempering Sampler with Diffusion (PTSD), which trains diffusion models sequentially across temperatures, leveraging the advantages of PT to improve the training of neural samplers. We also introduce a novel method to combine high-temperature diffusion models to generate approximate lower-temperature samples, which are minimally refined using MCMC and used to train the next diffusion model. PTSD enables efficient reuse of sample information across temperature levels while generating well-mixed, uncorrelated samples. Our method significantly improves target evaluation efficiency, outperforming diffusion-based neural samplers.
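For readers unfamiliar with the Parallel Tempering baseline referenced in this abstract, the following is a minimal sketch of textbook PT on a toy target. It is not PTSD and not the authors' implementation: the double-well potential, the temperature ladder, the step size, and the function names are all illustrative assumptions.

```python
import math
import random

def potential(x):
    """Toy double-well potential with minima near x = -1 and x = +1."""
    return 8.0 * (x * x - 1.0) ** 2

def swap_accept_prob(beta_i, beta_j, u_i, u_j):
    """Metropolis probability of exchanging the states of two replicas."""
    log_ratio = (beta_i - beta_j) * (u_i - u_j)
    return 1.0 if log_ratio >= 0 else math.exp(log_ratio)

def parallel_tempering(betas, n_steps, step_size=0.5, seed=0):
    """Run PT and return the (dependent) samples of the replica at betas[0]."""
    rng = random.Random(seed)
    states = [1.0 for _ in betas]  # all replicas start in the right-hand well
    cold_samples = []
    for _ in range(n_steps):
        # Local random-walk Metropolis move in each replica.
        for r, beta in enumerate(betas):
            proposal = states[r] + rng.gauss(0.0, step_size)
            log_acc = -beta * (potential(proposal) - potential(states[r]))
            if log_acc >= 0 or rng.random() < math.exp(log_acc):
                states[r] = proposal
        # Attempt one swap between a randomly chosen pair of neighbours.
        r = rng.randrange(len(betas) - 1)
        p = swap_accept_prob(betas[r], betas[r + 1],
                             potential(states[r]), potential(states[r + 1]))
        if rng.random() < p:
            states[r], states[r + 1] = states[r + 1], states[r]
        cold_samples.append(states[0])
    return cold_samples

betas = [1.0, 0.5, 0.25, 0.1]  # betas[0] is the target (coldest) replica
samples = parallel_tempering(betas, n_steps=20_000)
frac_left = sum(x < 0 for x in samples) / len(samples)
print(frac_left)
```

The hot replicas cross the barrier easily and pass those configurations down the ladder through swaps, which is how the cold replica reaches both wells. The returned samples are successive states of one chain, so they are correlated, consistent with the abstract's remark that PT yields only dependent samples.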

Integrated Image Reconstruction and Target Recognition based on Deep Learning Technique

May 07, 2025

Abstract:Computational microwave imaging (CMI) has gained attention as an alternative to conventional microwave imaging techniques, addressing limitations such as a hardware-intensive physical layer and slow data acquisition. Despite these advantages, CMI still encounters notable computational bottlenecks, especially during the image reconstruction stage. In this setting, both image recovery and object classification present significant processing demands. To address these challenges, our previous work introduced ClassiGAN, which is a generative deep learning model designed to simultaneously reconstruct images and classify targets using only back-scattered signals. In this study, we build upon that framework by incorporating attention gate modules into ClassiGAN. These modules are intended to refine feature extraction and improve the identification of relevant information. By dynamically focusing on important features and suppressing irrelevant ones, the attention mechanism enhances the overall model performance. The proposed architecture, named Att-ClassiGAN, significantly reduces the reconstruction time compared to traditional CMI approaches. Furthermore, it outperforms current advanced methods, delivering improved Normalized Mean Squared Error (NMSE), higher Structural Similarity Index (SSIM), and better classification outcomes for the reconstructed targets.

FEAT: Free energy Estimators with Adaptive Transport

Apr 15, 2025

Abstract:We present Free energy Estimators with Adaptive Transport (FEAT), a novel framework for free energy estimation -- a critical challenge across scientific domains. FEAT leverages learned transports implemented via stochastic interpolants and provides consistent, minimum-variance estimators based on escorted Jarzynski equality and controlled Crooks theorem, alongside variational upper and lower bounds on free energy differences. Unifying equilibrium and non-equilibrium methods under a single theoretical framework, FEAT establishes a principled foundation for neural free energy calculations. Experimental validation on toy examples, molecular simulations, and quantum field theory demonstrates improvements over existing learning-based methods.

Towards Training One-Step Diffusion Models Without Distillation

Feb 11, 2025

Abstract:Recent advances in one-step generative models typically follow a two-stage process: first training a teacher diffusion model and then distilling it into a one-step student model. This distillation process traditionally relies on both the teacher model's score function to compute the distillation loss and its weights for student initialization. In this paper, we explore whether one-step generative models can be trained directly without this distillation process. First, we show that the teacher's score function is not essential and propose a family of distillation methods that achieve competitive results without relying on score estimation. Next, we demonstrate that initialization from teacher weights is indispensable for successful training. Surprisingly, we find that this benefit is not due to an improved "input-output" mapping but rather to the learned feature representations, which dominate distillation quality. Our findings provide a better understanding of the role of initialization in one-step model training and its impact on distillation quality.

No Trick, No Treat: Pursuits and Challenges Towards Simulation-free Training of Neural Samplers

Feb 10, 2025

Abstract:We consider the sampling problem, where the aim is to draw samples from a distribution whose density is known only up to a normalization constant. Recent breakthroughs in generative modeling to approximate a high-dimensional data distribution have sparked significant interest in developing neural network-based methods for this challenging problem. However, neural samplers typically incur heavy computational overhead due to simulating trajectories during training. This motivates the pursuit of simulation-free training procedures for neural samplers. In this work, we propose an elegant modification to previous methods, which allows simulation-free training with the help of a time-dependent normalizing flow. However, it ultimately suffers from severe mode collapse. On closer inspection, we find that nearly all successful neural samplers rely on Langevin preconditioning to avoid mode collapse. We systematically analyze several popular methods with various objective functions and demonstrate that, in the absence of Langevin preconditioning, most of them fail to adequately cover even a simple target. Finally, we draw attention to a strong baseline by combining the state-of-the-art MCMC method, Parallel Tempering (PT), with an additional generative model to shed light on future explorations of neural samplers.
