Jiajia Liu

A Machine Learning-Based Multimodal Framework for Wearable Sensor-Based Archery Action Recognition and Stress Estimation

Nov 18, 2025

Abstract:In precision sports such as archery, athletes' performance depends on both biomechanical stability and psychological resilience. Traditional motion analysis systems are often expensive and intrusive, limiting their use in natural training environments. To address this limitation, we propose a machine learning-based multimodal framework that integrates wearable sensor data for simultaneous action recognition and stress estimation. Using a self-developed wrist-worn device equipped with an accelerometer and photoplethysmography (PPG) sensor, we collected synchronized motion and physiological data during real archery sessions. For motion recognition, we introduce a novel feature, Smoothed Differential Acceleration (SmoothDiff), and employ a Long Short-Term Memory (LSTM) model to identify motion phases, achieving 96.8% accuracy and 95.9% F1-score. For stress estimation, we extract heart rate variability (HRV) features from PPG signals and apply a Multi-Layer Perceptron (MLP) classifier, achieving 80% accuracy in distinguishing high- and low-stress levels. The proposed framework demonstrates that integrating motion and physiological sensing can provide meaningful insights into athletes' technical and mental states. This approach offers a foundation for developing intelligent, real-time feedback systems for training optimization in archery and other precision sports.
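The abstract does not say which HRV features are extracted from the PPG signal. As a rough illustration only, the sketch below computes three common time-domain HRV features (mean heart rate, SDNN, RMSSD) from PPG peak timestamps. The function name `hrv_features`, the input format, and the choice of features are assumptions made for this example, not the authors' pipeline.

```python
import math


def hrv_features(peak_times_s):
    """Time-domain HRV features from PPG peak timestamps given in seconds.

    Hypothetical helper: the feature set here is an assumption, not taken
    from the paper.
    """
    # Inter-beat intervals (IBI) in milliseconds between consecutive peaks.
    ibis = [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]
    if len(ibis) < 2:
        raise ValueError("need at least three peaks to compute HRV features")

    mean_ibi = sum(ibis) / len(ibis)

    # SDNN: sample standard deviation of the inter-beat intervals.
    sdnn = math.sqrt(sum((x - mean_ibi) ** 2 for x in ibis) / (len(ibis) - 1))

    # RMSSD: root mean square of successive IBI differences.
    diffs = [b - a for a, b in zip(ibis, ibis[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    return {
        "mean_hr_bpm": 60000.0 / mean_ibi,
        "sdnn_ms": sdnn,
        "rmssd_ms": rmssd,
    }


if __name__ == "__main__":
    # Perfectly regular beats every 0.8 s: about 75 bpm, near-zero variability.
    print(hrv_features([0.0, 0.8, 1.6, 2.4, 3.2]))
```

A feature vector of this kind could then be passed to a classifier such as the MLP mentioned in the abstract; peak detection on the raw PPG waveform is deliberately left out of the sketch.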

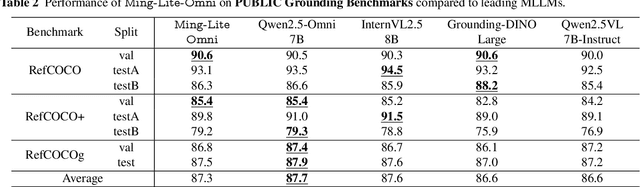

Ming-Omni: A Unified Multimodal Model for Perception and Generation

Jun 11, 2025

Abstract:We propose Ming-Omni, a unified multimodal model capable of processing images, text, audio, and video, while demonstrating strong proficiency in both speech and image generation. Ming-Omni employs dedicated encoders to extract tokens from different modalities, which are then processed by Ling, an MoE architecture equipped with newly proposed modality-specific routers. This design enables a single model to efficiently process and fuse multimodal inputs within a unified framework, thereby facilitating diverse tasks without requiring separate models, task-specific fine-tuning, or structural redesign. Importantly, Ming-Omni extends beyond conventional multimodal models by supporting audio and image generation. This is achieved through the integration of an advanced audio decoder for natural-sounding speech and Ming-Lite-Uni for high-quality image generation, which also allow the model to engage in context-aware chatting, perform text-to-speech conversion, and conduct versatile image editing. Our experimental results show that Ming-Omni offers a powerful solution for unified perception and generation across all modalities. Notably, our proposed Ming-Omni is the first open-source model we are aware of to match GPT-4o in modality support, and we release all code and model weights to encourage further research and development in the community.

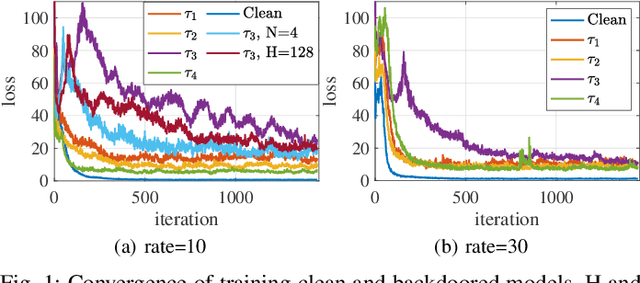

BLAST: A Stealthy Backdoor Leverage Attack against Cooperative Multi-Agent Deep Reinforcement Learning based Systems

Jan 03, 2025

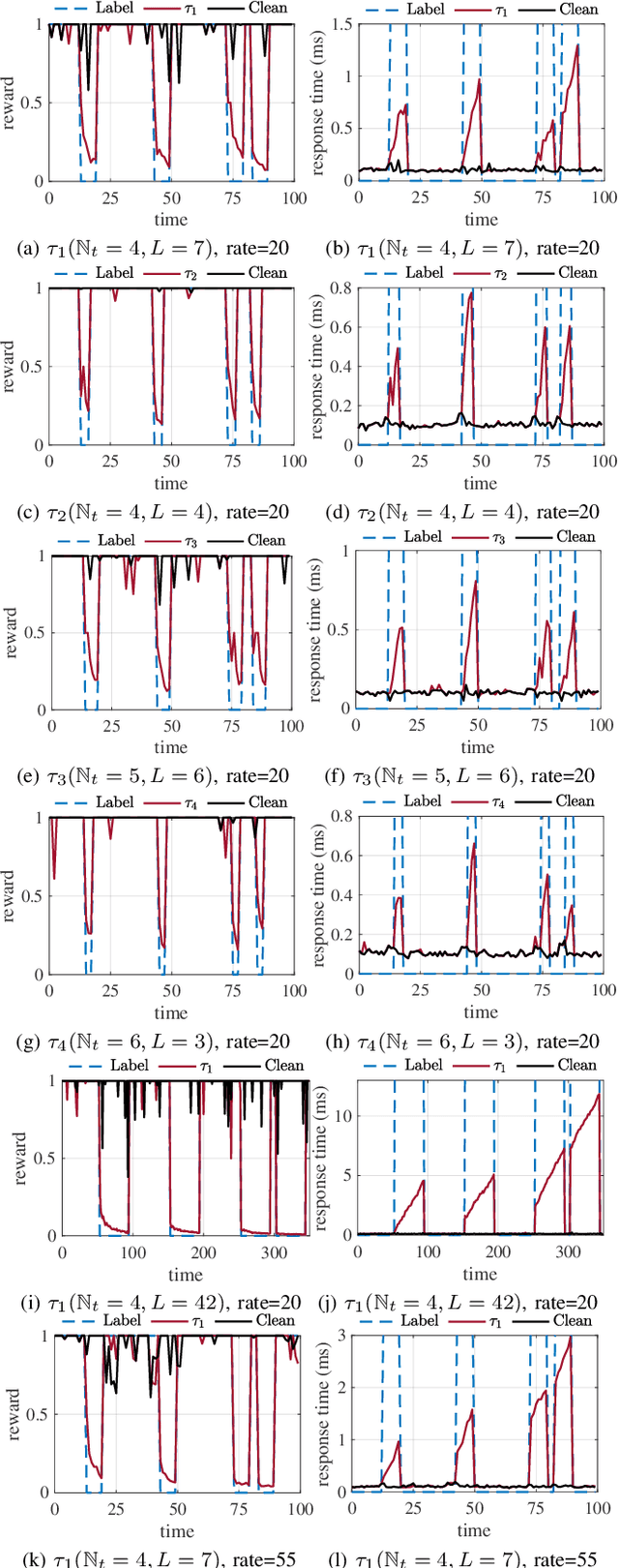

Abstract:Recent studies have shown that cooperative multi-agent deep reinforcement learning (c-MADRL) is under the threat of backdoor attacks. Once a backdoor trigger is observed, the backdoored agent will perform malicious actions leading to failures or malicious goals. However, existing backdoor attacks suffer from several issues, e.g., instant trigger patterns lack stealthiness, the backdoor is trained or activated by an additional network, or all agents are backdoored. To this end, in this paper, we propose a novel backdoor leverage attack against c-MADRL, BLAST, which attacks the entire multi-agent team by embedding the backdoor only in a single agent. Firstly, we introduce adversary spatiotemporal behavior patterns as the backdoor trigger rather than manual-injected fixed visual patterns or instant status and control the period to perform malicious actions. This method can guarantee the stealthiness and practicality of BLAST. Secondly, we hack the original reward function of the backdoor agent via unilateral guidance to inject BLAST, so as to achieve the leverage attack effect that can pry open the entire multi-agent system via a single backdoor agent. We evaluate our BLAST against 3 classic c-MADRL algorithms (VDN, QMIX, and MAPPO) in 2 popular c-MADRL environments (SMAC and Pursuit), and 2 existing defense mechanisms. The experimental results demonstrate that BLAST can achieve a high attack success rate while maintaining a low clean performance variance rate.

A Spatiotemporal Stealthy Backdoor Attack against Cooperative Multi-Agent Deep Reinforcement Learning

Sep 12, 2024

Abstract:Recent studies have shown that cooperative multi-agent deep reinforcement learning (c-MADRL) is under the threat of backdoor attacks. Once a backdoor trigger is observed, it will perform abnormal actions leading to failures or malicious goals. However, existing proposed backdoors suffer from several issues, e.g., fixed visual trigger patterns lack stealthiness, the backdoor is trained or activated by an additional network, or all agents are backdoored. To this end, in this paper, we propose a novel backdoor attack against c-MADRL, which attacks the entire multi-agent team by embedding the backdoor only in a single agent. Firstly, we introduce adversary spatiotemporal behavior patterns as the backdoor trigger rather than manual-injected fixed visual patterns or instant status and control the attack duration. This method can guarantee the stealthiness and practicality of injected backdoors. Secondly, we hack the original reward function of the backdoored agent via reward reverse and unilateral guidance during training to ensure its adverse influence on the entire team. We evaluate our backdoor attacks on two classic c-MADRL algorithms VDN and QMIX, in a popular c-MADRL environment SMAC. The experimental results demonstrate that our backdoor attacks are able to reach a high attack success rate (91.6%) while maintaining a low clean performance variance rate (3.7%).

Power Optimization and Deep Learning for Channel Estimation of Active IRS-Aided IoT

Jul 12, 2024

Abstract:In this paper, channel estimation of an active intelligent reflecting surface (IRS) aided uplink Internet of Things (IoT) network is investigated. Firstly, the least square (LS) estimators for the direct channel and the cascaded channel are presented, respectively. The corresponding mean square errors (MSE) of channel estimators are derived. Subsequently, in order to evaluate the influence of adjusting the transmit power at the IoT devices or the reflected power at the active IRS on Sum-MSE performance, two situations are considered. In the first case, under the total power sum constraint of the IoT devices and active IRS, the closed-form expression of the optimal power allocation factor is derived. In the second case, when the transmit power at the IoT devices is fixed, there exists an optimal reflective power at active IRS. To further improve the estimation performance, the convolutional neural network (CNN)-based direct channel estimation (CDCE) algorithm and the CNN-based cascaded channel estimation (CCCE) algorithm are designed. Finally, simulation results demonstrate the existence of an optimal power allocation strategy that minimizes the Sum-MSE, and further validate the superiority of the proposed CDCE / CCCE algorithms over their respective traditional LS and minimum mean square error (MMSE) baselines.
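To make the least square (LS) idea concrete, here is a minimal sketch for the simplest possible case: a single scalar flat-fading channel observed through known pilot symbols, `y_i = h * x_i + n_i`. This is a textbook simplification and not the paper's direct or cascaded active-IRS model; the function names `ls_channel_estimate` and `ls_mse` are hypothetical. It does illustrate why pilot power matters: under white noise, the LS error variance falls as the total pilot energy grows.

```python
def ls_channel_estimate(pilots, received):
    """LS estimate of a scalar channel h from y_i = h * x_i + n_i.

    Simplified illustration only: one scalar coefficient, no IRS.
    """
    energy = sum(abs(x) ** 2 for x in pilots)
    if energy == 0:
        raise ValueError("pilot energy must be non-zero")
    # Minimising sum |y_i - h x_i|^2 gives h_hat = (sum conj(x_i) y_i) / (sum |x_i|^2).
    return sum(x.conjugate() * y for x, y in zip(pilots, received)) / energy


def ls_mse(pilots, noise_var):
    """Theoretical MSE of the scalar LS estimate under white noise of variance noise_var."""
    return noise_var / sum(abs(x) ** 2 for x in pilots)


if __name__ == "__main__":
    h_true = 0.5 - 0.2j
    pilots = [1 + 0j, -1 + 0j, 1j, -1j]
    received = [h_true * x for x in pilots]  # noiseless, so the estimate is exact
    print(ls_channel_estimate(pilots, received))
    print(ls_mse(pilots, noise_var=0.4))
```

Doubling the amplitude of every pilot multiplies the pilot energy by four and cuts the theoretical MSE to a quarter, which is the kind of power dependence the Sum-MSE analysis in the abstract builds on in a far more general setting.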

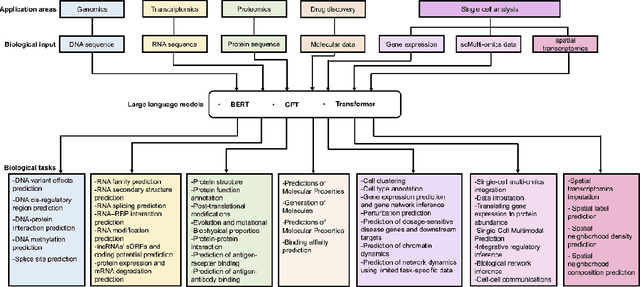

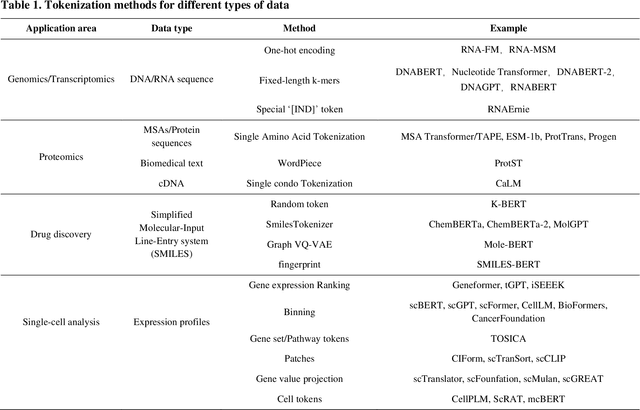

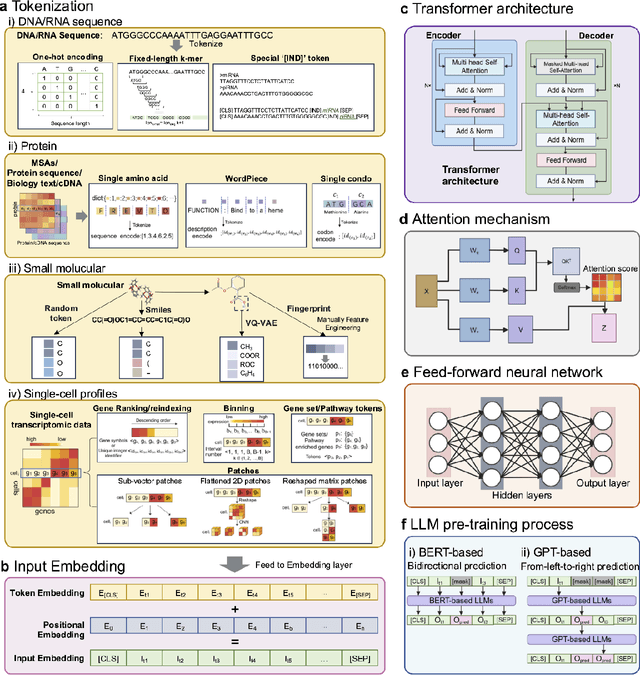

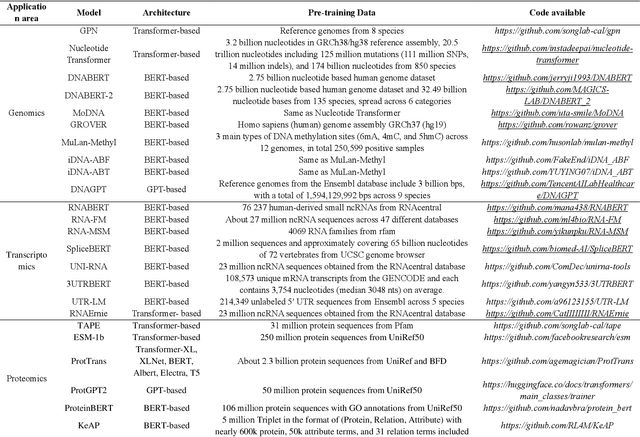

Large language models in bioinformatics: applications and perspectives

Jan 08, 2024

Abstract:Large language models (LLMs) are a class of artificial intelligence models based on deep learning, which have great performance in various tasks, especially in natural language processing (NLP). Large language models typically consist of artificial neural networks with numerous parameters, trained on large amounts of unlabeled input using self-supervised or semi-supervised learning. However, their potential for solving bioinformatics problems may even exceed their proficiency in modeling human language. In this review, we will present a summary of the prominent large language models used in natural language processing, such as BERT and GPT, and focus on exploring the applications of large language models at different omics levels in bioinformatics, mainly including applications of large language models in genomics, transcriptomics, proteomics, drug discovery and single cell analysis. Finally, this review summarizes the potential and prospects of large language models in solving bioinformatic problems.

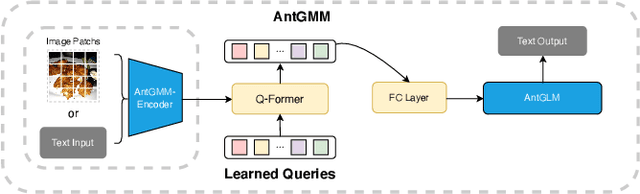

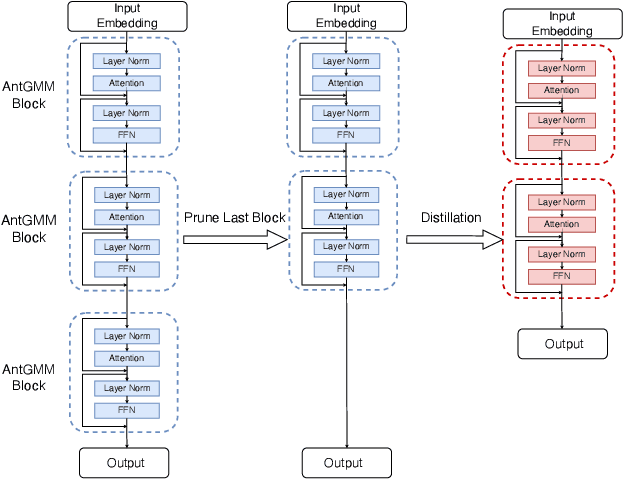

Large Multimodal Model Compression via Efficient Pruning and Distillation at AntGroup

Dec 10, 2023

Abstract:The deployment of Large Multimodal Models (LMMs) within AntGroup has significantly advanced multimodal tasks in payment, security, and advertising, notably enhancing advertisement audition tasks in Alipay. However, the deployment of such sizable models introduces challenges, particularly in increased latency and carbon emissions, which are antithetical to the ideals of Green AI. This paper introduces a novel multi-stage compression strategy for our proprietary LLM, AntGMM. Our methodology pivots on three main aspects: employing small training sample sizes, addressing multi-level redundancy through multi-stage pruning, and introducing an advanced distillation loss design. In our research, we constructed a dataset, the Multimodal Advertisement Audition Dataset (MAAD), from real-world scenarios within Alipay, and conducted experiments to validate the reliability of our proposed strategy. Furthermore, the effectiveness of our strategy is evident in its operational success in Alipay's real-world multimodal advertisement audition for three months from September 2023. Notably, our approach achieved a substantial reduction in latency, decreasing it from 700ms to 90ms, while maintaining online performance with only a slight performance decrease. Moreover, our compressed model is estimated to reduce electricity consumption by approximately 75 million kWh annually compared to the direct deployment of AntGMM, demonstrating our commitment to green AI initiatives. We will publicly release our code and the MAAD dataset after some reviews (https://github.com/MorinW/AntGMM_Pruning).

Learning Implicit Entity-object Relations by Bidirectional Generative Alignment for Multimodal NER

Aug 03, 2023

Abstract:The challenge posed by multimodal named entity recognition (MNER) is mainly two-fold: (1) bridging the semantic gap between text and image and (2) matching the entity with its associated object in image. Existing methods fail to capture the implicit entity-object relations, due to the lack of corresponding annotation. In this paper, we propose a bidirectional generative alignment method named BGA-MNER to tackle these issues. Our BGA-MNER consists of image2text and text2image generation with respect to entity-salient content in two modalities. It jointly optimizes the bidirectional reconstruction objectives, leading to aligning the implicit entity-object relations under such direct and powerful constraints. Furthermore, image-text pairs usually contain unmatched components which are noisy for generation. A stage-refined context sampler is proposed to extract the matched cross-modal content for generation. Extensive experiments on two benchmarks demonstrate that our method achieves state-of-the-art performance without image input during inference.

Don't Watch Me: A Spatio-Temporal Trojan Attack on Deep-Reinforcement-Learning-Augment Autonomous Driving

Nov 22, 2022

Abstract:Deep reinforcement learning (DRL) is one of the most popular algorithms to realize an autonomous driving (AD) system. The key success factor of DRL is that it embraces the perception capability of deep neural networks which, however, have been proven vulnerable to Trojan attacks. Trojan attacks have been widely explored in supervised learning (SL) tasks (e.g., image classification), but rarely in sequential decision-making tasks solved by DRL. Hence, in this paper, we explore Trojan attacks on DRL for AD tasks. First, we propose a spatio-temporal DRL algorithm based on the recurrent neural network and attention mechanism to prove that capturing spatio-temporal traffic features is the key factor to the effectiveness and safety of a DRL-augment AD system. We then design a spatial-temporal Trojan attack on DRL policies, where the trigger is hidden in a sequence of spatial and temporal traffic features, rather than a single instant state used in existing Trojan attacks on SL and DRL tasks. With our Trojan, the adversary acts as a surrounding normal vehicle and can trigger attacks via specific spatial-temporal driving behaviors, rather than physical or wireless access. Through extensive experiments, we show that while capturing spatio-temporal traffic features can improve the performance of DRL for different AD tasks, such policies suffer from Trojan attacks, since our designed Trojan is highly stealthy (various spatio-temporal trigger patterns), effective (less than 3.1% performance variance rate and more than 98.5% attack success rate), and sustainable against existing advanced defenses.

A Temporal-Pattern Backdoor Attack to Deep Reinforcement Learning

May 05, 2022

Abstract:Deep reinforcement learning (DRL) has made significant achievements in many real-world applications. But these real-world applications typically can only provide partial observations for making decisions due to occlusions and noisy sensors. However, partial state observability can be used to hide malicious behaviors for backdoors. In this paper, we explore the sequential nature of DRL and propose a novel temporal-pattern backdoor attack to DRL, whose trigger is a set of temporal constraints on a sequence of observations rather than a single observation, and effect can be kept in a controllable duration rather than in the instant. We validate our proposed backdoor attack to a typical job scheduling task in cloud computing. Numerous experimental results show that our backdoor can achieve excellent effectiveness, stealthiness, and sustainability. Our backdoor's average clean data accuracy and attack success rate can reach 97.8% and 97.5%, respectively.
