Hongan Wang

Representation Learning with Mutual Influence of Modalities for Node Classification in Multi-Modal Heterogeneous Networks

May 12, 2025

Abstract: Nowadays, numerous online platforms can be described as multi-modal heterogeneous networks (MMHNs), such as Douban's movie networks and Amazon's product review networks. Accurately categorizing nodes within these networks is crucial for analyzing the corresponding entities, which requires effective representation learning on nodes. However, existing multi-modal fusion methods often adopt either early fusion strategies, which may lose the unique characteristics of individual modalities, or late fusion approaches, which overlook the cross-modal guidance in GNN-based information propagation. In this paper, we propose a novel model for node classification in MMHNs, named Heterogeneous Graph Neural Network with Inter-Modal Attention (HGNN-IMA). It learns node representations by capturing the mutual influence of multiple modalities during the information propagation process, within the framework of a heterogeneous graph transformer. Specifically, a nested inter-modal attention mechanism is integrated into the inter-node attention to achieve adaptive multi-modal fusion, and modality alignment is also taken into account to encourage propagation among nodes with consistent similarities across all modalities. Moreover, an attention loss is added to mitigate the impact of missing modalities. Extensive experiments validate the superiority of the model in the node classification task, offering a new perspective on handling multi-modal data, especially when accompanied by network structures.

HOGSA: Bimanual Hand-Object Interaction Understanding with 3D Gaussian Splatting Based Data Augmentation

Jan 06, 2025

Abstract: Understanding bimanual hand-object interaction plays an important role in robotics and virtual reality. However, due to significant occlusions between hands and objects as well as high degree-of-freedom motions, it is challenging to collect and annotate a high-quality, large-scale dataset, which prevents further improvement of bimanual hand-object interaction baselines. In this work, we propose a new 3D Gaussian Splatting based data augmentation framework for bimanual hand-object interaction, which is capable of augmenting existing datasets into large-scale photorealistic data with various hand-object poses and viewpoints. First, we use mesh-based 3DGS to model objects and hands, and we design a super-resolution module to deal with the rendering blur caused by multi-resolution input images. Second, we extend the single-hand grasping pose optimization module to the bimanual setting to generate various poses of bimanual hand-object interaction, which can significantly expand the pose distribution of the dataset. Third, we analyze the impact of different aspects of the proposed data augmentation on the understanding of bimanual hand-object interaction. We perform our data augmentation on two benchmarks, H2O and Arctic, and verify that our method can improve the performance of the baselines.

Universal Features Guided Zero-Shot Category-Level Object Pose Estimation

Jan 06, 2025

Abstract: Object pose estimation, crucial in computer vision and robotics applications, faces challenges with the diversity of unseen categories. We propose a zero-shot method for category-level 6-DOF object pose estimation, which exploits both 2D and 3D universal features of the input RGB-D image to establish semantic similarity-based correspondences and can be extended to unseen categories without additional model fine-tuning. Our method begins by combining efficient 2D universal features to find sparse correspondences between intra-category objects and obtain an initial coarse pose. To handle the correspondence degradation of 2D universal features when the pose deviates substantially from the target pose, we use an iterative strategy to optimize the pose. Subsequently, to resolve pose ambiguities due to shape differences between intra-category objects, the coarse pose is refined by optimizing with a dense alignment constraint on 3D universal features. Our method outperforms previous methods on the REAL275 and Wild6D benchmarks for unseen categories.

Diffgrasp: Whole-Body Grasping Synthesis Guided by Object Motion Using a Diffusion Model

Dec 30, 2024

Abstract: Generating high-quality whole-body human-object interaction motion sequences is becoming increasingly important in various fields such as animation, VR/AR, and robotics. The main challenge of this task lies in determining the level of involvement of each hand given the complex shapes of objects of different sizes and their different motion trajectories, while ensuring strong grasping realism and guaranteeing the coordination of movement across all body parts. In contrast to existing work, which either generates human interaction motion sequences without detailed hand grasping poses or only models a static grasping pose, we propose a simple yet effective framework that jointly models the relationship between the body, hands, and the given object motion sequences within a single diffusion model. To guide our network in perceiving the object's spatial position and learning more natural grasping poses, we introduce novel contact-aware losses and incorporate a data-driven, carefully designed guidance. Experimental results demonstrate that our approach outperforms the state-of-the-art method and generates plausible whole-body motion sequences.

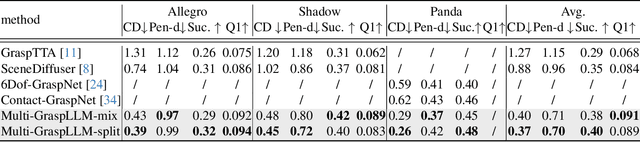

Multi-GraspLLM: A Multimodal LLM for Multi-Hand Semantic Guided Grasp Generation

Dec 11, 2024

Abstract: Multi-hand semantic grasp generation aims to generate feasible and semantically appropriate grasp poses for different robotic hands based on natural language instructions. Although the task is highly valuable, it remains a long-standing challenge due to the lack of multi-hand grasp datasets with fine-grained contact descriptions between robotic hands and objects. In this paper, we present Multi-GraspSet, the first large-scale multi-hand grasp dataset with automatic contact annotations. Based on Multi-GraspSet, we propose Multi-GraspLLM, a unified language-guided grasp generation framework. It leverages large language models (LLMs) to handle variable-length sequences, generating grasp poses for diverse robotic hands in a single unified architecture. Multi-GraspLLM first aligns the encoded point cloud features and text features into a unified semantic space. It then generates grasp bin tokens, which are subsequently converted into a grasp pose for each robotic hand via hand-aware linear mapping. The experimental results demonstrate that our approach significantly outperforms existing methods on Multi-GraspSet. More information can be found on our project page https://multi-graspllm.github.io.

SegGrasp: Zero-Shot Task-Oriented Grasping via Semantic and Geometric Guided Segmentation

Oct 11, 2024

Abstract: Task-oriented grasping, which involves grasping specific parts of objects based on their functions, is crucial for developing advanced robotic systems capable of performing complex tasks in dynamic environments. In this paper, we propose a training-free framework that incorporates both semantic and geometric priors for zero-shot task-oriented grasp generation. The proposed framework, SegGrasp, first leverages vision-language models such as GLIP for coarse segmentation. It then uses detailed geometric information from convex decomposition to improve segmentation quality through a fusion policy named GeoFusion. With the improved segmentation, a grasping network can generate an effective grasp pose. We conducted experiments on both a segmentation benchmark and real-world robot grasping. The experimental results show that SegGrasp surpasses the baseline by more than 15% in grasp and segmentation performance.

RulePrompt: Weakly Supervised Text Classification with Prompting PLMs and Self-Iterative Logical Rules

Mar 05, 2024

Abstract: Weakly supervised text classification (WSTC), also called zero-shot or dataless text classification, has attracted increasing attention due to its applicability in classifying a mass of texts within the dynamic and open Web environment, since it requires only a limited set of seed words (label names) for each category instead of labeled data. With the help of recently popular prompting Pre-trained Language Models (PLMs), many studies leveraged manually crafted and/or automatically identified verbalizers to estimate the likelihood of categories, but they failed to differentiate the effects of these category-indicative words, let alone capture their correlations and realize adaptive adjustments according to the unlabeled corpus. In this paper, in order to let the PLM effectively understand each category, we first propose a novel form of rule-based knowledge using logical expressions to characterize the meanings of categories. Then, we develop a prompting PLM-based approach named RulePrompt for the WSTC task, consisting of a rule mining module and a rule-enhanced pseudo label generation module, plus a self-supervised fine-tuning module to make the PLM align with this task. Within this framework, the inaccurate pseudo labels assigned to texts and the imprecise logical rules associated with categories mutually enhance each other in an alternating manner. This establishes a self-iterative closed loop of knowledge (rule) acquisition and utilization, with seed words serving as the starting point. Extensive experiments validate the effectiveness and robustness of our approach, which markedly outperforms state-of-the-art weakly supervised methods. Moreover, our approach yields interpretable category rules, proving its advantage in disambiguating easily confused categories.

Self-supervised Learning of Implicit Shape Representation with Dense Correspondence for Deformable Objects

Aug 24, 2023

Abstract: Learning 3D shape representation with dense correspondence for deformable objects is a fundamental problem in computer vision. Existing approaches often need additional annotations for a specific semantic domain, e.g., skeleton poses for human bodies or animals, which require extra annotation effort and suffer from error accumulation, and they are limited to that specific domain. In this paper, we propose a novel self-supervised approach to learn a neural implicit shape representation for deformable objects, which can represent shapes with a template shape and dense correspondence in 3D. Our method does not require skeleton or skinning weight priors, and only requires a collection of shapes represented as signed distance fields. To handle large deformations, we constrain the learned template shape to the same latent space as the training shapes, design a new formulation of the local rigid constraint that enforces rigid transformation in local regions and addresses the local reflection issue, and present a new hierarchical rigid constraint to reduce the ambiguity due to the joint learning of template shape and correspondences. Extensive experiments show that our model can represent shapes with large deformations. We also show that our shape representation can support two typical applications, texture transfer and shape editing, with competitive performance. The code and models are available at https://iscas3dv.github.io/deformshape

Novel-view Synthesis and Pose Estimation for Hand-Object Interaction from Sparse Views

Aug 22, 2023

Abstract: Hand-object interaction understanding and the barely addressed novel view synthesis are highly desired in immersive communication, yet they are challenging due to the high deformation of the hand and heavy occlusions between hand and object. In this paper, we propose a neural rendering and pose estimation system for hand-object interaction from sparse views, which can also enable 3D hand-object interaction editing. We draw inspiration from recent scene understanding work showing that a scene-specific model built beforehand can significantly improve and unblock vision tasks, especially when inputs are sparse. We extend this idea to the dynamic hand-object interaction scenario and propose to solve the problem in two stages. We first learn the shape and appearance priors of hands and objects separately with a neural representation at the offline stage. During the online stage, we design a rendering-based joint model fitting framework to understand the dynamic hand-object interaction with the pre-built hand and object models as well as interaction priors, which thereby overcomes penetration and separation issues between hand and object and also enables novel view synthesis. To obtain stable contact during the hand-object interaction process in a sequence, we propose a stable contact loss that keeps the contact region consistent. Experiments demonstrate that our method outperforms the state-of-the-art methods. Code and dataset are available on the project webpage https://iscas3dv.github.io/HO-NeRF.

Unsupervised Model-based speaker adaptation of end-to-end lattice-free MMI model for speech recognition

Nov 17, 2022

Abstract: Modeling speaker variability is a key challenge for automatic speech recognition (ASR) systems. In this paper, learning hidden unit contributions (LHUC) based adaptation techniques with compact speaker dependent (SD) parameters are used to facilitate both speaker adaptive training (SAT) and unsupervised test-time speaker adaptation for end-to-end (E2E) lattice-free MMI (LF-MMI) models. An unsupervised model-based adaptation framework is proposed to estimate the SD parameters in the E2E paradigm using LF-MMI and cross entropy (CE) criteria. Various regularization methods for the standard LHUC adaptation, e.g., the Bayesian LHUC (BLHUC) adaptation, are systematically investigated to mitigate the risk of overfitting on E2E LF-MMI CNN-TDNN and CNN-TDNN-BLSTM models. Lattice-based confidence score estimation is used for adaptation data selection to reduce the supervision label uncertainty. Experiments on the 300-hour Switchboard task suggest that applying BLHUC in the proposed unsupervised E2E adaptation framework to byte pair encoding (BPE) based E2E LF-MMI systems consistently outperformed the baseline systems by relative word error rate (WER) reductions up to 10.5% and 14.7% on the NIST Hub5'00 and RT03 evaluation sets, and achieved the best performance in WERs of 9.0% and 9.7%, respectively. These results are comparable to the results of state-of-the-art adapted LF-MMI hybrid systems and adapted Conformer-based E2E systems.
