He Huang

TVMC: Time-Varying Mesh Compression via Multi-Stage Anchor Mesh Generation

Oct 26, 2025

Abstract:Time-varying meshes, characterized by dynamic connectivity and varying vertex counts, hold significant promise for applications such as augmented reality. However, their practical utilization remains challenging due to the substantial data volume required for high-fidelity representation. While various compression methods attempt to leverage temporal redundancy between consecutive mesh frames, most struggle with topological inconsistency and motion-induced artifacts. To address these issues, we propose Time-Varying Mesh Compression (TVMC), a novel framework built on multi-stage coarse-to-fine anchor mesh generation for inter-frame prediction. Specifically, the anchor mesh is progressively constructed in three stages: initial, coarse, and fine. The initial anchor mesh is obtained through fast topology alignment to exploit temporal coherence. A Kalman filter-based motion estimation module then generates a coarse anchor mesh by accurately compensating inter-frame motions. Subsequently, a Quadric Error Metric-based refinement step optimizes vertex positions to form a fine anchor mesh with improved geometric fidelity. Based on the refined anchor mesh, the inter-frame motions relative to the reference base mesh are encoded, while the residual displacements between the subdivided fine anchor mesh and the input mesh are adaptively quantized and compressed. This hierarchical strategy preserves consistent connectivity and high-quality surface approximation, while achieving an efficient and compact representation of dynamic geometry. Extensive experiments on standard MPEG dynamic mesh sequences demonstrate that TVMC achieves state-of-the-art compression performance. Compared to the latest V-DMC standard, it delivers a significant BD-rate gain of 10.2% to 16.9%, while preserving high reconstruction quality. The code is available at https://github.com/H-Huang774/TVMC.
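
The abstract does not state which state model the Kalman filter-based motion estimation uses. As a minimal sketch only, assuming a constant-velocity model applied to a single coordinate of a single vertex, per-frame filtering could look like the following. The function name `kalman_track` and the noise variances `q` and `r` are hypothetical and are not taken from the TVMC code.

```python
def kalman_track(measurements, dt=1.0, q=1e-3, r=1e-2):
    """Filter one coordinate of one vertex across frames.

    Assumed model (not from the paper): state = [position, velocity],
    constant velocity between frames, only position is observed.
    q and r are hypothetical process / measurement noise variances.
    """
    x, v = measurements[0], 0.0               # initial state
    p00, p01, p10, p11 = 1.0, 0.0, 0.0, 1.0   # initial covariance P
    estimates = [x]
    for z in measurements[1:]:
        # Predict: x' = F x, P' = F P F^T + Q, with F = [[1, dt], [0, 1]]
        x = x + dt * v
        n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + q
        n01 = p01 + dt * p11
        n10 = p10 + dt * p11
        n11 = p11 + q
        # Update with observation matrix H = [1, 0]
        s = n00 + r                    # innovation variance
        k0, k1 = n00 / s, n10 / s      # Kalman gain
        y = z - x                      # innovation
        x, v = x + k0 * y, v + k1 * y
        # P = (I - K H) P'
        p00 = (1 - k0) * n00
        p01 = (1 - k0) * n01
        p10 = n10 - k1 * n00
        p11 = n11 - k1 * n01
        estimates.append(x)
    return estimates


# Usage: a vertex coordinate moving one unit per frame.
track = kalman_track([float(i) for i in range(20)])
```

In this sketch the filtered position would serve as the motion-compensated prediction for the next frame; a real system would filter all three coordinates of every vertex and would tune the noise terms.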

Optimal Real-time Communication in 6G Ultra-Massive V2X Mobile Networks

Oct 08, 2025

Abstract:This paper introduces a novel cooperative vehicular communication algorithm tailored for future 6G ultra-massive vehicle-to-everything (V2X) networks leveraging integrated space-air-ground communication systems. Specifically, we address the challenge of real-time information exchange among rapidly moving vehicles. We demonstrate the existence of an upper bound on channel capacity given a fixed number of relays, and propose a low-complexity relay selection heuristic algorithm. Simulation results verify that our proposed algorithm achieves superior channel capacities compared to existing cooperative vehicular communication approaches.

Energy Detection over Composite $\kappa$-$\mu$ Shadowed Fading Channels with Inverse Gaussian Distribution in Ultra mMTC Networks

Aug 29, 2025

Abstract:This paper investigates the characteristics of energy detection (ED) over composite $\kappa$-$\mu$ shadowed fading channels in ultra massive machine-type communication (mMTC) networks. We derive closed-form expressions for the probability density function (PDF) of the signal-to-noise ratio (SNR) based on the Inverse Gaussian (IG) distribution. By adopting novel integration and mathematical transformation techniques, we derive a truncation-based closed-form expression for the average detection probability for the first time. Our simulations show that the number of propagation paths has a more pronounced effect on the average detection probability than the average SNR does, in contrast to earlier studies that focus on device-to-device networks. This suggests that 6G mMTC network design should consider enhancing transmitter-receiver placement and antenna alignment strategies, rather than relying solely on increasing the device-to-device average SNR.

Word Level Timestamp Generation for Automatic Speech Recognition and Translation

May 21, 2025

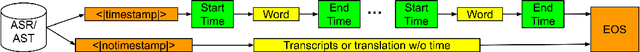

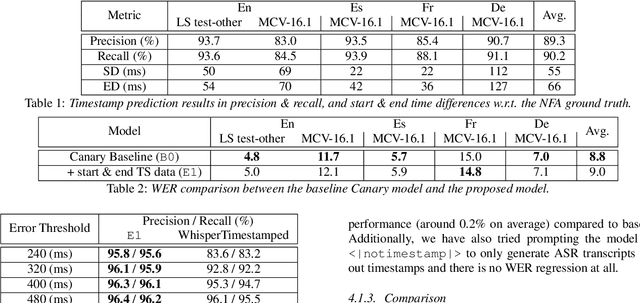

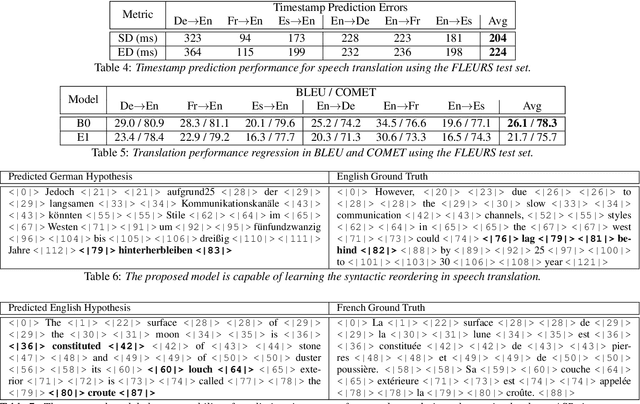

Abstract:We introduce a data-driven approach for enabling word-level timestamp prediction in the Canary model. Accurate timestamp information is crucial for a variety of downstream tasks such as speech content retrieval and timed subtitles. While traditional hybrid systems and end-to-end (E2E) models may employ external modules for timestamp prediction, our approach eliminates the need for separate alignment mechanisms. By leveraging the NeMo Forced Aligner (NFA) as a teacher model, we generate word-level timestamps and train the Canary model to predict timestamps directly. We introduce a new <|timestamp|> token, enabling the Canary model to predict start and end timestamps for each word. Our method demonstrates precision and recall rates between 80% and 90%, with timestamp prediction errors ranging from 20 to 120 ms across four languages, with minimal WER degradation. Additionally, we extend our system to automatic speech translation (AST) tasks, achieving timestamp prediction errors around 200 milliseconds.

Granary: Speech Recognition and Translation Dataset in 25 European Languages

May 19, 2025

Abstract:Multi-task and multilingual approaches benefit large models, yet speech processing for low-resource languages remains underexplored due to data scarcity. To address this, we present Granary, a large-scale collection of speech datasets for recognition and translation across 25 European languages. This is the first open-source effort at this scale for both transcription and translation. We enhance data quality using a pseudo-labeling pipeline with segmentation, two-pass inference, hallucination filtering, and punctuation restoration. We further generate translation pairs from pseudo-labeled transcriptions using EuroLLM, followed by a data filtration pipeline. Designed for efficiency, our pipeline processes vast amounts of data within hours. We assess models trained on the processed data by comparing their performance on previously curated datasets for both high- and low-resource languages. Our findings show that these models achieve similar performance using approximately 50% less data. The dataset will be made available at https://hf.co/datasets/nvidia/Granary

IPENS: Interactive Unsupervised Framework for Rapid Plant Phenotyping Extraction via NeRF-SAM2 Fusion

May 19, 2025

Abstract:Advanced plant phenotyping technologies play a crucial role in targeted trait improvement and accelerating intelligent breeding. Due to the species diversity of plants, existing methods heavily rely on large-scale high-precision manually annotated data. For self-occluded objects at the grain level, unsupervised methods often prove ineffective. This study proposes IPENS, an interactive unsupervised multi-target point cloud extraction method. The method utilizes radiance field information to lift 2D masks, which are segmented by SAM2 (Segment Anything Model 2), into 3D space for target point cloud extraction. A multi-target collaborative optimization strategy is designed to effectively resolve the single-interaction multi-target segmentation challenge. Experimental validation demonstrates that IPENS achieves a grain-level segmentation accuracy (mIoU) of 63.72% on a rice dataset, with strong phenotypic estimation capabilities: grain volume prediction yields R² = 0.7697 (RMSE = 0.0025), leaf surface area R² = 0.84 (RMSE = 18.93), and leaf length and width predictions achieve R² = 0.97 and 0.87 (RMSE = 1.49 and 0.21). On a wheat dataset, IPENS further improves segmentation accuracy to 89.68% (mIoU), with equally outstanding phenotypic estimation performance: spike volume prediction achieves R² = 0.9956 (RMSE = 0.0055), leaf surface area R² = 1.00 (RMSE = 0.67), and leaf length and width predictions reach R² = 0.99 and 0.92 (RMSE = 0.23 and 0.15). This method provides a non-invasive, high-quality phenotyping extraction solution for rice and wheat. Without requiring annotated data, it rapidly extracts grain-level point clouds within 3 minutes through simple single-round interactions on images for multiple targets, demonstrating significant potential to accelerate intelligent breeding efficiency.
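
For readers unfamiliar with the mIoU figures quoted above, the metric is the per-class intersection-over-union averaged over classes. The following is a minimal sketch of that standard definition over flat label lists; the function name `mean_iou` and the choice to skip classes absent from both prediction and ground truth are assumptions, and the abstract does not say which averaging convention the authors used.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over classes.

    pred and target are equal-length sequences of integer class labels
    (one per point or pixel). Classes that appear in neither sequence
    are skipped, which is one common convention among several.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Usage: class 0 has IoU 1/2, class 1 has IoU 2/3, so the mean is 7/12.
score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```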

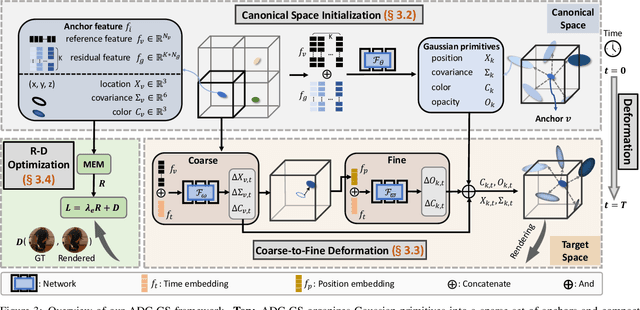

ADC-GS: Anchor-Driven Deformable and Compressed Gaussian Splatting for Dynamic Scene Reconstruction

May 13, 2025

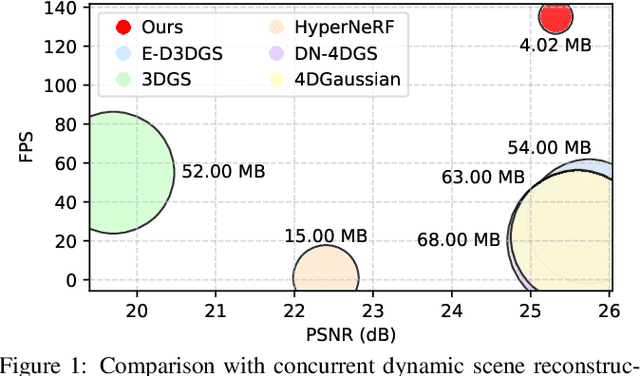

Abstract:Existing 4D Gaussian Splatting methods rely on per-Gaussian deformation from a canonical space to target frames, which overlooks redundancy among adjacent Gaussian primitives and results in suboptimal performance. To address this limitation, we propose Anchor-Driven Deformable and Compressed Gaussian Splatting (ADC-GS), a compact and efficient representation for dynamic scene reconstruction. Specifically, ADC-GS organizes Gaussian primitives into an anchor-based structure within the canonical space, enhanced by a temporal significance-based anchor refinement strategy. To reduce deformation redundancy, ADC-GS introduces a hierarchical coarse-to-fine pipeline that captures motions at varying granularities. Moreover, a rate-distortion optimization is adopted to achieve an optimal balance between bitrate consumption and representation fidelity. Experimental results demonstrate that ADC-GS outperforms the per-Gaussian deformation approaches in rendering speed by 300%-800% while achieving state-of-the-art storage efficiency without compromising rendering quality. The code is released at https://github.com/H-Huang774/ADC-GS.git.
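
The abstract does not give the form of the rate-distortion objective. As an illustration of the general idea only, the classic Lagrangian formulation picks the operating point that minimizes J = D + λR. The sketch below applies that to a discrete set of hypothetical candidates; it is not the training loss used by ADC-GS, and the candidate names and numbers are invented for the example.

```python
def select_rd_optimal(candidates, lam):
    """Pick the candidate minimizing the Lagrangian cost D + lam * R.

    candidates: list of (name, rate, distortion) tuples (hypothetical).
    lam: trade-off weight; larger values favor lower bitrate.
    """
    return min(candidates, key=lambda c: c[2] + lam * c[1])


# Usage with made-up operating points (rate in bits, distortion as error).
points = [("coarse", 100, 9.0), ("medium", 200, 4.0), ("fine", 400, 3.5)]
best = select_rd_optimal(points, lam=0.01)  # costs: 10.0, 6.0, 7.5
```

Raising λ pushes the choice toward cheaper, lower-fidelity points, and lowering it does the opposite, which is the balance between bitrate and fidelity that the abstract refers to.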

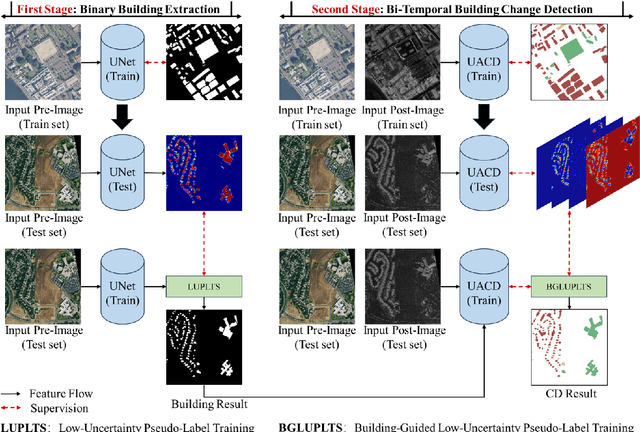

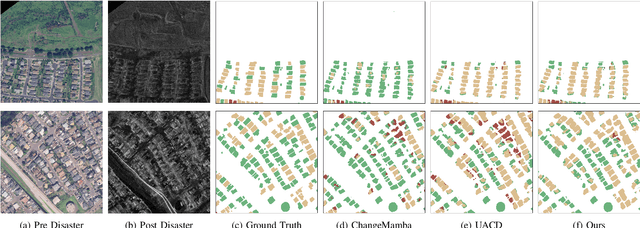

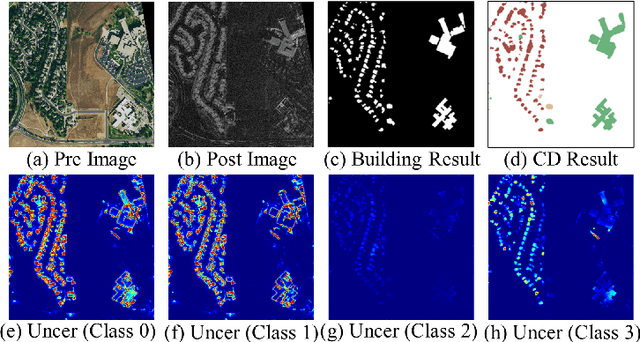

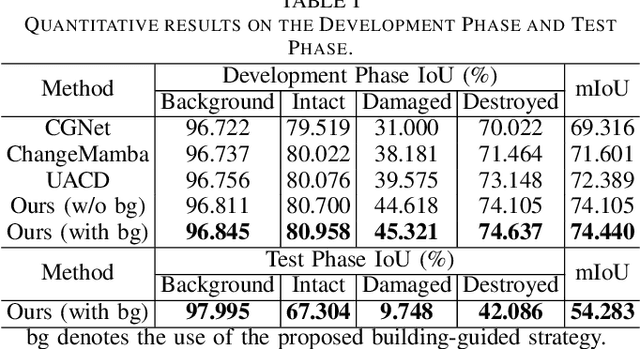

Building-Guided Pseudo-Label Learning for Cross-Modal Building Damage Mapping

May 08, 2025

Abstract:Accurate building damage assessment using bi-temporal multi-modal remote sensing images is essential for effective disaster response and recovery planning. This study proposes a novel Building-Guided Pseudo-Label Learning Framework to address the challenges of mapping building damage from pre-disaster optical and post-disaster SAR images. First, we train a series of building extraction models using pre-disaster optical images and building labels. To enhance building segmentation, we employ multi-model fusion and test-time augmentation strategies to generate pseudo-probabilities, followed by a low-uncertainty pseudo-label training method for further refinement. Next, a change detection model is trained on bi-temporal cross-modal images and damaged building labels. To improve damage classification accuracy, we introduce a building-guided low-uncertainty pseudo-label refinement strategy, which leverages building priors from the previous step to guide pseudo-label generation for damaged buildings, reducing uncertainty and enhancing reliability. Experimental results on the 2025 IEEE GRSS Data Fusion Contest dataset demonstrate the effectiveness of our approach, which achieved the highest mIoU score (54.28%) and secured first place in the competition.

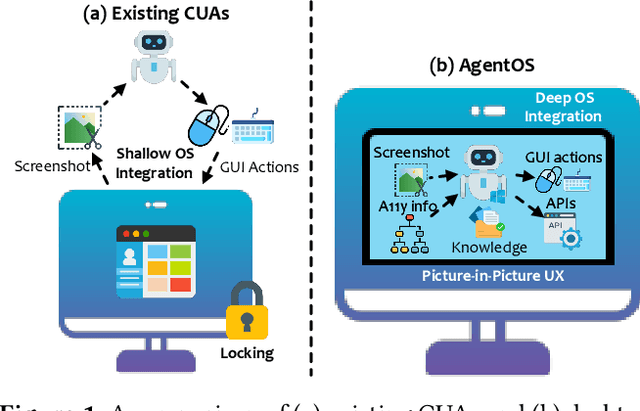

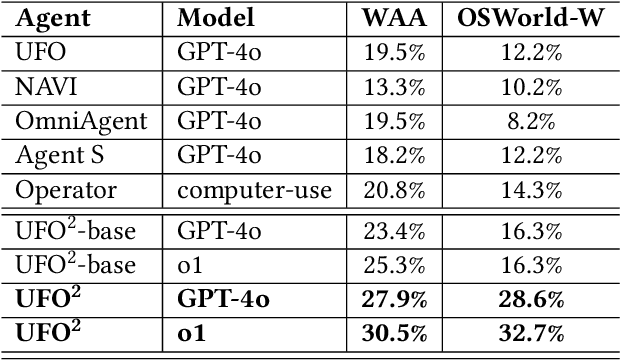

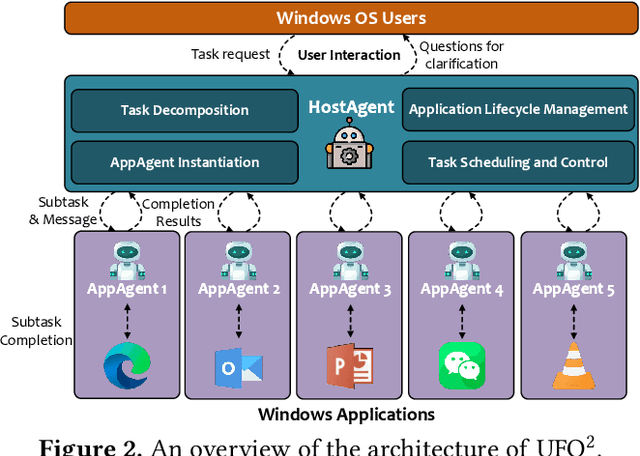

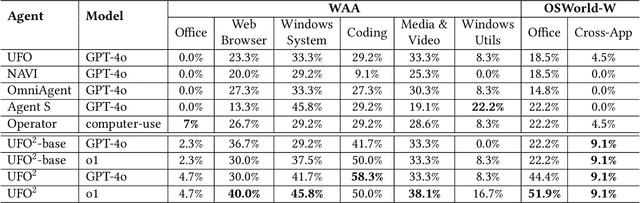

UFO2: The Desktop AgentOS

Apr 20, 2025

Abstract:Recent Computer-Using Agents (CUAs), powered by multimodal large language models (LLMs), offer a promising direction for automating complex desktop workflows through natural language. However, most existing CUAs remain conceptual prototypes, hindered by shallow OS integration, fragile screenshot-based interaction, and disruptive execution. We present UFO2, a multiagent AgentOS for Windows desktops that elevates CUAs into practical, system-level automation. UFO2 features a centralized HostAgent for task decomposition and coordination, alongside a collection of application-specialized AppAgents equipped with native APIs, domain-specific knowledge, and a unified GUI-API action layer. This architecture enables robust task execution while preserving modularity and extensibility. A hybrid control detection pipeline fuses Windows UI Automation (UIA) with vision-based parsing to support diverse interface styles. Runtime efficiency is further enhanced through speculative multi-action planning, reducing per-step LLM overhead. Finally, a Picture-in-Picture (PiP) interface enables automation within an isolated virtual desktop, allowing agents and users to operate concurrently without interference. We evaluate UFO2 across over 20 real-world Windows applications, demonstrating substantial improvements in robustness and execution accuracy over prior CUAs. Our results show that deep OS integration unlocks a scalable path toward reliable, user-aligned desktop automation.

Degradation Alchemy: Self-Supervised Unknown-to-Known Transformation for Blind Hyperspectral Image Fusion

Mar 19, 2025

Abstract:Hyperspectral image (HSI) fusion is an efficient technique that combines low-resolution HSI (LR-HSI) and high-resolution multispectral images (HR-MSI) to generate high-resolution HSI (HR-HSI). Existing supervised learning methods (SLMs) can yield promising results when the degradation of the test data matches that of the training data, but they face challenges in generalizing to unknown degradations. To unleash the potential and generalization ability of SLMs, we propose a novel self-supervised unknown-to-known degradation transformation framework (U2K) for blind HSI fusion, which adaptively transforms unknown degradation into the same type of degradation as that handled by pre-trained SLMs. Specifically, the proposed U2K framework consists of: (1) spatial and spectral Degradation Wrapping (DW) modules that map HR-HSI to unknown degraded HR-MSI and LR-HSI, and (2) Degradation Transformation (DT) modules that convert these wrapped data into predefined degradation patterns. The transformed HR-MSI and LR-HSI pairs are then processed by a pre-trained network to reconstruct the target HR-HSI. We train the U2K framework in a self-supervised manner using consistency loss and greedy alternating optimization, significantly improving the flexibility of blind HSI fusion. Extensive experiments confirm the effectiveness of our proposed U2K framework in boosting the adaptability of five existing SLMs under various degradation settings and surpassing state-of-the-art blind methods.
