Yujun Guo

Building-Guided Pseudo-Label Learning for Cross-Modal Building Damage Mapping

May 08, 2025

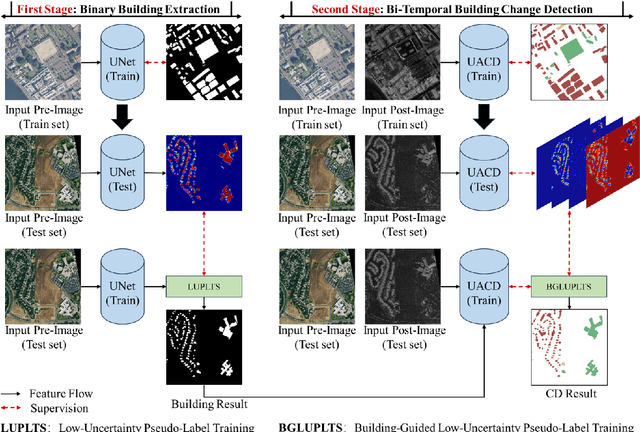

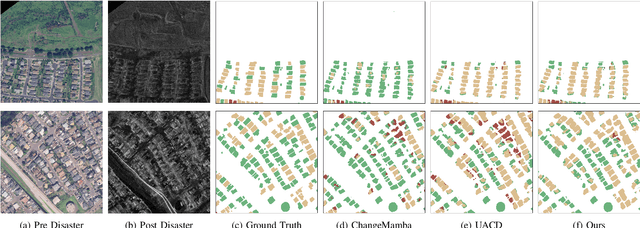

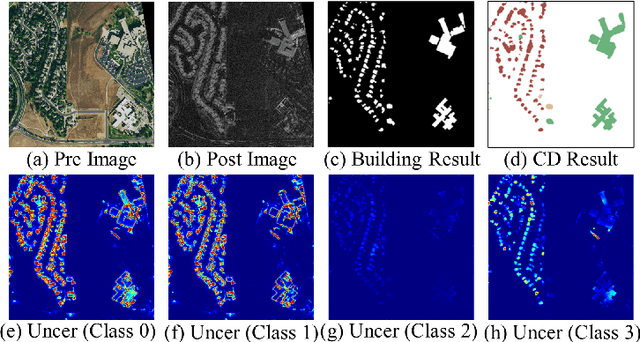

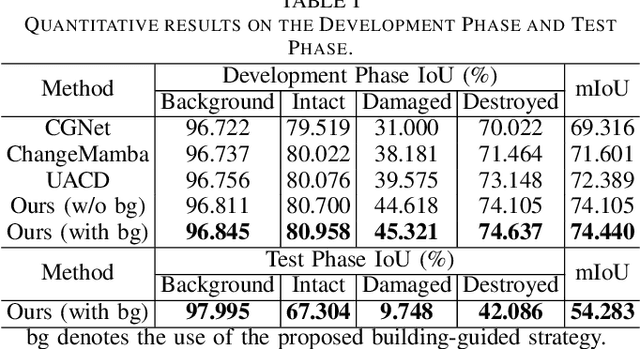

Abstract: Accurate building damage assessment using bi-temporal multi-modal remote sensing images is essential for effective disaster response and recovery planning. This study proposes a novel Building-Guided Pseudo-Label Learning Framework to address the challenges of mapping building damage from pre-disaster optical and post-disaster SAR images. First, we train a series of building extraction models using pre-disaster optical images and building labels. To enhance building segmentation, we employ multi-model fusion and test-time augmentation strategies to generate pseudo-probabilities, followed by a low-uncertainty pseudo-label training method for further refinement. Next, a change detection model is trained on bi-temporal cross-modal images and damaged building labels. To improve damage classification accuracy, we introduce a building-guided low-uncertainty pseudo-label refinement strategy, which leverages building priors from the previous step to guide pseudo-label generation for damaged buildings, reducing uncertainty and enhancing reliability. Experimental results on the 2025 IEEE GRSS Data Fusion Contest dataset demonstrate the effectiveness of our approach, which achieved the highest mIoU score (54.28%) and secured first place in the competition.
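The abstract does not give implementation details, so the following is only a minimal sketch of how building-guided, low-uncertainty pseudo-labels might be produced. Everything in it is an assumption made for illustration: the function names, the use of normalized entropy as the uncertainty measure, the `max_uncertainty` threshold, the `IGNORE` index, and the class layout (0 = background, 1 = intact, 2 = damaged). Pixels are kept as a flat list to keep the example dependency-free.

```python
import math

IGNORE = 255  # hypothetical index for pixels excluded from the training loss


def fuse(prob_maps):
    """Average per-pixel class probabilities over several models or TTA passes."""
    n = len(prob_maps)
    num_pixels = len(prob_maps[0])
    num_classes = len(prob_maps[0][0])
    return [
        [sum(pm[i][c] for pm in prob_maps) / n for c in range(num_classes)]
        for i in range(num_pixels)
    ]


def normalized_entropy(p):
    """Shannon entropy of a probability vector, scaled to the range [0, 1]."""
    h = -sum(q * math.log(q) for q in p if q > 0)
    return h / math.log(len(p))


def building_guided_pseudo_labels(damage_probs, building_mask, max_uncertainty=0.5):
    """Assign a pseudo-label per pixel, guided by a building prior.

    Assumed rules: pixels outside the building prior become background (0);
    building pixels with high entropy are ignored; the remaining building
    pixels take the most likely non-background class.
    """
    labels = []
    for p, is_building in zip(damage_probs, building_mask):
        if not is_building:
            labels.append(0)
        elif normalized_entropy(p) > max_uncertainty:
            labels.append(IGNORE)
        else:
            labels.append(max(range(1, len(p)), key=lambda c: p[c]))
    return labels


# Toy example: two models, three pixels, three classes.
model_a = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.3, 0.35, 0.35]]
model_b = [[0.7, 0.2, 0.1], [0.05, 0.95, 0.0], [0.4, 0.3, 0.3]]
fused = fuse([model_a, model_b])
labels = building_guided_pseudo_labels(fused, building_mask=[False, True, True])
print(labels)  # [0, 1, 255]
```

In this toy run the first pixel lies outside the building prior and is set to background, the second is a confident building pixel labeled intact, and the third is nearly uniform across classes and is therefore ignored.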

Degradation Alchemy: Self-Supervised Unknown-to-Known Transformation for Blind Hyperspectral Image Fusion

Mar 19, 2025

Abstract: Hyperspectral image (HSI) fusion is an efficient technique that combines low-resolution HSI (LR-HSI) and high-resolution multispectral images (HR-MSI) to generate high-resolution HSI (HR-HSI). Existing supervised learning methods (SLMs) can yield promising results when the degradation of the test data matches that of the training data, but they struggle to generalize to unknown degradations. To unleash the potential and generalization ability of SLMs, we propose a novel self-supervised unknown-to-known degradation transformation framework (U2K) for blind HSI fusion, which adaptively transforms unknown degradation into the same type of degradation as that handled by pre-trained SLMs. Specifically, the proposed U2K framework consists of: (1) spatial and spectral Degradation Wrapping (DW) modules that map HR-HSI to unknown degraded HR-MSI and LR-HSI, and (2) Degradation Transformation (DT) modules that convert these wrapped data into predefined degradation patterns. The transformed HR-MSI and LR-HSI pairs are then processed by a pre-trained network to reconstruct the target HR-HSI. We train the U2K framework in a self-supervised manner using consistency loss and greedy alternating optimization, significantly improving the flexibility of blind HSI fusion. Extensive experiments confirm the effectiveness of our proposed U2K framework in boosting the adaptability of five existing SLMs under various degradation settings and surpassing state-of-the-art blind methods.
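For orientation, the spatial and spectral degradations mentioned above are often written with a linear observation model in the HSI fusion literature. The abstract does not state the exact model used by U2K, so the form and all symbols below are assumptions for illustration only:

```latex
% X: target HR-HSI (bands x pixels), Y: observed LR-HSI, Z: observed HR-MSI
% B: spatial blur, S: spatial downsampling, R: spectral response of the MSI sensor
\mathbf{Y} = \mathbf{X}\,\mathbf{B}\,\mathbf{S}, \qquad
\mathbf{Z} = \mathbf{R}\,\mathbf{X}
```

Under this reading, the blind setting corresponds to the case where $\mathbf{B}$, $\mathbf{S}$, and $\mathbf{R}$ are unknown at test time and may differ from the degradations used to train the SLM.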
