Yiling Xu

TVMC: Time-Varying Mesh Compression via Multi-Stage Anchor Mesh Generation

Oct 26, 2025

Abstract:Time-varying meshes, characterized by dynamic connectivity and varying vertex counts, hold significant promise for applications such as augmented reality. However, their practical utilization remains challenging due to the substantial data volume required for high-fidelity representation. While various compression methods attempt to leverage temporal redundancy between consecutive mesh frames, most struggle with topological inconsistency and motion-induced artifacts. To address these issues, we propose Time-Varying Mesh Compression (TVMC), a novel framework built on multi-stage coarse-to-fine anchor mesh generation for inter-frame prediction. Specifically, the anchor mesh is progressively constructed in three stages: initial, coarse, and fine. The initial anchor mesh is obtained through fast topology alignment to exploit temporal coherence. A Kalman filter-based motion estimation module then generates a coarse anchor mesh by accurately compensating inter-frame motions. Subsequently, a Quadric Error Metric-based refinement step optimizes vertex positions to form a fine anchor mesh with improved geometric fidelity. Based on the refined anchor mesh, the inter-frame motions relative to the reference base mesh are encoded, while the residual displacements between the subdivided fine anchor mesh and the input mesh are adaptively quantized and compressed. This hierarchical strategy preserves consistent connectivity and high-quality surface approximation, while achieving an efficient and compact representation of dynamic geometry. Extensive experiments on standard MPEG dynamic mesh sequences demonstrate that TVMC achieves state-of-the-art compression performance. Compared to the latest V-DMC standard, it delivers a significant BD-rate gain of 10.2% to 16.9%, while preserving high reconstruction quality. The code is available at https://github.com/H-Huang774/TVMC.
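To make the Kalman filter-based motion estimation stage more concrete, here is a minimal sketch of a constant-velocity Kalman filter tracking one coordinate of one vertex across frames. It is an illustration only: the abstract does not give the state model, so the constant-velocity assumption, the function name `kalman_track`, and the noise parameters `q` and `r` are all hypothetical and are not taken from the TVMC code.

```python
import numpy as np

def kalman_track(observations, dt=1.0, q=1e-3, r=1e-2):
    """Track one vertex coordinate with a constant-velocity Kalman filter.

    observations: per-frame measured positions of the coordinate.
    q, r: assumed process and measurement noise variances (hypothetical values).
    Returns the filtered positions and velocities, one per frame.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])  # state transition for [position, velocity]
    H = np.array([[1.0, 0.0]])             # only the position is observed
    Q = q * np.eye(2)
    R = np.array([[r]])

    x = np.array([float(observations[0]), 0.0])  # start at first observation, zero velocity
    P = np.eye(2)
    positions, velocities = [], []
    for z in observations:
        # Predict the state for the current frame.
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct the prediction with the measured position.
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
        positions.append(float(x[0]))
        velocities.append(float(x[1]))
    return np.array(positions), np.array(velocities)
```

Under these assumptions, the filtered velocity serves as a per-vertex motion estimate that could be used to compensate inter-frame motion; a real mesh pipeline would run the filter over all three coordinates of every vertex.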

3DGS-VBench: A Comprehensive Video Quality Evaluation Benchmark for 3DGS Compression

Aug 09, 2025

Abstract:3D Gaussian Splatting (3DGS) enables real-time novel view synthesis with high visual fidelity, but its substantial storage requirements hinder practical deployment, prompting state-of-the-art (SOTA) 3DGS methods to incorporate compression modules. However, these 3DGS generative compression techniques introduce unique distortions that have not yet been studied systematically in quality assessment research. To this end, we establish 3DGS-VBench, a large-scale Video Quality Assessment (VQA) Dataset and Benchmark with 660 compressed 3DGS models and video sequences generated from 11 scenes across 6 SOTA 3DGS compression algorithms with systematically designed parameter levels. With annotations from 50 participants, we obtained MOS scores with outlier removal and validated dataset reliability. We benchmark 6 3DGS compression algorithms on storage efficiency and visual quality, and evaluate 15 quality assessment metrics across multiple paradigms. Our work enables specialized VQA model training for 3DGS, serving as a catalyst for compression and quality assessment research. The dataset is available at https://github.com/YukeXing/3DGS-VBench.
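As a rough illustration of how MOS values with outlier removal might be computed, the sketch below screens out participants whose ratings correlate poorly with the mean of the remaining participants, then averages the rest. The abstract does not state which screening rule was used, so the correlation-based rule, the threshold `min_corr`, and the function name `mos_with_screening` are assumptions made for this example.

```python
import numpy as np

def mos_with_screening(ratings, min_corr=0.7):
    """Compute MOS after dropping inconsistent participants.

    ratings: array of shape (n_participants, n_stimuli).
    min_corr: assumed minimum Pearson correlation with the other participants.
    Returns (mos, keep), where keep is a boolean mask over participants.
    """
    ratings = np.asarray(ratings, dtype=float)
    keep = []
    for s in range(ratings.shape[0]):
        # Mean rating of everyone except participant s.
        others = np.delete(ratings, s, axis=0).mean(axis=0)
        corr = np.corrcoef(ratings[s], others)[0, 1]
        keep.append(corr >= min_corr)
    keep = np.array(keep)
    # MOS is the per-stimulus mean over the retained participants.
    return ratings[keep].mean(axis=0), keep

# Four consistent participants and one who rates in reverse order.
ratings = [
    [1, 2, 3, 4, 5],
    [1, 2, 4, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
    [5, 4, 3, 2, 1],
]
mos, keep = mos_with_screening(ratings)
```

In this toy example the fifth participant is rejected and the MOS is the mean of the first four rows.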

3DGS-IEval-15K: A Large-scale Image Quality Evaluation Database for 3D Gaussian-Splatting

Jun 17, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as a promising approach for novel view synthesis, offering real-time rendering with high visual fidelity. However, its substantial storage requirements present significant challenges for practical applications. While recent state-of-the-art (SOTA) 3DGS methods increasingly incorporate dedicated compression modules, there is a lack of a comprehensive framework to evaluate their perceptual impact. Therefore we present 3DGS-IEval-15K, the first large-scale image quality assessment (IQA) dataset specifically designed for compressed 3DGS representations. Our dataset encompasses 15,200 images rendered from 10 real-world scenes through 6 representative 3DGS algorithms at 20 strategically selected viewpoints, with different compression levels leading to various distortion effects. Through controlled subjective experiments, we collect human perception data from 60 viewers. We validate dataset quality through scene diversity and MOS distribution analysis, and establish a comprehensive benchmark with 30 representative IQA metrics covering diverse types. As the largest-scale 3DGS quality assessment dataset to date, our work provides a foundation for developing 3DGS specialized IQA metrics, and offers essential data for investigating view-dependent quality distribution patterns unique to 3DGS. The database is publicly available at https://github.com/YukeXing/3DGS-IEval-15K.

Rasterizing Wireless Radiance Field via Deformable 2D Gaussian Splatting

Jun 15, 2025

Abstract:Modeling the wireless radiance field (WRF) is fundamental to modern communication systems, enabling key tasks such as localization, sensing, and channel estimation. Traditional approaches, which rely on empirical formulas or physical simulations, often suffer from limited accuracy or require strong scene priors. Recent neural radiance field (NeRF)-based methods improve reconstruction fidelity through differentiable volumetric rendering, but their reliance on computationally expensive multilayer perceptron (MLP) queries hinders real-time deployment. To overcome these challenges, we introduce Gaussian splatting (GS) to the wireless domain, leveraging its efficiency in modeling optical radiance fields to enable compact and accurate WRF reconstruction. Specifically, we propose SwiftWRF, a deformable 2D Gaussian splatting framework that synthesizes WRF spectra at arbitrary positions under single-sided transceiver mobility. SwiftWRF employs CUDA-accelerated rasterization to render spectra at over 100,000 fps and uses a lightweight MLP to model the deformation of 2D Gaussians, effectively capturing mobility-induced WRF variations. In addition to novel spectrum synthesis, the efficacy of SwiftWRF is further underscored by its applications in angle-of-arrival (AoA) and received signal strength indicator (RSSI) prediction. Experiments conducted on both real-world and synthetic indoor scenes demonstrate that SwiftWRF can reconstruct WRF spectra up to 500x faster than existing state-of-the-art methods, while significantly improving signal quality. Code and datasets will be released.

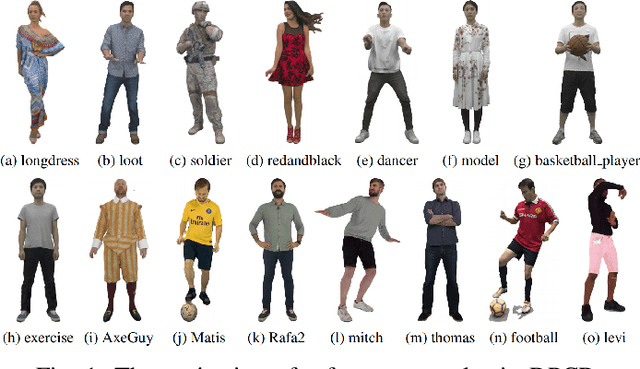

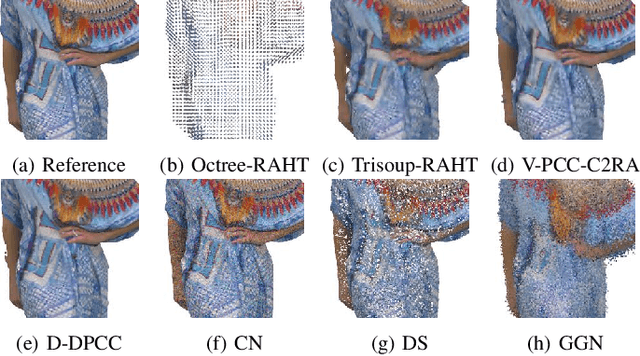

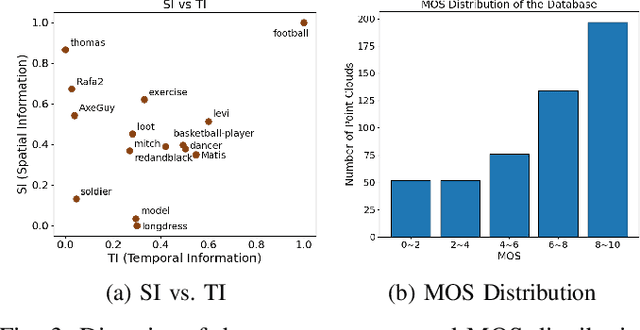

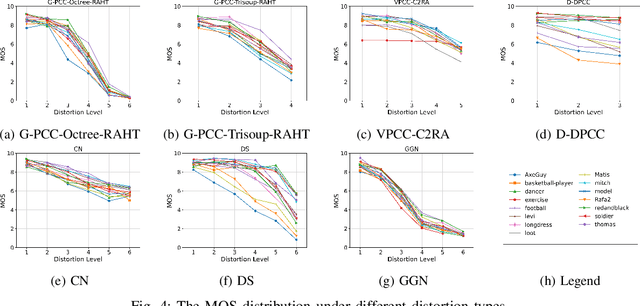

DPCD: A Quality Assessment Database for Dynamic Point Clouds

May 18, 2025

Abstract:Recent advancements in Virtual/Augmented Reality (VR/AR) have driven the demand for Dynamic Point Clouds (DPC). Unlike static point clouds, DPCs are capable of capturing temporal changes within objects or scenes, offering a more accurate simulation of the real world. While significant progress has been made in quality assessment research for static point clouds, little work has been done on Dynamic Point Cloud Quality Assessment (DPCQA), which hinders the development of quality-oriented applications, such as interframe compression and transmission in practical scenarios. In this paper, we introduce a large-scale DPCQA database, named DPCD, which includes 15 reference DPCs and 525 distorted DPCs from seven types of lossy compression and noise distortion. By rendering these samples to Processed Video Sequences (PVS), a comprehensive subjective experiment is conducted to obtain Mean Opinion Scores (MOS) from 21 viewers for analysis. The content characteristics, the impact of various distortions, and the accuracy of the MOSs are presented to validate the heterogeneity and reliability of the proposed database. Furthermore, we evaluate the performance of several objective metrics on DPCD. The experimental results show that DPCQA is more challenging than static point cloud quality assessment. The DPCD, which serves as a catalyst for new research endeavors on DPCQA, is publicly available at https://huggingface.co/datasets/Olivialyt/DPCD.

Textured mesh Quality Assessment using Geometry and Color Field Similarity

May 16, 2025

Abstract:Textured mesh quality assessment (TMQA) is critical for various 3D mesh applications. However, existing TMQA methods often struggle to provide accurate and robust evaluations. Motivated by the effectiveness of fields in representing both 3D geometry and color information, we propose a novel point-based TMQA method called field mesh quality metric (FMQM). FMQM utilizes signed distance fields and a newly proposed color field named nearest surface point color field to realize effective mesh feature description. Four features related to visual perception are extracted from the geometry and color fields: geometry similarity, geometry gradient similarity, space color distribution similarity, and space color gradient similarity. Experimental results on three benchmark datasets demonstrate that FMQM outperforms state-of-the-art (SOTA) TMQA metrics. Furthermore, FMQM exhibits low computational complexity, making it a practical and efficient solution for real-world applications in 3D graphics and visualization. Our code is publicly available at: https://github.com/yyyykf/FMQM.

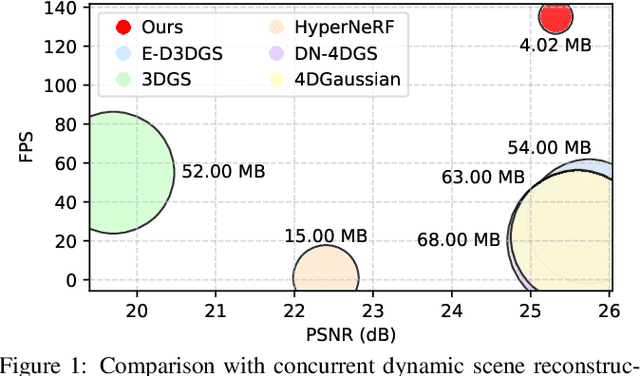

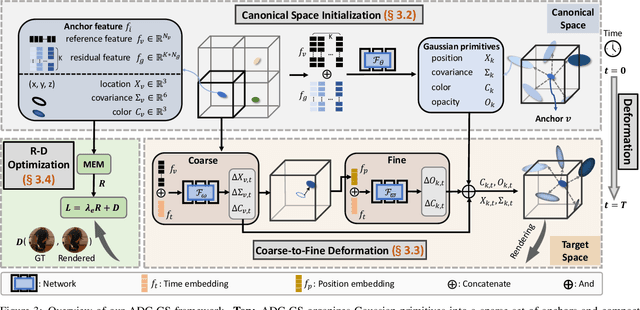

ADC-GS: Anchor-Driven Deformable and Compressed Gaussian Splatting for Dynamic Scene Reconstruction

May 13, 2025

Abstract:Existing 4D Gaussian Splatting methods rely on per-Gaussian deformation from a canonical space to target frames, which overlooks redundancy among adjacent Gaussian primitives and results in suboptimal performance. To address this limitation, we propose Anchor-Driven Deformable and Compressed Gaussian Splatting (ADC-GS), a compact and efficient representation for dynamic scene reconstruction. Specifically, ADC-GS organizes Gaussian primitives into an anchor-based structure within the canonical space, enhanced by a temporal significance-based anchor refinement strategy. To reduce deformation redundancy, ADC-GS introduces a hierarchical coarse-to-fine pipeline that captures motions at varying granularities. Moreover, a rate-distortion optimization is adopted to achieve an optimal balance between bitrate consumption and representation fidelity. Experimental results demonstrate that ADC-GS outperforms the per-Gaussian deformation approaches in rendering speed by 300%-800% while achieving state-of-the-art storage efficiency without compromising rendering quality. The code is released at https://github.com/H-Huang774/ADC-GS.git.

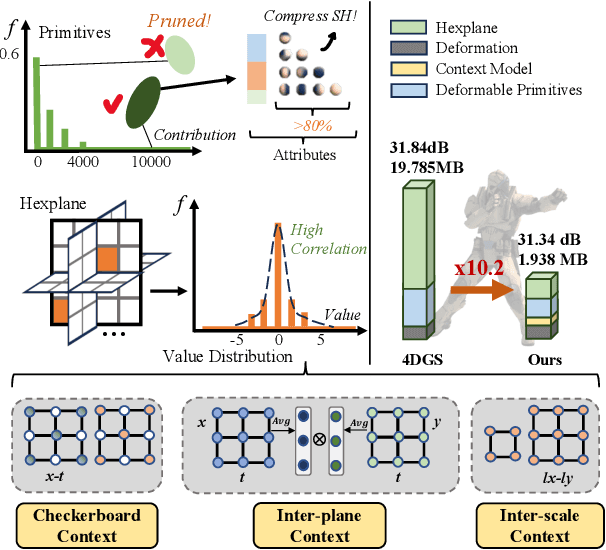

Light4GS: Lightweight Compact 4D Gaussian Splatting Generation via Context Model

Mar 18, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as an efficient and high-fidelity paradigm for novel view synthesis. To adapt 3DGS for dynamic content, deformable 3DGS incorporates temporally deformable primitives with learnable latent embeddings to capture complex motions. Despite its impressive performance, the high-dimensional embeddings and vast number of primitives lead to substantial storage requirements. In this paper, we introduce a Lightweight 4DGS framework, called Light4GS, that employs significance pruning with a deep context model to provide a lightweight, storage-efficient dynamic 3DGS representation. The proposed Light4GS is based on 4DGS, a typical representation of deformable 3DGS. Specifically, our framework is built upon two core components: (1) a spatio-temporal significance pruning strategy that eliminates over 64% of the deformable primitives, followed by an entropy-constrained spherical harmonics compression applied to the remainder; and (2) a deep context model that integrates intra- and inter-prediction with hyperprior into a coarse-to-fine context structure to enable efficient multiscale latent embedding compression. Our approach achieves over 120x compression and increases rendering FPS by up to 20% compared to the baseline 4DGS. It is also superior to frame-wise state-of-the-art 3DGS compression methods, revealing the effectiveness of Light4GS in terms of both intra- and inter-prediction methods without sacrificing rendering quality.

D2GV: Deformable 2D Gaussian Splatting for Video Representation in 400FPS

Mar 07, 2025

Abstract:Implicit Neural Representations (INRs) have emerged as a powerful approach for video representation, offering versatility across tasks such as compression and inpainting. However, their implicit formulation limits both interpretability and efficacy, undermining their practicality as a comprehensive solution. We propose a novel video representation based on deformable 2D Gaussian splatting, dubbed D2GV, which aims to achieve three key objectives: 1) improved efficiency while delivering superior quality; 2) enhanced scalability and interpretability; and 3) increased friendliness for downstream tasks. Specifically, we initially divide the video sequence into fixed-length Groups of Pictures (GoP) to allow parallel training and linear scalability with video length. For each GoP, D2GV represents video frames by applying differentiable rasterization to 2D Gaussians, which are deformed from a canonical space into their corresponding timestamps. Notably, leveraging efficient CUDA-based rasterization, D2GV converges fast and decodes at speeds exceeding 400 FPS, while delivering quality that matches or surpasses state-of-the-art INRs. Moreover, we incorporate a learnable pruning and quantization strategy to streamline D2GV into a more compact representation. We demonstrate D2GV's versatility in tasks including video interpolation, inpainting and denoising, underscoring its potential as a promising solution for video representation. Code is available at: https://github.com/Evan-sudo/D2GV.
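The fixed-length GoP division described in this abstract can be sketched in a few lines. This is only an illustration: the helper name `split_into_gops` is hypothetical, and letting the final group be shorter when the frame count is not a multiple of the GoP size is an assumption, since the abstract does not say how the last group is handled.

```python
def split_into_gops(num_frames, gop_size):
    """Split frame indices 0..num_frames-1 into fixed-length groups.

    Returns a list of (start, end) pairs with end exclusive.
    The last group may be shorter (an assumption of this sketch).
    """
    if gop_size <= 0:
        raise ValueError("gop_size must be positive")
    return [
        (start, min(start + gop_size, num_frames))
        for start in range(0, num_frames, gop_size)
    ]

# Each (start, end) pair could be trained independently, which is what
# would allow parallel training and cost that grows linearly with length.
gops = split_into_gops(num_frames=10, gop_size=4)
```

Because the groups do not overlap, the number of groups, and hence the total training work, grows linearly with the number of frames.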

Differentiable Low-computation Global Correlation Loss for Monotonicity Evaluation in Quality Assessment

Jan 26, 2025

Abstract:In this paper, we propose a global monotonicity consistency training strategy for quality assessment, which includes a differentiable, low-computation monotonicity evaluation loss function and a global perception training mechanism. Specifically, unlike conventional ranking loss and linear programming approaches that indirectly implement the Spearman rank-order correlation coefficient (SROCC) function, our method directly converts SROCC into a loss function by making the sorting operation within SROCC differentiable and functional. Furthermore, to mitigate the discrepancies between batch optimization during network training and global evaluation of SROCC, we introduce a memory bank mechanism. This mechanism stores gradient-free predicted results from previous batches and uses them in the current batch's training to prevent abrupt gradient changes. We evaluate the performance of the proposed method on both image and point cloud quality assessment tasks, demonstrating performance gains in both cases.
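One common way to make the sorting step inside SROCC differentiable is to replace hard ranks with soft ranks built from pairwise sigmoids. The sketch below shows that idea in NumPy (forward computation only). It is not the paper's formulation, which the abstract does not spell out: the pairwise-sigmoid relaxation, the temperature `tau`, and the function names are assumptions chosen for illustration, and the memory bank mechanism is not modeled.

```python
import numpy as np

def soft_rank(x, tau=0.01):
    """Smooth approximation of 1-based ascending ranks.

    Each pairwise comparison x_i > x_j is relaxed to a sigmoid, so the
    result is a smooth function of x. Smaller tau gives ranks closer to
    the hard ranks.
    """
    x = np.asarray(x, dtype=float)
    diff = (x[:, None] - x[None, :]) / tau
    sig = 0.5 * (1.0 + np.tanh(0.5 * diff))  # numerically stable sigmoid
    # The diagonal contributes 0.5, so adding 0.5 yields ranks starting at 1.
    return 0.5 + sig.sum(axis=1)

def pearson(a, b):
    """Pearson correlation with a small epsilon for numerical safety."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / (np.sqrt((a * a).sum() * (b * b).sum()) + 1e-12))

def srocc_loss(pred, target, tau=0.01):
    """1 - soft SROCC: near 0 for monotonically consistent predictions."""
    return 1.0 - pearson(soft_rank(pred, tau), soft_rank(target, tau))

loss_good = srocc_loss([0.1, 0.4, 0.35, 0.8], [1, 3, 2, 4])     # same ordering
loss_bad = srocc_loss([-0.1, -0.4, -0.35, -0.8], [1, 3, 2, 4])  # reversed ordering
```

In an autodiff framework the same expressions would yield gradients with respect to the predictions; the pairwise comparison costs O(n^2) per batch, so this sketch does not claim the low-computation property described in the abstract.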
