Gianmarco Mengaldo

Enhancing Language Models for Robust Greenwashing Detection

Jan 29, 2026

Abstract: Sustainability reports are critical for ESG assessment, yet greenwashing and vague claims often undermine their reliability. Existing NLP models lack robustness to these practices, typically relying on surface-level patterns that generalize poorly. We propose a parameter-efficient framework that structures LLM latent spaces by combining contrastive learning with an ordinal ranking objective to capture graded distinctions between concrete actions and ambiguous claims. Our approach incorporates gated feature modulation to filter disclosure noise and utilizes MetaGradNorm to stabilize multi-objective optimization. Experiments in cross-category settings demonstrate superior robustness over standard baselines while revealing a trade-off between representational rigidity and generalization.

eXIAA: eXplainable Injections for Adversarial Attack

Nov 13, 2025

Abstract: Post-hoc explainability methods are a subset of Machine Learning (ML) that aim to provide a reason for why a model behaves in a certain way. In this paper, we show a new black-box model-agnostic adversarial attack for post-hoc explainable Artificial Intelligence (XAI), particularly in the image domain. The goal of the attack is to modify the original explanations while remaining undetectable to the human eye and maintaining the same predicted class. In contrast to previous methods, we do not require any access to the model or its weights, but only to the model's computed predictions and explanations. Additionally, the attack is accomplished in a single step while significantly changing the provided explanations, as demonstrated by empirical evaluation. The low requirements of our method expose a critical vulnerability in current explainability methods, raising concerns about their reliability in safety-critical applications. We systematically generate attacks based on the explanations generated by post-hoc explainability methods (saliency maps, integrated gradients, and DeepLIFT SHAP) for pretrained ResNet-18 and ViT-B16 on ImageNet. The results show that our attacks could lead to dramatically different explanations without changing the predictive probabilities. We validate the effectiveness of our attack, compute the induced change in the explanation with the mean absolute difference, and verify the closeness of the original image and the corrupted one with the Structural Similarity Index Measure (SSIM).
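As a rough illustration of the evaluation described in this abstract, the sketch below computes the mean absolute difference between two explanation maps and checks that the predicted class is unchanged. It is not the authors' code: the function names, the nested-list representation of an explanation map, and the toy values are assumptions made here for illustration, and the SSIM check on the images is left out.

```python
from typing import List, Sequence


def mean_absolute_difference(expl_a: List[List[float]],
                             expl_b: List[List[float]]) -> float:
    """Mean absolute difference between two explanation maps.

    Both maps are assumed (for this sketch) to be 2D nested lists
    with the same number of entries.
    """
    flat_a = [v for row in expl_a for v in row]
    flat_b = [v for row in expl_b for v in row]
    if len(flat_a) != len(flat_b) or not flat_a:
        raise ValueError("explanation maps must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(flat_a, flat_b)) / len(flat_a)


def same_predicted_class(probs_a: Sequence[float],
                         probs_b: Sequence[float]) -> bool:
    """True if both probability vectors have their maximum at the same index."""
    top_a = max(range(len(probs_a)), key=lambda i: probs_a[i])
    top_b = max(range(len(probs_b)), key=lambda i: probs_b[i])
    return top_a == top_b


if __name__ == "__main__":
    # Hypothetical explanation maps before and after an attack.
    original_map = [[0.0, 1.0], [1.0, 0.0]]
    attacked_map = [[1.0, 1.0], [0.0, 0.0]]
    print(mean_absolute_difference(original_map, attacked_map))
    # Hypothetical class probabilities before and after the attack.
    print(same_predicted_class([0.1, 0.7, 0.2], [0.15, 0.65, 0.2]))
```

A successful attack in the abstract's sense would show a large difference between the maps while the predicted class stays the same.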

Human Behavior Atlas: Benchmarking Unified Psychological and Social Behavior Understanding

Oct 06, 2025

Abstract: Using intelligent systems to perceive psychological and social behaviors, that is, the underlying affective, cognitive, and pathological states that are manifested through observable behaviors and social interactions, remains a challenge due to their complex, multifaceted, and personalized nature. Existing work tackling these dimensions through specialized datasets and single-task systems often misses opportunities for scalability, cross-task transfer, and broader generalization. To address this gap, we curate Human Behavior Atlas, a unified benchmark of diverse behavioral tasks designed to support the development of unified models for understanding psychological and social behaviors. Human Behavior Atlas comprises over 100,000 samples spanning text, audio, and visual modalities, covering tasks on affective states, cognitive states, pathologies, and social processes. Our unification efforts can reduce redundancy and cost, enable training to scale efficiently across tasks, and enhance generalization of behavioral features across domains. On Human Behavior Atlas, we train three models: OmniSapiens-7B SFT, OmniSapiens-7B BAM, and OmniSapiens-7B RL. We show that training on Human Behavior Atlas enables models to consistently outperform existing multimodal LLMs across diverse behavioral tasks. Pretraining on Human Behavior Atlas also improves transfer to novel behavioral datasets, with the targeted use of behavioral descriptors yielding meaningful performance gains.

Deriving Strategic Market Insights with Large Language Models: A Benchmark for Forward Counterfactual Generation

May 26, 2025

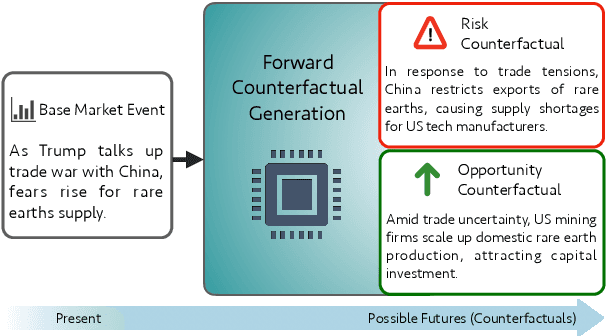

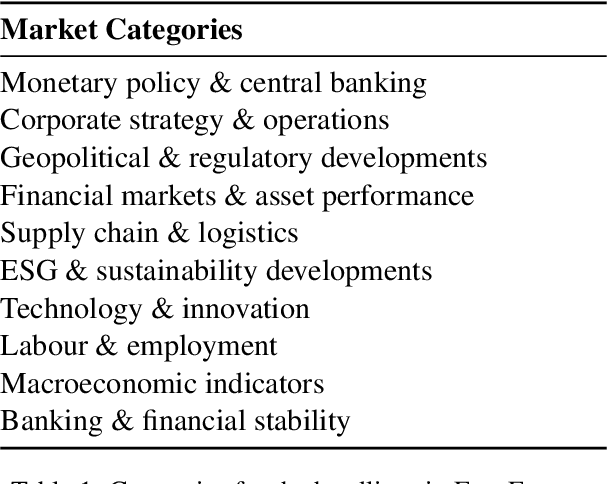

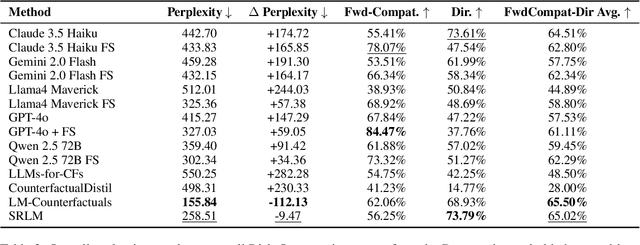

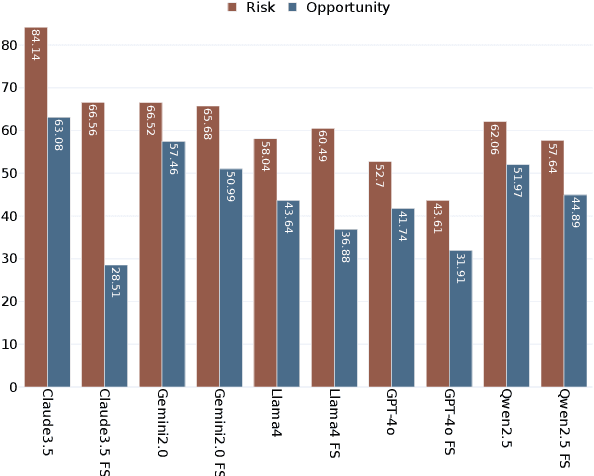

Abstract: Counterfactual reasoning typically involves considering alternatives to actual events. While often applied to understand past events, a distinct form, forward counterfactual reasoning, focuses on anticipating plausible future developments. This type of reasoning is invaluable in dynamic financial markets, where anticipating market developments can reveal potential risks and opportunities for stakeholders, guiding their decision-making. However, performing this at scale is challenging due to the cognitive demands involved, underscoring the need for automated solutions. Large Language Models (LLMs) offer promise, but remain unexplored for this application. To address this gap, we introduce a novel benchmark, Fin-Force (FINancial FORward Counterfactual Evaluation). By curating financial news headlines and providing structured evaluation, Fin-Force supports LLM-based forward counterfactual generation. This paves the way for scalable and automated solutions for exploring and anticipating future market developments, thereby providing structured insights for decision-making. Through experiments on Fin-Force, we evaluate state-of-the-art LLMs and counterfactual generation methods, analyzing their limitations and proposing insights for future research.

ClimaEmpact: Domain-Aligned Small Language Models and Datasets for Extreme Weather Analytics

Apr 27, 2025

Abstract: Accurate assessments of extreme weather events are vital for research and policy, yet localized and granular data remain scarce in many parts of the world. This data gap limits our ability to analyze potential outcomes and implications of extreme weather events, hindering effective decision-making. Large Language Models (LLMs) can process vast amounts of unstructured text data, extract meaningful insights, and generate detailed assessments by synthesizing information from multiple sources. Furthermore, LLMs can seamlessly transfer their general language understanding to smaller models, enabling these models to retain key knowledge while being fine-tuned for specific tasks. In this paper, we propose Extreme Weather Reasoning-Aware Alignment (EWRA), a method that enhances small language models (SLMs) by incorporating structured reasoning paths derived from LLMs, and ExtremeWeatherNews, a large dataset of extreme weather event-related news articles. EWRA and ExtremeWeatherNews together form the overall framework, ClimaEmpact, which focuses on three critical extreme-weather tasks: categorization of tangible vulnerabilities/impacts, topic labeling, and emotion analysis. By aligning SLMs with advanced reasoning strategies on ExtremeWeatherNews (and its derived dataset ExtremeAlign, used specifically for SLM alignment), EWRA improves the SLMs' ability to generate well-grounded and domain-specific responses for extreme weather analytics. Our results show that the proposed approach guides SLMs to output domain-aligned responses, surpassing the performance of task-specific models and offering enhanced real-world applicability for extreme weather analytics.

Dynamical errors in machine learning forecasts

Apr 16, 2025

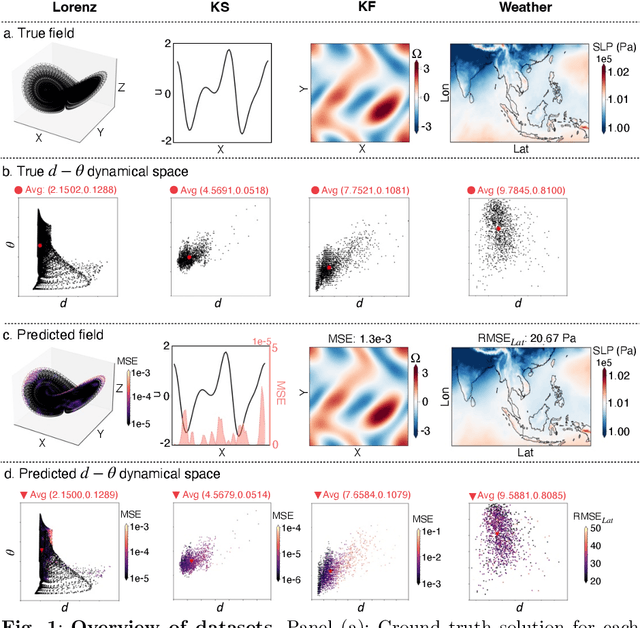

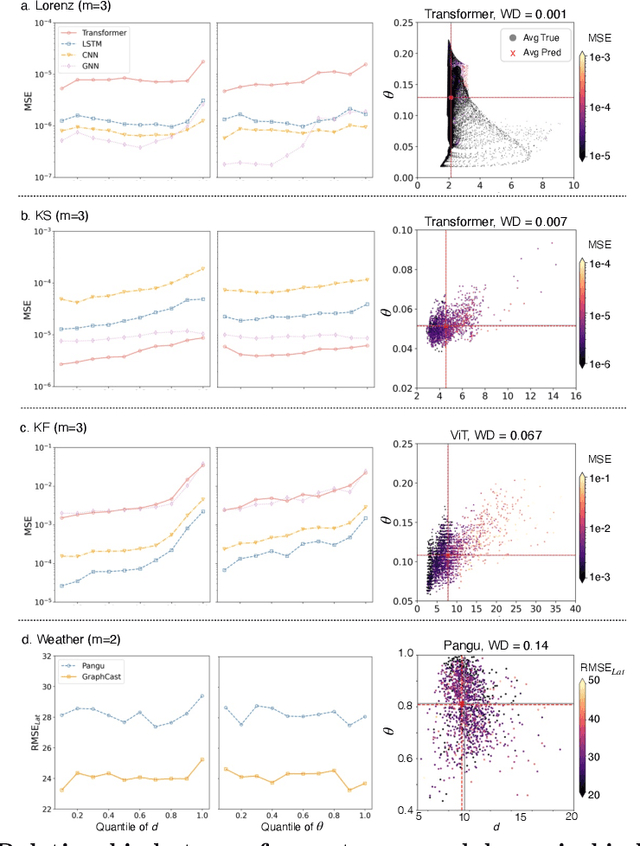

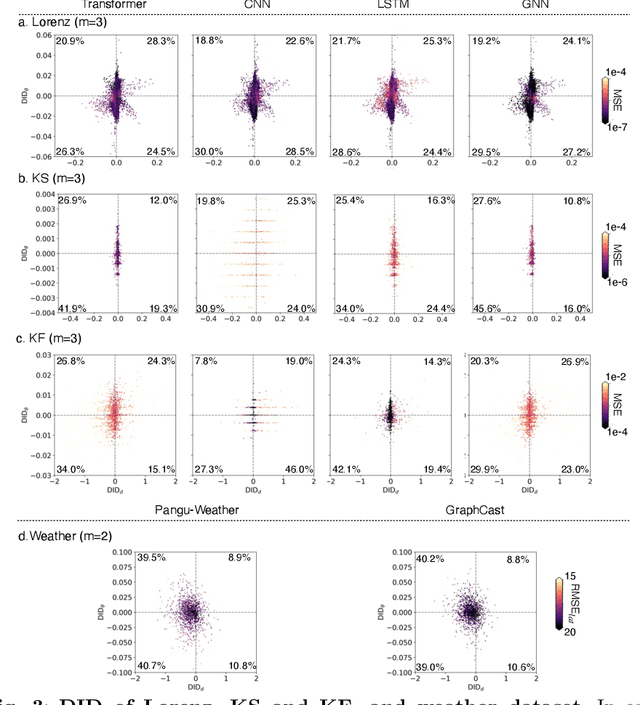

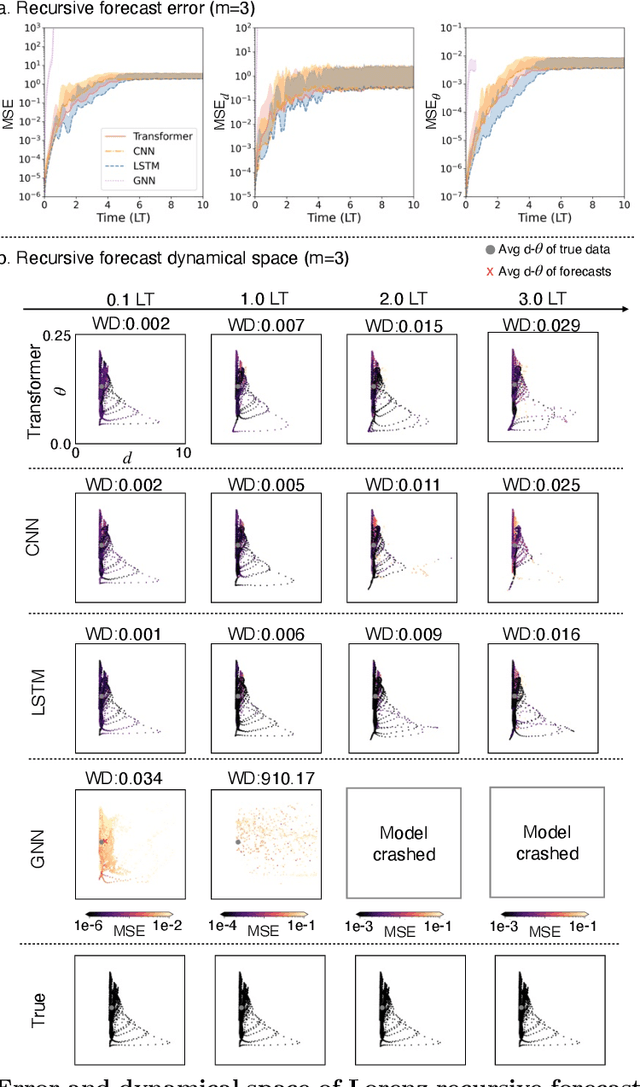

Abstract: In machine learning forecasting, standard error metrics such as mean absolute error (MAE) and mean squared error (MSE) quantify discrepancies between predictions and target values. However, these metrics do not directly evaluate the physical and/or dynamical consistency of forecasts, an increasingly critical concern in scientific and engineering applications. Indeed, a fundamental yet often overlooked question is whether machine learning forecasts preserve the dynamical behavior of the underlying system. Addressing this issue is essential for assessing the fidelity of machine learning models and identifying potential failure modes, particularly in applications where maintaining correct dynamical behavior is crucial. In this work, we investigate the relationship between standard forecasting error metrics, such as MAE and MSE, and the dynamical properties of the underlying system. To achieve this goal, we use two recently developed dynamical indices: the instantaneous dimension ($d$), and the inverse persistence ($\theta$). Our results indicate that larger forecast errors -- e.g., higher MSE -- tend to occur in states with higher $d$ (higher complexity) and higher $\theta$ (lower persistence). To further assess dynamical consistency, we propose error metrics based on the dynamical indices that measure the discrepancy of the forecasted $d$ and $\theta$ versus their correct values. Leveraging these dynamical indices-based metrics, we analyze direct and recursive forecasting strategies for three canonical datasets -- Lorenz, Kuramoto-Sivashinsky equation, and Kolmogorov flow -- as well as a real-world weather forecasting task. Our findings reveal substantial distortions in dynamical properties in ML forecasts, especially for long forecast lead times or long recursive simulations, providing complementary information on ML forecast fidelity that can be used to improve ML models.
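To make the standard metrics and the two forecasting strategies named in this abstract concrete, here is a minimal sketch on scalar time series. It is an illustration only: the helper names, the toy one-step model, and the per-lead-time models are hypothetical, and the dynamical indices $d$ and $\theta$ are not computed here.

```python
from typing import Callable, List, Sequence


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error between predictions and targets."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def recursive_forecast(step_model: Callable[[float], float],
                       x0: float, n_steps: int) -> List[float]:
    """Recursive strategy: feed each one-step prediction back as the next input."""
    preds = []
    x = x0
    for _ in range(n_steps):
        x = step_model(x)
        preds.append(x)
    return preds


def direct_forecast(lead_models: Sequence[Callable[[float], float]],
                    x0: float) -> List[float]:
    """Direct strategy: one separate model per lead time, each applied to x0."""
    return [model(x0) for model in lead_models]


if __name__ == "__main__":
    # Hypothetical one-step model that halves the state at each step.
    rollout = recursive_forecast(lambda x: 0.5 * x, 8.0, 3)
    targets = [4.0, 2.0, 2.0]  # made-up reference values
    print(rollout, mae(rollout, targets), mse(rollout, targets))
```

In the recursive strategy, errors made at early steps are fed back into later steps, which is one reason long rollouts are singled out in the abstract.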

XAI4Extremes: An interpretable machine learning framework for understanding extreme-weather precursors under climate change

Mar 11, 2025

Abstract: Extreme weather events are increasing in frequency and intensity due to climate change. This, in turn, is exacting a significant toll on communities worldwide. While prediction skill is increasing with advances in numerical weather prediction and artificial intelligence tools, extreme weather still presents challenges. More specifically, the precursors of such extreme weather events, and how these precursors may evolve under climate change, remain unclear. In this paper, we propose to use post-hoc interpretability methods to construct relevance weather maps that show the key extreme-weather precursors identified by deep learning models. We then compare this machine view with existing domain knowledge to understand whether deep learning models identify patterns in data that may enrich our understanding of extreme-weather precursors. We finally bin these relevance maps into different multi-year time periods to understand the role that climate change is having on these precursors. The experiments are carried out on Indochina heatwaves, but the methodology can be readily extended to other extreme weather events worldwide.

Tell me why: Visual foundation models as self-explainable classifiers

Feb 26, 2025

Abstract: Visual foundation models (VFMs) have become increasingly popular due to their state-of-the-art performance. However, interpretability remains crucial for critical applications. In this sense, self-explainable models (SEMs) aim to provide interpretable classifiers that decompose predictions into a weighted sum of interpretable concepts. Despite their promise, recent studies have shown that these explanations often lack faithfulness. In this work, we combine VFMs with a novel prototypical architecture and specialized training objectives. By training only a lightweight head (approximately 1M parameters) on top of frozen VFMs, our approach (ProtoFM) offers an efficient and interpretable solution. Evaluations demonstrate that our approach achieves competitive classification performance while outperforming existing models across a range of interpretability metrics derived from the literature. Code is available at https://github.com/hturbe/proto-fm.
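The phrase "a weighted sum of interpretable concepts" can be sketched as follows. This is a generic illustration of the self-explainable-model idea, not the ProtoFM architecture: the function names, the concept scores, and the weights are all hypothetical.

```python
from typing import List, Sequence


def concept_contributions(concept_scores: Sequence[float],
                          class_weights: Sequence[float]) -> List[float]:
    """Per-concept contribution to one class: weight times concept score."""
    if len(concept_scores) != len(class_weights):
        raise ValueError("one weight is needed per concept score")
    return [w * s for w, s in zip(class_weights, concept_scores)]


def class_logit(concept_scores: Sequence[float],
                class_weights: Sequence[float]) -> float:
    """Class logit as the sum of the per-concept contributions."""
    return sum(concept_contributions(concept_scores, class_weights))


if __name__ == "__main__":
    # Hypothetical activations for two concepts and their weights for one class.
    scores = [0.5, 1.0]
    weights = [2.0, -1.0]
    print(concept_contributions(scores, weights))
    print(class_logit(scores, weights))
```

Because the logit is exactly the sum of the listed contributions, each term can be read as how much a concept pushed the prediction toward or away from the class.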

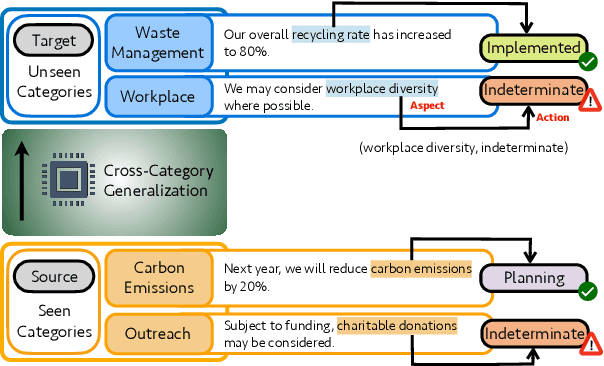

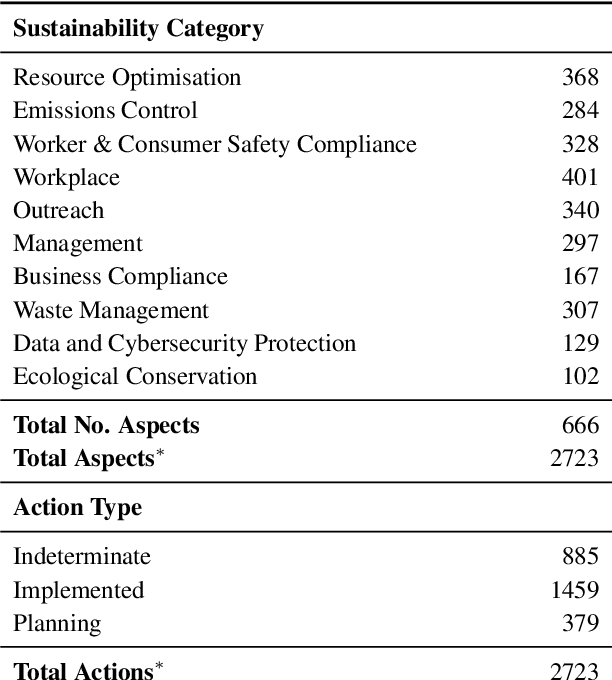

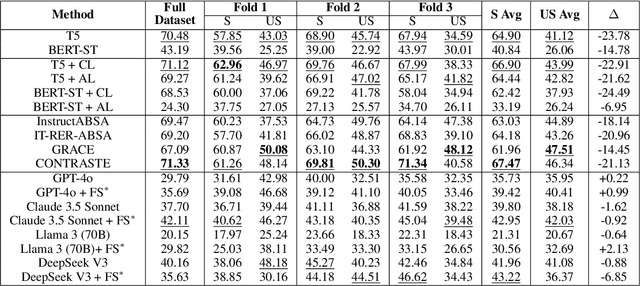

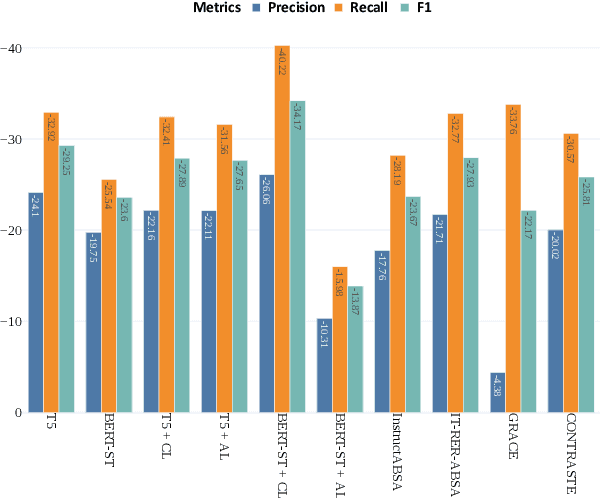

Towards Robust ESG Analysis Against Greenwashing Risks: Aspect-Action Analysis with Cross-Category Generalization

Feb 20, 2025

Abstract: Sustainability reports are key for evaluating companies' environmental, social, and governance (ESG) performance, but their content is increasingly obscured by greenwashing: sustainability claims that are misleading, exaggerated, and fabricated. Yet, existing NLP approaches for ESG analysis lack robustness against greenwashing risks, often extracting insights that reflect misleading or exaggerated sustainability claims rather than objective ESG performance. To bridge this gap, we introduce A3CG (Aspect-Action Analysis with Cross-Category Generalization), a novel dataset to improve the robustness of ESG analysis amid the prevalence of greenwashing. By explicitly linking sustainability aspects with their associated actions, A3CG facilitates a more fine-grained and transparent evaluation of sustainability claims, ensuring that insights are grounded in verifiable actions rather than vague or misleading rhetoric. Additionally, A3CG emphasizes cross-category generalization. This ensures robust model performance in aspect-action analysis even when companies change their reports to selectively favor certain sustainability areas. Through experiments on A3CG, we analyze state-of-the-art supervised models and LLMs, uncovering their limitations and outlining key directions for future research.

CondensNet: Enabling stable long-term climate simulations via hybrid deep learning models with adaptive physical constraints

Feb 18, 2025

Abstract: Accurate and efficient climate simulations are crucial for understanding Earth's evolving climate. However, current general circulation models (GCMs) face challenges in capturing unresolved physical processes, such as clouds and convection. A common solution is to adopt cloud-resolving models, which provide more accurate results than the standard subgrid parameterization schemes typically used in GCMs. However, cloud-resolving models, also referred to as super-parameterizations, remain computationally prohibitive. Hybrid modeling, which integrates deep learning with equation-based GCMs, offers a promising alternative but often struggles with long-term stability and accuracy issues. In this work, we find that water vapor oversaturation during condensation is a key factor compromising the stability of hybrid models. To address this, we introduce CondensNet, a novel neural network architecture that embeds a self-adaptive physical constraint to correct unphysical condensation processes. CondensNet effectively mitigates water vapor oversaturation, enhancing simulation stability while maintaining accuracy and improving computational efficiency compared to super-parameterization schemes. We integrate CondensNet into a GCM to form PCNN-GCM (Physics-Constrained Neural Network GCM), a hybrid deep learning framework designed for long-term stable climate simulations in real-world conditions, including ocean and land. PCNN-GCM represents a significant milestone in hybrid climate modeling, as it shows a novel way to incorporate physical constraints adaptively, paving the way for accurate, lightweight, and stable long-term climate simulations.
